To work through these examples, we first need to introduce StructType(), StructField() and ArrayType(), which are used to define the columns of a PySpark DataFrame. With them, we can specify the column names and the data type of each column.

StructType()

StructType() is used to define the structure of the PySpark DataFrame. It accepts a list of StructField() objects, each of which gives a column name and its data type. This collection of fields is known as the schema of the DataFrame.

StructField()

StructField() is used inside StructType(). It accepts a column name along with the data type of that column.

ArrayType()

ArrayType() is used to define an array column in the PySpark DataFrame. It accepts the data type of the array's elements, such as StringType(), so every value stored in the array has the same type.

In this article, we create a DataFrame with an array column. The DataFrame has 2 columns. The first column, Student_category, is an integer field that stores student ids. The second column, Student_full_name, stores string values in an array created using ArrayType().

import pyspark

#import SparkSession for creating a session

from pyspark.sql import SparkSession

#and import struct types and other data types

from pyspark.sql.types import StructType,StructField,StringType,IntegerType,FloatType,ArrayType

from pyspark.sql.functions import array_contains

#create an app named linuxhint

spark_app = SparkSession.builder.appName('linuxhint').getOrCreate()

# consider 5 rows, each with an id and an array of strings

my_array_data = [(1, ['A']), (2, ['B','L','B']), (3, ['K','A','K']),(4, ['K']), (3, ['B','P'])]

#define the StructType and StructFields

#for the above data

schema = StructType([StructField("Student_category", IntegerType()),StructField("Student_full_name", ArrayType(StringType()))])

#create the dataframe and add schema to the dataframe

df = spark_app.createDataFrame(my_array_data, schema=schema)

df.show()

Output:

array_remove()

array_remove() removes every occurrence of a particular value from the array in each row of an array type column. It takes two parameters.

Syntax:

array_remove(array_column, 'value')

Parameters:

- array_column is the array type column that holds the arrays

- value is the value to be removed from each array

array_remove() is used with the select() method to apply it to the DataFrame.

Example:

In this example, we will remove:

- ‘A’ from the Student_full_name column

- ‘P’ from the Student_full_name column

- ‘K’ from the Student_full_name column

import pyspark

#import SparkSession for creating a session

from pyspark.sql import SparkSession

#and import struct types and other data types

from pyspark.sql.types import StructType,StructField,StringType,IntegerType,FloatType,ArrayType

from pyspark.sql.functions import array_remove

#create an app named linuxhint

spark_app = SparkSession.builder.appName('linuxhint').getOrCreate()

# consider 5 rows, each with an id and an array of strings

my_array_data = [(1, ['A']), (2, ['B','L','B']), (3, ['K','A','K']),(4, ['K']), (3, ['B','P'])]

#define the StructType and StructFields

#for the above data

schema = StructType([StructField("Student_category", IntegerType()),StructField("Student_full_name", ArrayType(StringType()))])

#create the dataframe and add schema to the dataframe

df = spark_app.createDataFrame(my_array_data, schema=schema)

# display the dataframe by removing 'A' value

df.select("Student_full_name",array_remove('Student_full_name','A')).show()

# display the dataframe by removing 'P' value

df.select("Student_full_name",array_remove('Student_full_name','P')).show()

# display the dataframe by removing 'K' value

df.select("Student_full_name",array_remove('Student_full_name','K')).show()

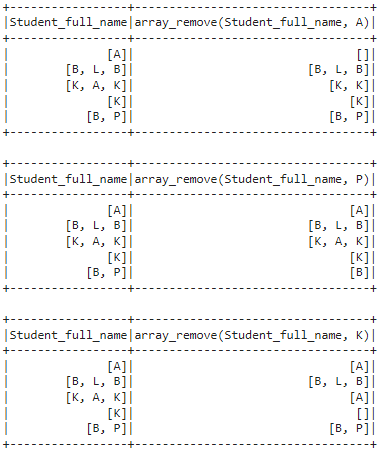

Output:

In the first output, we can see in second columns:

A,P and K values are removed.
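Since the output is shown only as a screenshot, it can help to work out the expected arrays by hand. The following is a minimal plain-Python sketch of what array_remove() does to each row. It is a model of the behaviour, not Spark's implementation: the helper name remove_value is hypothetical, and null arrays are not considered.

```python
# Plain-Python model of array_remove(): drop every element equal to `value`.
# `remove_value` is a hypothetical helper used only for illustration.
def remove_value(array, value):
    return [item for item in array if item != value]

# The same arrays as the Student_full_name column in the example.
student_full_names = [['A'], ['B', 'L', 'B'], ['K', 'A', 'K'], ['K'], ['B', 'P']]

# Show the arrays left after removing 'A', then 'P', then 'K'.
for value in ('A', 'P', 'K'):
    remaining = [remove_value(names, value) for names in student_full_names]
    print(value, remaining)
```

Every occurrence of the value is removed, so ['K', 'A', 'K'] becomes ['A'], and a row whose only element matches ends up as an empty array.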

size()

size() returns the number of values present in the array in each row of a DataFrame. It takes one parameter.

Syntax:

size(array_column)

Parameter:

array_column refers to the array type column

Example:

Get the count of values in an array in the Student_full_name column.

import pyspark

#import SparkSession for creating a session

from pyspark.sql import SparkSession

#and import struct types and other data types

from pyspark.sql.types import StructType,StructField,StringType,IntegerType,FloatType,ArrayType

from pyspark.sql.functions import size

#create an app named linuxhint

spark_app = SparkSession.builder.appName('linuxhint').getOrCreate()

# consider 5 rows, each with an id and an array of strings

my_array_data = [(1, ['A']), (2, ['B','L','B']), (3, ['K','A','K']),(4, ['K']), (3, ['B','P'])]

#define the StructType and StructFields

#for the above data

schema = StructType([StructField("Student_category", IntegerType()),StructField("Student_full_name", ArrayType(StringType()))])

#create the dataframe and add schema to the dataframe

df = spark_app.createDataFrame(my_array_data, schema=schema)

# get the size of array values in all rows in Student_full_name column

df.select("Student_full_name",size('Student_full_name')).show()

Output:

We can see that the total number of values in the array is returned for each row.
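As a quick cross-check of the counts, here is a plain-Python sketch that computes the same lengths for the example data. It only models what size() returns for non-null arrays and does not use Spark.

```python
# The same arrays as the Student_full_name column in the example.
student_full_names = [['A'], ['B', 'L', 'B'], ['K', 'A', 'K'], ['K'], ['B', 'P']]

# Plain-Python model of size(): the number of elements in each row's array.
sizes = [len(names) for names in student_full_names]
print(sizes)  # [1, 3, 3, 1, 2]
```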

reverse()

reverse() is used to reverse the order of the values in the array in each row.

Syntax:

reverse(array_column)

Parameter:

array_column refers to the array type column

Example:

Reverse the array for all rows in the Student_full_name column.

import pyspark

#import SparkSession for creating a session

from pyspark.sql import SparkSession

#and import struct types and other data types

from pyspark.sql.types import StructType,StructField,StringType,IntegerType,FloatType,ArrayType

from pyspark.sql.functions import reverse

#create an app named linuxhint

spark_app = SparkSession.builder.appName('linuxhint').getOrCreate()

# consider 5 rows, each with an id and an array of strings

my_array_data = [(1, ['A']), (2, ['B','L','B']), (3, ['K','A','K']),(4, ['K']), (3, ['B','P'])]

#define the StructType and StructFields

#for the above data

schema = StructType([StructField("Student_category", IntegerType()),StructField("Student_full_name", ArrayType(StringType()))])

#create the dataframe and add schema to the dataframe

df = spark_app.createDataFrame(my_array_data, schema=schema)

# reverse array values in Student_full_name column

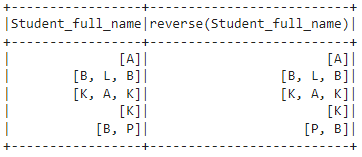

df.select("Student_full_name",reverse('Student_full_name')).show()

Output:

We can see that array values in Student_full_name (2nd column) are reversed.
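To see which rows actually change, the reversal can be modelled in plain Python on the same data. This is only a sketch of the per-row behaviour for non-null arrays, not a Spark call.

```python
# The same arrays as the Student_full_name column in the example.
student_full_names = [['A'], ['B', 'L', 'B'], ['K', 'A', 'K'], ['K'], ['B', 'P']]

# Plain-Python model of reverse(): the elements of each array in reverse order.
reversed_names = [names[::-1] for names in student_full_names]
print(reversed_names)
# [['A'], ['B', 'L', 'B'], ['K', 'A', 'K'], ['K'], ['P', 'B']]
```

With this data, most arrays read the same in both directions, so only the last row, ['B', 'P'], looks different after reversing.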

Conclusion

In this article, we saw three different functions applied to PySpark array type columns. array_remove() is used to remove a particular value from the array in all rows, size() is used to get the total number of values present in an array, and reverse() is used to reverse the array.