Artificial intelligence is one of the fastest-growing technologies. It relies on machine learning algorithms that train and test models on large amounts of data. That data can be stored in many formats, and when building Large Language Model (LLM) applications with LangChain, JSON is a common format for structured output. Training and testing data needs to be clean, complete, and unambiguous so the model can perform effectively.

This guide demonstrates how to use the Pydantic (JSON) parser in LangChain.

How to Use Pydantic (JSON) Parser in LangChain?

JSON stores data as structured text, and that data can be gathered through web scraping, logs, and many other sources. To make sure a model's JSON output has the expected structure, LangChain's “PydanticOutputParser” uses the Python pydantic library to describe the required fields and to validate the output against them. To use the Pydantic JSON parser in LangChain, go through the following steps:
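Conceptually, validating JSON means checking that the parsed document has the expected fields with the expected types. The stdlib-only sketch below illustrates that idea; it is not how pydantic works internally, and the “check_fields” helper, the “expected” mapping, and the sample strings are hypothetical, chosen only for this example:

```python
import json

# Hypothetical schema: field name -> expected Python type after json.loads
expected = {"name": str, "film_names": list}


def check_fields(raw: str, schema: dict) -> list:
    """Hypothetical helper: return the problems found in raw JSON (empty if valid)."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return ["top-level value must be a JSON object"]
    problems = []
    for field_name, field_type in schema.items():
        if field_name not in data:
            problems.append(f"missing field: {field_name}")
        elif not isinstance(data[field_name], field_type):
            problems.append(f"wrong type for field: {field_name}")
    return problems


# Sample inputs for illustration only
good = '{"name": "Tom Hanks", "film_names": ["Cast Away", "Big"]}'
bad = '{"name": "Tom Hanks", "film_names": "Cast Away"}'
print(check_fields(good, expected))  # []
print(check_fields(bad, expected))   # ['wrong type for field: film_names']
```

Returning a list of problems instead of stopping at the first one makes it easier to see everything that is wrong with a response at once.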

Step 1: Install Modules

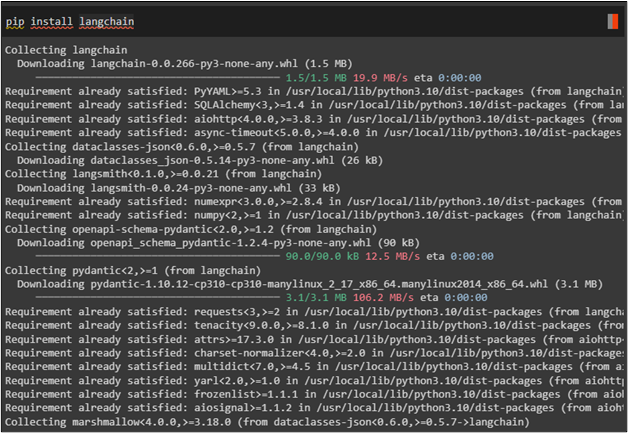

To get started, install the LangChain module with the “pip install langchain” command so its libraries, including the output parsers, are available:

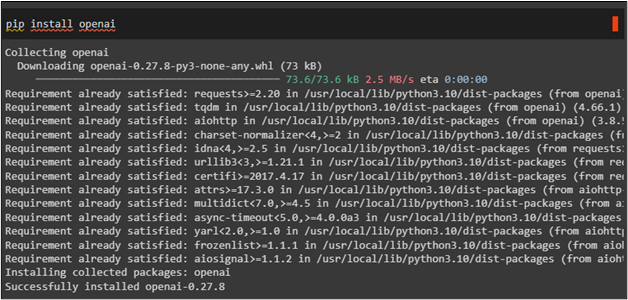

Next, install the OpenAI package with the “pip install openai” command so LangChain can use OpenAI's models:

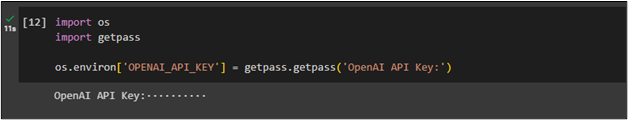

After installing the modules, provide your OpenAI API key as an environment variable using the “os” and “getpass” libraries:

```python
import os
import getpass

# Ask for the key without echoing it, then expose it to the OpenAI client
os.environ['OPENAI_API_KEY'] = getpass.getpass('OpenAI API Key:')
```
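When a notebook or script is re-run, the snippet above asks for the key every time. A small helper like the hypothetical “ensure_api_key” below (not part of LangChain or OpenAI) reuses a key that is already set and only falls back to “getpass” when it is missing. The “environ” parameter is an assumption added so the function can be tried against a plain dictionary:

```python
import getpass
import os
from typing import MutableMapping


def ensure_api_key(var_name: str = "OPENAI_API_KEY",
                   environ: MutableMapping[str, str] = os.environ) -> str:
    """Hypothetical helper: return the key from environ, prompting only if it is missing."""
    key = environ.get(var_name)
    if not key:
        # Hidden prompt so the key is not echoed to the terminal
        key = getpass.getpass(f"{var_name}: ")
        environ[var_name] = key
    return key
```

Calling “ensure_api_key()” once near the top of the script sets “OPENAI_API_KEY” in the process environment exactly like the original snippet, but skips the prompt on later runs in the same session.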

Step 2: Import Libraries

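Import the prompt template classes from LangChain. A prompt template phrases the question in natural language with placeholders that are filled in at run time, so the model always receives a consistent, well-formed prompt. Also import “OpenAI” and “ChatOpenAI”, the wrappers for OpenAI's completion and chat models, and “PydanticOutputParser”, the parser used in the following steps:

```python
# Prompt template classes
from langchain.prompts import (
    PromptTemplate,
    ChatPromptTemplate,
    HumanMessagePromptTemplate,
)

# Wrappers for OpenAI's completion and chat models
from langchain.llms import OpenAI
from langchain.chat_models import ChatOpenAI

# Parser that turns the model's JSON output into a pydantic object
from langchain.output_parsers import PydanticOutputParser
```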
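At its core, a prompt template is a string with named placeholders. As a rough stdlib analogue of LangChain's “PromptTemplate” (an illustration, not its actual implementation), “string.Formatter” can list the placeholders a template needs and “str.format” fills them in. The template below is similar to the one used in Step 4, and the filled-in values are placeholders:

```python
from string import Formatter

template = "Reply to the prompt from the user.\n{format_instructions}\n{query}\n"

# Collect the named placeholders in the order they appear in the template
placeholders = [name for _, name, _, _ in Formatter().parse(template) if name]
print(placeholders)  # ['format_instructions', 'query']

# Fill every placeholder to produce the final prompt text
filled = template.format(
    format_instructions="Return a JSON object.",
    query="I want to see the filmography of any actor",
)
print(filled)
```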

After that, import “BaseModel”, “Field”, and “validator” from pydantic, along with “List” from typing, to define the data structure the JSON parser should produce:

```python
from pydantic import BaseModel, Field, validator
from typing import List
```
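“Field(description=...)” attaches a human-readable description to each attribute, and the parser includes the model's JSON schema, descriptions and all, in the format instructions it sends to the LLM. The stdlib sketch below mimics that idea with a dataclass whose field metadata stands in for “Field”; “describe” is a hypothetical helper and the output only loosely resembles a JSON schema, so treat it as an illustration rather than pydantic's real schema output:

```python
import json
from dataclasses import dataclass, field, fields
from typing import List


@dataclass
class Actor:
    # metadata plays the role of pydantic's Field(description=...)
    name: str = field(metadata={"description": "Lead Actor's Name"})
    film_names: List[str] = field(
        metadata={"description": "Films in which the actor was lead"}
    )


def describe(cls) -> dict:
    """Hypothetical helper: build a small JSON-schema-like dict from dataclass fields."""
    type_names = {str: "string", List[str]: "array"}
    properties = {
        f.name: {
            "description": f.metadata.get("description", ""),
            "type": type_names.get(f.type, "unknown"),
        }
        for f in fields(cls)
    }
    return {"properties": properties, "required": [f.name for f in fields(cls)]}


print(json.dumps(describe(Actor), indent=2))
```

The descriptions are what tell the LLM what each key should contain, so clear, specific wording there tends to matter as much as the prompt itself.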

Step 3: Building a Model

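With the libraries imported, create the pre-trained language model using the “OpenAI()” method. Setting the temperature to 0.0 keeps the output as close to deterministic as possible, which helps the model return consistently formatted JSON:

```python
# Assumed value: replace with the OpenAI completion model you want to use
model_name = "gpt-3.5-turbo-instruct"
temperature = 0.0

model = OpenAI(model_name=model_name, temperature=temperature)
```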
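The “temperature” parameter controls how random the sampling is. Conceptually, the model's scores (logits) are divided by the temperature before a softmax, so lower values concentrate probability on the most likely token, and a temperature of 0 is usually treated as always picking it (greedy decoding). This is a simplified illustration, not OpenAI's actual sampler, and the logits are made-up values:

```python
import math
from typing import List


def token_probs(logits: List[float], temperature: float) -> List[float]:
    """Softmax over logits scaled by 1/temperature; temperature 0 means greedy."""
    if temperature == 0:
        # Greedy decoding: all probability on the highest logit
        best = max(range(len(logits)), key=lambda i: logits[i])
        return [1.0 if i == best else 0.0 for i in range(len(logits))]
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5]  # made-up scores for three candidate tokens
for t in (1.0, 0.5, 0.0):
    print(t, [round(p, 3) for p in token_probs(logits, t)])
```

Lowering the temperature makes the first token's probability grow toward 1, which is why a value of 0.0 suits tasks like producing JSON in a fixed format.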

Step 4: Configure Actor BaseModel

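Define the “Actor” data structure that describes the expected answer: the lead actor's name and the films they starred in. Then create a “PydanticOutputParser” for it and a prompt template that places the parser's format instructions ahead of the user's query:

```python
# Desired data structure for the model's answer
class Actor(BaseModel):
    name: str = Field(description="Lead Actor's Name")
    film_names: List[str] = Field(description="Films in which the actor was lead")

# Query intended to make the model populate the Actor structure
actor_query = "I want to see the filmography of any actor"

# Parser that validates the model's JSON output against Actor
parser = PydanticOutputParser(pydantic_object=Actor)

# The format instructions are pre-filled; only "query" is supplied later
prompt = PromptTemplate(
    template="Reply to the prompt from the user.\n{format_instructions}\n{query}\n",
    input_variables=["query"],
    partial_variables={"format_instructions": parser.get_format_instructions()},
)
```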
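The “partial_variables” argument fills in “format_instructions” when the template is created, so only “query” has to be supplied later. A stdlib analogue using “functools.partial” is sketched below; it is an illustration rather than LangChain's implementation, and the instruction text is a shortened placeholder, not the parser's real output:

```python
from functools import partial

template = "Reply to the prompt from the user.\n{format_instructions}\n{query}\n"

# Placeholder text; in LangChain the real value comes from parser.get_format_instructions()
format_instructions = 'Respond with a JSON object with keys "name" and "film_names".'

# Pre-fill format_instructions now, leaving only query to be supplied
prompt_for = partial(template.format, format_instructions=format_instructions)

text = prompt_for(query="I want to see the filmography of any actor")
print(text)
```

Fixing the instructions once keeps every prompt built from the template consistent, while the query can change from call to call.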

Step 5: Testing the Base Model

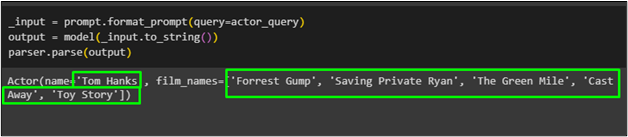

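Format the prompt with the query, send it to the model, and pass the model's raw text output to the parser's parse() function, which returns an “Actor” object:

```python
# Fill in the query; the format instructions are already included
_input = prompt.format_prompt(query=actor_query)

# Send the prompt text to the model and get its raw string output
output = model(_input.to_string())

# Validate the JSON in the output and convert it into an Actor instance
parser.parse(output)
```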
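Roughly speaking, parse() locates the JSON object in the model's text, loads it, and validates it against the “Actor” model, raising an error if anything does not match. The stdlib sketch below imitates that flow with a dataclass; “parse_actor” is a hypothetical helper with only minimal type checks, and the sample string is invented for illustration, not a real model response:

```python
import json
import re
from dataclasses import dataclass
from typing import List


@dataclass
class Actor:
    name: str
    film_names: List[str]


def parse_actor(text: str) -> Actor:
    """Hypothetical helper: extract the {...} span from model output and validate it."""
    match = re.search(r"\{.*\}", text, re.DOTALL)
    if match is None:
        raise ValueError("no JSON object found in output")
    data = json.loads(match.group(0))  # JSONDecodeError is a ValueError
    name = data.get("name")
    films = data.get("film_names")
    # Minimal type checks standing in for pydantic's validation
    if not isinstance(name, str):
        raise ValueError("'name' must be a string")
    if not (isinstance(films, list) and all(isinstance(f, str) for f in films)):
        raise ValueError("'film_names' must be a list of strings")
    return Actor(name=name, film_names=films)


sample = 'Here is the answer:\n{"name": "Tom Hanks", "film_names": ["Big", "Cast Away"]}'
print(parse_actor(sample))
```

Searching for the braces first tolerates extra text the model may add around the JSON, while the type checks reject answers that have the right keys but the wrong shapes.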

In this run, the model returned the actor “Tom Hanks” with a list of his films, and the Pydantic parser converted that answer into an “Actor” object.

That is all about using the pydantic JSON parser in LangChain.

Conclusion

To use the Pydantic JSON parser in LangChain, install the LangChain and OpenAI modules and set your OpenAI API key. Then import the OpenAI wrapper and the pydantic classes, define a BaseModel that describes the expected JSON structure, and build a prompt that includes the parser's format instructions. Finally, pass the model's output to the parse() function, which validates it and returns a structured object. This post demonstrated the process of using the Pydantic JSON parser in LangChain.