This guide explains how to wrap output parsers with the auto-fixing parser in LangChain to avoid parsing errors.

How to Wrap Output Parsers to Avoid Errors Using the Auto-Fixing Parser in LangChain?

To wrap output parsers with the auto-fixing parser in LangChain and avoid errors, follow the steps below:

Step 1: Set Up Prerequisites

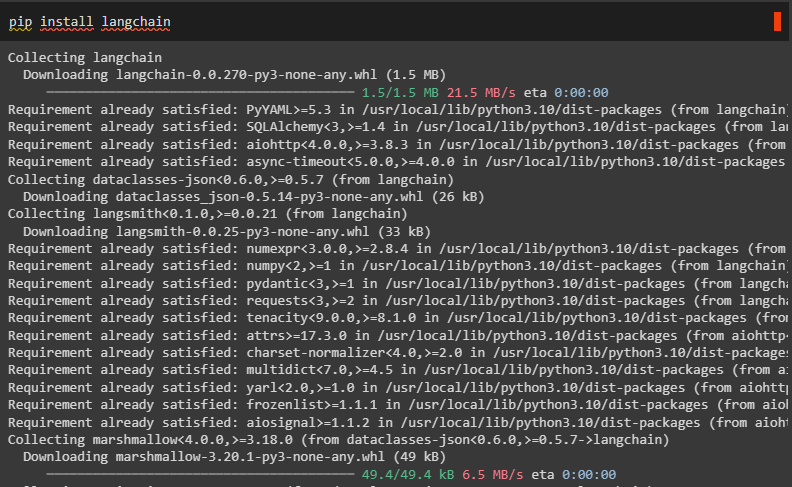

First, install the LangChain framework, which provides the auto-fixing parser, for example with `pip install langchain`.

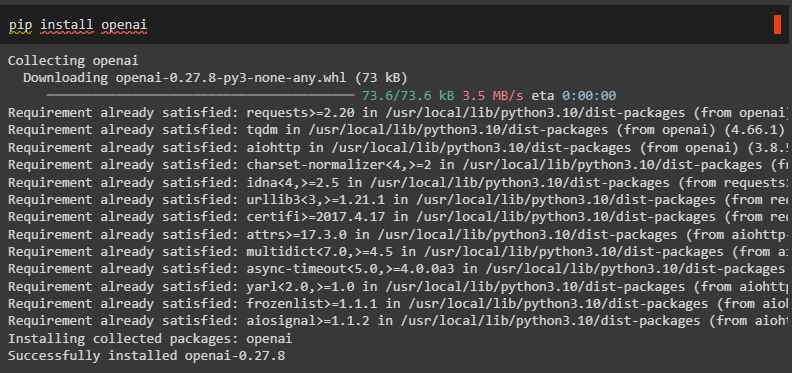

Next, install the OpenAI module, which LangChain uses to connect to OpenAI models and generate responses, for example with `pip install openai`.
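Before moving on, it can help to confirm that both packages are importable in the current environment. The following is an optional check using only the standard library. It assumes the packages are imported as `langchain` and `openai`, and the helper name `missing_packages` is hypothetical (it is not part of LangChain):

```python
import importlib.util


def missing_packages(names):
    """Return the top-level module names that cannot be found in this environment."""
    # find_spec returns None when a top-level module is not installed
    return [name for name in names if importlib.util.find_spec(name) is None]


missing = missing_packages(["langchain", "openai"])
if missing:
    print("Install these before continuing:", ", ".join(missing))
else:
    print("LangChain and OpenAI are installed.")
```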

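Connect to the OpenAI environment by importing the “os” and “getpass” libraries and storing your API key in an environment variable:

```python
import os
import getpass

# Prompt for the key without echoing it, then store it where LangChain's OpenAI classes look for it
os.environ["OPENAI_API_KEY"] = getpass.getpass("OpenAI API Key:")
```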
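If the notebook or script is re-run often, prompting every time can be avoided by asking for the key only when it is missing. This is a small sketch of that idea; `ensure_openai_key` and its `prompt` parameter are hypothetical names introduced here for illustration:

```python
import getpass
import os


def ensure_openai_key(prompt=getpass.getpass):
    """Prompt for the OpenAI API key only if OPENAI_API_KEY is not already set."""
    # Reuse a key already exported in the shell or set earlier in the session
    if not os.environ.get("OPENAI_API_KEY"):
        os.environ["OPENAI_API_KEY"] = prompt("OpenAI API Key:")
    return os.environ["OPENAI_API_KEY"]
```

Calling `ensure_openai_key()` once at the top of the session behaves like the `getpass` line above on the first run and does nothing on later runs.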

Step 2: Import Libraries

After installing the necessary modules, import the libraries needed to build the output parser:

```python
from langchain.prompts import ChatPromptTemplate
from langchain.prompts import HumanMessagePromptTemplate
from langchain.chat_models import ChatOpenAI
from langchain.output_parsers import PydanticOutputParser
from langchain.llms import OpenAI
from pydantic import BaseModel, Field, validator
from typing import List
```

Step 3: Build the Output Parser

Now, define an Actor class that inherits from BaseModel, describe its fields, and pass the class to PydanticOutputParser() to create the parser:

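```python
# Pydantic model describing the structure the response must follow
class Actor(BaseModel):
    name: str = Field(description="Lead Actor's Name")
    film_names: List[str] = Field(description="Films in which the actor was lead")


# Example query describing the information we want from the model
actor_query = "I want to see the filmography of any actor"

# Parser that converts a JSON reply into an Actor instance
parser = PydanticOutputParser(pydantic_object=Actor)
```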
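Conceptually, the Pydantic parser expects the reply to be a JSON object whose keys match the `Actor` fields, and it checks their types. The following is a simplified, standard-library stand-in for that behavior, not LangChain's implementation; `ActorRecord` and `parse_actor` are hypothetical names used only to show what a well-formed response looks like:

```python
import json
from dataclasses import dataclass
from typing import List


@dataclass
class ActorRecord:
    name: str
    film_names: List[str]


def parse_actor(text: str) -> ActorRecord:
    """Parse a JSON reply into an ActorRecord, raising ValueError on bad input."""
    data = json.loads(text)  # json.JSONDecodeError is a subclass of ValueError
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    name = data.get("name")
    films = data.get("film_names")
    # Mirror the field types declared on the Actor model
    if not isinstance(name, str):
        raise ValueError("'name' must be a string")
    if not isinstance(films, list) or not all(isinstance(f, str) for f in films):
        raise ValueError("'film_names' must be a list of strings")
    return ActorRecord(name=name, film_names=films)


well_formed = '{"name": "Tom Hanks", "film_names": ["Forrest Gump", "Cast Away"]}'
print(parse_actor(well_formed))
# ActorRecord(name='Tom Hanks', film_names=['Forrest Gump', 'Cast Away'])
```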

Step 4: Parse a Misformatted Response

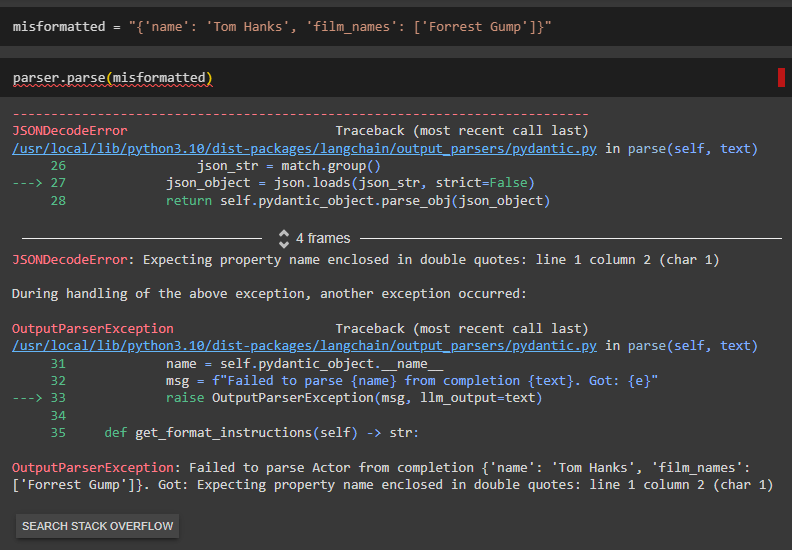

Create a variable named “misformatted” that holds a response in an incorrect format, so parsing it is expected to fail.

Pass this variable to the parser's parse() method, and it raises an error because the response does not match the expected format.
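As an illustration, a reply that uses Python-style single quotes is a common way to break a JSON-based parser, because JSON requires double-quoted strings. The sketch below uses an assumed example value for `misformatted` and the standard `json` module to reproduce that kind of failure; LangChain reports it as an `OutputParserException`, and the exact message may vary by version:

```python
import json

# Assumed example of a misformatted reply: single quotes are not valid JSON
misformatted = "{'name': 'Tom Hanks', 'film_names': ['Forrest Gump']}"

try:
    json.loads(misformatted)
except json.JSONDecodeError as err:
    # Report why the text could not be read as JSON
    print("Parsing failed:", err.msg)
```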

Step 5: Use the Auto-Fixing Parser

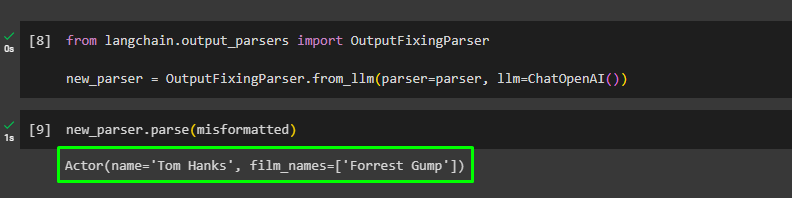

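Import the OutputFixingParser class and use it to wrap the original parser, storing the result in a new_parser variable. The wrapper uses a chat model to repair output that the original parser rejects:

```python
from langchain.output_parsers import OutputFixingParser

# Wrap the Pydantic parser; ChatOpenAI is used to correct misformatted output
new_parser = OutputFixingParser.from_llm(parser=parser, llm=ChatOpenAI())
```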

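Call parse() on new_parser with the same misformatted input. When the original parser fails, the fixing parser sends the faulty output and the error message to the LLM, asks it for a corrected version, and parses that instead, so it returns a properly structured response for the query rather than an error.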
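The control flow behind this is a retry loop: try the wrapped parser first and, only if it fails, hand the bad text and the error to an LLM and parse its reply. The following standard-library sketch mimics that flow with a stand-in `fake_llm_fix` function in place of `ChatOpenAI`. Both `parse_with_fixing` and `fake_llm_fix` are hypothetical names, and the retry limit is an assumption; the real `OutputFixingParser` builds a prompt and calls the model instead:

```python
import json
from typing import Callable, TypeVar

T = TypeVar("T")


def parse_with_fixing(
    text: str,
    parse: Callable[[str], T],
    fix: Callable[[str, str], str],
    max_retries: int = 1,
) -> T:
    """Parse text; on failure, ask `fix` for corrected text and try again."""
    for attempt in range(max_retries + 1):
        try:
            return parse(text)
        except ValueError as err:
            if attempt == max_retries:
                raise  # give up after the last allowed retry
            # Send the bad output plus the error message to the "LLM"
            text = fix(text, str(err))
    raise AssertionError("unreachable")


def fake_llm_fix(bad_text: str, error: str) -> str:
    """Stand-in for an LLM call: naively swap single quotes for double quotes."""
    return bad_text.replace("'", '"')


misformatted = "{'name': 'Tom Hanks', 'film_names': ['Forrest Gump']}"
print(parse_with_fixing(misformatted, json.loads, fake_llm_fix))
# {'name': 'Tom Hanks', 'film_names': ['Forrest Gump']}
```

Passing the error message to the fixer matters: it tells the model what went wrong instead of asking it to guess, which is the main reason the wrapper can recover from formatting mistakes.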

That is all about wrapping output parsers with the auto-fixing parser in LangChain to avoid errors.

Conclusion

To wrap an output parser with the auto-fixing parser in LangChain, first install the LangChain and OpenAI modules and connect to the OpenAI environment using its API key. Then import the required libraries and build an output parser from a Pydantic model that describes the expected response. Parsing a response in the wrong format with this parser raises an error. To get the correct response instead, wrap the output parser with OutputFixingParser, which uses an LLM to repair the misformatted output before parsing it. This guide has explained how to wrap output parsers with the auto-fixing parser in LangChain to avoid errors.