In this tutorial, we will discuss the options to locally disable the computation of gradients within PyTorch.

What is the Definition of a Gradient?

In mathematics, a gradient is the slope of a curve: the change in the dependent value divided by the corresponding change in the independent value. A distance-time graph makes a good example. The gradient between a starting point ‘A’ and an ending point ‘B’ is the distance travelled between the two points divided by the time taken to travel it. This gives the speed, i.e., distance over time. In PyTorch, gradients are the derivatives that autograd computes for tensors during backpropagation.
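The distance-time example can be worked through with a few lines of plain Python. The readings for points A and B below are made-up values chosen only for illustration:

```python
# Hypothetical readings from a distance-time graph (illustrative values).
t_a, d_a = 2.0, 10.0   # point A: time in seconds, distance in metres
t_b, d_b = 6.0, 50.0   # point B: time in seconds, distance in metres

# Gradient (slope) = change in distance / change in time
speed = (d_b - d_a) / (t_b - t_a)
print(speed)  # 10.0, i.e. 10 metres per second
```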

How to Locally Disable Gradient Computation in PyTorch?

PyTorch offers two main techniques for disabling gradient calculation: the “torch.no_grad()” context manager and the “requires_grad” tensor attribute. They serve a similar purpose but differ in how they operate.

A few of the features of the two methods are listed below:

- The purpose of both these techniques is to prevent the calculation of gradients in a neural network model in PyTorch.

- The flexibility of these methods ensures that the developer can use them to enable and disable gradients wherever required.

- The “torch.no_grad()” context manager disables gradient tracking for all the code contained within its block. Any number of tensor operations can be placed inside it.

- The “requires_grad” attribute acts on a particular tensor. Setting it to “False” stops gradient computation for that single tensor, which gives the user finer control.

- Gradients are computed only for operations performed outside a “torch.no_grad()” block on tensors that have “requires_grad” set to “True”.
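The difference between the two techniques can be sketched side by side. The tensor names and values here are hypothetical and serve only to illustrate the behaviour described in the list above:

```python
import torch

# Illustrative tensor with gradient tracking enabled (names are hypothetical).
w = torch.tensor([1.0, 2.0, 3.0], requires_grad=True)

# Outside no_grad: the result is tracked by autograd.
y_tracked = w * 2

# Inside no_grad: nothing computed here is tracked, whatever its inputs.
with torch.no_grad():
    y_untracked = w * 2

# Per-tensor control: this tensor is never tracked.
frozen = torch.tensor([1.0, 2.0, 3.0], requires_grad=False)
z = frozen * 2

print(y_tracked.requires_grad)    # True
print(y_untracked.requires_grad)  # False
print(z.requires_grad)            # False
```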

Follow the steps below to learn how to use these two techniques to locally enable/disable the computation of gradients in PyTorch:

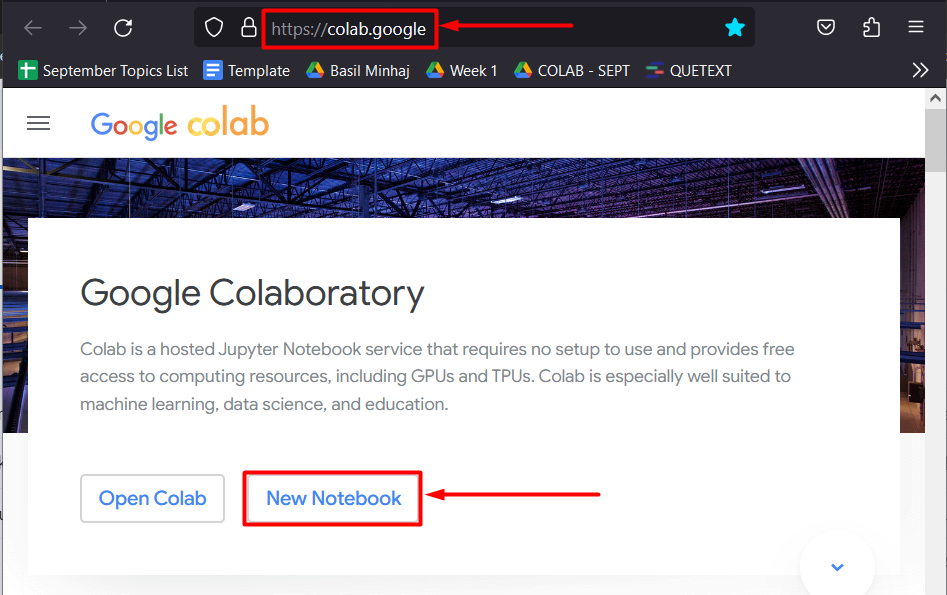

Step 1: Set up a Colab Notebook

The first step of a project is to choose an IDE and set up the workspace. For this purpose, open the Google Colaboratory website and click on the “New Notebook” button to create a Colab notebook as shown:

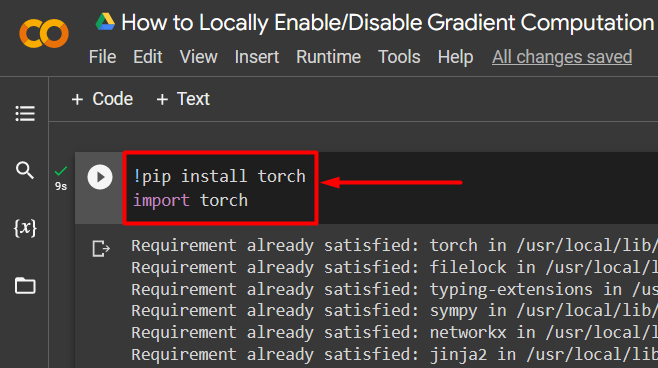

Step 2: Install and Import the Torch Library

The Torch library contains all the essential functionality of the PyTorch framework. Installing and importing it is necessary for every PyTorch project:

import torch

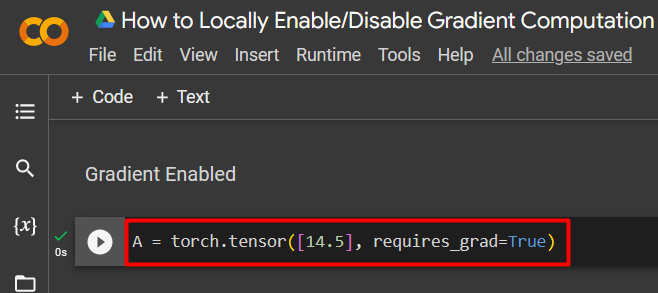

Step 3: Define a Custom PyTorch Tensor

Use the “torch.tensor()” function to define a PyTorch tensor ‘A’ with “requires_grad=True”. Gradient tracking is enabled for this tensor:

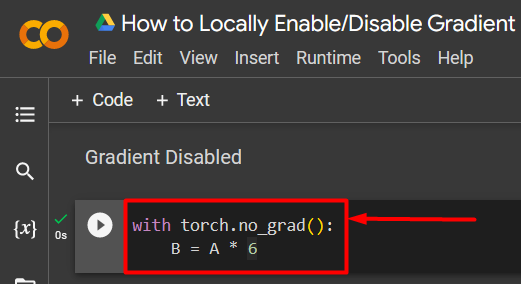
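A minimal sketch of this step is given below. The values in the tensor are an assumption, since the ones used in the original notebook are not reproduced in the text. Note that only floating-point (or complex) tensors can require gradients:

```python
import torch

# Illustrative values; the original notebook's values are not known.
A = torch.tensor([1.0, 2.0, 3.0], requires_grad=True)

print(A.requires_grad)  # True
```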

Step 4: Disable the Gradient using the “torch.no_grad()” Context Manager

The “torch.no_grad()” context manager is used in a “with” statement, inside which another tensor ‘B’ is defined by a simple arithmetic operation on the original tensor ‘A’. Gradient tracking is disabled for this new tensor ‘B’:

with torch.no_grad():
    B = A * 6

Gradient disabled:

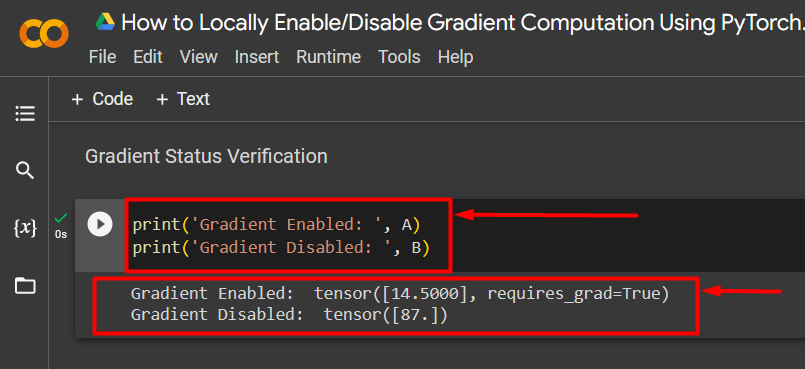
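Put together as a runnable sketch (again with illustrative values for ‘A’), the step might look like this. Checking “grad_fn” is an extra detail added here to show that no autograd history was recorded for ‘B’:

```python
import torch

A = torch.tensor([1.0, 2.0, 3.0], requires_grad=True)  # illustrative values

# Operations inside the block are not recorded by autograd.
with torch.no_grad():
    B = A * 6

print(B.requires_grad)  # False
print(B.grad_fn)        # None
```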

Step 5: Verify Gradients

Use the “print()” function to confirm that the gradient is enabled for tensor “A” and disabled for tensor “B” as shown:

print('Gradient Enabled: ', A)
print('Gradient Disabled: ', B)

The output of the tensor with the gradient enabled and the tensor with the gradient disabled is shown below:
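As a self-contained sketch of this check (tensor values are illustrative, and the tensor ‘C’ is a hypothetical addition for comparison), the snippet below also shows the practical consequence: backpropagation works through a tracked result but fails on one created under “torch.no_grad()”:

```python
import torch

A = torch.tensor([1.0, 2.0, 3.0], requires_grad=True)  # illustrative values

with torch.no_grad():
    B = A * 6          # not tracked

C = A * 6              # tracked (hypothetical tensor added for comparison)

print('A requires grad:', A.requires_grad)  # True
print('B requires grad:', B.requires_grad)  # False

# Backpropagation through the tracked result fills A.grad.
C.sum().backward()
print(A.grad)  # each element is 6, the derivative of 6 * a with respect to a

# Backpropagation through B fails because it has no autograd history.
try:
    B.sum().backward()
    b_backward_failed = False
except RuntimeError:
    b_backward_failed = True
print('backward() on B failed:', b_backward_failed)  # True
```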


Pro-Tip

The ability to enable and disable gradients in PyTorch is handy for data scientists who wish to control which tensors and parameters are updated during the training of their neural network models. Disabling gradients can also reduce memory use and speed up processing, because autograd does not have to record operations for a backward pass.
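Both uses can be sketched with a tiny model. The “torch.nn.Linear” layer, its sizes, and the random input are assumptions made for illustration and are not part of the original notebook:

```python
import torch

# Hypothetical tiny model and input, for illustration only.
model = torch.nn.Linear(3, 1)
x = torch.randn(4, 3)

# Inference without building the autograd graph.
with torch.no_grad():
    preds = model(x)
print(preds.requires_grad)  # False

# Freeze the bias so that only the weight receives a gradient.
model.bias.requires_grad_(False)
loss = model(x).sum()
loss.backward()

print(model.weight.grad is not None)  # True
print(model.bias.grad is None)        # True
```

Here “requires_grad_()” is the in-place tensor method that sets the “requires_grad” attribute on an existing tensor.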

Success! We have showcased how to enable/disable the computation of gradients in PyTorch.

Conclusion

Enable gradient computation locally by setting “requires_grad=True” on a tensor, and disable it either by setting “requires_grad=False” or by using the “torch.no_grad()” context manager. Gradient calculation is an essential component of the optimization of neural network models during training, since the gradients determine how the model's parameters are updated. In this blog, we have shown how the gradient calculation can be enabled or disabled as per user requirements.