LangChain is a framework for building applications on top of large language models (LLMs), such as chatbots that answer queries or prompts written in natural language. A chain links a prompt, a model, and any further processing steps so that they run as a single unit. Many users build such chains with LangChain, and LangChainHub gives them a single place to store them. It is maintained by the LangChain project, and users can find and submit prompts, chains, and agents related to their work there.

This guide will explain the process of loading chains from LangChainHub using LangChain.

How to Load Chains from LangChainHub Using LangChain?

To load chains from LangChainHub using LangChain, follow the methods described below:

Method 1: Load Chains Using the load_chain Function

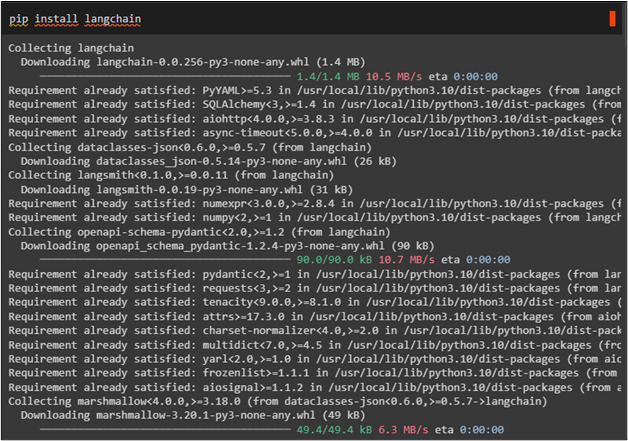

Start the example by installing the LangChain module with the following command:

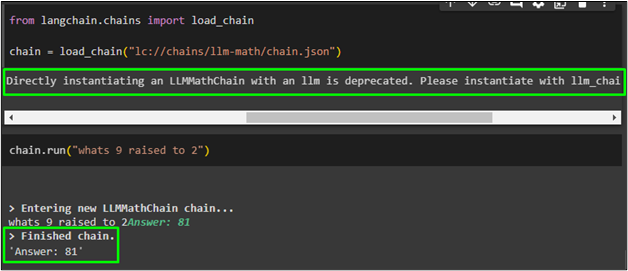

Import the load_chain function from LangChain and call it with the path of the file to load from LangChainHub:

from langchain.chains import load_chain

chain = load_chain("lc://chains/llm-math/chain.json")
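The path passed to load_chain() follows a visible pattern: the lc:// prefix, then a category such as chains, then the name of the chain, and finally the file name. The sketch below only illustrates that pattern by splitting a path into its parts. The describe_hub_path() helper is hypothetical and is not part of LangChain, and LangChain itself may parse these paths differently.

```python
from pathlib import PurePosixPath

HUB_PREFIX = "lc://"


def describe_hub_path(path: str) -> dict:
    """Split a LangChainHub-style path into category, name, file, and format.

    Illustration only: this helper is hypothetical and not a LangChain API.
    """
    if not path.startswith(HUB_PREFIX):
        raise ValueError(f"expected a path starting with {HUB_PREFIX!r}: {path!r}")

    remote = PurePosixPath(path[len(HUB_PREFIX):])
    parts = remote.parts
    if len(parts) < 3:
        raise ValueError(f"expected <category>/<name>/<file>: {path!r}")

    return {
        "kind": parts[0],                  # e.g. "chains"
        "name": "/".join(parts[1:-1]),     # e.g. "llm-math"
        "file": remote.name,               # e.g. "chain.json"
        "format": remote.suffix.lstrip("."),
    }


print(describe_hub_path("lc://chains/llm-math/chain.json"))
```

Checking the path shape before calling load_chain() can make a typo in the path easier to spot than a failed download would.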

Call the run() method of the loaded chain with a query in natural language to get an answer from the chain loaded in the previous step.

The chain returns the answer to the query as text.
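The load and run steps of this method can be combined as in the following sketch. It assumes an older LangChain release in which load_chain() still accepts lc:// paths, and an API key for the LLM that the chain is configured with. The run_math_chain() wrapper and the sample question are hypothetical and only serve the illustration.

```python
def run_math_chain(question: str) -> str:
    """Load the llm-math chain from LangChainHub and answer one question."""
    # Imported inside the function so that defining it does not require
    # LangChain to be installed; calling it does.
    from langchain.chains import load_chain

    chain = load_chain("lc://chains/llm-math/chain.json")
    return chain.run(question)


# Example call (hypothetical question; needs network access and an API key):
# print(run_math_chain("What is 12 multiplied by 7?"))
```

Wrapping the two calls in one function keeps the hub path in a single place, which helps if the chain is later replaced by a different file.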

Method 2: Load Chains Using the TextLoader Class

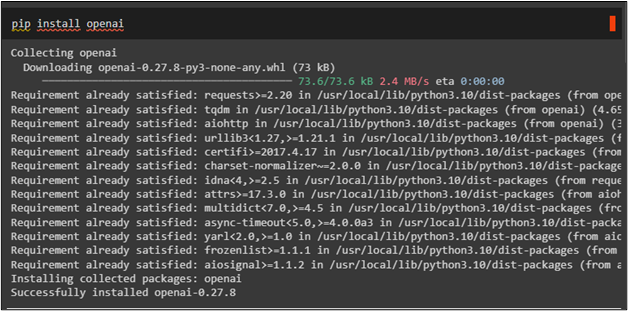

Some chains take extra parameters when they are loaded. A vector-based question-answering chain, for example, requires a vector database. Install the OpenAI module, which provides the language model and the embeddings used in this example:

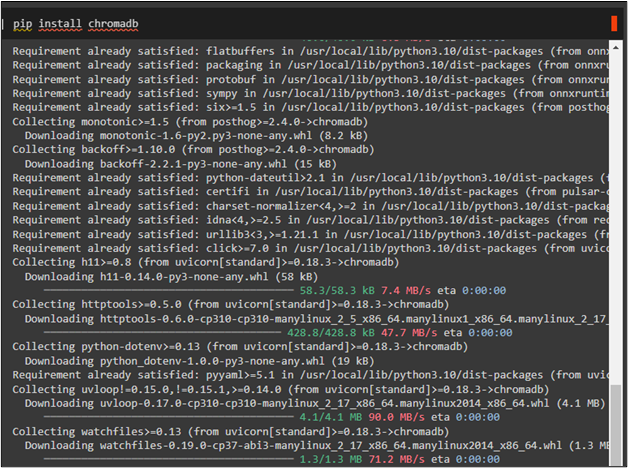

Install chromadb, an open-source vector database for building LLM applications with embeddings:

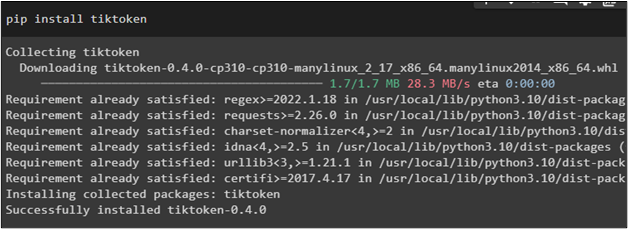

Install tiktoken, the tokenizer library used with OpenAI models to convert text into tokens:

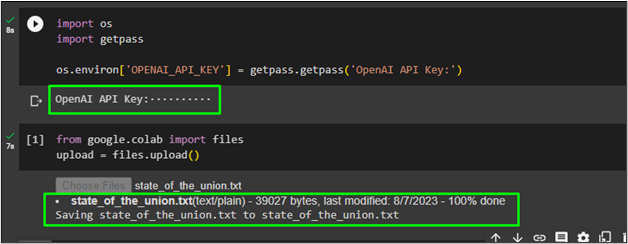
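Before continuing, it can help to confirm that the installed modules are importable in the current environment. The following sketch uses only the Python standard library. The missing_packages() helper is hypothetical, and the module names in REQUIRED are assumed to match the packages installed above.

```python
from importlib.util import find_spec

# Top-level module names assumed for the packages installed in this guide.
REQUIRED = ["langchain", "openai", "chromadb", "tiktoken"]


def missing_packages(names):
    """Return the names that cannot be found as importable modules."""
    return [name for name in names if find_spec(name) is None]


# Example usage:
# print(missing_packages(REQUIRED))  # an empty list means everything is installed
```

An empty list means every module was found. Any name in the result needs to be installed before running the rest of the example.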

Set the OpenAI API key as an environment variable using the os and getpass libraries. The os library allows the user to interact with the operating system, and the getpass library prompts for the key without displaying it on the screen:

import os

import getpass

os.environ['OPENAI_API_KEY'] = getpass.getpass('OpenAI API Key:')
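When the notebook is run more than once, prompting for the key every time becomes tedious. The sketch below shows one possible variation that prompts only if the variable is not already set. The ensure_api_key() helper is hypothetical and uses only the standard library.

```python
import getpass
import os


def ensure_api_key(var_name: str = "OPENAI_API_KEY") -> str:
    """Return the key from the environment, prompting only if it is missing."""
    key = os.environ.get(var_name)
    if not key:
        # getpass hides the typed value, so the key is not shown on screen.
        key = getpass.getpass(f"{var_name}: ")
        os.environ[var_name] = key
    return key


# Example usage:
# ensure_api_key()
```

Keeping the key in an environment variable, rather than in the notebook source, avoids saving it by accident together with the code.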

Upload files to Google Colaboratory (Colab) by importing the “files” library and calling its files.upload() function:

from google.colab import files

upload = files.upload()

The following screenshot displays the uploaded state_of_the_union.txt file, which contains the text that the queries will retrieve data from:

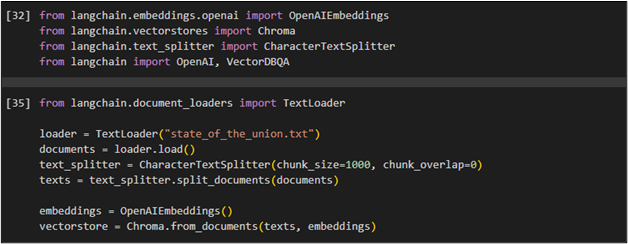

After uploading the data, import the libraries needed to build the vector store and load chains from LangChainHub:

from langchain.embeddings.openai import OpenAIEmbeddings

from langchain.vectorstores import Chroma

from langchain.text_splitter import CharacterTextSplitter

from langchain.document_loaders import TextLoader

from langchain import OpenAI, VectorDBQA

Load the uploaded file with the TextLoader() function, split it into chunks with CharacterTextSplitter, and create the embedding model with the OpenAIEmbeddings() function. Then build the Chroma database with “texts” and “embeddings” as its parameters and store it in the “vectorstore” variable:

loader = TextLoader("state_of_the_union.txt")

documents = loader.load()

text_splitter = CharacterTextSplitter(chunk_size=1000, chunk_overlap=0)

texts = text_splitter.split_documents(documents)

embeddings = OpenAIEmbeddings()

vectorstore = Chroma.from_documents(texts, embeddings)

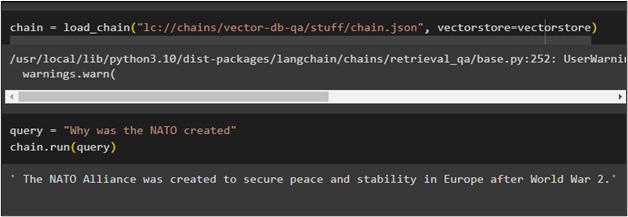
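To get a feel for what the splitting step does with chunk_size=1000 and chunk_overlap=0, the following simplified sketch splits text on blank lines and merges the pieces greedily until the size limit is reached. It is an illustration of the idea only and not LangChain's implementation. The split_text() function and the blank-line separator are assumptions made for this example.

```python
def split_text(text: str, chunk_size: int = 1000, separator: str = "\n\n") -> list:
    """Greedily merge separator-delimited pieces into chunks of limited size.

    A piece that is longer than chunk_size on its own is kept whole.
    """
    chunks = []
    current = ""
    for piece in text.split(separator):
        if not piece:
            continue  # skip empty pieces produced by repeated separators
        candidate = piece if not current else current + separator + piece
        if not current or len(candidate) <= chunk_size:
            current = candidate      # the piece still fits in the open chunk
        else:
            chunks.append(current)   # close the chunk and start a new one
            current = piece
    if current:
        chunks.append(current)
    return chunks


sample = "a" * 600 + "\n\n" + "b" * 600 + "\n\n" + "c" * 100
print([len(chunk) for chunk in split_text(sample)])
```

With no overlap, every piece of text lands in exactly one chunk, so each chunk is embedded and stored once in the vector database.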

After that, load the chain from LangChainHub by providing the path of its file and passing the vectorstore to it as the dataset.

Store the prompt in natural language in the “query” variable and then run the chain with the query variable to get the answer from the chain:

chain.run(query)
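The loading and querying steps of this method can be sketched together as follows. The hub path is assumed from the vector-db-qa example in the LangChain documentation and may differ from the file used in this guide. The ask_vector_chain() wrapper and the sample query are hypothetical. The sketch also assumes an older LangChain release in which load_chain() still accepts lc:// paths.

```python
def ask_vector_chain(vectorstore, query: str) -> str:
    """Load a vector-based QA chain from LangChainHub and run one query."""
    # Imported inside the function so that defining it does not require
    # LangChain to be installed; calling it does.
    from langchain.chains import load_chain

    # Assumed hub path; the vector store is passed as an extra keyword argument.
    chain = load_chain(
        "lc://chains/vector-db-qa/stuff/chain.json",
        vectorstore=vectorstore,
    )
    return chain.run(query)


# Example call (hypothetical query; "vectorstore" is the Chroma database built above):
# print(ask_vector_chain(vectorstore, "What topics does the speech cover?"))
```

The vector store is supplied at load time because the saved chain file describes how to answer questions but does not contain the data itself.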

The chain answers the prompt in the query variable using the data stored in the vector store.

That is all about loading chains from LangChainHub using LangChain.

Conclusion

To load a chain from LangChainHub using LangChain, pass the path of its file on LangChainHub to load_chain() and then run the chain to fetch the answer. Some chains require additional configuration, such as a vector store to retrieve data from. This guide has explained both cases.