This tutorial explains how to solve the “Too many open files” Linux error.

After reading this article, you will understand what causes the error and how to fix it. You will also learn about other important aspects of how Linux processes handle files.

The Meaning of the “Too many open files” Error

When a user runs a process, the kernel tracks every file that process has open through a file descriptor: a small integer that tells the kernel which open file, pipe, or other resource the process is referring to. For example, when we use a pipe to redirect the output of one process to another, each end of the pipe is a file descriptor.

By default, every Linux process starts with three open file descriptors: STDIN, STDOUT, and STDERR. Their functions are shown in the table below.

| File Descriptor | Number | Function |
|---|---|---|
| STDIN | 0 | Refers to the keyboard input method |
| STDOUT | 1 | Prints or redirects the output, refers to the screen |
| STDERR | 2 | Prints or redirects errors, refers to the screen |
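As a minimal sketch of the three descriptors in action, the commands below list the descriptors held by the current shell and then send output and errors to separate files by number. The scratch directory and the two messages are illustrative assumptions, not part of the original tutorial.

```shell
# List the file descriptors held by the current shell ($$ is its PID).
ls -l /proc/$$/fd

# Scratch directory for the demonstration (hypothetical location).
demo_dir=$(mktemp -d)

# Descriptor 1 (STDOUT) goes to out.txt and descriptor 2 (STDERR) to err.txt.
{ echo "regular output"; echo "error message" >&2; } 1>"$demo_dir/out.txt" 2>"$demo_dir/err.txt"

cat "$demo_dir/out.txt"
cat "$demo_dir/err.txt"
```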

There is a limit on the number of file descriptors a user or process can have open simultaneously. When the limit is reached and the user or process tries to open additional file descriptors, we get the “Too many open files” error.

Therefore, the solution to this error is to increase the limit of file descriptors a user or process can open.

Fixing the Linux “Too many open files” Error

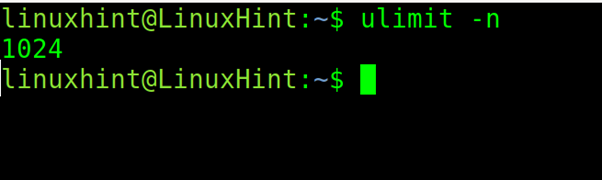

To check the file descriptor limit for the current session, run the command shown below.
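The command itself is not reproduced in the text here, so the following is a sketch that assumes the `ulimit` shell builtin, which the article refers to later. The variable name `session_limit` is only for illustration.

```shell
# Print the limit on open file descriptors for the current shell session.
session_limit=$(ulimit -n)
echo "Current session limit: $session_limit"
```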

As you can see above, the limit is 1024.

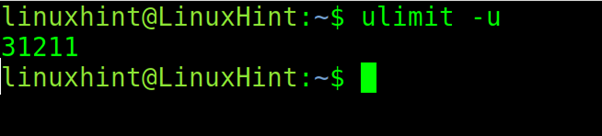

Now, let’s check the user limit by executing the following command:

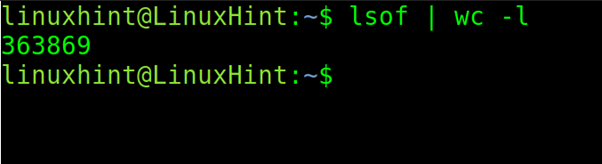

To see the number of currently open files, you can use the command shown in the screenshot below.

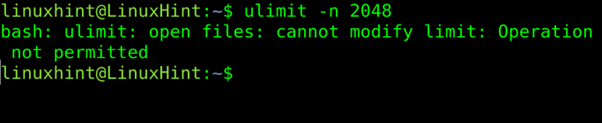
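If the tool used in the screenshot is not available, a rough equivalent can be sketched with the `/proc` filesystem alone. This is an alternative approach, not necessarily the command the screenshot shows; the variable names are illustrative.

```shell
# Count the descriptors open in the current shell ($$ is its PID).
shell_fds=$(ls /proc/$$/fd | wc -l)
echo "Open descriptors in this shell: $shell_fds"

# System-wide view: file-nr holds the allocated file handles, the unused
# allocated handles, and the system maximum, in that order.
read -r allocated unused maximum < /proc/sys/fs/file-nr
echo "Allocated file handles: $allocated (system maximum: $maximum)"
```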

The command shown below can be used to increase the file descriptor limit and solve this error. However, it may fail if the requested value exceeds the limit specified in the configuration files.
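A hedged sketch of that attempt follows. It assumes the `ulimit` builtin and uses 2048 as an example target. It checks the hard limit first, so it reports the problem instead of failing, and it only touches the soft limit of the current session.

```shell
target=2048   # example value; adjust to your needs

# The hard limit is the ceiling for the soft limit in this session.
hard_limit=$(ulimit -Hn)

if [ "$hard_limit" = "unlimited" ] || [ "$target" -le "$hard_limit" ]; then
    # Set only the soft limit (-S); the change lasts until the shell exits.
    ulimit -Sn "$target"
    echo "Soft limit is now $(ulimit -Sn)"
else
    echo "Cannot set the soft limit to $target: the hard limit is $hard_limit"
fi
```

Note that if the session's soft limit was already above the target, this lowers it for the rest of the session.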

As you can see, the system does not allow us to increase the limit. In such a case, we need to edit a couple of files.

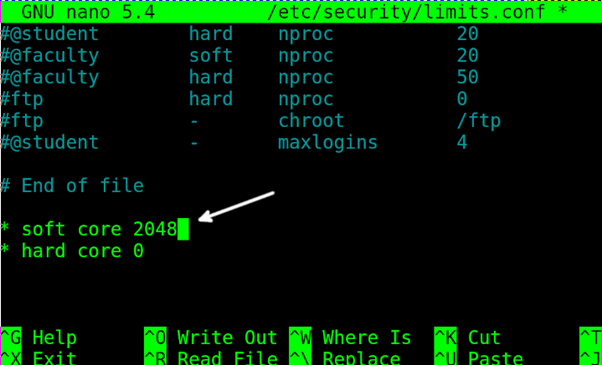

In the first file to edit, you will see two types of limits, hard and soft:

The hard limit is the maximum value the soft limit can be raised to. Raising the hard limit requires privileges.

The soft limit, on the other hand, is the value the system actually enforces on running processes. A user can change their own soft limit, but it can’t be larger than the hard limit.

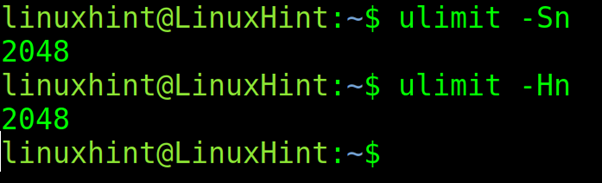

You can check both the hard and soft limits with the commands shown in the following screenshot, where ulimit -Sn shows the soft limit and ulimit -Hn shows the hard limit.

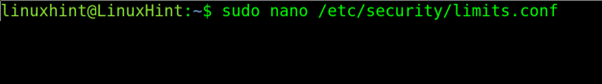
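For reference, the two commands can be typed as follows. The second part is an addition that assumes a Linux `/proc` filesystem: it reads the same pair of values as the kernel records them for the current shell.

```shell
# Soft and hard limits as reported by the shell builtin.
echo "soft: $(ulimit -Sn)  hard: $(ulimit -Hn)"

# The same pair as recorded by the kernel for this shell ($$ is its PID).
limits_line=$(grep "Max open files" /proc/$$/limits)
echo "$limits_line"
```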

First, open the /etc/security/limits.conf file with privileges:

Edit the lines shown below to increase the limit. In my case, I increased the soft limit from 1024 to 2048. You can also edit the hard limit here if necessary.
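As an illustration of the entry format in /etc/security/limits.conf, the sketch below writes two example lines to a scratch file so they can be reviewed before being copied into the real file with privileges. The `*` domain (all users) and the hard value of 4096 are assumptions made for the example; only the 2048 soft limit comes from this tutorial.

```shell
# Scratch file for reviewing the entries (hypothetical; not the real config).
scratch=$(mktemp)

cat > "$scratch" <<'EOF'
# <domain>  <type>  <item>   <value>
*           soft    nofile   2048
*           hard    nofile   4096
EOF

cat "$scratch"
```

The `nofile` item is the one that controls the maximum number of open file descriptors.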

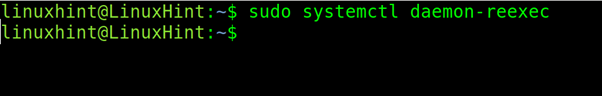

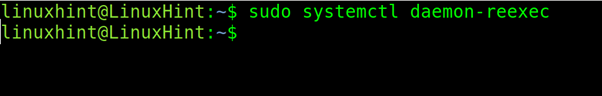

Then, re-execute the systemd manager by running systemctl daemon-reexec with privileges, as shown below.

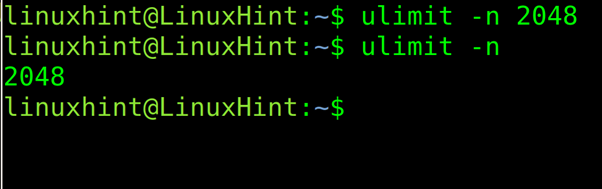

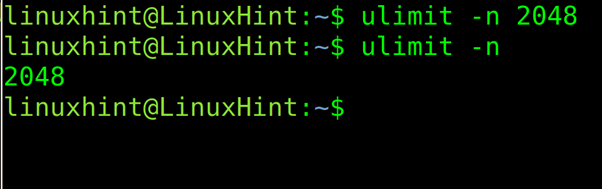

Open a new terminal or restart the session so the changes take effect. Then try to change the ulimit again by running the following command, replacing the value with the limit you specified in the /etc/security/limits.conf file.

As you can see above, the limit was successfully updated.

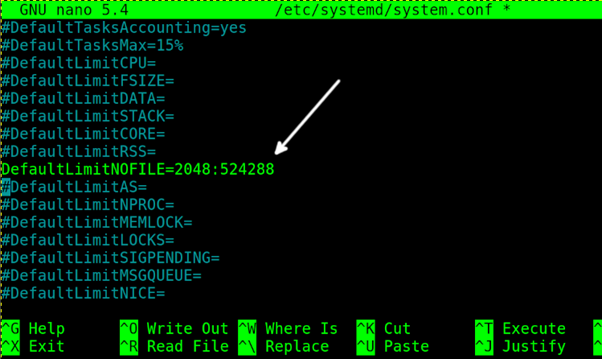

In case it didn’t work for you, open the following file with privileges:

Find the line DefaultLimitNOFILE= and change the first number, the one before the colon, to increase the limit. In my case, I increased it from 1024 to 2048. Then save the changes and close the file.

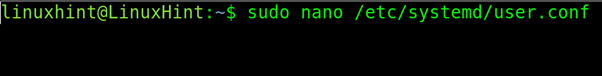

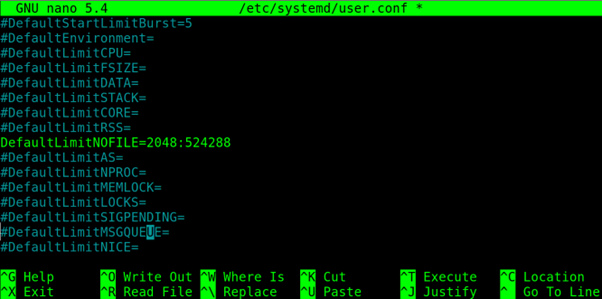

Also, open the following file with privileges:

Find the same line and edit it, repeating the changes made previously.
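The file names are not reproduced in the text above. On systemd-based distributions, the `DefaultLimitNOFILE=` setting normally lives in /etc/systemd/system.conf and /etc/systemd/user.conf, so the sketch below assumes those two paths. The helper function `show_nofile_default` is hypothetical; it only prints the current lines so they can be checked before and after editing.

```shell
# Print every DefaultLimitNOFILE line (commented out or not) in a file.
show_nofile_default() {
    if [ -r "$1" ]; then
        grep 'DefaultLimitNOFILE' "$1" || echo "no DefaultLimitNOFILE line in $1"
    else
        echo "$1 is not readable on this system"
    fi
}

# Assumed locations of the two files to edit.
show_nofile_default /etc/systemd/system.conf
show_nofile_default /etc/systemd/user.conf
```

A line such as `DefaultLimitNOFILE=2048:524288` would set the soft limit to 2048 and leave the value after the colon as the hard limit; the second number here is only an example.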

Run systemctl daemon-reexec again.

Restart the session and use the following command, replacing 2048 with the limit you set in the configuration files:

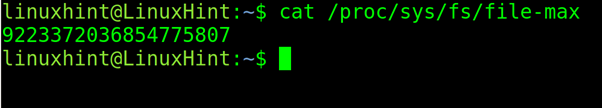

The following command returns the limit for the whole system:

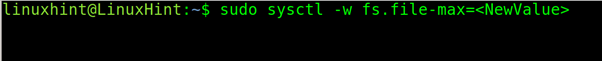
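One way to read this value without extra tools, assuming a Linux `/proc` filesystem, is sketched here. It may differ from the command in the screenshot, and the variable name is illustrative.

```shell
# Read the system-wide maximum number of file handles from the kernel.
system_max=$(cat /proc/sys/fs/file-max)
echo "System-wide limit: $system_max"
```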

You can change the system limit using the following command:

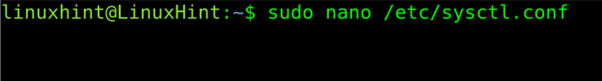

To make the change permanent, edit the following file with privileges:

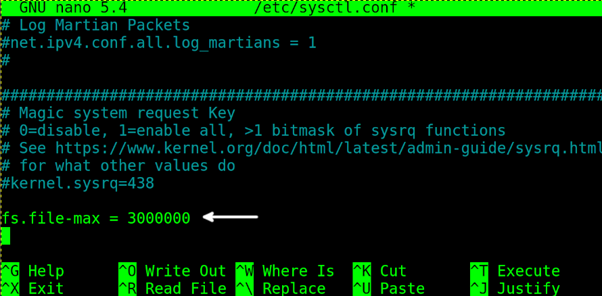

Add the following line replacing <NewValue> with the new limit you want to set:

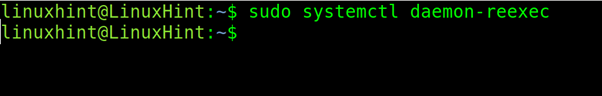

Apply the new changes with the command below.

That’s all. After increasing the ulimit, the “Too many open files” error should be fixed.

Conclusion

Any Linux user can fix this error easily. Linux offers different ways to approach the problem, so feel free to try each of them. The steps described above were performed on a Debian-based distribution but are valid for many Linux distributions. If you are interested in learning more about file descriptors, we have published specific content on this subject that you can read on this site. File descriptors are a basic component of Unix-like operating systems.

Thank you for reading this tutorial explaining how to solve the “Too many open files” error in Linux. Keep following us for more professional Linux articles.