In this article, we will discuss how to tell whether PyTorch is using the GPU.

How to Tell if PyTorch is Using the GPU?

PyTorch, developed by Facebook (now Meta), runs in many development environments. One of the most popular online IDEs is Google Colaboratory (Colab). Follow the steps given below to learn how to tell if PyTorch is using the GPU within Colab:

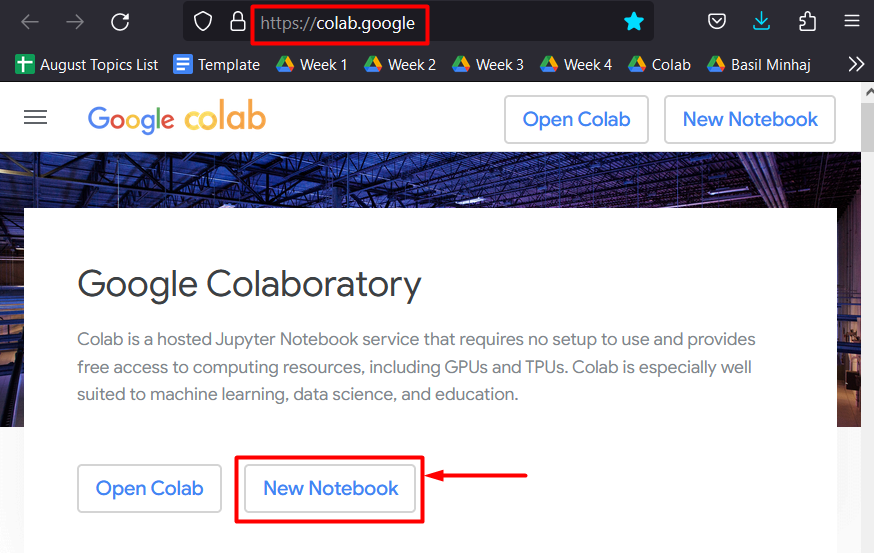

Step 1: Run Google Colab

Start by launching Google Colab from its website and opening a “New Notebook” to begin the project:

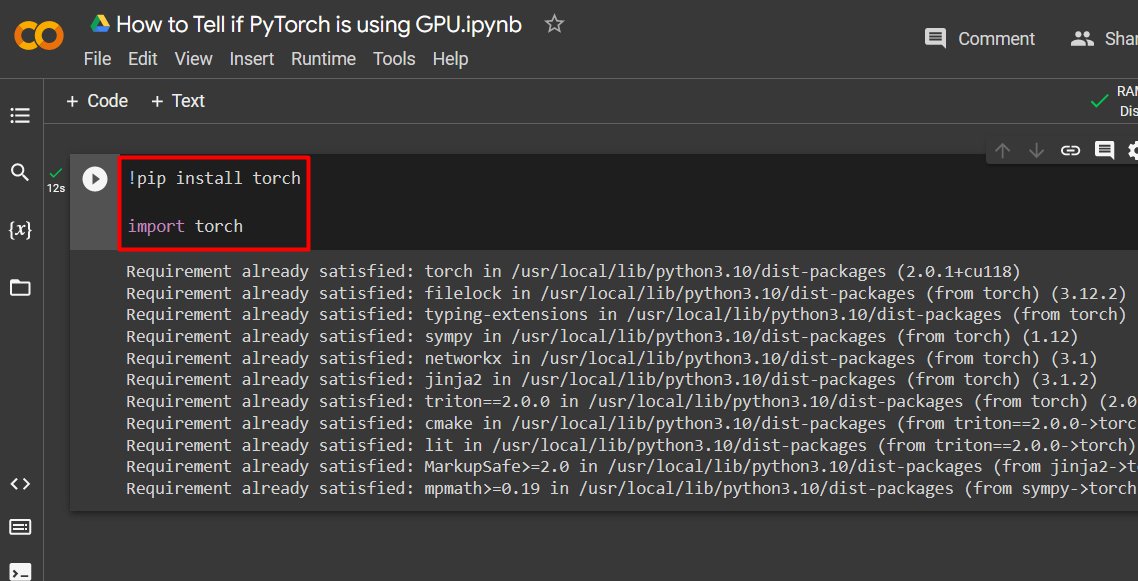

Step 2: Install and Import the Torch Library

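Colab runtimes typically ship with PyTorch preinstalled, so importing it is usually enough. If the import fails, first install the package with the Python package installer by running “!pip install torch”, then use the “import” command to bring the “torch” library into the notebook: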

import torch

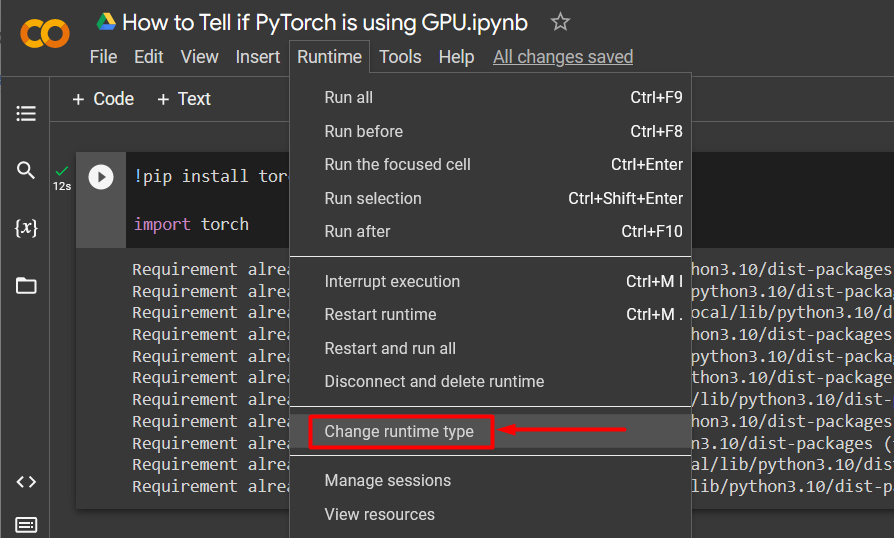
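As an optional sanity check before moving on, it can help to confirm that the library is importable and to see which build is installed. The sketch below is only an illustration: the helper name “torch_build_info” is hypothetical, and it relies on the standard “torch.__version__” and “torch.version.cuda” attributes.

```python
import importlib.util


def torch_build_info() -> dict:
    """Report whether torch is importable and, if so, its version details."""
    if importlib.util.find_spec("torch") is None:
        # torch is not installed in this environment
        return {"installed": False}

    import torch

    return {
        "installed": True,
        "version": torch.__version__,
        # None when the installed PyTorch build has no CUDA support
        "cuda_build": torch.version.cuda,
    }


info = torch_build_info()
print(info)
```

If “cuda_build” is reported as “None”, the installed PyTorch build cannot use a CUDA GPU regardless of the runtime type selected later.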

Step 3: Go to the Change Runtime Type Menu

Open the “Runtime” menu in the menu bar and click on the “Change runtime type” option as shown:

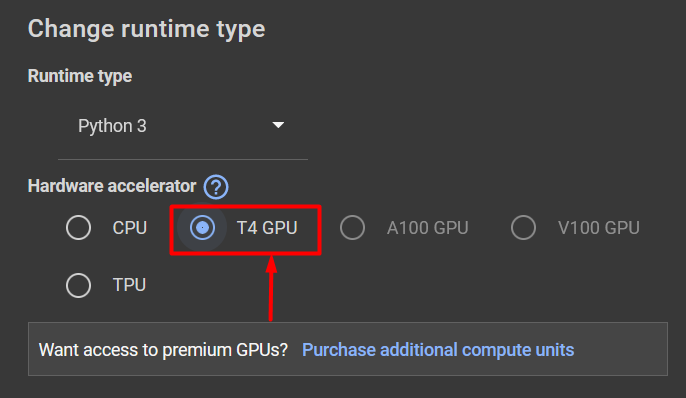

Step 4: Select the GPU Hardware Accelerator

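Select the available “T4 GPU” option from the list of Hardware Accelerators in the “Change runtime type” popup and then click “Save” to apply the changes. Changing the runtime type restarts the session, so re-run the import cell from Step 2 afterwards: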

Step 5: Check GPU Availability

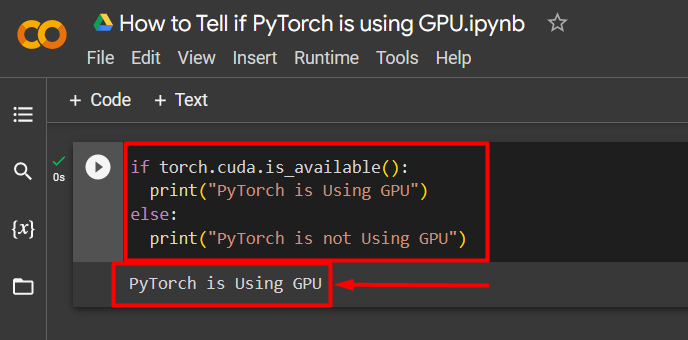

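The last step is to check whether the selected GPU is available to PyTorch. The “torch.cuda.is_available()” function returns “True” when PyTorch can reach a CUDA-capable GPU. Keep in mind that tensors and models stay on the CPU until they are explicitly moved to the GPU. Use the function in an “if/else” statement with the “print()” method to show the output as done below: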

if torch.cuda.is_available():
    print("PyTorch is Using GPU")
else:
    print("PyTorch is not Using GPU")

The output shows that PyTorch is currently using the GPU:

Note: You can access our Google Colab Notebook at this link.
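A quick way to confirm that work really lands on the GPU is to create a tensor on the selected device and inspect its “device” attribute. The sketch below is a minimal illustration and not part of the original notebook; the helper name “pick_device” is hypothetical, and it falls back to the CPU when no GPU is present.

```python
import torch


def pick_device() -> torch.device:
    """Prefer the CUDA GPU when PyTorch can reach one, else use the CPU."""
    return torch.device("cuda" if torch.cuda.is_available() else "cpu")


device = pick_device()

# Create the tensor directly on the chosen device
x = torch.ones(2, 3, device=device)

# The result of an operation stays on the same device as its inputs
y = (x * 2).sum()

print(f"Tensor lives on: {x.device}")
print(f"Result lives on: {y.device}, value: {y.item()}")
```

On a GPU runtime the printed device should start with “cuda”; on a CPU-only runtime it reads “cpu”. Existing tensors and models can be moved in the same spirit with “.to(device)”.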

Pro-Tip

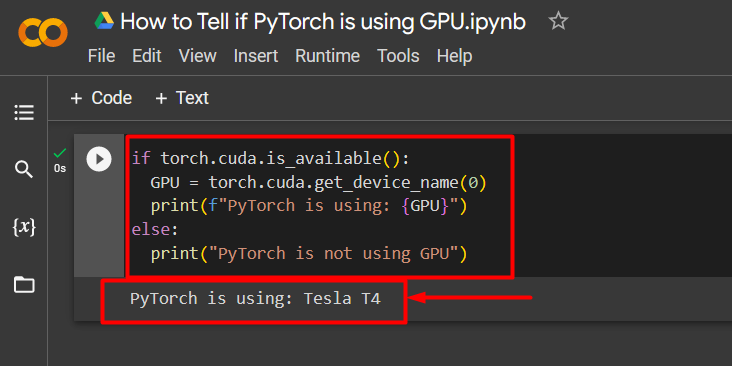

As an additional step, check the name of the GPU to know exactly which hardware, and therefore how much processing capability, is available. Inside an “if/else” statement that tests “torch.cuda.is_available()”, call the “torch.cuda.get_device_name()” method to get the name of the GPU and then use the “print()” method to show the output:

if torch.cuda.is_available():
    # Index 0 is the first GPU visible to PyTorch
    GPU = torch.cuda.get_device_name(0)
    print(f"PyTorch is using: {GPU}")
else:
    print("PyTorch is not using GPU")

The output shows that the name of the GPU is “Tesla T4”:

Success! We have just demonstrated how to tell if PyTorch is using a GPU or not.
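If the runtime exposes more than one GPU, or you also want to know how much memory each one has, something like the following sketch may help. It assumes only the standard “torch.cuda.device_count()” and “torch.cuda.get_device_properties()” calls; the function name “describe_gpus” is made up for illustration.

```python
import torch


def describe_gpus() -> list[str]:
    """Return one summary line per CUDA device visible to PyTorch."""
    lines = []
    for index in range(torch.cuda.device_count()):
        props = torch.cuda.get_device_properties(index)
        total_gib = props.total_memory / 1024**3  # bytes to GiB
        lines.append(f"cuda:{index} {props.name} ({total_gib:.1f} GiB)")
    return lines


summary = describe_gpus()
print("\n".join(summary) if summary else "No CUDA GPU visible to PyTorch")
```

The list is empty when no GPU is visible, so the same cell runs safely on a CPU-only runtime.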

Conclusion

To check whether PyTorch can use the GPU, call the “torch.cuda.is_available()” method inside an “if/else” statement and show the output using the “print()” method. To find out which GPU it is, call “torch.cuda.get_device_name()”. GPUs speed up the training and inference of complex machine learning models and so reduce runtime. This article has demonstrated how to tell if PyTorch is using a GPU and which GPU is being used.