In this blog post, we will focus on ways to increase GPU utilization in PyTorch.

How to Increase GPU Utilization in PyTorch?

Several techniques can increase GPU utilization so that the available hardware is fully used when training and running complex machine learning models. They involve changes to the code, the use of PyTorch features, and the runtime environment. The main tips are listed below:

- Loading Data and Batch Sizes

- Less Memory-Dependent Models

- PyTorch Lightning

- Adjust Runtime Settings in Google Colab

- Clear Cache for Optimization

Loading Data and Batch Sizes

The “DataLoader” in PyTorch defines how data is loaded and grouped into batches that are fed to the deep learning model on each forward pass. A larger “batch size” gives the GPU more work per step, which increases its utilization, as long as each batch still fits in GPU memory.

A DataLoader with a specific batch size is created by passing the “batch_size” argument to “torch.utils.data.DataLoader” and assigning the result to a custom variable.
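A minimal sketch of creating a DataLoader with a fixed batch size, assuming a hypothetical in-memory dataset of random features built with “TensorDataset”. The batch size of 128 is an arbitrary example value, not a recommendation; the right value depends on the model and the GPU memory available.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# Hypothetical toy dataset: 1,000 samples with 20 features and a binary label.
features = torch.randn(1000, 20)
labels = torch.randint(0, 2, (1000,))
dataset = TensorDataset(features, labels)

# batch_size controls how many samples the model processes per step.
# Larger values give the GPU more work per forward pass, up to the
# limit of available GPU memory.
train_loader = DataLoader(dataset, batch_size=128, shuffle=True)

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

for batch_features, batch_labels in train_loader:
    # Move each batch to the GPU (if one is available) before the forward pass.
    batch_features = batch_features.to(device)
    batch_labels = batch_labels.to(device)
    print(batch_features.shape)  # torch.Size([128, 20]) for the first batch
    break
```

With 1,000 samples and a batch size of 128, the loader yields 8 batches per epoch, the last one holding the remaining 104 samples.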

Less Memory-Dependent Models

Each model architecture needs a different amount of “memory” for its parameters, activations, and gradients. Models that use less memory per sample can fit much larger batch sizes on the same GPU, which keeps the GPU busier.
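One rough way to compare architectures is to measure how much memory their parameters take. The sketch below uses two hypothetical fully connected models for the same task; it counts parameter storage only, so it is a lower bound. Activations and gradients, which grow with the batch size, also consume GPU memory.

```python
import torch.nn as nn


def parameter_memory_mb(model: nn.Module) -> float:
    """Return the memory taken by a model's parameters, in megabytes."""
    total_bytes = sum(p.numel() * p.element_size() for p in model.parameters())
    return total_bytes / (1024 ** 2)


# Two hypothetical architectures for the same 784-input, 10-class task.
wide_model = nn.Sequential(nn.Linear(784, 4096), nn.ReLU(), nn.Linear(4096, 10))
slim_model = nn.Sequential(nn.Linear(784, 256), nn.ReLU(), nn.Linear(256, 10))

print(f"wide: {parameter_memory_mb(wide_model):.2f} MB")
print(f"slim: {parameter_memory_mb(slim_model):.2f} MB")
```

The slimmer model leaves more of the GPU free for data, so it can usually run with a larger batch size.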

PyTorch Lightning

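“PyTorch Lightning” is a lightweight, high-level framework built on top of PyTorch. It organizes model code and takes care of engineering boilerplate such as the training loop, the backward pass, and moving data to the right device. In recent versions, its Trainer automatically selects a GPU when one is available, so models run on the GPU without manual device handling. Removing this boilerplate also reduces the chance of mistakes, such as accidentally leaving data on the CPU, that leave the GPU underused.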

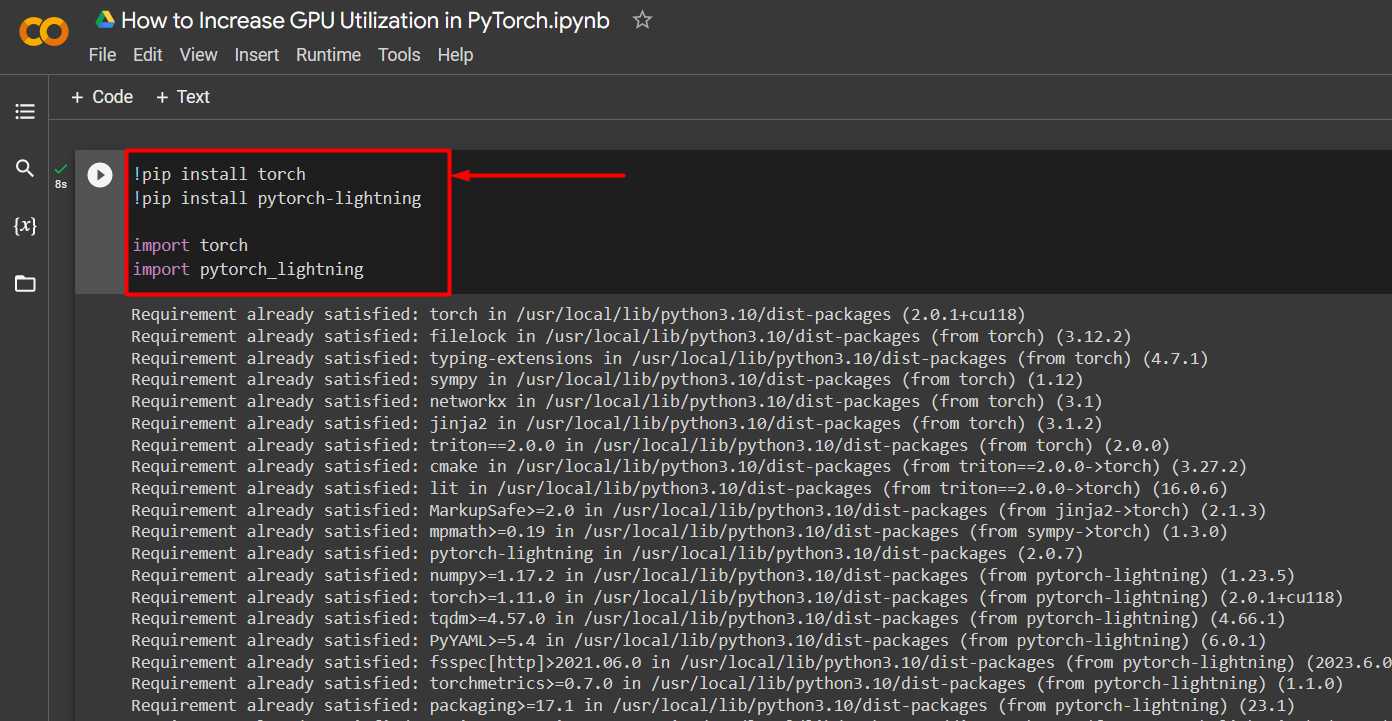

Install the package and import the libraries in a notebook cell (the leading “!” runs a shell command in Colab or Jupyter):

```
!pip install pytorch-lightning

import torch
import pytorch_lightning
```

Adjust Runtime Settings in Google Colab

Google Colaboratory (Colab) is a cloud-hosted notebook environment that offers free, usage-limited GPU access for developing PyTorch models. By default, a new Colab notebook runs on a CPU runtime, but this setting can be changed.

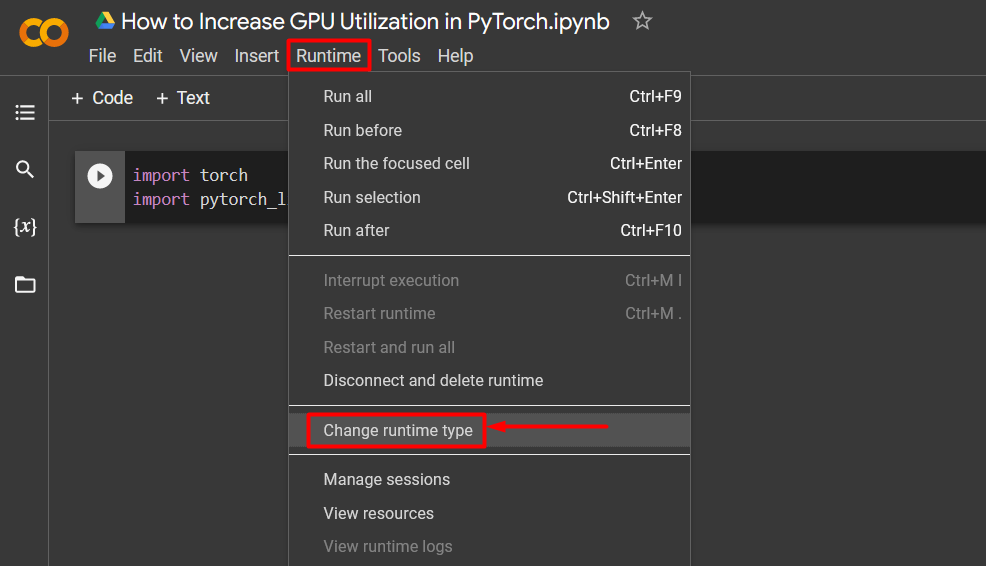

Open the Colab notebook, go to the “Runtime” menu in the menu bar, and select “Change runtime type”:

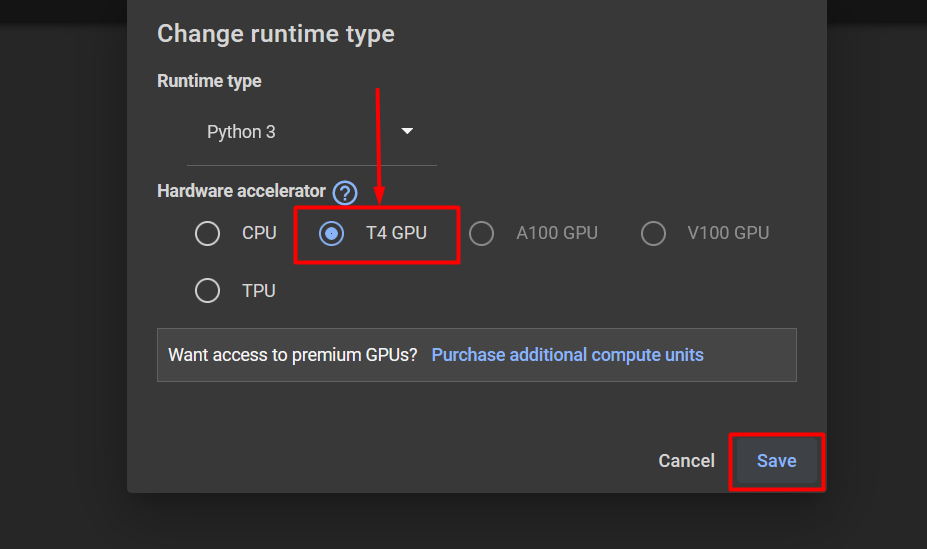

Then, select the “T4 GPU” hardware accelerator and click “Save” to switch the notebook to a GPU runtime.
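Once the runtime has been switched, a quick check in a code cell confirms that PyTorch can see the GPU. This is a minimal sketch; the helper name “describe_device” is hypothetical. In plain PyTorch, models and tensors still have to be placed on the GPU explicitly.

```python
import torch


def describe_device() -> torch.device:
    """Report whether a CUDA GPU is visible and return the device to use."""
    if torch.cuda.is_available():
        print("GPU detected:", torch.cuda.get_device_name(0))
        return torch.device("cuda")
    print("No GPU detected; running on CPU. Check the runtime type.")
    return torch.device("cpu")


device = describe_device()

# Move the model and create the input directly on the selected device.
model = torch.nn.Linear(10, 1).to(device)
x = torch.randn(4, 10, device=device)
print(model(x).device)
```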

Clear Cache for Optimization

PyTorch manages GPU memory with a caching allocator: when a tensor is freed, its memory stays “reserved” in a cache so that later allocations can reuse it quickly instead of requesting memory from the GPU driver again. Clearing this cache returns the unused reserved memory to the GPU, where other processes and models can use it. It does not free memory that is still held by live tensors, so tensors that are no longer needed should be deleted first.

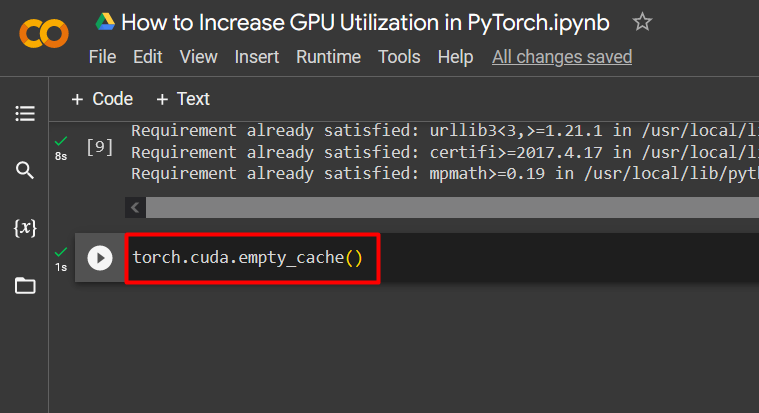

The GPU cache is cleared with the “torch.cuda.empty_cache()” command.
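A minimal sketch of releasing cached GPU memory, assuming a CUDA device may or may not be present (the helper returns “None” on a CPU-only runtime). The helper name and the 256 MiB test tensor are hypothetical. “torch.cuda.memory_allocated()” reports memory used by live tensors, while “torch.cuda.memory_reserved()” also includes the cache.

```python
import gc

import torch


def release_cached_gpu_memory():
    """Free unused blocks held by PyTorch's CUDA caching allocator.

    Returns (allocated_mb, reserved_mb) after clearing, or None without a GPU.
    """
    if not torch.cuda.is_available():
        return None
    gc.collect()              # drop unreachable Python objects that still hold tensors
    torch.cuda.empty_cache()  # return cached, unoccupied blocks to the driver
    allocated = torch.cuda.memory_allocated() / 1024 ** 2
    reserved = torch.cuda.memory_reserved() / 1024 ** 2
    return allocated, reserved


if torch.cuda.is_available():
    big = torch.empty(64, 1024, 1024, device="cuda")  # about 256 MiB of float32
    del big  # the tensor is gone, but its memory stays reserved in the cache

print(release_cached_gpu_memory())
```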

Together, these tips help machine learning models make better use of the GPU in PyTorch.

Pro-Tip

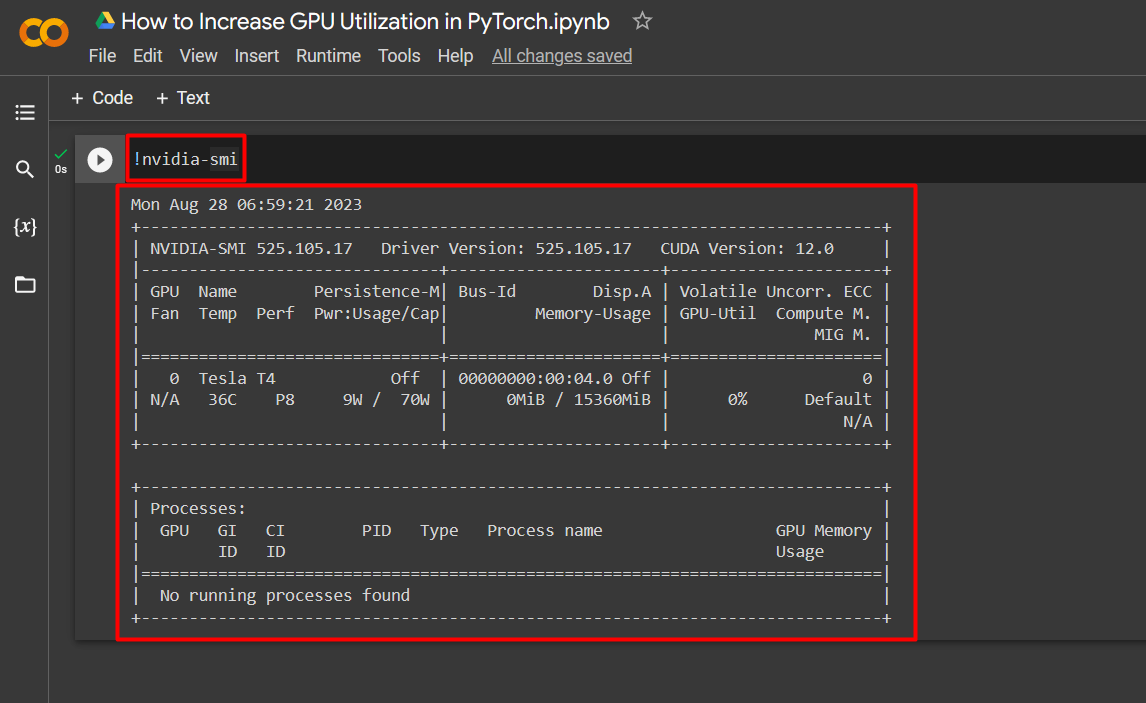

Google Colab lets users check GPU utilization with NVIDIA’s “nvidia-smi” tool, which shows how busy each GPU is, how much memory is in use, and which processes are using it. Run “!nvidia-smi” in a notebook cell to display these details.

Success! We have just demonstrated a few ways to increase the GPU utilization in PyTorch.

Conclusion

Increase GPU utilization in PyTorch by choosing suitable batch sizes, using memory-efficient models, using PyTorch Lightning, adjusting the runtime settings, and clearing the cache. These techniques help deep learning models make full use of the available hardware so that they train and run faster.