A Large Language Model (LLM) used in isolation works well for simple applications, but more complex applications require chaining. Chaining is the process of connecting an LLM with other components, such as prompt templates, tools, or other chains. LangChain offers the Chain interface for such applications, which executes a sequence of calls to these components.

This guide will explain the process of implementing a custom chain in LangChain.

How to Implement a Custom Chain in LangChain?

To implement custom chains in the LangChain framework, simply follow this thorough guide:

Install Prerequisites

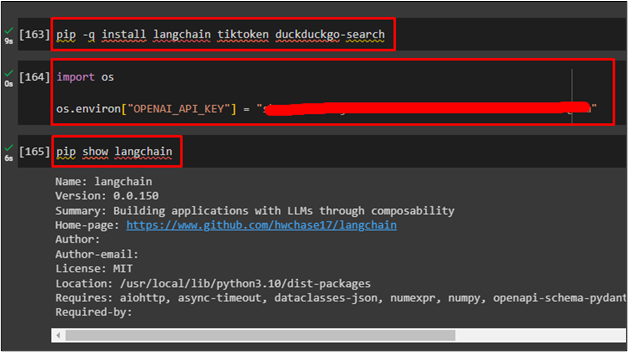

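Start the process of implementing custom chains in LangChain by installing the LangChain, tiktoken, and duckduckgo-search packages, along with the openai package that the OpenAI LLM wrapper requires, using the following command:

pip install langchain openai tiktoken duckduckgo-search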

Import the os library and set the OpenAI API key as an environment variable using the following code:

import os
os.environ["OPENAI_API_KEY"] = "******************************"
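Hard-coding the key in a notebook cell makes it easy to leak when the notebook is shared. As an alternative, here is a minimal sketch that assumes the key was exported as OPENAI_API_KEY before Python started. get_openai_key is a hypothetical helper and is not part of LangChain or the OpenAI client. It reads the variable and fails early with a clear message if the key is missing:

```python
import os
from typing import Mapping


def get_openai_key(env: Mapping[str, str] = os.environ) -> str:
    """Hypothetical helper: return OPENAI_API_KEY from the environment or fail early."""
    key = env.get("OPENAI_API_KEY", "").strip()
    if not key:
        # Failing here is clearer than an authentication error deep inside the agent run
        raise RuntimeError("OPENAI_API_KEY is not set; export it before running the agent")
    return key


# Usage with an explicit mapping, so the example does not depend on your real environment
print(get_openai_key({"OPENAI_API_KEY": "sk-example"}))
```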

Display the details of the installed LangChain package using the following command:

pip show langchain

Import Custom Agent

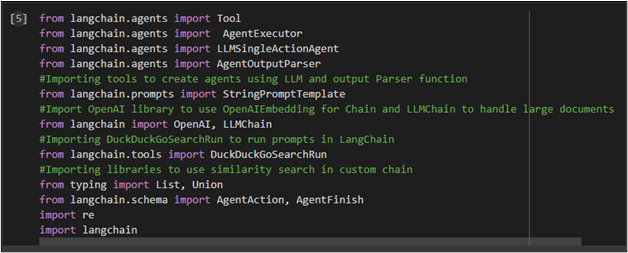

Import the classes needed to build a custom agent, the “DuckDuckGoSearchRun” search tool, and other supporting libraries using the following code:

from langchain.agents import Tool
from langchain.agents import AgentExecutor
from langchain.agents import LLMSingleActionAgent
from langchain.agents import AgentOutputParser
from langchain.prompts import StringPromptTemplate
# Import the OpenAI LLM wrapper and LLMChain, which combines a prompt with an LLM
from langchain import OpenAI, LLMChain
# Import DuckDuckGoSearchRun to run web searches from the agent
from langchain.tools import DuckDuckGoSearchRun
# Import type hints, the agent action/finish schema, and regular expressions for parsing the LLM output
from typing import List, Union
from langchain.schema import AgentAction, AgentFinish
import re
import langchain

Set Up Tools

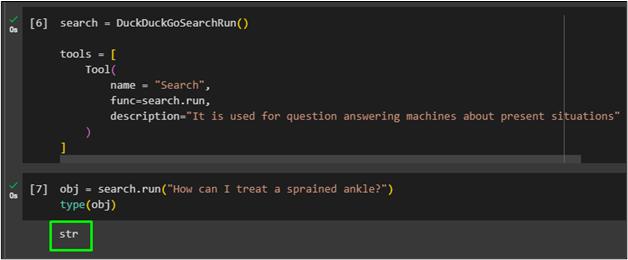

Use the following code to create a search tool with the DuckDuckGoSearchRun() class imported in the previous step and wrap it in a Tool that the custom agent can call:

search = DuckDuckGoSearchRun()
tools = [
    Tool(
        name="Search",
        func=search.run,
        description="useful for answering questions about current events"
    )
]
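LangChain's Tool bundles three things the agent relies on. The first is a name that the LLM writes after “Action:”. The second is the function that runs. The third is a description that tells the LLM when to use the tool. The sketch below is a hypothetical, dependency-free stand-in: SimpleTool and build_registry are illustrative names, and fake_search replaces search.run so nothing hits the network. It shows why tool names should be unique, since the agent finds the tool to call by its name:

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class SimpleTool:
    """Hypothetical stand-in for LangChain's Tool: a name, a callable, and a description."""
    name: str
    func: Callable[[str], str]
    description: str


def build_registry(tools: List[SimpleTool]) -> Dict[str, SimpleTool]:
    """Index tools by name, rejecting duplicates because the agent selects tools by name."""
    registry: Dict[str, SimpleTool] = {}
    for tool in tools:
        if tool.name in registry:
            raise ValueError(f"Duplicate tool name: {tool.name}")
        registry[tool.name] = tool
    return registry


def fake_search(query: str) -> str:
    """Offline placeholder for search.run(); returns a canned string."""
    return f"results for: {query}"


registry = build_registry([
    SimpleTool("Search", fake_search, "useful for answering questions about current events"),
])
print(registry["Search"].func("weather today"))  # results for: weather today
```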

The following code passes an input query to the “search.run()” function, stores the result in the “obj” variable, and checks its type:

type(obj)

The following screenshot shows that the result returned by the search is a string:

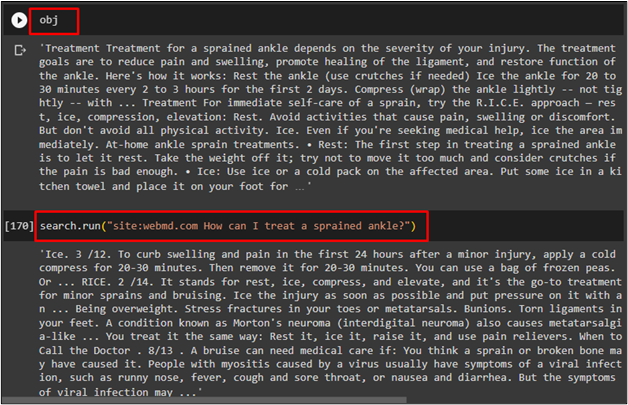

Use the following code to display the search result stored in the “obj” variable. This search is not restricted to any particular website:

The following code restricts the search to the “WebMD” website, so the question is answered using results from that site only:

The following screenshot displays the output of each of the snippets above:

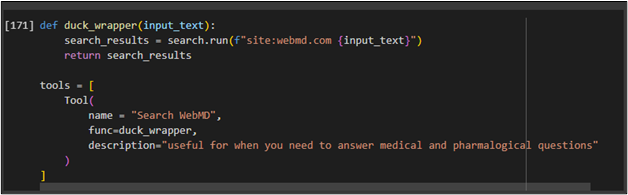

The following code defines a “duck_wrapper()” function that prefixes every query with “site:webmd.com”. As a result, all searches made through the new “Search WebMD” tool return results only from the WebMD website:

def duck_wrapper(input_text):
    # Restrict every query to the WebMD website
    search_results = search.run(f"site:webmd.com {input_text}")
    return search_results

tools = [
    Tool(
        name="Search WebMD",
        func=duck_wrapper,
        description="useful for when you need to answer medical and pharmacological questions"
    )
]
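The duck_wrapper() idea generalizes to any site. The following sketch defines a hypothetical helper, make_site_search, that wraps an arbitrary search callable and prefixes each query with “site:<domain>”. An echo function stands in for search.run so the rewritten query is visible without a network call. Whether a particular search backend honors the “site:” operator is an assumption you should verify against its actual results:

```python
from typing import Callable


def make_site_search(search: Callable[[str], str], site: str = "webmd.com") -> Callable[[str], str]:
    """Wrap a search callable so every query is restricted to one site, like duck_wrapper()."""
    def wrapped(input_text: str) -> str:
        return search(f"site:{site} {input_text}")
    return wrapped


def echo(query: str) -> str:
    """Offline stand-in for search.run() that returns the query it receives."""
    return query


webmd_search = make_site_search(echo)
print(webmd_search("ibuprofen side effects"))  # site:webmd.com ibuprofen side effects
```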

Prompt Template

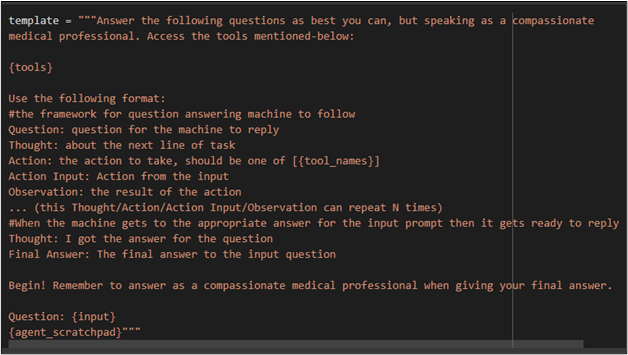

To build the custom chain, configure a base template that structures both the prompts and the replies coming from the search. The following template defines how the question-answering bot should reason and respond using the tools defined previously:

# The template lists the available tools, the Question/Thought/Action/Observation
# format the LLM must follow, and how it should signal the final answer
template = """Answer the following questions as best you can, speaking as a compassionate medical professional. You have access to the following tools:

{tools}

Use the following format:

Question: the input question you must answer
Thought: you should always think about what to do next
Action: the action to take, should be one of [{tool_names}]
Action Input: the input to the action
Observation: the result of the action
... (this Thought/Action/Action Input/Observation can repeat N times)
Thought: I now know the final answer
Final Answer: the final answer to the original input question

Begin! Remember to answer as a compassionate medical professional when giving your final answer.

Question: {input}
{agent_scratchpad}"""
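The custom prompt class fills this template with Python's str.format(). For that reason, every {name} placeholder must receive a value, and any literal brace in the template text has to be doubled ({{ or }}). The following shortened, illustrative version of the template shows how the placeholders are filled:

```python
SHORT_TEMPLATE = """Answer as a compassionate medical professional. You have access to the following tools:

{tools}

Action: the action to take, should be one of [{tool_names}]

Question: {input}
{agent_scratchpad}"""

filled = SHORT_TEMPLATE.format(
    tools="Search WebMD: useful for when you need to answer medical and pharmacological questions",
    tool_names="Search WebMD",
    input="What helps with a sore throat?",
    agent_scratchpad="",  # empty on the first step of a new query
)
print(filled)
```

If any placeholder is left out of the format() call, Python raises a KeyError. That is a quick way to catch a mismatch between the template and the variables the prompt class provides.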

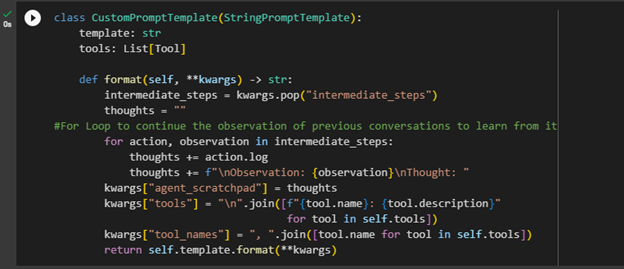

The following code defines a custom prompt template class for the agent. On every call, its format() method rebuilds the “agent_scratchpad” from the intermediate steps (each action and its observation). As a result, the scratchpad grows as the agent works through a query and starts empty for a new query:

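class CustomPromptTemplate(StringPromptTemplate):
    # The template to use
    template: str
    # The list of tools available to the agent
    tools: List[Tool]

    def format(self, **kwargs) -> str:
        # Get the intermediate steps as (AgentAction, observation) tuples
        intermediate_steps = kwargs.pop("intermediate_steps")
        thoughts = ""
        # Replay each action and its observation so the LLM sees what it has already done for this query
        for action, observation in intermediate_steps:
            thoughts += action.log
            thoughts += f"\nObservation: {observation}\nThought: "
        # Set the agent_scratchpad variable to the replayed steps
        kwargs["agent_scratchpad"] = thoughts
        # Describe each tool as "name: description"
        kwargs["tools"] = "\n".join([f"{tool.name}: {tool.description}" for tool in self.tools])
        # List the tool names the LLM may choose from
        kwargs["tool_names"] = ", ".join([tool.name for tool in self.tools])
        return self.template.format(**kwargs)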
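The scratchpad logic in format() can be checked in isolation. The following sketch copies the loop into a plain function. Action is a hypothetical NamedTuple that stands in for LangChain's AgentAction, with the same three fields (tool, tool_input, log). The sample step is invented for illustration:

```python
from typing import List, NamedTuple, Tuple


class Action(NamedTuple):
    """Hypothetical stand-in for AgentAction (same three fields)."""
    tool: str
    tool_input: str
    log: str


def build_scratchpad(intermediate_steps: List[Tuple[Action, str]]) -> str:
    """Replay each (action, observation) pair in the Thought/Action/Observation format."""
    thoughts = ""
    for action, observation in intermediate_steps:
        thoughts += action.log
        thoughts += f"\nObservation: {observation}\nThought: "
    return thoughts


step = Action(
    tool="Search WebMD",
    tool_input="sore throat remedies",
    log="I should search WebMD.\nAction: Search WebMD\nAction Input: sore throat remedies",
)
print(build_scratchpad([(step, "Gargle with warm salt water.")]))
```

The result ends with "Thought: ", so the LLM's next generation continues the reasoning from exactly that point.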

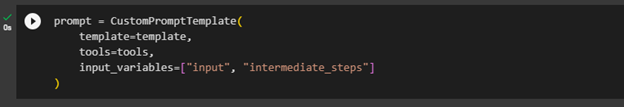

The following code creates the prompt from the template and the tools. The tool descriptions, the tool names, and the scratchpad are generated inside format(), so only “input” and “intermediate_steps” are listed as input variables:

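prompt = CustomPromptTemplate(
    template=template,
    tools=tools,
    # "intermediate_steps" is required because format() reads it to build the scratchpad
    input_variables=["input", "intermediate_steps"]
)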
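The input_variables list covers only what the caller supplies. {input} comes from the query, and intermediate_steps is consumed by format() even though it is not a placeholder. By contrast, {tools}, {tool_names}, and {agent_scratchpad} are generated inside format(). The following sketch uses the standard library's string.Formatter to list a template's placeholders and then subtracts the generated ones. template_fields and demo_template are illustrative names:

```python
from string import Formatter
from typing import Set


def template_fields(template: str) -> Set[str]:
    """Return the names of all {placeholders} in a str.format-style template."""
    return {name for _, name, _, _ in Formatter().parse(template) if name}


demo_template = "Tools:\n{tools}\nPick one of [{tool_names}]\nQuestion: {input}\n{agent_scratchpad}"
generated_in_format = {"tools", "tool_names", "agent_scratchpad"}
supplied_by_caller = template_fields(demo_template) - generated_in_format
print(sorted(supplied_by_caller))  # ['input']
```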

Custom Output Parsing

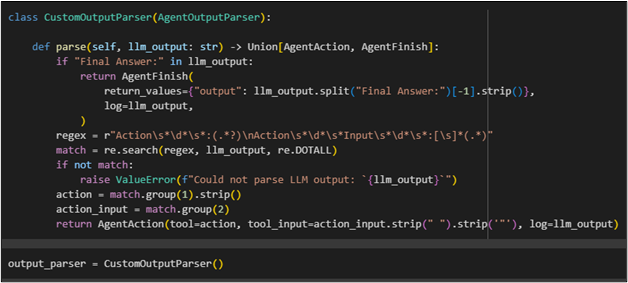

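The following code defines a custom output parser that converts the raw LLM output into one of two results. When the text contains a final answer, the parser returns an AgentFinish. Otherwise, it returns an AgentAction that holds the tool to call and its input: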

class CustomOutputParser(AgentOutputParser):

    def parse(self, llm_output: str) -> Union[AgentAction, AgentFinish]:
        # If the agent reached a final answer, return it and stop
        if "Final Answer:" in llm_output:
            return AgentFinish(
                return_values={"output": llm_output.split("Final Answer:")[-1].strip()},
                log=llm_output,
            )
        # Otherwise, extract the tool name and the tool input
        regex = r"Action\s*\d*\s*:(.*?)\nAction\s*\d*\s*Input\s*\d*\s*:[\s]*(.*)"
        match = re.search(regex, llm_output, re.DOTALL)
        # Raise an error if the output contains neither a final answer nor an action
        if not match:
            raise ValueError(f"Could not parse LLM output: `{llm_output}`")
        action = match.group(1).strip()
        action_input = match.group(2)
        # Return the action and its input, stripped of surrounding spaces and quotes
        return AgentAction(tool=action, tool_input=action_input.strip(" ").strip('"'), log=llm_output)
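To test the parsing rules without calling an LLM, the same logic can be mirrored in plain Python. In the following sketch, Finish and Act are hypothetical dataclasses that stand in for AgentFinish and AgentAction. The regular expression is the same one used above:

```python
import re
from dataclasses import dataclass
from typing import Union

ACTION_RE = re.compile(r"Action\s*\d*\s*:(.*?)\nAction\s*\d*\s*Input\s*\d*\s*:[\s]*(.*)", re.DOTALL)


@dataclass
class Finish:
    """Hypothetical stand-in for AgentFinish."""
    output: str


@dataclass
class Act:
    """Hypothetical stand-in for AgentAction."""
    tool: str
    tool_input: str


def parse(llm_output: str) -> Union[Finish, Act]:
    # A final answer takes priority over any action text
    if "Final Answer:" in llm_output:
        return Finish(llm_output.split("Final Answer:")[-1].strip())
    match = ACTION_RE.search(llm_output)
    if not match:
        raise ValueError(f"Could not parse LLM output: `{llm_output}`")
    return Act(match.group(1).strip(), match.group(2).strip(" ").strip('"'))


print(parse('Thought: I should search\nAction: Search WebMD\nAction Input: "sore throat"'))
print(parse("Thought: I now know the final answer\nFinal Answer: Rest and fluids."))
```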

Use the following code to store an instance of the parser class above in the “output_parser” variable:

Set Up LLM

The following code is used to set up the OpenAI LLM for this example:

Implement a Custom Chain

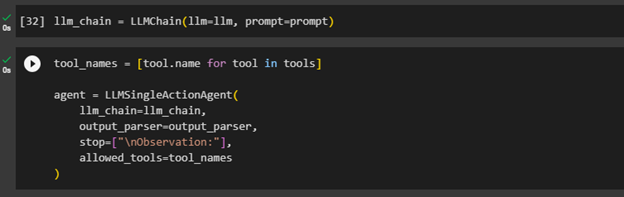

After choosing the LLM for the custom chain, use the LLMChain class to combine the LLM with the prompt:

llm_chain = LLMChain(llm=llm, prompt=prompt)
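Conceptually, an LLM chain fills its prompt template with the given inputs and sends the resulting text to the LLM. The following is a deliberately simplified, hypothetical illustration of that idea. It is not LangChain's implementation, which does considerably more. fake_llm is a stand-in that upper-cases the prompt so the flow can be checked offline:

```python
from typing import Callable


class MiniLLMChain:
    """Hypothetical, simplified illustration of an LLM chain: prompt template + LLM call."""

    def __init__(self, llm: Callable[[str], str], template: str):
        self.llm = llm
        self.template = template

    def run(self, **inputs: str) -> str:
        # Step 1: fill the template with the caller's inputs
        prompt = self.template.format(**inputs)
        # Step 2: send the finished prompt to the model and return its text
        return self.llm(prompt)


def fake_llm(prompt: str) -> str:
    """Offline stand-in for an LLM: it just upper-cases the prompt."""
    return prompt.upper()


chain = MiniLLMChain(fake_llm, "Question: {input}")
print(chain.run(input="Is aspirin safe?"))  # QUESTION: IS ASPIRIN SAFE?
```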

The following code combines everything into an “LLMSingleActionAgent”. The stop sequence "\nObservation:" makes the LLM stop generating as soon as it starts writing an observation, which prevents the model from inventing one. The executor then runs the chosen tool and appends the tool's real output as the observation before the next step:

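# Names of the tools the agent is allowed to call
tool_names = [tool.name for tool in tools]
agent = LLMSingleActionAgent(
    llm_chain=llm_chain,
    output_parser=output_parser,
    # Stop generating as soon as the LLM starts writing an observation
    stop=["\nObservation:"],
    allowed_tools=tool_names
)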
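A small function can illustrate the effect of the stop sequence by cutting generated text at the earliest stop string, roughly as the model API does when a stop list is passed. apply_stop and the sample text are hypothetical. The key point is that anything the model would have written after “Observation:” is discarded, so the real tool output can be inserted in its place:

```python
from typing import List


def apply_stop(text: str, stop: List[str]) -> str:
    """Cut text at the earliest occurrence of any stop sequence (hypothetical helper)."""
    cut = len(text)
    for sequence in stop:
        index = text.find(sequence)
        if index != -1:
            cut = min(cut, index)
    return text[:cut]


raw = ("Thought: I should search WebMD\nAction: Search WebMD\nAction Input: flu symptoms"
       "\nObservation: an observation the model made up")
print(apply_stop(raw, ["\nObservation:"]))
```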

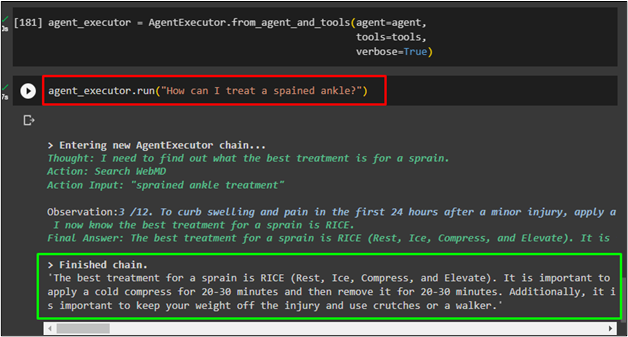
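Behind the scenes, the executor repeats a loop until a final answer appears:

1. Format the prompt with the question and the scratchpad.
2. Call the LLM.
3. Parse the reply.
4. Run the chosen tool.
5. Append the action and its observation to the scratchpad.

The following sketch is a simplified, hypothetical version of that loop. run_agent and scripted_llm are illustrative names, and the scripted LLM replays canned replies instead of calling OpenAI. LangChain's actual AgentExecutor handles more cases than this sketch:

```python
import re
from typing import Callable, Dict, Iterable

ACTION_RE = re.compile(r"Action\s*\d*\s*:(.*?)\nAction\s*\d*\s*Input\s*\d*\s*:[\s]*(.*)", re.DOTALL)


def run_agent(llm: Callable[[str], str], tools: Dict[str, Callable[[str], str]],
              question: str, max_iterations: int = 5) -> str:
    """Hypothetical, simplified agent loop: think, act, observe, repeat."""
    scratchpad = ""
    for _ in range(max_iterations):
        output = llm(f"Question: {question}\n{scratchpad}")
        # A final answer ends the loop, mirroring the custom output parser
        if "Final Answer:" in output:
            return output.split("Final Answer:")[-1].strip()
        match = ACTION_RE.search(output)
        if not match:
            raise ValueError(f"Could not parse LLM output: `{output}`")
        tool_name = match.group(1).strip()
        tool_input = match.group(2).strip(" ").strip('"')
        tool = tools.get(tool_name)
        # Report an unknown tool back to the LLM as an observation instead of crashing
        observation = tool(tool_input) if tool else f"{tool_name} is not a valid tool, try one of {list(tools)}."
        # Same scratchpad format as the format() method of the custom prompt template
        scratchpad += output + f"\nObservation: {observation}\nThought: "
    raise RuntimeError("Agent stopped after max_iterations without a final answer")


def scripted_llm(replies: Iterable[str]) -> Callable[[str], str]:
    """Fake LLM that ignores the prompt and returns canned replies in order."""
    it = iter(replies)
    return lambda prompt: next(it)


llm = scripted_llm([
    "Thought: I should search WebMD\nAction: Search WebMD\nAction Input: sore throat",
    "Thought: I now know the final answer\nFinal Answer: Rest, drink fluids, and gargle with warm salt water.",
])
tools = {"Search WebMD": lambda q: f"WebMD results for {q}"}
print(run_agent(llm, tools, "How do I soothe a sore throat?"))
```

The iteration limit matters in practice: without it, an LLM that never writes “Final Answer:” would keep calling tools indefinitely.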

Agent Executor

After setting up the agent, pass it to the “AgentExecutor” class imported earlier, together with the tools. Set verbose to True to print the agent's intermediate reasoning:

Now run a query through the executor using the following code:

The following screenshot displays the output provided by the chain from the WebMD website:

That is all about implementing a custom chain in LangChain.

Conclusion

To implement a custom chain in LangChain, first install LangChain and the other required modules. After that, import the libraries needed for the custom agent and the search tool, and set up the tools. Then, define a prompt template that structures the agent's reasoning and a custom output parser that interprets the LLM's replies. Finally, combine these components into an agent using LLMChain and LLMSingleActionAgent, and run a query through an AgentExecutor to check its output.