This guide explains how to create and work with human input LLMs using LangChain.

How to Create and Work With Human Input LLMs Using LangChain?

To create and work with human input LLMs using LangChain, simply follow this step-by-step guide:

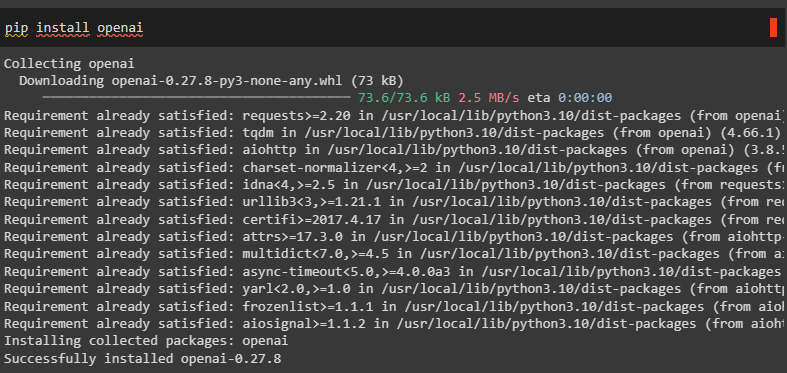

Step 1: Install Modules

Start by installing LangChain, which provides the classes used throughout this guide (for example, with pip install langchain).

Install the OpenAI module, which supplies the functions needed to build an LLM in LangChain (pip install openai).

This example looks up answers on Wikipedia, so its Python module must be installed as well (pip install wikipedia).

Enter the OpenAI API key when prompted; the code below stores it in the OPENAI_API_KEY environment variable so the session can connect to OpenAI:

import getpass
import os

os.environ['OPENAI_API_KEY'] = getpass.getpass('OpenAI API Key:')  # prompt without echoing the key
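Typing the key on every run can become tedious. The following is a minimal sketch, assuming a hypothetical helper named ensure_api_key (it is not part of LangChain or OpenAI), that reuses a key already present in the environment and prompts only when none is set:

```python
import getpass
import os


def ensure_api_key(name="OPENAI_API_KEY", prompt_fn=getpass.getpass):
    """Return the key stored under `name`, prompting only when it is absent.

    `ensure_api_key` and `prompt_fn` are illustrative names, not a library API.
    """
    key = os.environ.get(name)
    if not key:
        # Nothing set yet: ask without echoing, then cache it for this process.
        key = prompt_fn(f"{name}: ")
        os.environ[name] = key
    return key
```

Calling ensure_api_key() before building the agent leaves an existing OPENAI_API_KEY value untouched.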

Step 2: Import Libraries

With the modules installed, import the required functions and classes from the LangChain framework:

from langchain.agents import load_tools
from langchain.agents import initialize_agent
from langchain.agents import AgentType
from langchain.llms.human import HumanInputLLM

Step 3: Build Human Input LLM

Next, load the tools and assign a HumanInputLLM() instance to the “llm” variable. Its prompt_func argument controls how each prompt is shown to the human; here it prints the prompt between START and END marker lines:

tools = load_tools(["wikipedia"])  # Wikipedia lookup tool; relies on the wikipedia module from Step 1
llm = HumanInputLLM(
    prompt_func=lambda prompt: print(
        f"\n===START====\n{prompt}\n=====END======"
    )
)
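To make the two halves of this step concrete, here is a plain-Python sketch that imitates the idea without LangChain: one function frames the prompt between the START and END markers, and another gathers a multi-line reply from the human. The names frame_prompt and collect_reply are hypothetical, and ending the reply on a blank line is an assumption made for illustration rather than a description of LangChain's internals.

```python
def frame_prompt(prompt):
    """Wrap the prompt in the same START/END banner that prompt_func prints."""
    return f"\n===START====\n{prompt}\n=====END======"


def collect_reply(read_line=input):
    """Gather lines from the human until an empty line, then join them."""
    lines = []
    while True:
        line = read_line()
        if not line:
            break
        lines.append(line)
    return "\n".join(lines)


# Scripted stand-in for keyboard input so the sketch runs unattended.
scripted = iter(["Action: Wikipedia", "Action Input: LangChain", ""])
reply = collect_reply(lambda: next(scripted))
banner = frame_prompt("What should the agent do next?")
```

Passing a custom read_line keeps the sketch testable; leaving it at the default reads from the keyboard instead.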

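After that, call the initialize_agent() method with the tools, the LLM, the agent type, and the verbose flag:

agent_chain = initialize_agent(  # agent_chain is a name chosen here for the returned agent
    tools, llm, agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION, verbose=True
)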

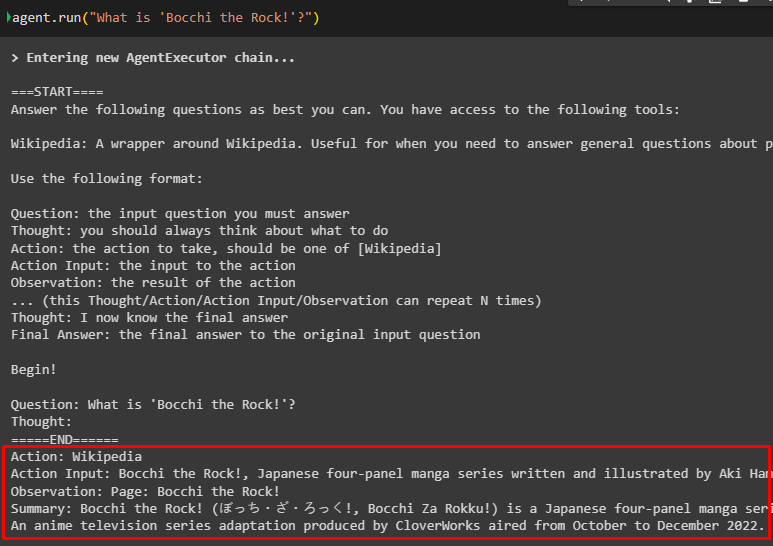

Step 4: Test the HumanInputLLM

Once the HumanInputLLM is created, run the agent by passing the prompt, in parentheses, to its run() method:

Human Input

After the above command runs, the prompt is displayed and the user is asked to supply the response to the query. The first item to provide is the source of the response:

Next, fill in the Action Input, stating the answer to the query as precisely as possible:

After the answer, provide the name of the page that contains this information so the agent can check the answer against the source page using the Wikipedia library:

Now explain the answer by summarizing the source page in a paragraph:

The user can spread the explanation over multiple lines; the extra detail helps the agent answer the query more completely:
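The replies typed in this walkthrough follow the ReAct convention used by the zero-shot agent: each reply contains either an Action and Action Input pair or a Final Answer. The sketch below is a simplified, hypothetical parser for such a reply. It is not LangChain's own output parser, and the function name parse_reply and the example strings are invented for illustration.

```python
import re


def parse_reply(text):
    """Classify a human reply as a final answer or a tool call.

    Returns ("final", answer) or ("action", tool, tool_input).
    Raises ValueError when neither pattern is present.
    """
    if "Final Answer:" in text:
        return ("final", text.split("Final Answer:", 1)[1].strip())
    match = re.search(
        r"Action\s*:\s*(.*?)\s*\n\s*Action Input\s*:\s*(.*)", text, re.DOTALL
    )
    if match is None:
        raise ValueError("Expected 'Action:' and 'Action Input:' or 'Final Answer:'")
    return ("action", match.group(1).strip(), match.group(2).strip())


# Example replies a human might type (made up for this sketch).
step = parse_reply("I should look this up.\nAction: Wikipedia\nAction Input: LangChain")
done = parse_reply("I now know the answer.\nFinal Answer: It is a framework.")
```

A reply that matches neither shape raises ValueError, which mirrors why the format has to be followed closely when typing responses by hand.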

Output

The text typed by the human is used as the answer to the query once the agent has checked it against the source, so the response should be reliable:

That is all about creating and working with human input LLMs using LangChain.

Conclusion

To create and work with human input LLMs using LangChain, install the necessary modules such as LangChain and Wikipedia. After that, import the libraries, build the human input LLM, and run the agent with a prompt to get the answer. The LLM asks the user for a response in the specified format and then uses it to produce the reply to the query. This blog illustrated the process of creating and working with human input LLMs in LangChain.