Transferring data is one of the most essential tasks on today’s internet. Although numerous tools are available for downloading files, Linux users have a capable command-line option: the wget utility, a simple yet powerful tool for downloading files directly from their URLs.

The wget command offers features such as resuming interrupted downloads, limiting download speed, downloading over encrypted connections (HTTPS), and fetching multiple files in a single run. It can also send requests to REST APIs. In this brief tutorial, we will cover the most common ways to use the wget command in Linux.

How To Use the wget Command in Linux

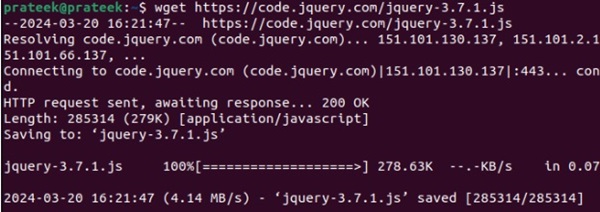

Whether you need a single file or an entire set of files, the wget utility can handle both, and it offers several options to adjust its behavior. In its simplest form, wget downloads the file at a given URL. For example, to download jquery-3.7.1.js from its official website, pass the file’s URL to wget:

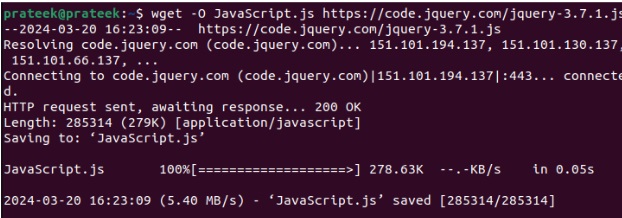

By default, wget saves downloaded files in the current directory under their original names. However, you can save a file to a specific location or under a different name with the ‘-O’ option. For instance, the following command saves the above file as JavaScript.js:

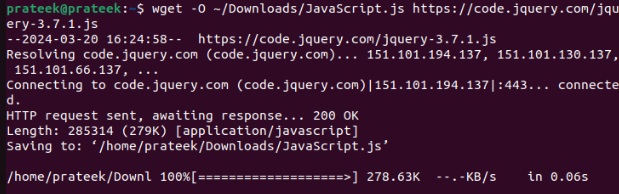

Similarly, to save the file in another directory without changing your current directory, pass the full path, including the desired filename, to ‘-O’:

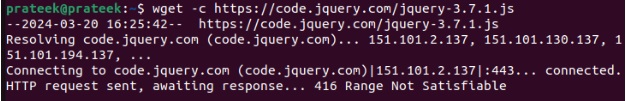

If a download is interrupted, you can resume it from where it left off with the ‘--continue’ (or ‘-c’) option:

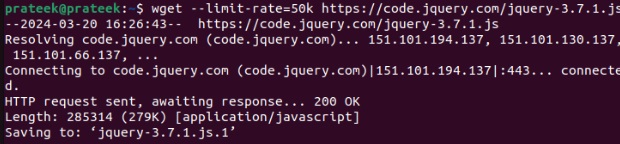

If you are performing other online tasks that need bandwidth while a download runs, use the ‘--limit-rate’ option to cap its speed, for example ‘--limit-rate=50k’.

Here, ‘50k’ limits the download speed to 50 KB/s; replace it with whatever limit suits you. This is useful when you do not want wget to consume all available bandwidth.

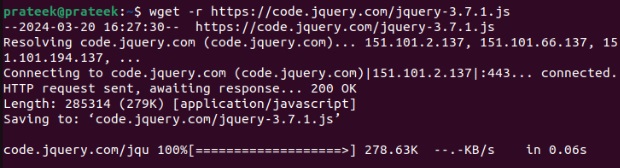

One of wget’s most powerful features is its ability to download websites recursively. With the ‘-r’ (or ‘--recursive’) option, wget follows the links on each page it retrieves and downloads the linked HTML pages, stylesheets, images, and other files, up to five levels deep by default.

Conclusion

The wget command is a powerful and versatile tool for downloading files from URLs. This brief tutorial explained how to use wget and covered its common applications. Its most prominent feature is recursive website download, but it also lets you rename downloaded files, save them to other directories, and resume interrupted downloads. Moreover, if your bandwidth is limited, use the ‘--limit-rate’ option to cap the download speed.