The above minimum heap describes a heap where the number of children per parent node can be more than two. A binary heap is one where the highest number of children per parent node is two. A Complete Binary Heap is one where each node has two children, with the exception that, at the lowest level, there may be only one leaf node without a sibling; with the rest of the lowest leaf nodes paired and beginning from the extreme left end of the last row, ending with this single leaf node, which must be a left node.

Heapifying means building (creating) a heap. Since a tree where each node can have any number of children can be turned into a complete binary tree, the true aim of this article is to produce the time complexity for heapifying a complete binary tree.

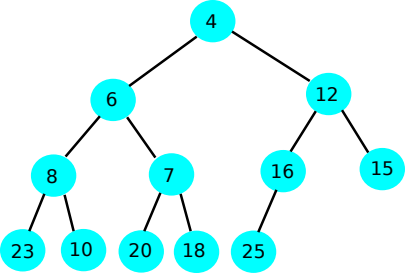

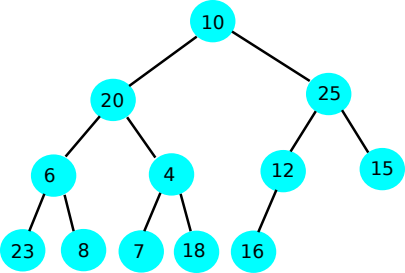

An example diagram of a complete binary tree is:

Any leaf node at the lowest level that does not have a sibling has to be a left node. All the nodes of the last row, including a possible single left node, are “flushed” to the left end of the last row.

By the definition of a heap, a left sibling can be smaller, greater, or equal to the right sibling. The ordering of both siblings is not specified.

Full Binary Tree

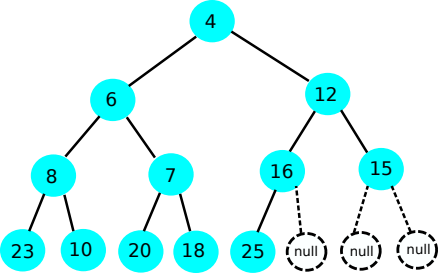

The above tree is a complete binary tree, but it is not a full binary tree. It is also a minimum heap. If it were a full binary tree, then all the nodes of the last-but-one level would have had two children each. The above tree is redrawn below as a full binary tree:

A tree can be represented by an array. The tree is read from top to bottom, left to right, row by row; then placed in the array. The following table shows the array of content and indexes for this tree:

| 4 | 6 | 12 | 8 | 7 | 16 | 15 | 23 | 10 | 20 | 18 | 25 | null | null | null |

| 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 |

The cell values are in the first table row. The zero-based indexes are in the second table row.

Relationship Between a Parent Index and its Children Indexes

In the tree or corresponding array, the root is at index 0 for zero-based indexing. Let i be the variable to hold each index. The children of the root are at indexes 1 and 2, which are 0 + 1 and 0 + 2. The children of node 1 are at indexes 3 and 4, which are 1 + 2 and 1 + 3. The children of node 2 are at index 5 and 6, which are 2 + 3 and 2 + 4. The children of node 3 are at index 7 and 8, which are 3 + 4 and 3 + 5. The children of node 4 are at index 9 and 10, which are 4 + 5 and 4 + 6; and so on.

Let i be the parent index for now. So the children of each parent are at index i + i + 1 and at i + i + 2, which are:

The reverse should be known. That is, given an index, i for a left child or right child, what is the parent index? For the left child at index 1 and the right child at index 2, the parent is at index 0. For the left child at index 3 and the right child at index 4, the parent is at index 1. For the left child at index 5 and the right child at index 6, the parent is at index 2. For the left child at index 7 and the right child at index 8, the parent is at index 3. For the left child at index 9 and the right child at index 10, the parent is at index 4.

i this time, is a child index (not a parent index). So the parent of each left child is at index i/2 of integer division, and the parent of the right child, which is the same as the parent of the left child (sibling), is at integer result of (i-1)/2, i.e.:

where the division is integer division.

It is also good to know if a node is a left child or a right child: If normal division by 2 has a remainder, then that is a left node by zero-based indexing. If normal division by 2 has no remainder, then that is a right node by zero-based indexing.

The code in C, to know if the child node is a left node or a right node, is:

//node is a right node

else

//node is the left node

After knowing that a node is a left node, the parent index can be obtained as an integer result of i/2. After knowing that a node is a right node, the parent index can be obtained as an integer result of (i-1)/2.

Height of a Full Binary Heap and Some Indexes

Total Number of Nodes

Notice from the last full diagram above that when the level of a heap increases by 1, its total number of nodes approximately doubles. Precisely, the next level comes with the number of nodes that makes the new total, the sum of all the previous nodes, plus 1, times 2, then minus 1. When the height is 1, there is 1 node = (0 + 1) x 2 – 1 = 2 – 1 = 21 – 1. When the height is 2, there are 3 nodes = (1 + 1) x 2 – 1 = 4 – 1 = 22 – 1. When the height is 3, there are 7 nodes = (3 + 1) x 2 – 1 = 8 – 1 = 23 – 1. When the height is 4, there are 15 nodes = (7 + 1) x 2 – 1 = 16 – 1 = 24 – 1. When the height is 5, there are 31 nodes = (15 + 1) x 2 – 1 = 32 – 1 = 25 – 1. When the height is 6, there are 63 nodes = (31 + 1) x 2 – 1 = 64 – 1 = 26 – 1. When the height is 7, there are 127 nodes = (63 + 1) x 2 – 1 = 128 – 1 = 27 – 1; and so on.

In general, when the height is H,

Last Node Index

For a binary tree and for zero-based indexing, the last index is:

where N is the total number of nodes, or simply just the number of nodes.

Number of Nodes without Last Row

For a full binary tree, two times the number of nodes for the last row gives the total number of nodes minus 1. Seen another way, the number of nodes for the last row is equal to the number of all the previous nodes, times two, plus 1. So the number of nodes without the last row is:

This is because, for zero-based indexing, the total number of nodes for a full binary tree is always an odd number. For example: If the number of nodes (total) is 15, then 15/2 = 7½. The integer result, 7, is taken, and the half is thrown away. And 7 is the number of nodes without the last row – see above.

Index for First Node of the last Row

The index for the first node of the last row should be known. For zero-based indexing, where the first node (element) is at index 0, the index for the first node of the last row is the number of nodes for all the nodes without the last row. It is:

Note that in the corresponding array, the tree nodes are called elements.

Sift Up and Sift Down

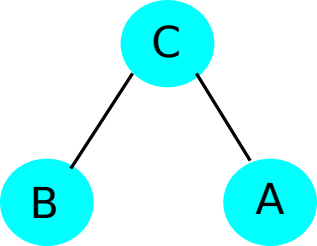

Consider the following sub-tree of three nodes:

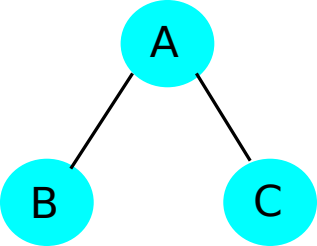

By the minimum heap property, the parent node should be smaller than or equal to the smallest of the children nodes. So node C has to be swapped with the least of the children nodes; it does not matter if the least is the left or right sibling. This means that C has to be interchanged with A to have:

As “A” moves up to take the place of C, that is sifting up. As C moves down to take the place of A, that is sifting down.

Heapifying Illustration

The heap, as shown above, is partial ordering, from smallest value to biggest value. It is not thorough ordering (not sort). As expressed above, the heap can be in tree form or in array form. As expressed above, heapifying has already taken place. In practice, the programmer is not necessarily going to find a tree already heapified. He would find a list that is completely in disorder (completely unsorted). This disordered list can exist in the form of a tree or in the form of an array. The following is a disordered (unsorted) tree and its corresponding array:

The corresponding array is:

| 10 | 20 | 25 | 6 | 4 | 12 | 15 | 23 | 8 | 7 | 18 | 16 | null | null | null |

| 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 |

The tree is read row-by-row, from left to right and from top to bottom, while placed in the array cells. Let the array name be arr, and let the variable that represents the zero-based index be i. In this article, the root is at index 0.

This tree will undergo partial ordering to become a minimum heap. When the partial ordering is complete, it will be the minimum heap given in the introduction section of this article. In this section, the partial ordering is done manually.

To simplify the heapifying (partial ordering process), the complete unordered binary tree has to be made a full binary tree before being processed. To make it a full binary tree, add elements whose values are greater than the highest value found already in the unordered heap. In this article, this will be done with the array and not with the graph form of the tree.

The highest element (node) is 25. Let the three numbers added to make a full tree be: 26, 27, and 28. The corresponding array for the full tree becomes:

| 10 | 20 | 25 | 6 | 4 | 12 | 15 | 23 | 8 | 7 | 18 | 16 | 26 | 27 | 28 |

| 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 |

When the unordered list becomes partially ordered by the heap definition, the last three elements will remain at their last positions; and can easily be abandoned.

When this unordered list is partially ordered by the heap definition, it would be the partially ordered list given above (introduction). A partially ordered list has some sorting, but it is not complete ordering (it is not complete sorting).

Manual Rearrangement (heap building)

The unordered array can be placed like so:

There are 15 elements. Integer result of 15/2 is 7. The list should be divided into two halves, with the first half being 7 and the second half, 8, as follows:

This division can be considered as one main operation. Heap building continues from the right end with the pairs of elements, 27 and 28, identified as children of 15. 15 is less than 27 or 28. So this sub-tree of three nodes satisfies the minimum heap property, and the nodes are not touched for now. This checking can be considered another main operation. So there are two main operations so far (one array division and one check).

16 and 26 are children of 12. 12 is less than 16 or 26. So this sub-tree of three nodes satisfies the minimum heap property, and the nodes are not touched (for now). This checking can be considered another main operation. So there are three main operations so far (one division and two checks).

07 and 18 are the children of 04. 04 is less than 07 or 18. So this sub-tree of three nodes satisfies the minimum heap property, and the nodes are not touched (for now). This checking can be considered another main operation. So there are four main operations so far (one division and three checks).

23 and 08 are the children of 06. 06 is less than 23 or 08. So this sub-tree of three nodes satisfies the minimum heap property, and the nodes are not touched (for now). This checking can be considered another main operation. So there are five main operations so far (one division and four checks).

All 8 elements of the last row of the tree, and their parents, have been checked. To go to the previous level, the left part of the tree has to be divided by 2, and the integer result is taken. Integer result of 7/2 is 3. The new placement of the list is:

This division can be considered as one main operation. So there are six main operations so far (two divisions and four checks).

12 and 15 are the children of 25. 25 is greater than 12 or 15. This sub-tree of three nodes does not satisfy the minimum heap property, so the nodes have to be touched. However, this checking can still be considered another main operation. So there are seven main operations so far (two divisions and five checks).

Sifting downwards has to take place and possibly down to the last row. Each sifting (swapping) is the main operation.

25 is swapped with the least of its children, 12 to give the configuration:

25 is now in the third level and no longer in the second level. 25 is now the parent of 16 and 26. At this point, 25 happens to be greater than 16 but less than 26. So 25 and 16 are swapped. This swapping is another main operation, and so there are nine main operations so far (two divisions, five checks, and two swaps). The new configuration is:

At the second level of the given list, there were 20 and 25. 25 has been sifted right down to the last row. 20 is still to be checked.

Currently, 06 and 04 are children of 20. 20 is greater than 06 or 04. This sub-tree of three nodes does not satisfy the minimum heap property, so the nodes have to be touched. However, this checking can still be considered another main operation. So there are ten main operations so far (two divisions, six checks, and two swaps). Sifting downwards has to take place and possibly down to the last row. Each sifting (swapping) is the main operation.

20 is swapped with the least of its children, 04 to give the configuration:

20 is now in the third level and no longer in the second level. 20 is now the parent of 07 and 18. At this point, 20 happens to be greater than 07 or 18. So 20 and 07 are swapped. This swapping is another main operation, and so there are twelve main operations so far (two divisions, six checks, and four swaps). The new configuration is:

Siftings downwards of the previous path have ended. The left part that has not been completely checked has to be divided into two (going to the previous level) to have:

Integer result of 3/2 is 1.

Currently, 04 and 12 are the children of 10. 10 is greater than 04 but less than 12. This sub-tree of three nodes does not satisfy the minimum heap property, so the nodes have to be touched. However, this checking should still be considered as another main operation. So there are fourteen main operations so far (three divisions, seven checks, and four swaps). Sifting downwards has to take place and possibly down to the last row. Each sifting (swapping) is the main operation.

10 is swapped with the least of its children, 04 to give the configuration:

10 is now in the second level and no longer in the first level. 10 is now the parent 06 and 07. At this point, 10 happens to be greater than 06 or 07. So 10 and 06 are swapped. This swapping is another main operation, and so there are sixteen main operations so far (three divisions, seven checks, and six swaps). The new configuration is:

10 is now in the third level and no longer in the second level. 10 is now the parent 23 and 08. At this point, 10 happens to be less than 23 but greater than 08. So 10 and 08 are swapped. This swapping is another main operation, and so there are seventeen main operations so far (three divisions, seven checks, and seven swaps). The new configuration is:

Well, the checking, division, and swapping began at the last index and has reached the first index, with all the consequences of downward siftings. This means heap building is complete and, in this case, with seventeen main operations (three divisions, seven checks, and seven swaps). There were 15 elements, though the last three were dummy elements needed to simplify the heap building.

Algorithm

There are different algorithms for heap building. The illustration given above will be the most efficient if a parent value is swapped with any of the children that are less and not always the least of the children. The steps for the algorithm are:

- Divide the whole number of elements by two.

- Continue from the right half, checking a pair of siblings with the parent and swapping if necessary.

- When all the nodes of the last level have been checked, with possible swapping, proceed to the previous level and repeat the above two steps. Swapping is sift down, and this may have to reach the lowest level.

- When the root has been checked and possibly swapped, stop.

Time Complexity

Time complexity is the relative runtime of some code. In this case, it is the relative runtime of the heap building process. Time complexity is actually the number of main operations in the code (program).

Officially, the time complexity of the algorithm for this article is said to be N operations, where N is the number of elements in the unordered array plus the dummy elements. In this case, N is 15. So the time complexity for this algorithm is 15.

Why should it be 15 instead of 17? That is, why should it be N? – Well, since division is not partition, the duration for each division action is small and can be neglected. With that and for the above illustration, the number of main operations will become 14 (seven checks and seven swaps), with the 3 divisions ignored.

Also, if a parent value is swapped with any of the children that are less, and not always the least of the children, then the overall checking time will be reduced. This will make checking time small and so ignored. With that and for the above illustration, the number of main operations will become 7 (seven swaps), with the 3 divisions ignored and the seven checks also ignored.

Note: For a good heap building program, only the swapping (sift downs in this case) operations are considered in time complexity. In this case, there are 7 operations and not 15. In dealing with time complexity, the maximum possible number of operations is what must be given.

It is possible for all the 15 nodes above to be swapped. So the time complexity for this example must be given as 15 and not 7.

The time complexity for this algorithm is given, in general terms, as:

where n is N, this is the big-O notation. This notation uses uppercase O and its parentheses. Inside the parentheses is the expression for the possible maximum number of operations for the code (program) to complete.

Coding in C

The C main function to heapify the above-unordered array is:

{

int n1 = 12;

int A1[] = {10, 20, 25, 6, 4, 12, 15, 23, 8, 7, 18, 16};

int A2[] = {10, 20, 25, 6, 4, 12, 15, 23, 8, 7, 18, 16, 26, 27, 28};

buildHeap(A2, n2);

for (int i=0; i= arr[leftIndx] && arr[leftIndx] >= arr[rightIndx]) {

int temp = arr[parentIndx]; arr[parentIndx] = arr[rightIndx]; arr[rightIndx] = temp;

lastDown = rightIndx;

}

else if (arr[parentIndx] >= arr[rightIndx] && arr[rightIndx] >= arr[leftIndx]) {

int temp = arr[parentIndx]; arr[parentIndx] = arr[leftIndx]; arr[leftIndx] = temp;

lastDown = leftIndx;

}

else if (arr[parentIndx] >= arr[rightIndx] && arr[rightIndx] = arr[leftIndx] && arr[leftIndx] <= arr[rightIndx]) {

int temp = arr[parentIndx]; arr[parentIndx] = arr[leftIndx]; arr[leftIndx] = temp;

lastDown = leftIndx;

}

return lastDown;

}

There is a sift-down function. It would use the swap() function and sift down right to the lowest level in one path. It is:

void siftdown(int arr[], int n2, int i) {

int leftIndx, rightIndx;

int parentIndx = i;

leftIndx = 2*i+1;

rightIndx = 2*i+2;

if (parentIndx = 0) {

nextIndx = swap(arr, parentIndx, leftIndx, rightIndx);

if (nextIndx =halfIndx/2; i--)

siftdown(A2, n2, i);

N = N/2;

if (N >= 1)

buildHeap(A2, N);

}

All the above code segments can be assembled to form a program that will heapify the unordered array.

Conclusion

The most efficient algorithm for Heapify Time Complexity is: