This has an implication: there is a lot of useful data on Google, and that creates a need to scrape it. The scraped data can be used for data analysis and for discovering insights. It can also help in gathering research information in one attempt.

Scraping can be done with third-party tools. It can also be done with a Python library known as Scrapy. Scrapy is rated as one of the best scraping tools and can be used to scrape almost any web page. You can find out more about the Scrapy library in its documentation.

However, regardless of the strengths of this library, scraping data from Google can be a difficult task. Google comes down hard on web scraping attempts, so a scraping script may not make even 10 requests in an hour before its IP address is banned. This renders third-party and personal web scraping scripts useless.

Google does provide a way to get at this information. However, any such access has to go through an Application Programming Interface (API).

Just in case you do not already know what an Application Programming Interface is, there’s nothing to worry about, as I’ll provide a brief explanation. By definition, an API is a set of functions and procedures that allow the creation of applications which access the features or data of an operating system, application, or other service. Basically, an API allows you to gain access to the end result of processes without having to be involved in those processes. For example, a temperature API would provide you with the Celsius/Fahrenheit values of a place without you having to go there with a thermometer to take the measurements yourself.

Bringing this into the scope of getting information from Google, the API we will be using gives us access to the needed information without having to write any script to scrape the results page of a Google search. Through the API, we simply get the end result (after Google does the “scraping” at their end) without writing any code to scrape web pages.

While Google has lots of APIs for different purposes, we are going to use the Custom Search JSON API in this article. More information can be found in the documentation for this API.

This API allows us to make 100 search queries per day for free, with pricing plans available for making more queries if necessary.
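With only 100 free queries per day, it can be worth making sure the same search term is never sent twice. The snippet below is a minimal sketch of that idea and is not part of the API: `cached_search`, `fake_search` and the in-memory `cache` dictionary are hypothetical names used for illustration, and `fake_search` merely stands in for a real search function so the example runs offline.

```python
def cached_search(search_term, search_fn, cache):
    """Return a cached result if available, otherwise call search_fn once."""
    key = search_term.strip().lower()  # treat "Coffee" and "coffee " as the same query
    if key not in cache:
        cache[key] = search_fn(search_term)  # only this line would spend quota
    return cache[key]


calls = []  # records every time the stand-in search function is really invoked


def fake_search(search_term):
    # Hypothetical stand-in for a real API call, so the sketch runs offline
    calls.append(search_term)
    return {"query": search_term}


cache = {}
first = cached_search("Coffee", fake_search, cache)
second = cached_search("coffee ", fake_search, cache)
print(len(calls))
```

Here the second lookup is served from the cache, so the stand-in function runs only once. A real setup would probably persist the cache to disk so it survives between runs.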

Creating A Custom Search Engine

In order to use the Custom Search JSON API, we need a Custom Search Engine ID. To get one, we first have to create a Custom Search Engine, which can be done from the Custom Search Engine page.

When you visit the Custom Search Engine page, click on the “Add” button to create a new search engine.

In the “sites to search” box, simply put in “www.linuxhint.com” and in the “Name of the search engine” box, put in any descriptive name of your choice (Google would be preferable).

Now click “Create” to create the custom search engine, then click the “control panel” button on the page to confirm that it was created successfully.

You will see a “Search Engine ID” section with an ID under it. That is the ID we need for the API, and we will refer to it later in this tutorial. The Search Engine ID should be kept private.

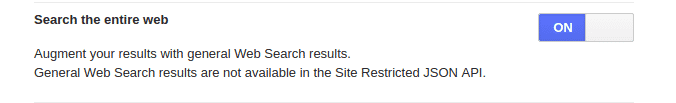

Before we leave, remember that we put in “www.linuxhint.com” earlier. With that setting, we would only get results from that site. If you want the normal results of a search across the whole web, click “Setup” from the menu on the left and then click the “Basics” tab. Go to the “Search the Entire Web” section and toggle this feature on.

Creating An API Key

After creating a Custom Search Engine and getting its ID, the next step is to create an API key. The API key allows access to the API service, and just like the Search Engine ID it should be kept safe after creation.

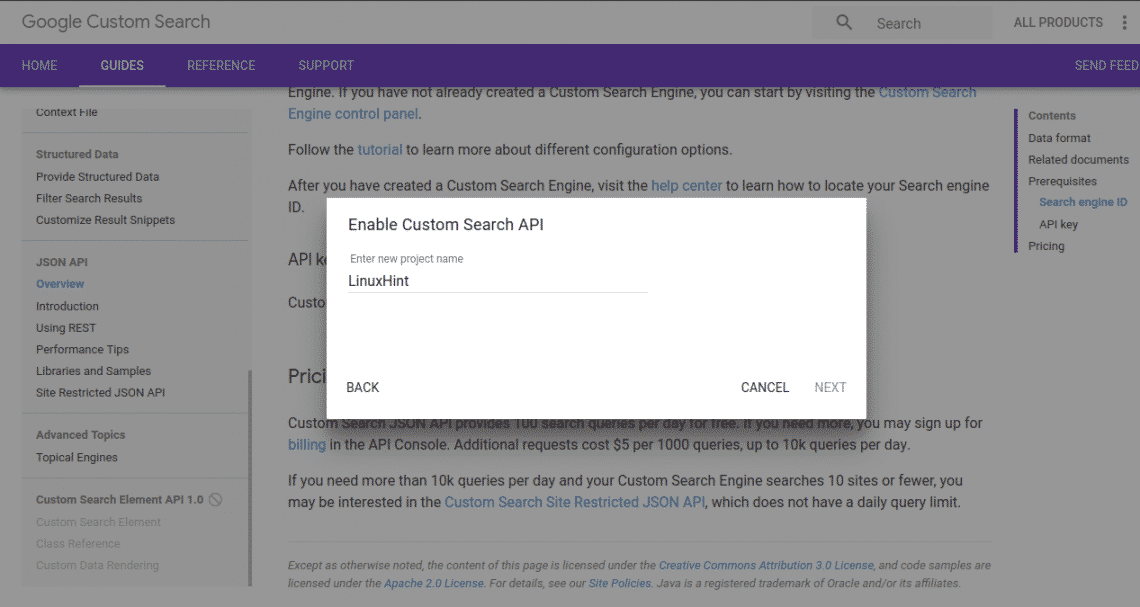

To create an API key, visit the site and click on the “Get A Key” button.

Create a new project and give it a descriptive name. On clicking “next”, the API key will be generated.

The next page shows different setup options which aren’t necessary for this tutorial, so just click the “save” button and you are ready to go.

Accessing The API

We now have the Custom Search Engine ID and the API key. Next, we are going to make use of the API.

While you can access the API with other programming languages, we are going to be doing so with Python.

To access the API with Python, you need to install the Google API Client for Python. It can be installed with pip using the command below:

    pip install google-api-python-client

After installing it successfully, you can import the library in your code:

    from googleapiclient.discovery import build

Most of the work is done by the function below:

    my_api_key = "Your API Key"
    my_cse_id = "Your CSE ID"

    def google_search(search_term, api_key, cse_id, **kwargs):
        # Build a client for version 1 of the Custom Search API
        service = build("customsearch", "v1", developerKey=api_key)
        # Run the query; extra keyword arguments are passed on to the API
        res = service.cse().list(q=search_term, cx=cse_id, **kwargs).execute()
        return res

In the code above, the values of the my_api_key and my_cse_id variables should be replaced with your API key and your Search Engine ID respectively, as string values.
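Since both values should be kept private, one option is to keep them out of the source file altogether. The following is a minimal sketch that reads them from environment variables. The variable names `GOOGLE_API_KEY` and `GOOGLE_CSE_ID` and the helper `load_credentials` are assumptions chosen for this example, not names required by the API.

```python
import os


def load_credentials(env=os.environ):
    """Read the API key and Search Engine ID from environment variables."""
    # GOOGLE_API_KEY and GOOGLE_CSE_ID are hypothetical names picked for this sketch
    api_key = env.get("GOOGLE_API_KEY")
    cse_id = env.get("GOOGLE_CSE_ID")
    if not api_key or not cse_id:
        raise RuntimeError("Set GOOGLE_API_KEY and GOOGLE_CSE_ID before running")
    return api_key, cse_id


# Demonstration with a plain dictionary standing in for the real environment
demo_env = {"GOOGLE_API_KEY": "demo-key", "GOOGLE_CSE_ID": "demo-id"}
api_key, cse_id = load_credentials(demo_env)
print(api_key, cse_id)
```

In real use you would export the two variables in your shell and call `load_credentials()` with no argument, so the secrets never appear in code that might be shared.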

All that needs to be done now is to call the function, passing in the search term, the API key and the Search Engine ID.

    result = google_search("Coffee", my_api_key, my_cse_id)
    print(result)

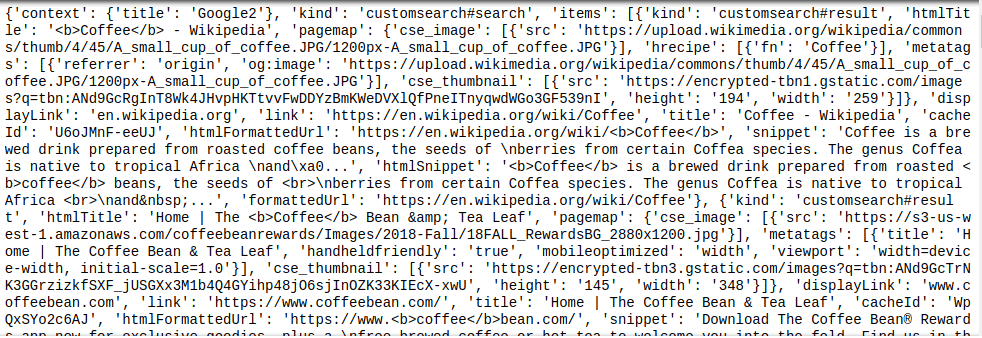

The function call above searches for the keyword “Coffee” and assigns the returned value to the result variable, which is then printed. The Custom Search API returns a JSON object, so any further parsing of the result requires a little knowledge of JSON.

A sample of the result is shown below:

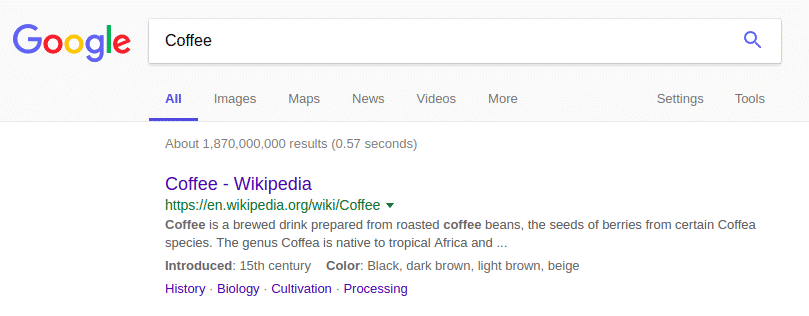
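Parsing the returned object mostly comes down to ordinary dictionary and list access. Here is a minimal sketch, assuming the response contains an `items` list whose entries carry `title`, `link` and `snippet` fields. The `sample_result` dictionary is hand-written for illustration and is not real API output, and `extract_results` is a hypothetical helper.

```python
def extract_results(result):
    """Pull the title, link and snippet out of each search result."""
    rows = []
    # "items" may be missing when a search has no hits, so default to an empty list
    for item in result.get("items", []):
        rows.append({
            "title": item.get("title"),
            "link": item.get("link"),
            "snippet": item.get("snippet"),
        })
    return rows


# Hand-written sample that mimics the shape of a response (not real API output)
sample_result = {
    "items": [
        {"title": "Coffee - Example", "link": "https://example.com/coffee",
         "snippet": "All about coffee."},
    ]
}

for row in extract_results(sample_result):
    print(row["title"], "-", row["link"])
```

Using `.get()` rather than square brackets keeps the sketch from raising a `KeyError` if a field is absent from a particular result.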

The JSON object returned above closely mirrors the results of an ordinary Google search.
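The `**kwargs` in `google_search` make it possible to pass extra query parameters through to the API. As a sketch, assuming the API's `num` parameter (results per request, at most 10) and `start` parameter (1-based index of the first result), the hypothetical helper `page_params` below works out the keyword arguments needed to collect a given number of results.

```python
def page_params(total, page_size=10):
    """Yield keyword arguments (num, start) for successive requests."""
    if not 1 <= page_size <= 10:
        raise ValueError("page_size must be between 1 and 10")
    start = 1  # result indices are assumed to be 1-based
    while start <= total:
        # The last page may be shorter than page_size
        num = min(page_size, total - start + 1)
        yield {"num": num, "start": start}
        start += num


pages = list(page_params(25))
print(pages)
```

Each dictionary could then be passed along as `google_search("Coffee", my_api_key, my_cse_id, **params)`. Keep in mind that every request counts against the daily quota.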

Summary

Scraping Google for information isn’t really worth the stress. The Custom Search API makes life easier, as the only difficulty is in parsing the JSON object for the needed information. As a reminder, keep your Custom Search Engine ID and API key private.