Quick Outline

This post will demonstrate the following:

What are Agents in Natural Language Processing (NLP)?

Getting Started With Agents in LangChain

- Installing Frameworks

- Configure Chat Model

- Building Agent

- Invoking the Agent

- Configure Agent Tools

- Testing the Agent

What are Agents in Natural Language Processing (NLP)?

Agents are vital components of natural language applications, and they use Natural Language Understanding (NLU) to interpret user queries. An agent is a program that interacts with humans by carrying out a sequence of tasks: at each step it can call one of the tools available to it to perform an action, or decide which task to perform next, until it can answer the query.

Getting Started With Agents in LangChain

This section builds an agent in LangChain that holds a conversation with a user and calls a tool to produce its answer. To get started with agents in LangChain, follow the steps below:

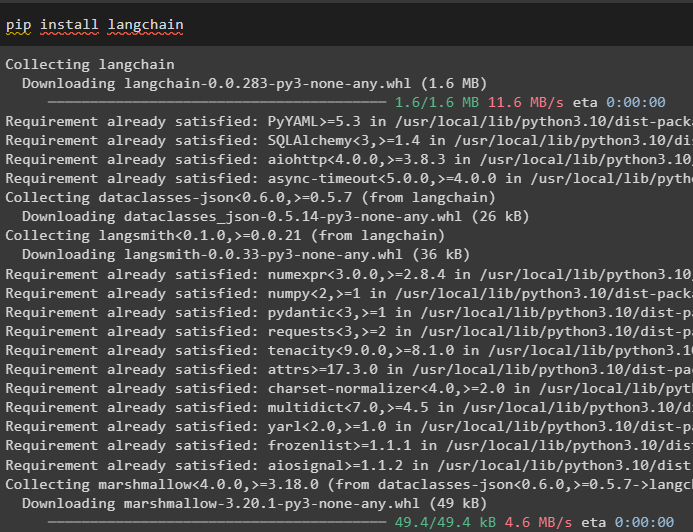

Step 1: Installing Frameworks

First, install the LangChain framework with the `pip` command to get the dependencies required for using agents:

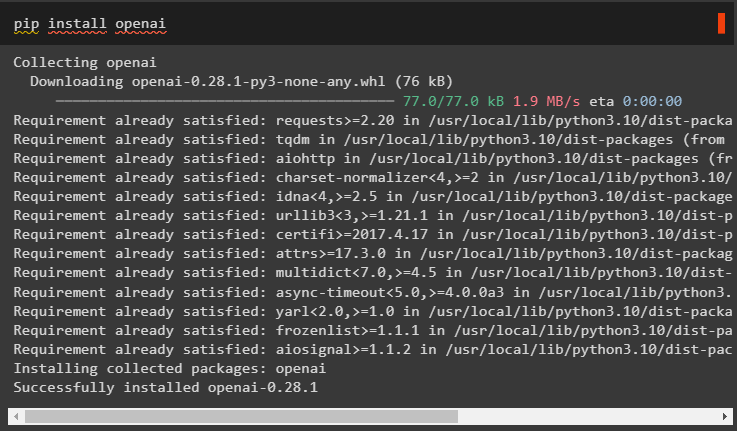

Then install the OpenAI module with `pip install openai`; it provides the LLM that powers the agents in LangChain.

Set up the environment for the OpenAI module by storing the API key from your OpenAI account in an environment variable:

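```python
import getpass
import os

# Prompt for the key without echoing it, then store it for the OpenAI client
os.environ["OPENAI_API_KEY"] = getpass.getpass("OpenAI API Key:")
```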
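If you rerun the notebook often, a small helper can skip the prompt when the key is already set. This is a minimal sketch; `ensure_openai_key` is a hypothetical helper name, not part of LangChain or the OpenAI SDK.

```python
import getpass
import os


def ensure_openai_key(env_var: str = "OPENAI_API_KEY") -> str:
    """Return the API key from the environment, prompting only if it is missing."""
    key = os.environ.get(env_var)
    if not key:
        # Prompt without echoing the key to the terminal, then cache it
        key = getpass.getpass("OpenAI API Key:")
        os.environ[env_var] = key
    return key
```

Calling `ensure_openai_key()` at the top of the notebook returns the existing key silently and only prompts on the first run.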

Step 2: Configure Chat Model

Import the `ChatOpenAI` class from LangChain and use it to build the chat model (LLM):

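```python
from langchain.chat_models import ChatOpenAI

# temperature=0 makes the model's output more deterministic
llm = ChatOpenAI(temperature=0)
```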

Next, define the tools the agent can use to perform its tasks. The following code uses LangChain's `@tool` decorator to turn the `get_word_length()` function, which returns the length of the word provided by the user, into a tool:

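```python
from langchain.agents import tool


@tool
def get_word_length(word: str) -> int:
    """Returns the length of a word."""
    return len(word)


# The list of tools the agent is allowed to call
tools = [get_word_length]
```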
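The `@tool` decorator derives the tool's name from the function name and its description from the docstring, which is why the docstring matters: it is what the model reads when deciding whether to call the tool. The plain-Python sketch below imitates that idea; `SimpleTool` and `simple_tool` are hypothetical stand-ins, not LangChain classes.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class SimpleTool:
    """A hypothetical stand-in for a LangChain tool: a name, a description, and a function."""
    name: str
    description: str
    func: Callable

    def run(self, arg):
        return self.func(arg)


def simple_tool(func: Callable) -> SimpleTool:
    """Wrap a function as a tool, using its docstring as the description."""
    if not func.__doc__:
        raise ValueError(f"{func.__name__} needs a docstring to serve as its description")
    return SimpleTool(name=func.__name__, description=func.__doc__.strip(), func=func)


@simple_tool
def get_word_length(word: str) -> int:
    """Returns the length of a word."""
    return len(word)


print(get_word_length.name, "-", get_word_length.description)
print(get_word_length.run("good"))
```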

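Next, create the prompt template for the chat model. It contains a system message, the user's input, and an `agent_scratchpad` placeholder where the agent's earlier tool calls and their results are inserted: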

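```python
from langchain.prompts import ChatPromptTemplate, MessagesPlaceholder

prompt = ChatPromptTemplate.from_messages([
    ("system", "You are a very capable assistant, but you need help calculating word lengths."),
    ("user", "{input}"),
    # Holds the agent's previous tool calls and their results
    MessagesPlaceholder(variable_name="agent_scratchpad"),
])
```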
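Conceptually, the template produces a list of chat messages: the system message, the user message with `{input}` filled in, and then whatever messages the `agent_scratchpad` placeholder expands to (none on the first call). The sketch below mimics that ordering with plain dictionaries; `build_messages` is a hypothetical helper, and LangChain's real template produces message objects rather than dicts.

```python
from typing import Dict, List


def build_messages(system: str, user_template: str, user_input: str,
                   scratchpad: List[Dict[str, str]]) -> List[Dict[str, str]]:
    """Assemble chat messages in the same order as the prompt template."""
    messages = [
        {"role": "system", "content": system},
        # Fill the {input} slot of the user message template
        {"role": "user", "content": user_template.format(input=user_input)},
    ]
    # The placeholder expands to zero or more messages from earlier steps
    messages.extend(scratchpad)
    return messages


msgs = build_messages(
    "You are a very capable assistant, but you need help calculating word lengths.",
    "{input}",
    "how many letters in the word good",
    scratchpad=[],
)
for m in msgs:
    print(m["role"], ":", m["content"])
```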

Step 3: Building Agent

Convert each tool into the OpenAI function format with `format_tool_to_openai_function()` and bind the resulting function definitions to the LLM, so the model knows which tools it can call:

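```python
from langchain.tools.render import format_tool_to_openai_function

# Attach the tool definitions to every request sent to the model
llm_with_tools = llm.bind(
    functions=[format_tool_to_openai_function(t) for t in tools]
)
```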
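`format_tool_to_openai_function()` converts a tool into the structure that OpenAI's function-calling API expects: a name, a description, and a JSON Schema object describing the parameters. The sketch below builds a similar definition from a plain function's signature with `inspect`; `function_schema` is a hypothetical helper, its small type mapping is an assumption, and LangChain's actual output includes extra fields (such as titles) that are omitted here.

```python
import inspect
import json
from typing import Any, Callable, Dict

# Map a few Python annotations to JSON Schema type names (kept small on purpose)
_JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def function_schema(func: Callable) -> Dict[str, Any]:
    """Build an OpenAI-style function definition from a Python function's signature."""
    properties = {}
    required = []
    for name, param in inspect.signature(func).parameters.items():
        properties[name] = {"type": _JSON_TYPES.get(param.annotation, "string")}
        # Parameters without a default value must be supplied by the model
        if param.default is inspect.Parameter.empty:
            required.append(name)
    return {
        "name": func.__name__,
        "description": inspect.getdoc(func) or "",
        "parameters": {"type": "object", "properties": properties, "required": required},
    }


def get_word_length(word: str) -> int:
    """Returns the length of a word."""
    return len(word)


print(json.dumps(function_schema(get_word_length), indent=2))
```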

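Now build the agent as a chain: the input mapping fills the prompt variables, the prompt is sent to the tool-aware LLM, and `OpenAIFunctionsAgentOutputParser` turns the model's reply into either the next action (a tool call) or the final answer: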

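```python
from langchain.agents.format_scratchpad import format_to_openai_functions
from langchain.agents.output_parsers import OpenAIFunctionsAgentOutputParser

agent = {
    "input": lambda x: x["input"],
    # Convert previous (action, observation) pairs into chat messages
    "agent_scratchpad": lambda x: format_to_openai_functions(x["intermediate_steps"]),
} | prompt | llm_with_tools | OpenAIFunctionsAgentOutputParser()
```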
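The `|` operator (the LangChain Expression Language) composes steps left to right, feeding each step's output into the next, and a dict at the head of the chain becomes a step that computes each key from the same input. The sketch below reproduces that behaviour with a tiny class; `Step` is a hypothetical stand-in for LangChain's runnables, and the lambdas only imitate the prompt, model, and parser.

```python
from typing import Any, Callable


class Step:
    """A hypothetical, minimal stand-in for a LangChain runnable."""

    def __init__(self, func: Callable[[Any], Any]):
        self.func = func

    def __or__(self, other: "Step") -> "Step":
        # a | b produces a new step that runs a, then feeds its result to b
        return Step(lambda x: other.func(self.func(x)))

    def __ror__(self, other: dict) -> "Step":
        # {...} | step: a dict of functions becomes a step that builds a dict
        return Step(lambda x: {k: f(x) for k, f in other.items()}) | self

    def invoke(self, x: Any) -> Any:
        return self.func(x)


fill_prompt = Step(lambda d: f"System: count letters.\nUser: {d['input']}")
fake_llm = Step(lambda text: text.upper())  # stand-in for the model call
parse = Step(lambda reply: {"final": reply})

chain = {"input": lambda x: x["input"]} | fill_prompt | fake_llm | parse
print(chain.invoke({"input": "letters in good"}))
```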

Step 4: Invoking the Agent

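Next, call the agent with the `invoke()` function, passing the `input` query and an empty `intermediate_steps` list:

```python
# Returns the agent's next step, such as a request to call get_word_length
agent.invoke({
    "input": "how many letters in the word good",
    "intermediate_steps": []
})
```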
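The value returned by `invoke()` is either an action (which tool to call and with what input) or a finish (the final answer). The sketch below imitates the decision that `OpenAIFunctionsAgentOutputParser` makes, assuming the model's reply is an OpenAI-style message dictionary with an optional `function_call` whose `arguments` field is a JSON string; `Action`, `Finish`, and `parse_reply` are hypothetical names, not LangChain classes.

```python
import json
from dataclasses import dataclass
from typing import Any, Dict, Union


@dataclass
class Action:
    """The agent wants a tool to be called (hypothetical stand-in for AgentAction)."""
    tool: str
    tool_input: Dict[str, Any]


@dataclass
class Finish:
    """The agent has a final answer (hypothetical stand-in for AgentFinish)."""
    return_values: Dict[str, Any]


def parse_reply(message: Dict[str, Any]) -> Union[Action, Finish]:
    """Turn an OpenAI-style assistant message into the agent's next step."""
    call = message.get("function_call")
    if call:
        # Function arguments arrive as a JSON-encoded string
        return Action(tool=call["name"], tool_input=json.loads(call["arguments"]))
    return Finish(return_values={"output": message.get("content", "")})


print(parse_reply({"role": "assistant", "content": None,
                   "function_call": {"name": "get_word_length", "arguments": '{"word": "good"}'}}))
print(parse_reply({"role": "assistant", "content": "There are 4 letters in the word good."}))
```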

Step 5: Configure Agent Tools

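After that, import `AgentFinish` and run the agent in a loop. Whenever the agent requests a tool, the loop runs that tool and appends the action together with its result (the observation) to `intermediate_steps`, so the agent can use them on its next call. The loop stops once the agent returns an `AgentFinish`, which holds the final answer: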

```python
from langchain.schema.agent import AgentFinish

intermediate_steps = []
while True:
    output = agent.invoke({
        "input": "how many letters in the word good",
        "intermediate_steps": intermediate_steps
    })
    if isinstance(output, AgentFinish):
        # The agent has produced its final answer
        final_result = output.return_values["output"]
        break
    else:
        # The agent requested a tool: run it and record the observation
        print(output.tool, output.tool_input)
        selected_tool = {
            "get_word_length": get_word_length
        }[output.tool]
        observation = selected_tool.run(output.tool_input)
        intermediate_steps.append((output, observation))

print(final_result)
```
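On each pass through this loop, the `agent_scratchpad` lambda from Step 3 hands `intermediate_steps` to `format_to_openai_functions`, which turns every (action, observation) pair into chat messages so the model can see what it has already done. The sketch below approximates that conversion with plain dictionaries: one assistant message carrying the function call and one `function` message carrying the result. `steps_to_messages` is a hypothetical name and the tuple layout is an assumption; LangChain's real steps hold action objects and produce message objects.

```python
import json
from typing import Any, Dict, List, Tuple

# Each step pairs a tool call (name, arguments) with the tool's result
ToolStep = Tuple[Tuple[str, Dict[str, Any]], Any]


def steps_to_messages(steps: List[ToolStep]) -> List[Dict[str, Any]]:
    """Turn (tool call, observation) pairs into OpenAI-style scratchpad messages."""
    messages = []
    for (tool_name, tool_input), observation in steps:
        # The assistant's earlier request to call the tool
        messages.append({
            "role": "assistant",
            "content": None,
            "function_call": {"name": tool_name, "arguments": json.dumps(tool_input)},
        })
        # The tool's result, reported back under the function's name
        messages.append({"role": "function", "name": tool_name, "content": str(observation)})
    return messages


scratchpad = steps_to_messages([(("get_word_length", {"word": "good"}), 4)])
for m in scratchpad:
    print(m)
```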

Step 6: Testing the Agent

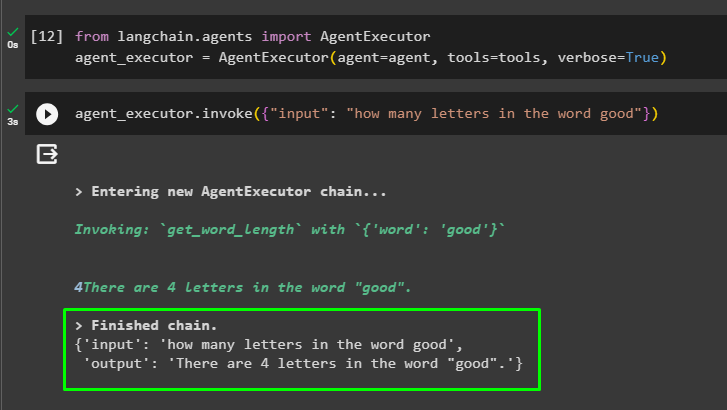

Instead of writing this loop by hand, you can let LangChain's `AgentExecutor` run it. Import the class and create an executor from the agent and its tools (`verbose=True` prints each step):

```python
from langchain.agents import AgentExecutor

agent_executor = AgentExecutor(agent=agent, tools=tools, verbose=True)
```
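`AgentExecutor` automates the loop from Step 5: it calls the agent, runs the requested tool, records the observation, and stops when the agent finishes. It also caps the number of iterations (its `max_iterations` parameter defaults to 15) so a confused agent cannot loop forever. The sketch below is a plain-Python approximation of that loop; `run_agent` and `scripted_decide` are hypothetical, and `scripted_decide` is a rule-based stand-in for the LLM so the example runs without an API key.

```python
from typing import Any, Callable, Dict, List, Tuple, Union

# A decision is either ("tool", name, input) or ("finish", answer)
Decision = Union[Tuple[str, str, Any], Tuple[str, Any]]


def run_agent(decide: Callable[[str, List[Tuple[Any, Any]]], Decision],
              tools: Dict[str, Callable[[Any], Any]],
              query: str,
              max_iterations: int = 15) -> Any:
    """Repeat decide -> run tool -> record observation until the agent finishes."""
    steps: List[Tuple[Any, Any]] = []
    for _ in range(max_iterations):
        decision = decide(query, steps)
        if decision[0] == "finish":
            return decision[1]
        _, name, tool_input = decision
        if name not in tools:
            # Report the problem back to the agent instead of crashing
            observation = f"Unknown tool: {name}"
        else:
            observation = tools[name](tool_input)
        steps.append((decision, observation))
    raise RuntimeError(f"Agent did not finish within {max_iterations} iterations")


def scripted_decide(query, steps):
    """Stand-in for the LLM: call the tool once, then answer with its result."""
    if not steps:
        return ("tool", "get_word_length", query.split()[-1])
    return ("finish", f"The word has {steps[-1][1]} letters.")


print(run_agent(scripted_decide, {"get_word_length": len}, "how many letters in the word good"))
```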

Finally, invoke `agent_executor` with the query in the `input` key, for example `agent_executor.invoke({"input": "how many letters in the word good"})`.

After the chain finishes, the agent displays the answer to the question passed in the `input` argument.

That’s all about getting started with agents in the LangChain framework.

Conclusion

To get started with agents in LangChain, install the required modules and set up the environment with your OpenAI API key. Next, configure the chat model, define the tools, and create a prompt template with a scratchpad for the agent's intermediate steps. Then bind the tools to the LLM, build the agent, and run it, either in a manual loop over its intermediate steps or with `AgentExecutor`, passing the user's query as the input string. This blog has demonstrated the process of using agents in LangChain.