One of the biggest advantages of tensors is that they support efficient mathematical operations. These include element-wise arithmetic such as addition, subtraction, multiplication, and division, as well as matrix operations such as matrix multiplication and transposition.

PyTorch provides a comprehensive set of functions and methods for manipulating tensors. These include operations for reshaping tensors, extracting specific elements or sub-tensors, and concatenating or splitting tensors along specified dimensions. PyTorch also supports indexing, slicing, and broadcasting, which make it easier to work with tensors of different shapes and sizes.
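As a quick illustration of the indexing and slicing mentioned above, here is a minimal sketch. The tensor and the variable names are made up for this example; the syntax follows the same conventions as NumPy arrays.

```python
import torch

# A 3x4 tensor holding the values 0..11, used only for illustration
t = torch.arange(12).reshape(3, 4)

# Indexing: a single element (row 1, column 2)
element = t[1, 2]

# Slicing: the first two rows, and the last column of every row
first_two_rows = t[:2]
last_column = t[:, -1]

# Boolean masking: keep only the even values
even_values = t[t % 2 == 0]

print(element.item())        # 6
print(first_two_rows.shape)  # torch.Size([2, 4])
print(last_column.tolist())  # [3, 7, 11]
print(even_values.tolist())  # [0, 2, 4, 6, 8, 10]
```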

In this article, we cover the fundamental tensor operations in PyTorch: how to create tensors, perform basic operations, manipulate their shape, and move them between the CPU and GPU.

Creating Tensors

Tensors in PyTorch can be created in several ways. Let’s explore some common methods.

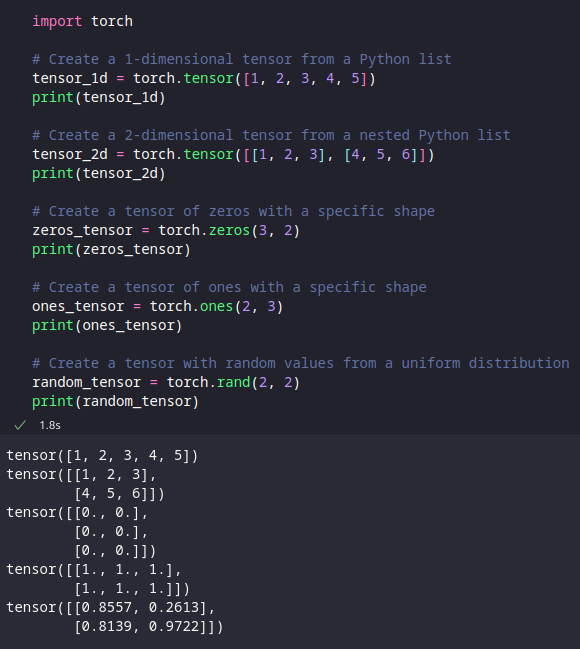

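To create a tensor, we can use the “torch.tensor” function or the “torch.Tensor” class. The “torch.tensor” function infers the data type from the values that you pass, which makes it the usual choice for building a tensor from existing data. Let’s look at some examples: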

import torch

# Create a 1-dimensional tensor from a Python list

tensor_1d = torch.tensor([1, 2, 3, 4, 5])

print(tensor_1d)

# Create a 2-dimensional tensor from a nested Python list

tensor_2d = torch.tensor([[1, 2, 3], [4, 5, 6]])

print(tensor_2d)

# Create a tensor of zeros with a specific shape

zeros_tensor = torch.zeros(3, 2)

print(zeros_tensor)

# Create a tensor of ones with a specific shape

ones_tensor = torch.ones(2, 3)

print(ones_tensor)

# Create a tensor with random values from a uniform distribution

random_tensor = torch.rand(2, 2)

print(random_tensor)

In the given examples, we create tensors of different shapes and initialize them with specific numbers, zeros, ones, or random values. When you run the previous code snippet, the first four tensors print exactly as defined, while random_tensor holds different values between 0 and 1 on every run.
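The creation functions above also determine each tensor's data type. The following sketch shows how the type is inferred and how a seed makes “torch.rand” repeatable. It assumes PyTorch's default floating-point type has not been changed, and the variable names are illustrative only.

```python
import torch

# dtype is inferred from the Python values passed to torch.tensor
int_tensor = torch.tensor([1, 2, 3])          # integers -> torch.int64
float_tensor = torch.tensor([1.0, 2.0, 3.0])  # floats   -> torch.float32 (default)

# Factory functions such as torch.zeros default to float32,
# but accept an explicit dtype
zeros_int = torch.zeros(3, 2, dtype=torch.int32)

# Re-seeding the generator makes torch.rand produce the same values again
torch.manual_seed(0)
first_draw = torch.rand(2, 2)
torch.manual_seed(0)
second_draw = torch.rand(2, 2)

print(int_tensor.dtype, float_tensor.dtype, zeros_int.dtype)
print(torch.equal(first_draw, second_draw))  # True
```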

Tensor Operations

Once we have tensors, we can perform various operations on them, such as element-wise arithmetic and matrix operations.

Element-Wise Arithmetic Operations

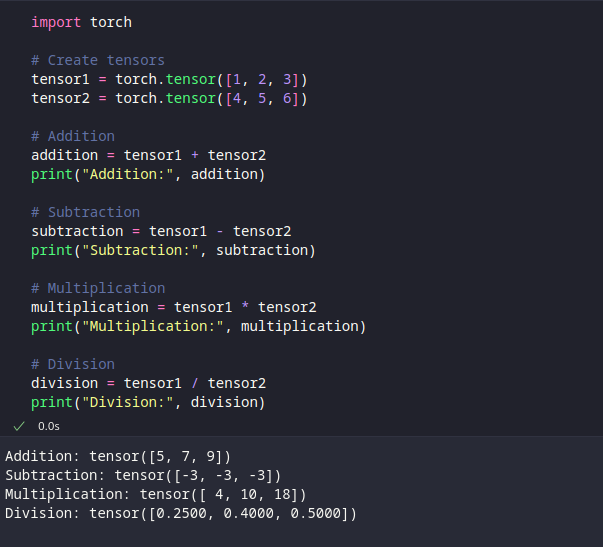

Element-wise arithmetic operations allow us to perform computations between tensors on an element-by-element basis. The tensors involved should either have the same shape or have shapes that are compatible under broadcasting.

Here are some examples:

# Create tensors

tensor1 = torch.tensor([1, 2, 3])

tensor2 = torch.tensor([4, 5, 6])

# Addition

addition = tensor1 + tensor2

print("Addition:", addition)

# Subtraction

subtraction = tensor1 - tensor2

print("Subtraction:", subtraction)

# Multiplication

multiplication = tensor1 * tensor2

print("Multiplication:", multiplication)

# Division

division = tensor1 / tensor2

print("Division:", division)

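In the given code, we perform addition, subtraction, multiplication, and division between two tensors. Each operation returns a new tensor with the computed values and leaves the inputs unchanged. Note that the “/” operator performs true division, so the result is a floating-point tensor even though both inputs hold integers. The result of the code snippet is shown as follows: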

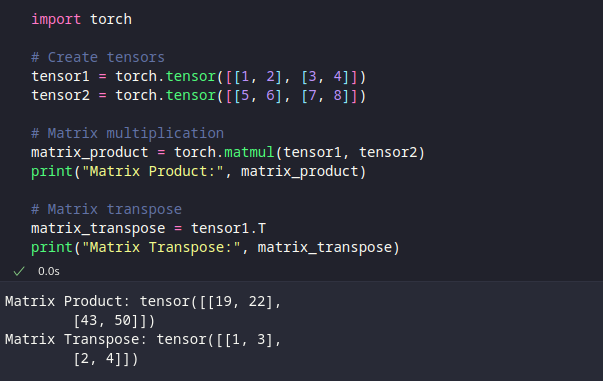
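Element-wise operations also work on tensors whose shapes differ but are compatible under broadcasting. The sketch below uses made-up tensors to show a column and a row expanding to a common shape, a scalar applied to every element, and the floating-point result of dividing integer tensors.

```python
import torch

row = torch.tensor([1, 2, 3])          # shape (3,)
column = torch.tensor([[10], [20]])    # shape (2, 1)

# Shapes (2, 1) and (3,) broadcast to a common shape of (2, 3)
broadcast_sum = column + row

# A Python scalar is broadcast to every element
scaled = row * 2

# "/" performs true division, so integer inputs give a float result
quotient = torch.tensor([1, 2, 3]) / torch.tensor([4, 5, 6])

print(broadcast_sum.tolist())  # [[11, 12, 13], [21, 22, 23]]
print(scaled.tolist())         # [2, 4, 6]
print(quotient.dtype)          # torch.float32
```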

Matrix Operations

PyTorch provides efficient matrix operations for tensors such as matrix multiplication and transpose. These operations are particularly useful for tasks like linear algebra and neural network computations.

# Create tensors

tensor1 = torch.tensor([[1, 2], [3, 4]])

tensor2 = torch.tensor([[5, 6], [7, 8]])

# Matrix multiplication

matrix_product = torch.matmul(tensor1, tensor2)

print("Matrix Product:", matrix_product)

# Matrix transpose

matrix_transpose = tensor1.T

print("Matrix Transpose:", matrix_transpose)

In the given example, we perform matrix multiplication using the “torch.matmul” function and obtain the transpose of a 2-dimensional tensor using the “.T” attribute, which swaps its rows and columns.

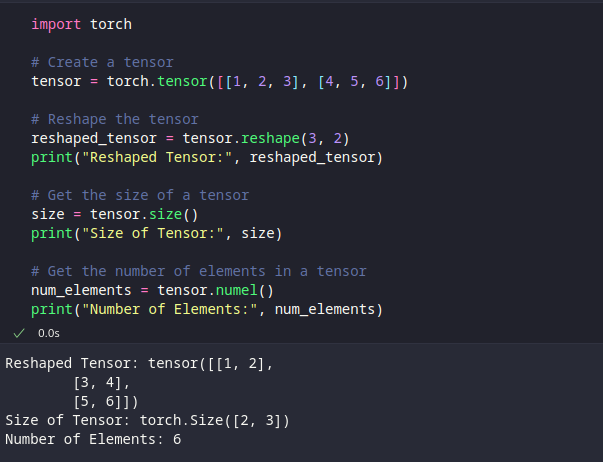
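Matrix multiplication does not require square matrices, only that the inner dimensions match. The following sketch, with illustrative values, multiplies a 2x3 matrix by its 3x2 transpose using the “@” operator, which is shorthand for “torch.matmul”, and contrasts the result with element-wise multiplication.

```python
import torch

a = torch.tensor([[1, 2, 3], [4, 5, 6]])  # shape (2, 3)
b = a.T                                   # shape (3, 2)

# The @ operator is shorthand for torch.matmul: (2, 3) @ (3, 2) -> (2, 2)
product = a @ b
same_product = torch.matmul(a, b)

# Element-wise multiplication is a different operation and keeps the shape
elementwise = a * a

print(product.tolist())      # [[14, 32], [32, 77]]
print(elementwise.tolist())  # [[1, 4, 9], [16, 25, 36]]
```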

Tensor Shape Manipulation

In addition to performing operations on tensors, we often need to manipulate their shape to fit specific requirements. PyTorch provides several methods to reshape and inspect tensors. Let’s explore some of them:

# Create a tensor

tensor = torch.tensor([[1, 2, 3], [4, 5, 6]])

# Reshape the tensor

reshaped_tensor = tensor.reshape(3, 2)

print("Reshaped Tensor:", reshaped_tensor)

# Get the size of a tensor

size = tensor.size()

print("Size of Tensor:", size)

# Get the number of elements in a tensor

num_elements = tensor.numel()

print("Number of Elements:", num_elements)

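In the provided code, we reshape a tensor using the “reshape” method, retrieve its size using the “size” method, and obtain its total number of elements using the “numel” method. Reshaping only works when the new shape holds the same number of elements as the original; here, both (2, 3) and (3, 2) hold six.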
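A few related shape operations are worth sketching alongside “reshape”. The example below lets PyTorch infer one dimension by passing -1, then joins and splits tensors along a chosen dimension with “torch.cat” and “torch.chunk”. The variable names are illustrative only.

```python
import torch

tensor = torch.tensor([[1, 2, 3], [4, 5, 6]])  # shape (2, 3)

# -1 asks PyTorch to infer that dimension from the element count
flat = tensor.reshape(-1)         # shape (6,)
inferred = tensor.reshape(3, -1)  # shape (3, 2)

# Concatenate along rows (dim=0) and along columns (dim=1)
stacked_rows = torch.cat([tensor, tensor], dim=0)  # shape (4, 3)
stacked_cols = torch.cat([tensor, tensor], dim=1)  # shape (2, 6)

# Split the columns back into two equal chunks
left, right = torch.chunk(stacked_cols, 2, dim=1)

print(flat.tolist())              # [1, 2, 3, 4, 5, 6]
print(inferred.shape)             # torch.Size([3, 2])
print(stacked_rows.shape)         # torch.Size([4, 3])
print(torch.equal(left, tensor))  # True
```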

Moving Tensors Between CPU and GPU

PyTorch supports GPU acceleration, which allows us to run computations on graphics cards. This can significantly speed up deep learning tasks and shorten training times. We can move tensors between the CPU and GPU using the “to” method.

Note: The “cuda” device used here requires a CUDA-capable NVIDIA GPU and a PyTorch build with CUDA support on your machine.

# Create a tensor on CPU

tensor_cpu = torch.tensor([1, 2, 3])

# Check if GPU is available

if torch.cuda.is_available():

    # Move the tensor to GPU

    tensor_gpu = tensor_cpu.to("cuda")

    print("Tensor on GPU:", tensor_gpu)

else:

    print("GPU not available.")

In the provided code, we check if a GPU is available using torch.cuda.is_available(). If a GPU is available, we move the tensor from the CPU to the GPU using the “to” method with the “cuda” argument.
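A common way to write code that runs with or without a GPU is to select a device once and reuse it. The following is a minimal sketch of that pattern rather than the only approach; the variable names are illustrative, and the code falls back to the CPU when CUDA is unavailable.

```python
import torch

# Pick the GPU when one is available, otherwise fall back to the CPU
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Tensors can be created on a device directly or moved there with .to()
a = torch.tensor([1, 2, 3], device=device)
b = torch.tensor([4, 5, 6]).to(device)

# Both operands must live on the same device for the operation to succeed
total = a + b

# .cpu() returns the result to host memory (a no-op if it is already there)
total_cpu = total.cpu()

print(total.device)
print(total_cpu.tolist())  # [5, 7, 9]
```

Every tensor exposes its location through the “device” attribute, which is useful for debugging errors caused by mixing CPU and GPU tensors in one operation.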

Conclusion

Understanding the fundamental tensor operations is crucial for working with PyTorch and building deep learning models. In this article, we explored how to create tensors, perform basic operations, manipulate their shape, and move them between the CPU and GPU. With this knowledge, you can start working with tensors in PyTorch, perform computations, and build deep learning models. Tensors are the foundation for data representation and manipulation in PyTorch.