like few-shot, which can be used to train these models. The training phase is the most important one, because the model learns most of its behavior during it and then improves its performance over time.

This post will illustrate the process of building a few-shot prompt template for chat models in LangChain.

How to Build a Few-Shot Prompt Template for Chat Models in LangChain?

To build a few-shot prompt template for chat models, follow the steps below:

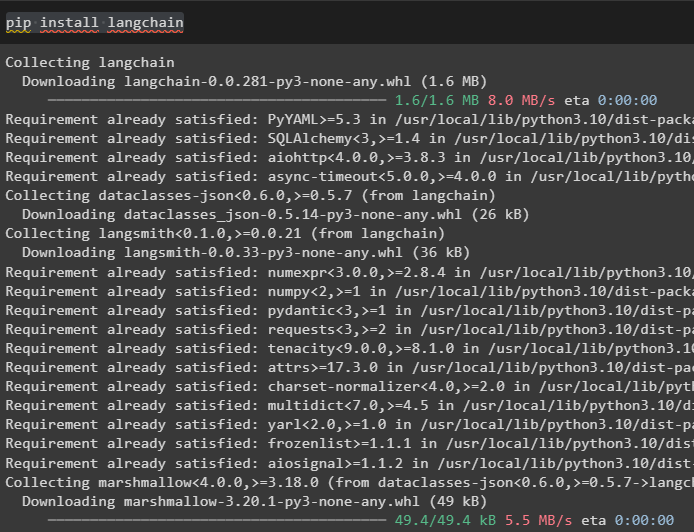

Step 1: Install Modules

Start the process by installing the LangChain framework to get its dependencies and libraries for the task:

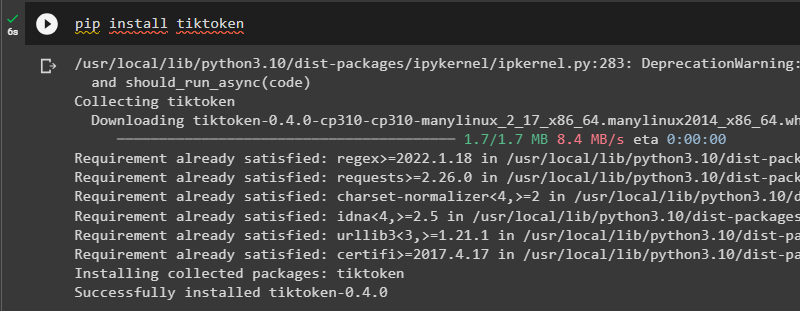

Install the tiktoken tokenizer, which splits text into tokens so the data is easier to chunk and index:

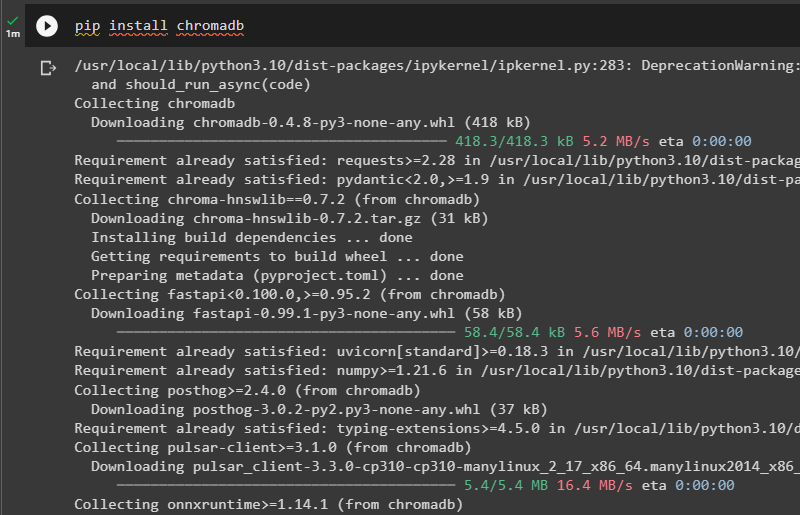

Install chromadb to create a vector store that holds the examples used by the prompt template:

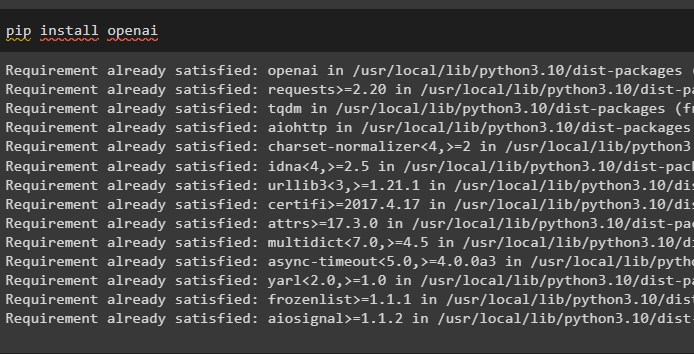

Another required module is OpenAI, which can be installed with the pip install openai command.
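As a quick sanity check after installation, the following minimal sketch reports which of the four packages are importable. It uses only the Python standard library. The helper name check_packages is hypothetical and not part of LangChain.

```python
import importlib.util

# Import names of the packages used in this guide. A missing one can be
# installed with `pip install <name>`, for example `pip install langchain`.
REQUIRED = ["langchain", "tiktoken", "chromadb", "openai"]


def check_packages(names):
    """Return {name: True/False} depending on whether each package is importable."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


status = check_packages(REQUIRED)
for name, ok in status.items():
    print(f"{name}: {'installed' if ok else 'missing'}")
```

Any package reported as missing should be installed before moving on to the import step.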

Step 2: Importing Libraries

After installing all the necessary frameworks or modules, simply import the required libraries from these modules:

from langchain.prompts import (

FewShotChatMessagePromptTemplate,

ChatPromptTemplate,

)

Some more libraries that can be used to build a few-shot prompt template are imported in this section:

from langchain.embeddings import OpenAIEmbeddings

from langchain.vectorstores import Chroma

Step 3: Setting an Example

Next, define an example dataset of simple addition problems:

examples = [

{"input": "2+2", "output": "4"},

{"input": "2+3", "output": "5"},

]

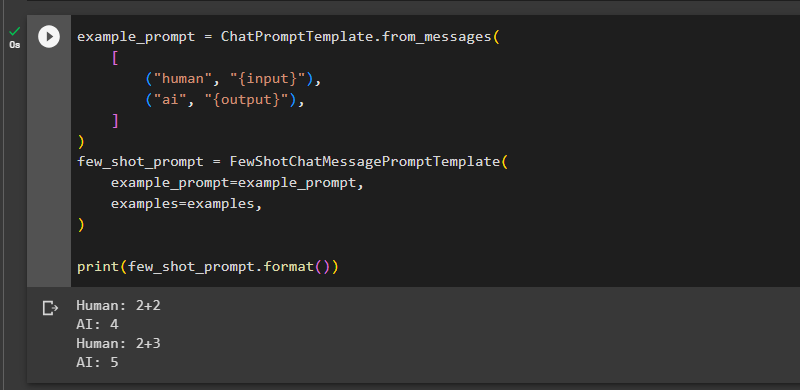

Step 4: Using Few-Shot Prompt Template

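Next, define how each example is rendered (a human message followed by an AI message), then pass that template and the examples to the few-shot template and print the formatted result:

example_prompt = ChatPromptTemplate.from_messages(

[

("human", "{input}"),

("ai", "{output}"),

]

)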

few_shot_prompt = FewShotChatMessagePromptTemplate(

example_prompt=example_prompt,

examples=examples,

)

print(few_shot_prompt.format())
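To make the expansion step concrete, here is a minimal plain-Python sketch of what a static few-shot template does conceptually: every example is rendered through every message template, in order. This is an illustration under simplifying assumptions and not LangChain's implementation. The helpers expand_examples and render are hypothetical names.

```python
# Plain-Python illustration only; this is not LangChain's implementation.
examples = [
    {"input": "2+2", "output": "4"},
    {"input": "2+3", "output": "5"},
]

# Each (role, template) pair mirrors one message of the example prompt.
example_prompt = [("Human", "{input}"), ("AI", "{output}")]


def expand_examples(examples, example_prompt):
    """Render every example through every message template, in order."""
    messages = []
    for example in examples:
        for role, template in example_prompt:
            messages.append((role, template.format(**example)))
    return messages


def render(messages):
    """Join (role, text) pairs into a readable transcript."""
    return "\n".join(f"{role}: {text}" for role, text in messages)


messages = expand_examples(examples, example_prompt)
print(render(messages))
# Human: 2+2
# AI: 4
# Human: 2+3
# AI: 5
```

The two examples become four alternating messages, which is the conversation history the chat model sees before the real question.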

Step 5: Using Dynamic Few Shot

Now, define a larger example set, embed the text of each example with OpenAIEmbeddings(), and store the results in a Chroma vector store. Creating the embeddings requires an OpenAI API key, typically provided through the OPENAI_API_KEY environment variable:

examples = [

{"input": "2+2", "output": "4"},

{"input": "2+3", "output": "5"},

{"input": "2+4", "output": "6"},

{"input": "What did the cow say to the moon?", "output": "nothing at all"},

{

"input": "Write me a poem about the moon",

"output": "One for the moon, and one for me, who are we to talk about the moon?",

},

]

to_vectorize = [" ".join(example.values()) for example in examples]

embeddings = OpenAIEmbeddings()

vectorstore = Chroma.from_texts(to_vectorize, embeddings, metadatas=examples)

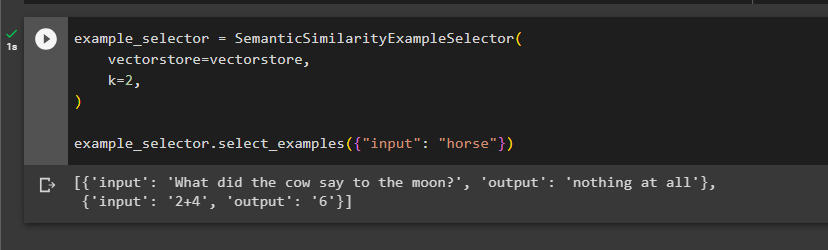
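The list comprehension in this step can be tried without a vector store. The sketch below, which uses a shortened example list for brevity, shows the strings that get embedded and how each one lines up with the dictionary passed as its metadata.

```python
examples = [
    {"input": "2+2", "output": "4"},
    {"input": "What did the cow say to the moon?", "output": "nothing at all"},
]

# One searchable string per example: input and output joined by a space.
to_vectorize = [" ".join(example.values()) for example in examples]

# Index i of the texts lines up with index i of the metadata, so a hit on a
# text can be mapped back to the original {"input", "output"} dictionary.
for text, metadata in zip(to_vectorize, examples):
    print(repr(text), "->", metadata)
```

Because the original dictionaries are stored as metadata, a similarity search over the joined strings can return examples in the same shape the prompt template expects.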

Step 6: Setting an Example Selector

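After embedding the data, create a SemanticSimilarityExampleSelector on top of the vector store so that the k=2 most similar examples are retrieved for each input:

from langchain.prompts import SemanticSimilarityExampleSelector

example_selector = SemanticSimilarityExampleSelector(

vectorstore=vectorstore,

k=2,

)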

example_selector.select_examples({"input": "horse"})
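The idea behind similarity-based selection can be sketched in plain Python. The example below swaps in a toy word-count embedding and cosine similarity as stand-ins for OpenAIEmbeddings and Chroma, so it only matches on shared words, whereas real embeddings also capture meaning. The function names embed, cosine, and select_examples are hypothetical, and the query "moon" is used because a word-count embedding cannot relate "horse" to any stored example.

```python
import math
import re
from collections import Counter

examples = [
    {"input": "2+2", "output": "4"},
    {"input": "2+3", "output": "5"},
    {"input": "2+4", "output": "6"},
    {"input": "What did the cow say to the moon?", "output": "nothing at all"},
    {
        "input": "Write me a poem about the moon",
        "output": "One for the moon, and one for me, who are we to talk about the moon?",
    },
]


def embed(text):
    """Toy embedding (assumption): lowercase token counts, not a real model."""
    return Counter(re.findall(r"[a-z0-9+]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def select_examples(query, examples, k=2):
    """Return the k examples whose joined text is most similar to the query."""
    query_vec = embed(query)
    scored = [
        (cosine(query_vec, embed(" ".join(example.values()))), example)
        for example in examples
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [example for _, example in scored[:k]]


selected = select_examples("moon", examples, k=2)
for example in selected:
    print(example["input"])
```

With this toy scoring, the poem example ranks first because "moon" appears in it three times, followed by the cow example.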

Step 7: Configuring Prompt Template

Configure the few-shot prompt template with the example selector, so that the human input and AI output of each selected example are rendered as chat messages:

from langchain.prompts import (

FewShotChatMessagePromptTemplate,

ChatPromptTemplate,

)

few_shot_prompt = FewShotChatMessagePromptTemplate(

input_variables=["input"],

example_selector=example_selector,

example_prompt=ChatPromptTemplate.from_messages(

[("human", "{input}"), ("ai", "{output}")]

),

)

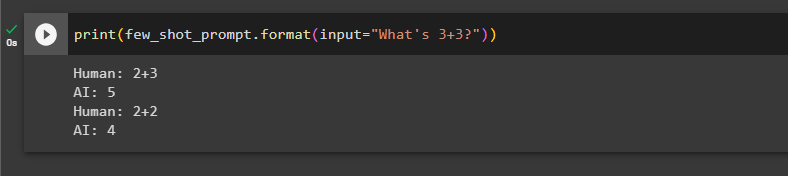

Step 8: Testing the Template

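Test the few-shot template by calling few_shot_prompt.format() with an input keyword argument, such as a new math question, and printing the result. The example selector picks the stored examples closest to that input, and the template renders them as human and AI messages.

That's all about building the few-shot prompt template for chat models in LangChain.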

Conclusion

To build a few-shot prompt template for chat models in LangChain, install the necessary modules and import the required libraries from them. After that, define the example dataset, configure the few-shot prompt template on it either statically or through a semantic example selector backed by a vector store, and format the template with an input to test it. This post has explained the process of building the few-shot prompt template for chat models in LangChain.