This post illustrates how to use few-shot prompt templates in LangChain.

How to Use a Few-Shot Prompt Template in LangChain?

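FewShotPromptTemplate is a prompt structure that places a handful of worked examples ahead of the user's question, so the model can pick up the expected question and answer pattern from the prompt itself rather than through training. To use the few-shot template in LangChain, follow this guide: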

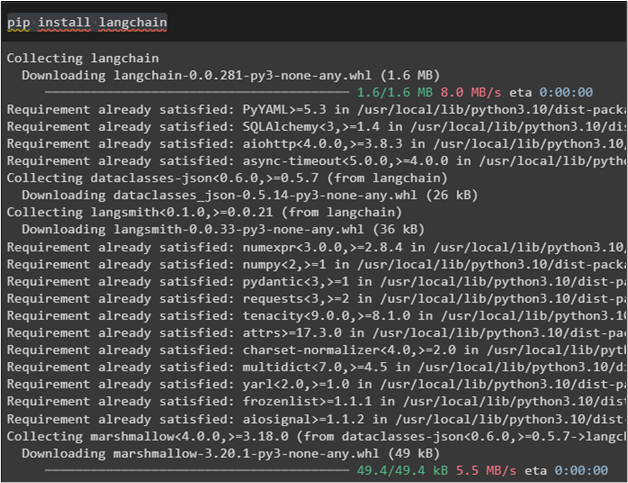

Step 1: Install Modules

Start by installing LangChain and its dependencies (pip install langchain):

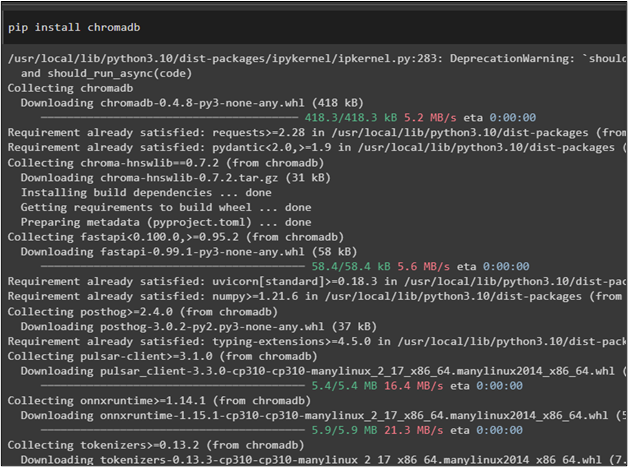

The example selector used later keeps the example set in a vector store, so install chromadb as well (pip install chromadb):

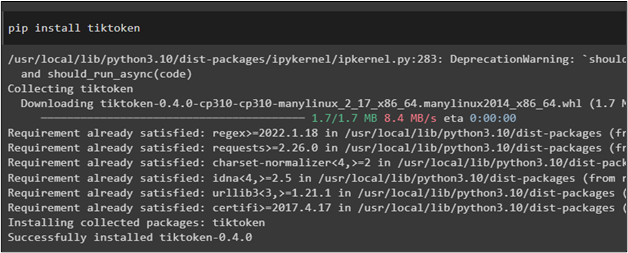

Finally, install the tiktoken tokenizer (pip install tiktoken), which splits text into tokens so the data can be embedded and searched easily.
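A quick way to confirm that the three installs succeeded is to ask Python whether it can locate each package. The following is a minimal sketch using only the standard library; the helper name find_missing is hypothetical and not part of LangChain:

```python
import importlib.util

# Import names of the three packages installed in this step.
REQUIRED_PACKAGES = ["langchain", "chromadb", "tiktoken"]


def find_missing(packages):
    """Return the package names that cannot be located in this environment."""
    return [name for name in packages if importlib.util.find_spec(name) is None]


missing = find_missing(REQUIRED_PACKAGES)
if missing:
    print("Missing packages:", ", ".join(missing))
else:
    print("All required packages are installed.")
```

If any name is reported as missing, rerun the matching pip install command before moving on.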

Step 2: Creating an Example Set

After installing the required modules, define the example set: a list of dictionaries, each holding a question and a worked answer that the prompt template will show to the model:

from langchain.prompts.prompt import PromptTemplate

# Each example pairs a question with a worked, step-by-step answer
examples = [
    {
        "question": "What is the DoB of the founder of nemo",
        "answer": """
Any more questions required: Yes
Follow up: Name the person who founded nemo
Intermediate answer: Leonel Messi founded the nemo
Follow up: When was Leonel Messi born
Intermediate answer: Leonel Messi was born on December 12, 1990
So the final answer is: December 12, 1990
""",
    },
    {
        "question": "Who lived longer, Allan Border or Glen Phillip",
        "answer": """
Any more questions required: Yes
Follow up: What was the age of Allan Border
Intermediate answer: Allan Border was 67 years old
Follow up: What was the age of Glen Phillip when he died
Intermediate answer: Glen Phillip was 42 years old
So the final answer is: Allan Border
""",
    },
    {
        "question": "Are both the lead actors of Race and Mission Impossible from the same city",
        "answer": """
Any more questions required: Yes
Follow up: Who is the lead actor of Race
Intermediate answer: The lead actor of Race is Daniel Vittori
Follow up: Where is Daniel Vittori from
Intermediate answer: Chicago
Follow up: Who is the lead actor of Mission Impossible
Intermediate answer: The lead actor of MI is Martin Guptill
Follow up: Where is Martin Guptill from
Intermediate answer: Orlando
So the final answer is: No
""",
    },
    {
        "question": "Who was Jake Bailey's maternal grandfather",
        "answer": """
Any more questions required: Yes
Follow up: Who was the mother of Jake Bailey
Intermediate answer: The mother of Jake Bailey was Mary Jake Ball
Follow up: Who was the father of Mary Jake Ball
Intermediate answer: The father of Mary Jake Ball was Alzari Joseph
So the final answer is: Alzari Joseph
""",
    },
]

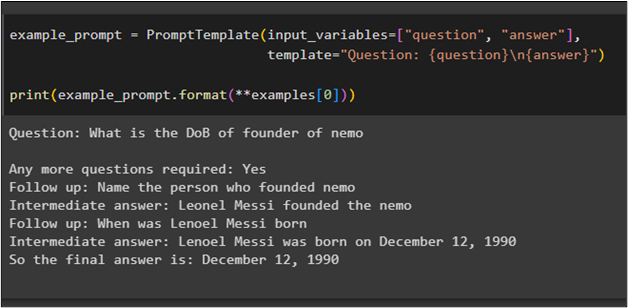
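Because every example is later formatted with the same template, each dictionary needs the same keys. As a rough sanity check, a small validator can flag entries with missing or empty fields before they reach the template. This is a sketch under stated assumptions: validate_examples and the two-item sample list are hypothetical and not part of LangChain.

```python
def validate_examples(examples, required_keys=("question", "answer")):
    """Return a list of human-readable problems found in an example set."""
    problems = []
    for index, example in enumerate(examples):
        # Keys the template needs but the example does not provide.
        missing = [key for key in required_keys if key not in example]
        if missing:
            problems.append(f"example {index} is missing keys: {missing}")
        # Keys that are present but hold only whitespace.
        empty = [
            key
            for key in required_keys
            if key in example and not str(example[key]).strip()
        ]
        if empty:
            problems.append(f"example {index} has empty values for: {empty}")
    return problems


# Hypothetical sample: the second entry deliberately lacks an "answer".
sample = [
    {
        "question": "Who lived longer, Allan Border or Glen Phillip",
        "answer": "So the final answer is: Allan Border",
    },
    {"question": "Who founded nemo"},
]

print(validate_examples(sample))
```

An empty list means every example carries the fields the template expects.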

Step 3: Building a Format for Few-Shot

Now build a PromptTemplate that converts each example dictionary into a string. To confirm that the format works, render the first example (index 0) and print it with the print() method:

# Template that renders one example as a question followed by its answer
example_prompt = PromptTemplate(
    input_variables=["question", "answer"],
    template="Question: {question}\n{answer}")

print(example_prompt.format(**examples[0]))

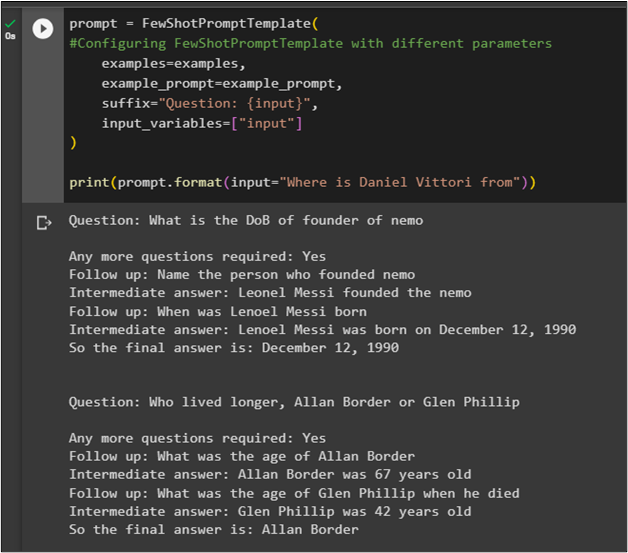
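The ** in the format() call unpacks the dictionary so that each key fills the placeholder of the same name. The sketch below shows the same idea with Python's built-in str.format as a stand-in for PromptTemplate; the shortened example dictionary is made up for illustration.

```python
# Stand-in for PromptTemplate: the same template text, filled by str.format.
TEMPLATE = "Question: {question}\n{answer}"

# Shortened, hypothetical example in the same shape as the example set.
example = {
    "question": "Who lived longer, Allan Border or Glen Phillip",
    "answer": "So the final answer is: Allan Border",
}

# **example expands to question=..., answer=..., matching the placeholders.
rendered = TEMPLATE.format(**example)
print(rendered)
```

If a dictionary lacks one of the placeholder names, str.format raises a KeyError, which is why every example should use the same keys.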

Step 4: Configuring FewShotPromptTemplate

After setting the example set, configure FewShotPromptTemplate with the examples, the example prompt, a suffix that holds the user's question, and the input variables:

from langchain.prompts.few_shot import FewShotPromptTemplate

# Configuring FewShotPromptTemplate with different parameters
prompt = FewShotPromptTemplate(
    examples=examples,
    example_prompt=example_prompt,
    suffix="Question: {input}",
    input_variables=["input"]
)

print(prompt.format(input="Where is Daniel Vittori from"))

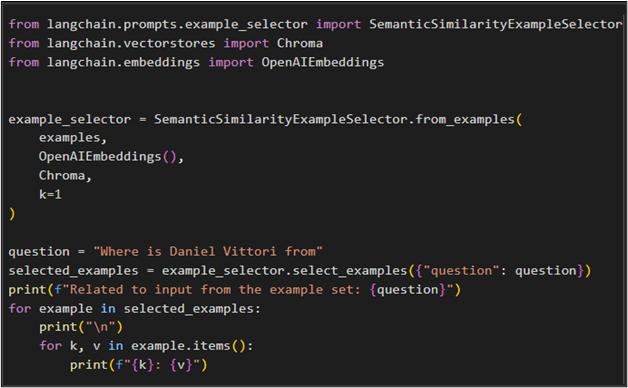
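Conceptually, the printed prompt is every formatted example followed by the suffix with the user's question filled in. The plain-Python sketch below approximates that assembly. It is not LangChain's implementation: build_few_shot_prompt is a hypothetical helper, and the blank-line separator is an assumption about the default behaviour.

```python
def build_few_shot_prompt(examples, user_input, separator="\n\n"):
    """Join formatted examples and the final question into one prompt string."""
    example_template = "Question: {question}\n{answer}"
    suffix = "Question: {input}"
    # Render each example, then append the suffix carrying the new question.
    pieces = [example_template.format(**example) for example in examples]
    pieces.append(suffix.format(input=user_input))
    return separator.join(pieces)


# Hypothetical, shortened examples for illustration.
sample = [
    {"question": "Who founded nemo", "answer": "Leonel Messi"},
    {"question": "Where is Daniel Vittori from", "answer": "Chicago"},
]

result = build_few_shot_prompt(sample, "Where is Martin Guptill from")
print(result)
```

The model sees the worked examples first and the unanswered question last, which nudges it to continue in the same pattern.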

Step 5: Feeding Data to Example Selector

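With the example set and the few-shot template configured, add an example selector so that only the most relevant examples reach the prompt. SemanticSimilarityExampleSelector embeds the examples with OpenAIEmbeddings (which needs an OpenAI API key, for example in the OPENAI_API_KEY environment variable), stores them in Chroma, and returns the k=1 example closest in meaning to the question:

from langchain.prompts.example_selector import SemanticSimilarityExampleSelector
from langchain.vectorstores import Chroma
from langchain.embeddings import OpenAIEmbeddings

example_selector = SemanticSimilarityExampleSelector.from_examples(
    # The list of examples available to select from
    examples,
    # The embedding class used to measure semantic similarity
    OpenAIEmbeddings(),
    # The vector store class used to store the embeddings
    Chroma,
    # The number of examples to return
    k=1
)

question = "Where is Daniel Vittori from"
selected_examples = example_selector.select_examples({"question": question})

print(f"Related to input from the example set: {question}")
for example in selected_examples:
    print("\n")
    for k, v in example.items():
        print(f"{k}: {v}")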

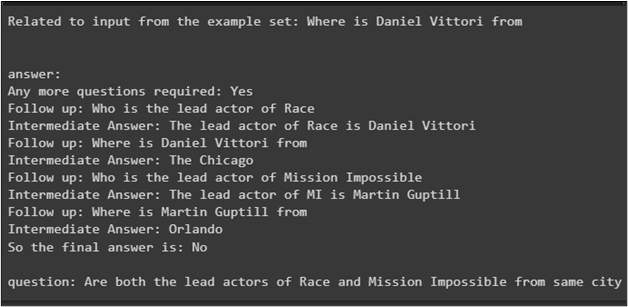

Output

The output shows the example that the selector picked from the example set as the closest semantic match to the question:

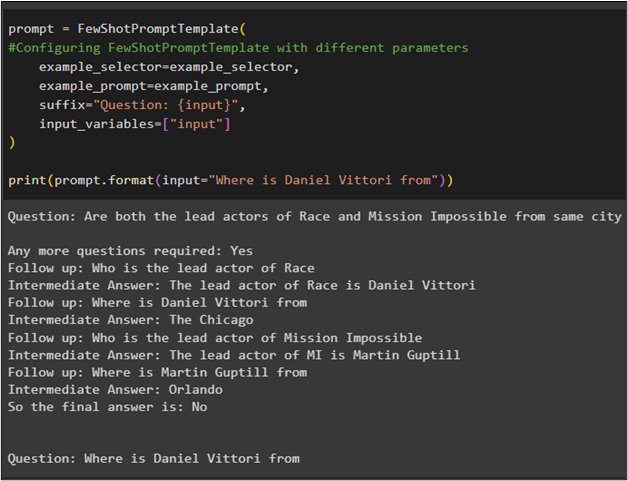
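To make the selection step less of a black box, the toy sketch below ranks examples by cosine similarity. It deliberately swaps real embeddings for simple word counts, so it only rewards shared words, whereas OpenAIEmbeddings captures meaning. The helper names embed, cosine_similarity, and select_examples are hypothetical, and the example texts are shortened.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy 'embedding': lowercase word counts instead of a learned vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a, b):
    """Cosine of the angle between two word-count vectors."""
    dot = sum(a[word] * b[word] for word in a)
    norm_a = math.sqrt(sum(value * value for value in a.values()))
    norm_b = math.sqrt(sum(value * value for value in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def select_examples(examples, question, k=1):
    """Return the k examples whose combined text is most similar to the question."""
    query = embed(question)
    ranked = sorted(
        examples,
        key=lambda ex: cosine_similarity(query, embed(" ".join(ex.values()))),
        reverse=True,
    )
    return ranked[:k]


# Shortened, hypothetical versions of two entries from the example set.
sample = [
    {
        "question": "What is the DoB of founder of nemo",
        "answer": "Follow up: Name the person who founded nemo",
    },
    {
        "question": "Are both the lead actors of Race and Mission Impossible from same city",
        "answer": "Follow up: Where is Daniel Vittori from",
    },
]

best = select_examples(sample, "Where is Daniel Vittori from", k=1)
print(best[0]["question"])
```

Here the second example wins because its text shares the most words with the query; a real embedding model can also match examples that are phrased differently.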

Step 6: Testing the FewShotPromptTemplate

Finally, test the prompt template by passing it the example selector instead of the full example list, then ask the question through the input variable using the prompt.format() method:

# Configuring FewShotPromptTemplate with the example selector
prompt = FewShotPromptTemplate(
    example_selector=example_selector,
    example_prompt=example_prompt,
    suffix="Question: {input}",
    input_variables=["input"]
)

print(prompt.format(input="Where is Daniel Vittori from"))
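The practical difference from Step 4 is that the examples are now chosen each time the prompt is formatted, based on the input. The toy class below sketches that behaviour in plain Python. It is not LangChain's implementation: TinyFewShotPrompt and keyword_selector are hypothetical, and the selector matches on shared words rather than embeddings.

```python
class TinyFewShotPrompt:
    """Toy stand-in for a few-shot template that accepts a list or a selector."""

    def __init__(self, examples=None, example_selector=None):
        # Exactly one source of examples should be supplied.
        if (examples is None) == (example_selector is None):
            raise ValueError("Provide exactly one of examples or example_selector")
        self.examples = examples
        self.example_selector = example_selector

    def format(self, **inputs):
        # With a selector, examples are picked per call from the inputs.
        if self.example_selector is not None:
            chosen = self.example_selector(inputs)
        else:
            chosen = self.examples
        parts = ["Question: {question}\n{answer}".format(**ex) for ex in chosen]
        parts.append("Question: {input}".format(**inputs))
        return "\n\n".join(parts)


# Hypothetical, shortened examples for illustration.
EXAMPLES = [
    {"question": "Who founded nemo", "answer": "Leonel Messi"},
    {"question": "Where is Daniel Vittori from", "answer": "Chicago"},
]


def keyword_selector(inputs):
    """Hypothetical selector: keep examples that share a word with the input."""
    words = set(inputs["input"].lower().split())
    return [ex for ex in EXAMPLES if words & set(ex["question"].lower().split())]


tiny_prompt = TinyFewShotPrompt(example_selector=keyword_selector)
output = tiny_prompt.format(input="Where was Daniel Vittori born")
print(output)
```

Only the matching example ends up in the prompt, which keeps it short as the example set grows.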

That is all about using the few-shot prompt template in LangChain.

Conclusion

To use the FewShotPromptTemplate in LangChain, install the langchain, chromadb, and tiktoken packages. Then create an example set as a list of dictionaries, define a PromptTemplate that formats each example, and pass both to FewShotPromptTemplate. To keep the prompt focused, add a SemanticSimilarityExampleSelector so that only the examples most similar to the question are included, and test the template by asking a question through prompt.format(). This post has illustrated the process of using the FewShotPromptTemplate in the LangChain framework.