This problem is especially apparent on magnetic media, although SSDs suffer from it as well. In this post, let’s try to defragment an XFS filesystem.

Sandbox setup

First, to experiment with the XFS filesystem, I decided to create a testbench instead of working with critical data on a disk. This testbench is made up of an Ubuntu VM with an attached virtual disk that provides raw storage. You can use VirtualBox to create the VM and then create an additional disk to attach to it.

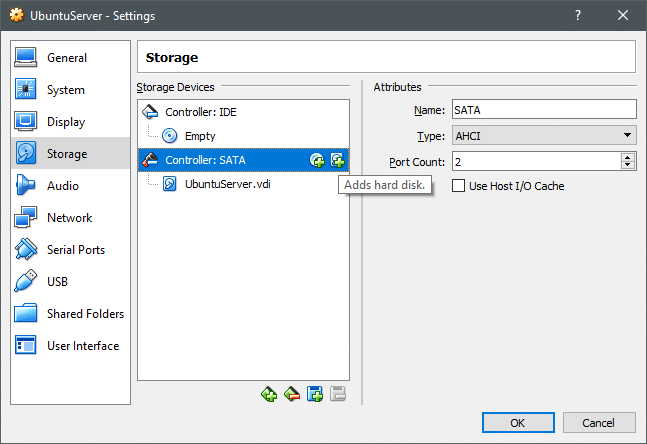

Go to your VM’s settings and, under Settings → Storage, create a new disk and add it to the SATA controller. Make sure your VM is turned off when you do this.

Once the new disk is created, turn on the VM and open up the terminal. The command lsblk lists all the available block devices.

sda 8:0 0 60G 0 disk

├─sda1 8:1 0 1M 0 part

└─sda2 8:2 0 60G 0 part /

sdb 8:16 0 100G 0 disk

sr0 11:0 1 1024M 0 rom

Apart from the main block device sda, where the OS is installed, there’s now a new sdb device. Let’s quickly create a partition on it and format it with the XFS filesystem.

Open up the parted utility on the new disk as the root user:

$ parted /dev/sdb

Let’s create a partition table first using mklabel, and then create a single partition out of the entire disk (which is 107GB in size). You can verify that the partition has been created by listing it with the print command:

(parted) mkpart primary 0 107

(parted) print

(parted) quit
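
The same partitioning steps can also be run non-interactively. The following is a minimal sketch, assuming a GPT label (the text above does not say which label type was used) and using percentages for the partition bounds so that the result does not depend on the unit parted is currently set to. The function name partition_whole_disk is hypothetical, and running it destroys any existing partition table on the disk it is given.

```shell
#!/usr/bin/env bash
# Sketch: create a partition table and one partition spanning the whole disk.
# WARNING: this wipes the existing partition table on the given device.
# Assumptions: GPT label; run as root; partition_whole_disk is a made-up name.
partition_whole_disk() {
    # --script suppresses interactive prompts; -a optimal aligns the partition.
    parted --script -a optimal "$1" \
        mklabel gpt \
        mkpart primary 0% 100% \
        print
}

# Example (as root): partition_whole_disk /dev/sdb
```

Nothing happens until the function is called, which leaves room to double-check the device name first.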

Okay, now we can see using lsblk that there’s a new block device under the sdb device, called sdb1.

Let’s format this storage as XFS and mount it at the /mnt directory. Again, do the following actions as root:

$ mkfs.xfs /dev/sdb1

$ mount /dev/sdb1 /mnt

$ df -h

The last command will print all the mounted filesystems and you can check that /dev/sdb1 is mounted at /mnt.

Next we write a bunch of files as dummy data to defragment here:
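
A minimal sketch of such a command, assuming dd reading from /dev/urandom. The block size and count are assumptions chosen to total 1MB, and the wrapper name write_dummy_file is hypothetical:

```shell
# Write 1024 blocks of 1024 bytes (1MB) of random data to the given path.
# Assumption: dd and /dev/urandom are available, as on a stock Ubuntu install.
write_dummy_file() {
    dd if=/dev/urandom of="$1" bs=1024 count=1024 2>/dev/null
}

# Example (as root, inside the XFS mount): write_dummy_file /mnt/myfile.txt
```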

The above command would write a file myfile.txt of 1MB size. You can automate this command with a simple bash for loop to generate more such files. Spread them across various directories if you like, and delete a few of them at random. Do all of this inside the XFS filesystem (mounted at /mnt) and then check for fragmentation. All of this is, of course, optional.
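
The loop described above might look like the following sketch. The directory names, file names, and counts are all illustrative assumptions, as is the function name generate_dummy_data. It relies on shuf and xargs -r from GNU coreutils and findutils, which Ubuntu ships by default.

```shell
# Sketch: fill a directory tree with 1MB files, then delete a random subset
# to leave gaps. All names and counts here are illustrative assumptions.
generate_dummy_data() {
    local root="$1"       # directory inside the XFS mount, e.g. /mnt
    local per_dir="$2"    # number of files to create in each subdirectory
    local to_delete="$3"  # number of files to remove at random afterwards
    local dir i

    for dir in a b c; do
        mkdir -p "$root/$dir"
        i=1
        while [ "$i" -le "$per_dir" ]; do
            dd if=/dev/urandom of="$root/$dir/file$i.txt" bs=1024 count=1024 2>/dev/null
            i=$((i + 1))
        done
    done

    # Pick files at random with shuf and delete them.
    find "$root" -type f -name 'file*.txt' | shuf -n "$to_delete" | xargs -r rm -f
}

# Example (as root): generate_dummy_data /mnt 100 50
```

With the example arguments this writes 300 files of 1MB each across three directories and then removes 50 of them at random.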

Defragment your filesystem

The first thing we need to do is figure out how to check the amount of fragmentation. For example, the XFS filesystem we created earlier is on the device node /dev/sdb1. We can use the utility xfs_db (which stands for XFS debugging) to check the level of fragmentation.
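
A minimal sketch of that check, assuming the filesystem lives on the partition /dev/sdb1 created earlier. The wrapper name check_fragmentation is hypothetical:

```shell
# Report the fragmentation level of an XFS filesystem without modifying it.
check_fragmentation() {
    # -r opens the device read-only; -c frag runs the frag command and exits.
    xfs_db -r -c frag "$1"
}

# Example (as root): check_fragmentation /dev/sdb1
```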

The -c flag takes various commands, among which is the frag command to check the level of fragmentation. The -r flag is used to make sure that the operation is entirely read-only.

If we find that there is any fragmentation in this filesystem we run the xfs_fsr command on the device node:
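
A minimal sketch of that step, assuming the filesystem on /dev/sdb1 is still mounted (xfs_fsr works on mounted filesystems). The wrapper name defragment_xfs is hypothetical, and the -v flag is an optional addition for verbose output:

```shell
# Reorganize (defragment) the files on a mounted XFS filesystem.
defragment_xfs() {
    # -v prints progress as files are processed.
    xfs_fsr -v "$1"
}

# Example (as root): defragment_xfs /dev/sdb1
```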

This last command is all there is to defragmenting your filesystem. You can add it as a cron job so that it runs regularly, but doing that for XFS makes little sense: the extent-based allocation of XFS ensures that problems such as fragmentation stay at a minimum.

Use Cases

The use cases where you need to worry the most about filesystem fragmentation involve applications where a lot of small chunks of data are written and rewritten. A database is a classic example of this, and databases are notorious for leaving lots and lots of “holes” in your storage. Storage blocks are not filled contiguously, which makes the amount of usable space smaller and smaller over time.

The problem shows up not only as reduced usable space but also as reduced IOPS, which might hurt your application’s performance. Having a script that continuously monitors the fragmentation level is a conservative way of maintaining the system. You don’t want an automated script to randomly start defragmenting your filesystem, especially when it is being used at peak load.
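
A monitoring script of this kind could be sketched as follows. It only reports and never starts a defragmentation run. It assumes that xfs_db prints a line of the form "actual N, ideal M, fragmentation factor P%"; the function names and the threshold are hypothetical.

```shell
# Sketch: warn when fragmentation crosses a threshold, without defragmenting.
# Assumption: xfs_db prints a line like
#   actual 120, ideal 100, fragmentation factor 16.67%

# Extract the numeric fragmentation factor from xfs_db output on stdin.
parse_frag_factor() {
    sed -n 's/.*fragmentation factor \([0-9.]*\)%.*/\1/p' | head -n 1
}

# Print a warning if the factor for the device exceeds the threshold (percent).
report_fragmentation() {
    local device="$1" threshold="$2" factor
    factor="$(xfs_db -r -c frag "$device" | parse_frag_factor)"
    if [ -z "$factor" ]; then
        echo "could not read fragmentation factor for $device" >&2
        return 1
    fi
    # awk handles the floating-point comparison that the shell cannot do.
    if awk -v f="$factor" -v t="$threshold" 'BEGIN { exit !(f > t) }'; then
        echo "WARNING: $device fragmentation is ${factor}% (threshold ${threshold}%)"
    else
        echo "OK: $device fragmentation is ${factor}%"
    fi
}

# Example (as root): report_fragmentation /dev/sdb1 20
```

Because the script only prints a status line, it is safe to schedule during peak load, and the decision to run xfs_fsr stays with the administrator.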