This guide will illustrate the process of debugging chains in LangChain.

How to Debug Chains in LangChain?

LangChain lets developers build applications on top of Large Language Models (LLMs), such as the models available through the OpenAI environment, by connecting prompts, models, and memory into chains. When a chain misbehaves, its raw output can be hard to read, which makes the problem difficult to trace. To learn how to debug chains in LangChain and get their output in a more readable form, go through this guide:

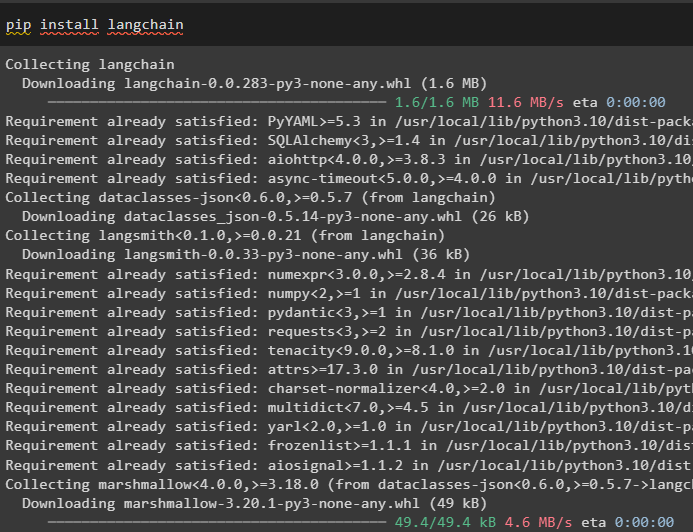

Step 1: Install Modules

First, install the LangChain framework and its dependencies using the pip command (pip install langchain):

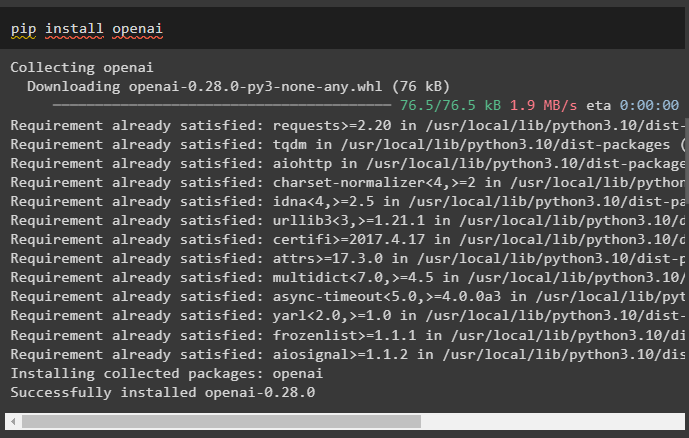

After that, install the OpenAI module (pip install openai), which gives access to the OpenAI models used to build LLM applications such as chatbots that interact with humans:

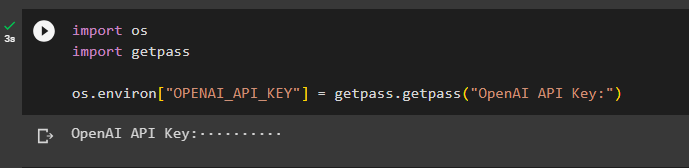

Now, simply set up the OpenAI environment using the API key from its account:

import os

import getpass

# Prompt for the key without echoing it, then expose it to the OpenAI client
os.environ["OPENAI_API_KEY"] = getpass.getpass("OpenAI API Key:")
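When a notebook is rerun, being prompted for the key every time can get tedious. The following is a minimal sketch, assuming a hypothetical helper named ensure_api_key (it is not part of LangChain or OpenAI), that only prompts when the variable is not already set:

```python
import getpass
import os


def ensure_api_key(env_var="OPENAI_API_KEY", prompt_fn=getpass.getpass):
    """Return the key stored in env_var, prompting only if it is missing.

    ensure_api_key is a hypothetical helper written for this guide.
    """
    key = os.environ.get(env_var)
    if not key:
        # Ask interactively without echoing the key to the screen
        key = prompt_fn("OpenAI API Key:")
        os.environ[env_var] = key
    return key
```

Calling ensure_api_key() once near the top of the notebook has the same effect as the snippet above, but it skips the prompt if the key was exported earlier.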

Step 2: Import Libraries

The next step is to import the libraries from the modules installed to get started with the process of debugging chains in LangChain:

from langchain.chains.llm import LLMChain

from langchain.memory.buffer import ConversationBufferMemory

from langchain.chat_models import ChatOpenAI

from langchain.prompts import PromptTemplate

Step 3: Configure LLM Chains

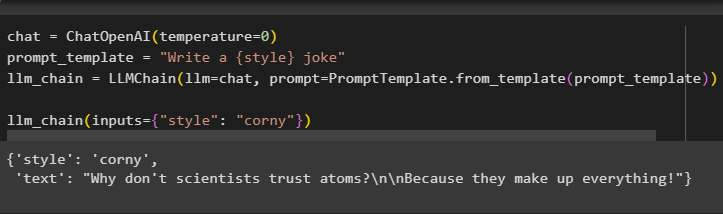

Once the libraries are imported successfully, build the LLM chain by configuring a prompt template for the queries, and then call the chain with the input values:

chat = ChatOpenAI(temperature=0)

prompt_template = "Write a {style} joke"

llm_chain = LLMChain(llm=chat, prompt=PromptTemplate.from_template(prompt_template))

llm_chain(inputs={"style": "corny"})

Running the above code returns a joke in the “corny” style that was passed into the prompt_template variable.

The chain returns a dictionary, so the response text appears in its raw form with escape characters such as “\n” instead of actual line breaks. Output that reads like ordinary text is easier to inspect, so the next step explains how to get it:
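One way to see why the raw result looks cluttered is to compare the representation of the text with its printed form. The sketch below does not call the model: sample_result is a made-up stand-in shaped like the dictionary an LLMChain returns (the input variables plus a "text" key), and the joke in it is invented for illustration only:

```python
# Hypothetical stand-in for the dictionary returned by llm_chain(...);
# the joke text is invented for illustration, not real model output.
sample_result = {
    "style": "corny",
    "text": "Why did the tomato turn red?\n\nBecause it saw the salad dressing!",
}

# repr() shows the escape sequences literally, as in the notebook output
raw_view = repr(sample_result["text"])
print(raw_view)

# print() turns "\n" into real line breaks, which reads more naturally
print(sample_result["text"])

# Splitting on line breaks and dropping blanks gives the individual lines
lines = [line for line in sample_result["text"].splitlines() if line]
```

With a real chain, the same idea applies to the value stored under the "text" key of the returned dictionary.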

Step 4: Debugging Chains

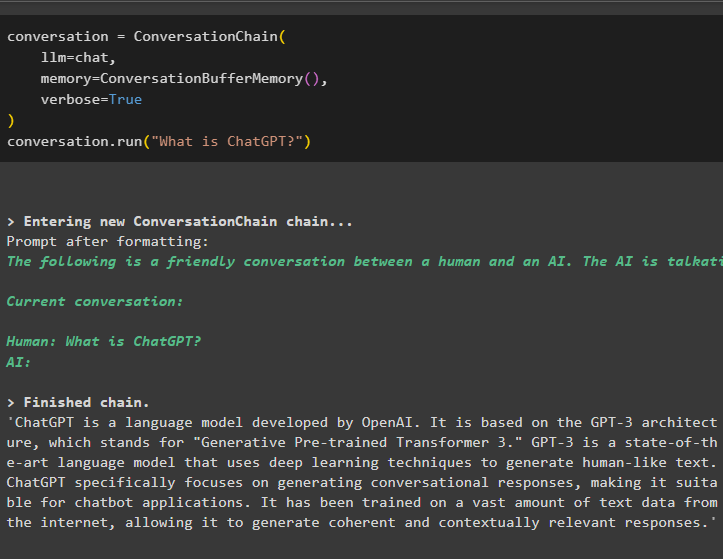

Now, create a ConversationChain object with the verbose argument set to True and run the chain. The verbose flag makes the chain print the fully formatted prompt and its progress while it runs, so each step can be read as plain text:

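from langchain.chains import ConversationChain

# verbose=True prints the formatted prompt each time the chain runs
conversation = ConversationChain(

    llm=chat,

    memory=ConversationBufferMemory(),

    verbose=True

)

conversation.run("What is ChatGPT?")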

The output shows the formatted prompt that was sent to the model, followed by the answer written as a paragraph in a more readable form.
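To make the effect of the flag concrete, here is a toy sketch of a chain with a verbose switch. It is not LangChain's implementation: ToyChain and fake_llm are hypothetical names, and the fake model simply returns a canned string so the example runs without an API key:

```python
def fake_llm(prompt):
    """Hypothetical stand-in for a chat model; returns a canned reply."""
    return "ChatGPT is a conversational AI model."


class ToyChain:
    """Toy chain (not LangChain code) showing what a verbose flag can do."""

    def __init__(self, llm, template, verbose=False):
        self.llm = llm
        self.template = template
        self.verbose = verbose

    def run(self, **inputs):
        # Fill the placeholders in the template with the caller's inputs
        prompt = self.template.format(**inputs)
        if self.verbose:
            # Echo the exact prompt sent to the model, which is the
            # information that helps most when debugging a chain
            print("Prompt after formatting:")
            print(prompt)
        return self.llm(prompt)


chain = ToyChain(fake_llm, "Human: {input}\nAI:", verbose=True)
answer = chain.run(input="What is ChatGPT?")
print(answer)
```

Seeing the exact prompt is usually the quickest way to spot a missing variable or an unexpected piece of conversation history, which is why a verbose switch is a common first step when debugging.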

That is all about the process of debugging the chains in LangChain.

Conclusion

To debug chains in LangChain, install the required modules and import the classes needed to build and debug chains, such as ConversationChain and ConversationBufferMemory. Once the libraries are imported successfully, build the LLM chain or chatbot and have a conversation with it. The raw output can have a program-like feel, so set verbose=True on the ConversationChain to print the formatted prompt and get output that is easier for humans to read. This guide has explained the process of debugging chains using the LangChain framework.