Large Language Models (LLMs) and chatbots are built to interact with humans in natural languages such as English. The model has to be trained so that it can understand queries and prompts effectively. A bot that returns replies without understanding the question is not very useful, and people tend to avoid such bots. Prompt templates help here: they give the model a consistent structure for the queries it has to handle.

This post will illustrate the process of building a custom prompt template in LangChain.

How to Create a Custom Prompt Template in LangChain?

LangChain already provides multiple prompt templates, and the user can also build a customized template to suit the purpose of the chatbot or LLM. A custom prompt template can meet specific requirements more effectively than the predefined templates. Since each user can have a different set of requirements, the prompt template should be built accordingly.

To create a custom prompt template, simply go through this guide:

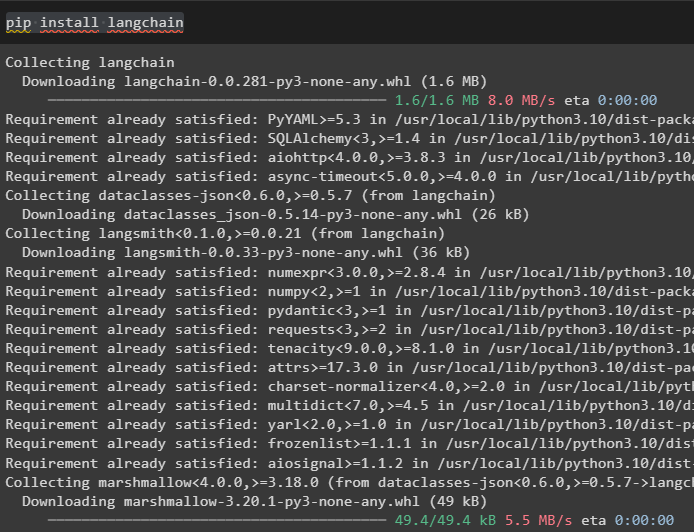

Step 1: Install Modules

First, install the LangChain module (for example, with pip install langchain) to start the process of customizing the prompt template:

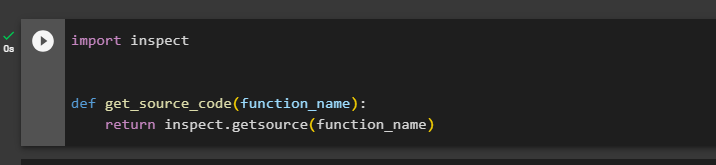

Step 2: Getting Source Code

After installing the LangChain framework, define a function that returns the source code of whichever function is passed to it as the argument. The following code uses Python's built-in “inspect” module to define the function for getting the source code:

import inspect

def get_source_code(function_name):
    # Return the source code of the given function object
    return inspect.getsource(function_name)
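As a quick check of this helper, here is a minimal sketch that calls it on a standard-library function. The choice of textwrap.dedent as the target is only an assumption for illustration; any function whose source file is available should work. Note that, despite the parameter name, the helper expects the function object itself rather than its name as a string.

```python
import inspect
import textwrap


def get_source_code(function_name):
    # Despite the parameter name, this expects the function object itself
    return inspect.getsource(function_name)


# textwrap.dedent is used here only as a convenient standard-library target
source = get_source_code(textwrap.dedent)

# Show the first line of the retrieved source (the function signature)
print(source.splitlines()[0])
```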

Step 3: Building Custom Prompt Template

Now, create a custom prompt template that takes a function as the input and builds a prompt containing the function's name and source code, asking the model to explain the code in natural language:

from langchain.prompts import StringPromptTemplate
from pydantic import BaseModel, validator

PROMPT = """\
Using the name of the function and its source code, give an explanation of the code in the English language
Function Name: {function_name}
Source Code:
{source_code}
Explanation:
"""

# Configure the class that builds a prompt asking to explain the source code of the given function
class FunctionExplainerPromptTemplate(StringPromptTemplate, BaseModel):
    @validator("input_variables")
    def validate_input_variables(cls, v):
        """Validate that the input variables are correct."""
        if len(v) != 1 or "function_name" not in v:
            raise ValueError("function_name must be the only input_variable")
        return v

    def format(self, **kwargs) -> str:
        # Get the source code of the function passed as function_name
        source_code = get_source_code(kwargs["function_name"])
        # Fill the template with the function's name and its source code
        prompt = PROMPT.format(
            function_name=kwargs["function_name"].__name__, source_code=source_code
        )
        return prompt

    def _prompt_type(self):
        return "function-explainer"

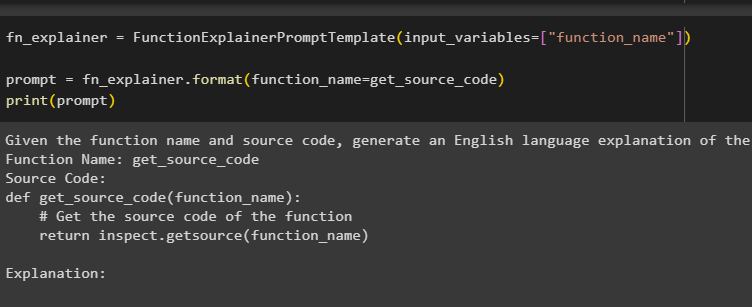
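The validator in the class accepts exactly one input variable, and it must be named function_name. To make that rule easier to see, the following sketch copies the same check into a plain Python function and tries it on a few lists. It does not use LangChain or pydantic, and the candidate lists are hypothetical inputs chosen only for illustration.

```python
def validate_input_variables(v):
    """Stand-alone copy of the check performed by the validator."""
    if len(v) != 1 or "function_name" not in v:
        raise ValueError("function_name must be the only input_variable")
    return v


# Hypothetical input_variables lists, chosen only to exercise the rule
candidates = [
    ["function_name"],
    ["source_code"],
    ["function_name", "source_code"],
    [],
]

results = {}
for candidate in candidates:
    try:
        validate_input_variables(candidate)
        results[tuple(candidate)] = "accepted"
    except ValueError as err:
        results[tuple(candidate)] = f"rejected: {err}"

for key, value in results.items():
    print(key, "->", value)
```

Only the first list passes: the others either have the wrong name, too many entries, or none at all.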

Step 4: Testing the Template

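Now that the template is configured, create an instance of it and use it to build the prompt that asks for an English-language explanation of a function. Here, the get_source_code function itself is passed as the input:

fn_explainer = FunctionExplainerPromptTemplate(input_variables=["function_name"])
prompt = fn_explainer.format(function_name=get_source_code)
print(prompt)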
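To preview the shape of the generated prompt without LangChain installed, the formatting step can be tried with the standard library alone. This is only a sketch of the substitution done inside the format method: format_prompt is a hypothetical stand-in for the class, and textwrap.dedent is an arbitrary target function.

```python
import inspect
import textwrap

PROMPT = """\
Using the name of the function and its source code, give an explanation of the code in the English language
Function Name: {function_name}
Source Code:
{source_code}
Explanation:
"""


def format_prompt(function):
    # Same substitution as the format method, without the LangChain base class
    return PROMPT.format(
        function_name=function.__name__,
        source_code=inspect.getsource(function),
    )


# textwrap.dedent is an arbitrary standard-library function used for the demo
prompt = format_prompt(textwrap.dedent)
print(prompt)
```

The printed text starts with the instruction line, followed by the function name, its source code, and a trailing "Explanation:" line for the model to complete.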

That’s all about the process of creating a custom prompt template in LangChain.

Conclusion

To build a custom prompt template in LangChain, start by installing the LangChain framework to get the required libraries for customizing prompt templates. After that, configure a custom prompt template using Python's inspect module and LangChain's StringPromptTemplate class so that it takes a function as input and builds a prompt asking for an explanation of its source code. This guide has illustrated the process of creating a custom prompt template in LangChain.