Quick Outline

This post will demonstrate the following:

How to Create a Custom Agent with Tool Retrieval in LangChain

- Installing Frameworks

- Setting up Environments

- Importing Libraries

- Setting up Tools

- Configuring Tool Retrieval

- Building Vector Store for Tool Retrieval

- Testing the Tool Retrieval

- Designing Prompt Template

- Customizing Output Parser

- Setting up LLM

- Building the Agent

- Testing the Agent

How to Create a Custom Agent With Tool Retrieval in LangChain?

A custom agent lets you choose and configure the tools it works with. Tool retrieval becomes useful when there are too many tools to describe in every prompt: instead of listing all of them, the agent retrieves only the tools relevant to the current query at run time. This guide uses one genuine tool called “Search” and 99 fake tools to check that the agent can locate the right tool for a task.

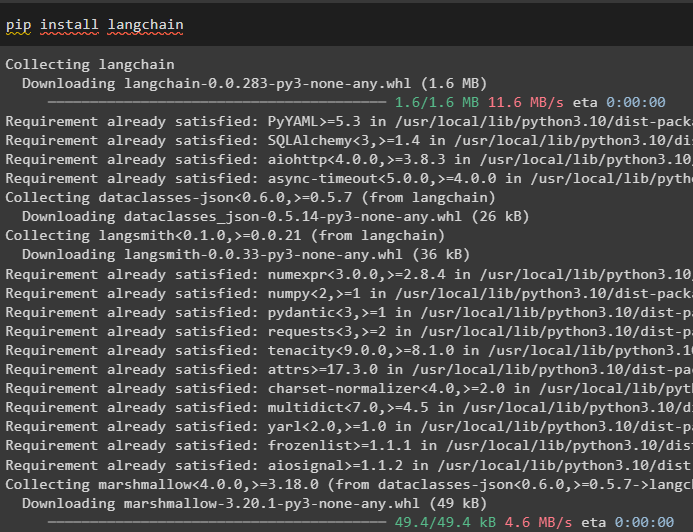

Step 1: Installing Frameworks

First, install the LangChain module, which provides the agent, prompt, and tool classes used in this guide:

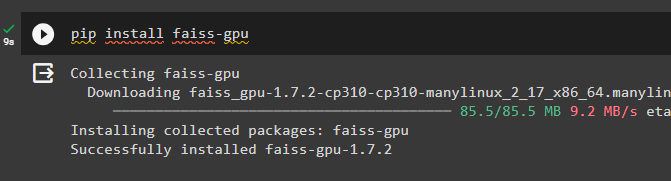

Install FAISS, a library for efficient similarity search over vectors; it backs the vector store used for tool retrieval:

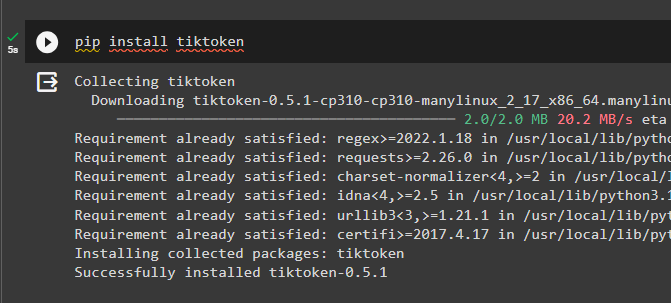

Install the tiktoken tokenizer, which the OpenAI embeddings class uses to split text into tokens:

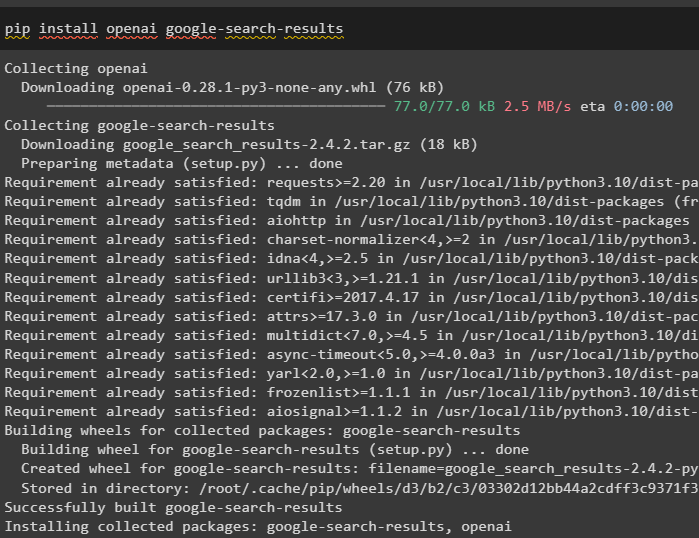

Install the google-search-results module, the SerpAPI client that the Search tool uses to fetch results from Google:

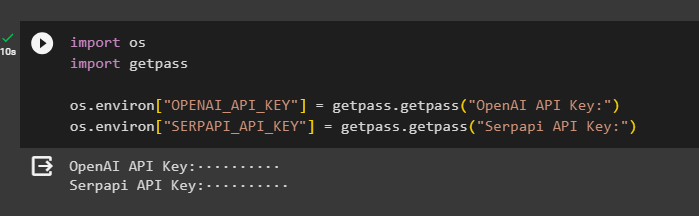

Step 2: Setting up Environments

After installing the modules, set up the OpenAI and SerpAPI credentials. Sign in to each service to obtain an API key, then store the keys in environment variables:

import os

import getpass

os.environ["OPENAI_API_KEY"] = getpass.getpass("OpenAI API Key:")

os.environ["SERPAPI_API_KEY"] = getpass.getpass("Serpapi API Key:")
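Entering both keys on every run can get tedious. As a minimal sketch, the hypothetical helper below (ensure_env_key is not part of LangChain or of the code above) prompts for a key only when the environment variable is not already set. The prompt parameter is an assumption added so that the function can be exercised without a terminal:

```python
import getpass
import os
from typing import Callable


def ensure_env_key(name: str, prompt: Callable[[str], str] = getpass.getpass) -> str:
    """Return the value of the environment variable `name`.

    The user is prompted only if the variable is unset or empty,
    and the entered value is stored back into os.environ.
    """
    value = os.environ.get(name)
    if not value:
        value = prompt(f"{name}: ")
        os.environ[name] = value
    return value


# Typical interactive use (commented out so the sketch never blocks on input):
# ensure_env_key("OPENAI_API_KEY")
# ensure_env_key("SERPAPI_API_KEY")
```

Keys that are already exported in the shell are then picked up as they are, and getpass still hides anything typed at the prompt.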

Step 3: Importing Libraries

Now, import the LangChain classes needed to build the agent with tool retrieval:

from langchain.agents import AgentExecutor

from langchain.agents import Tool

from langchain.schema import AgentAction

from langchain.agents import LLMSingleActionAgent

from langchain.prompts import StringPromptTemplate

from langchain.agents import AgentOutputParser

from langchain.llms import OpenAI

import re

from langchain.utilities import SerpAPIWrapper

from langchain.chains import LLMChain

from typing import Union

from langchain.schema import AgentFinish

from typing import Callable, List

Step 4: Setting up Tools

Once the libraries are imported, create a SerpAPIWrapper() instance and wrap its run method in a Tool() named Search; this is the one genuine tool. Then define fake_func() and use it to build 99 fake tools. Finally, combine them in ALL_TOOLS, the list of all hundred tools that the retriever will choose from:

search = SerpAPIWrapper()

search_tool = Tool(

    name="Search",

    func=search.run,

    description="useful for when you need to answer questions about current events",

)

# Placeholder function shared by all of the fake tools

def fake_func(inp: str) -> str:

    return "foo"

fake_tools = [

    Tool(

        name=f"foo-{i}",

        func=fake_func,

        description=f"a silly function that you can use to get more information about the number {i}",

    )

    for i in range(99)

]

ALL_TOOLS = [search_tool] + fake_tools

Step 5: Configuring Tool Retrieval

Once the tools are configured, set up tool retrieval. Each tool description is converted into an embedding (a numeric vector) and stored as a document in a FAISS vector store, so the tools most relevant to a query can be found by similarity search:

# Converts text into embedding vectors

from langchain.embeddings import OpenAIEmbeddings

# Wraps a piece of text and its metadata for storage in the vector store

from langchain.schema import Document

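Loop over ALL_TOOLS to create one document per tool. The page content is the tool description, and the metadata records the position of the tool in ALL_TOOLS so that the tool can be recovered after retrieval:

docs = [

    Document(page_content=t.description, metadata={"index": i})

    for i, t in enumerate(ALL_TOOLS)

]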

Step 6: Building Vector Store for Tool Retrieval

Build the vector_store from the documents and their embeddings:

from langchain.vectorstores import FAISS

vector_store = FAISS.from_documents(docs, OpenAIEmbeddings())

Create a retriever from the vector store and wrap it in a get_tools() function that returns the tools whose descriptions best match a query:

retriever = vector_store.as_retriever()

# Fetch the most relevant documents, then map each one back to its tool through the stored index

def get_tools(query):

    docs = retriever.get_relevant_documents(query)

    return [ALL_TOOLS[d.metadata["index"]] for d in docs]

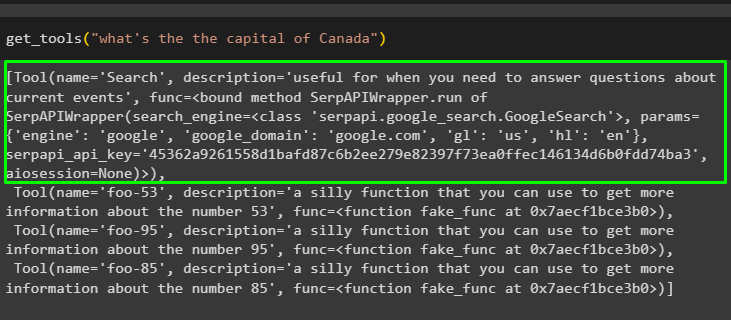

Step 7: Testing the Tool Retrieval

After configuring the retriever, test it by passing a question to the get_tools() function, for example a question about the weather:

get_tools("whats the weather?")

The retriever returns the matching tools with the correct one at the top of the list. No answer has been produced yet, because the agent itself has not run.
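To see what the retriever does without calling any external service, the lookup can be sketched with the standard library alone. This is only an illustration: ToyTool, bag_of_words(), cosine(), and toy_get_tools() are hypothetical names, word counts stand in for the OpenAI embeddings, and a sorted list stands in for the FAISS index. The cutoff k=4 is likewise an assumption:

```python
import math
import re
from collections import Counter
from dataclasses import dataclass
from typing import List


@dataclass
class ToyTool:
    # Hypothetical stand-in for a LangChain Tool: only a name and a description
    name: str
    description: str


def bag_of_words(text: str) -> Counter:
    """Count lowercase alphabetic words; a crude substitute for an embedding."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def toy_get_tools(query: str, tools: List[ToyTool], k: int = 4) -> List[ToyTool]:
    """Return the k tools whose descriptions are most similar to the query."""
    q = bag_of_words(query)
    ranked = sorted(
        tools, key=lambda t: cosine(q, bag_of_words(t.description)), reverse=True
    )
    return ranked[:k]


toy_tools = [
    ToyTool("Search", "useful for when you need to answer questions about current events")
] + [
    ToyTool(f"foo-{i}", f"a silly function that you can use to get more information about the number {i}")
    for i in range(99)
]

top = toy_get_tools("what are the current events today?", toy_tools)
print([t.name for t in top])
```

Word counts only match a query that shares words with a description. The real retriever relies on embeddings because they can also relate a query to a description by meaning, which is what lets a weather question surface the Search tool.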

Step 8: Designing Prompt Template

To get an answer from the agent, define the prompt template that sets out the structure the model must follow. The retrieved tools are inserted at {tools} and their names at {tool_names}:

template = """Answer the following questions as best you can, but speaking as a pirate might speak. You have access to the following tools:

{tools}

Use the following format:

Question: the input question you must answer

Thought: you should always think about what to do

Action: the action to take, should be one of [{tool_names}]

Action Input: the input to the action

Observation: the result of the action

... (this Thought, Action, Action Input, and Observation can repeat N times)

Thought: I now know the final answer

Final Answer: the final answer to the original input question

Begin! Remember to speak as a pirate when giving your final answer using lots of "Arg"s

Question: {input}

{agent_scratchpad}"""

The fields in the template work as follows:

- Question: the input string the agent has to answer

- Thought: the reasoning of the model about what to do next

- Action: the tool to call, which must be one of the retrieved tool names

- Action Input: the input passed to that tool

- Observation: the result returned by the tool

- The Thought, Action, Action Input, and Observation steps repeat until the model has enough information to answer

- Final Answer: the reply returned to the user, phrased here in a pirate voice

- {input} and {agent_scratchpad} are filled in at run time with the question and the steps taken so far

Build a custom prompt template class. Its format() method turns the intermediate_steps (the actions taken so far and their observations) into the agent_scratchpad, then calls tools_getter on the user input to fill in the names and descriptions of the retrieved tools:

class CustomPromptTemplate(StringPromptTemplate):

    # The template string to fill in

    template: str

    # Function that returns the relevant tools for a query

    tools_getter: Callable

    def format(self, **kwargs) -> str:

        # Pull out the (AgentAction, observation) pairs collected so far

        intermediate_steps = kwargs.pop("intermediate_steps")

        thoughts = ""

        # Rebuild the scratchpad from each action log and its observation

        for action, observation in intermediate_steps:

            thoughts += action.log

            thoughts += f"\nObservation: {observation}\nThought: "

        kwargs["agent_scratchpad"] = thoughts

        # Retrieve only the tools relevant to this input

        tools = self.tools_getter(kwargs["input"])

        # List each retrieved tool as "name: description" for the prompt

        kwargs["tools"] = "\n".join(

            [f"{tool.name}: {tool.description}" for tool in tools]

        )

        # Comma-separated tool names for the Action line of the template

        kwargs["tool_names"] = ", ".join([tool.name for tool in tools])

        return self.template.format(**kwargs)
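The scratchpad logic is easier to follow in isolation. The sketch below repeats the loop from format() using only the standard library; ToyAction is a hypothetical stand-in for AgentAction, build_scratchpad() is an illustrative name, and the observation text is invented sample data rather than real search output:

```python
from typing import List, NamedTuple, Tuple


class ToyAction(NamedTuple):
    # Hypothetical stand-in for langchain.schema.AgentAction
    tool: str
    tool_input: str
    log: str


def build_scratchpad(steps: List[Tuple[ToyAction, str]]) -> str:
    """Join each action log with its observation, ending on an open 'Thought: '."""
    thoughts = ""
    for action, observation in steps:
        thoughts += action.log
        thoughts += f"\nObservation: {observation}\nThought: "
    return thoughts


steps = [
    (
        ToyAction(
            tool="Search",
            tool_input="weather in London",
            log="Thought: I need to look this up\nAction: Search\nAction Input: weather in London",
        ),
        "Cloudy, 12 degrees",  # invented observation, for illustration only
    )
]

scratchpad = build_scratchpad(steps)
print(scratchpad)
```

Because the scratchpad ends with "Thought: ", the model continues from that point on its next call instead of starting over.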

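Define the prompt variable by instantiating CustomPromptTemplate with the template, tools_getter, and input_variables arguments:

prompt = CustomPromptTemplate(

    template=template,

    tools_getter=get_tools,

    # agent_scratchpad, tools, and tool_names are omitted because format() generates them

    input_variables=["input", "intermediate_steps"],

)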

Step 9: Customizing Output Parser

Build the output parser, which converts the raw text from the model into one of two objects. AgentFinish means the agent is done and carries the final answer to return to the user. AgentAction means the agent still has work to do and names the tool to call next along with its input:

class CustomOutputParser(AgentOutputParser):

    # Turn the raw LLM text into either a final answer or the next tool call

    def parse(self, llm_output: str) -> Union[AgentAction, AgentFinish]:

        # The agent is done once the model emits a final answer

        if "Final Answer:" in llm_output:

            return AgentFinish(

                return_values={"output": llm_output.split("Final Answer:")[-1].strip()},

                log=llm_output,

            )

        # Otherwise, extract the tool name and the tool input

        regex = r"Action\s*\d*\s*:(.*?)\nAction\s*\d*\s*Input\s*\d*\s*:[\s]*(.*)"

        match = re.search(regex, llm_output, re.DOTALL)

        # Fail loudly if the output matches neither format

        if not match:

            raise ValueError(f"Could not parse LLM output: `{llm_output}`")

        action = match.group(1).strip()

        action_input = match.group(2)

        return AgentAction(

            tool=action, tool_input=action_input.strip(" ").strip('"'), log=llm_output

        )

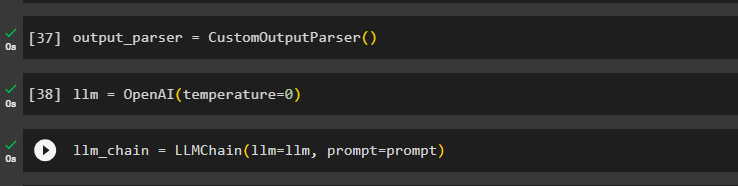
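The parsing rules can be tried out on sample strings without LangChain installed. The following sketch reuses the same regular expression but returns plain tuples in place of AgentAction and AgentFinish; parse_step() and the sample model outputs are hypothetical and exist only for illustration:

```python
import re
from typing import Optional, Tuple

ACTION_REGEX = r"Action\s*\d*\s*:(.*?)\nAction\s*\d*\s*Input\s*\d*\s*:[\s]*(.*)"


def parse_step(llm_output: str) -> Tuple[str, Optional[str], str]:
    """Return ("finish", None, answer) or ("action", tool_name, tool_input)."""
    if "Final Answer:" in llm_output:
        return ("finish", None, llm_output.split("Final Answer:")[-1].strip())
    match = re.search(ACTION_REGEX, llm_output, re.DOTALL)
    if not match:
        raise ValueError(f"Could not parse LLM output: `{llm_output}`")
    tool = match.group(1).strip()
    # Drop surrounding spaces and double quotes from the tool input
    tool_input = match.group(2).strip(" ").strip('"')
    return ("action", tool, tool_input)


action_text = 'Thought: I should search\nAction: Search\nAction Input: "weather in London"'
finish_text = "Thought: I now know the final answer\nFinal Answer: Arg, it be cloudy"

print(parse_step(action_text))
print(parse_step(finish_text))
```

Checking for "Final Answer:" first matters: a reply that contains both an action and a final answer is treated as finished.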

Define the output_parser variable by instantiating the CustomOutputParser class:

output_parser = CustomOutputParser()

Step 10: Setting up LLM

Now, build the language model with OpenAI(), which reads the OPENAI_API_KEY set in Step 2:

llm = OpenAI(temperature=0)

Build the chain with LLMChain(), passing the llm and prompt variables as its arguments:

llm_chain = LLMChain(llm=llm, prompt=prompt)

Step 11: Building the Agent

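Call get_tools() with a sample query to retrieve the tools the agent is allowed to use, then build the agent with LLMSingleActionAgent(). The stop sequence makes the model stop generating before it writes an Observation, so that the observation comes from the tool and not from the model:

tools = get_tools("whats the weather?")

tool_names = [tool.name for tool in tools]

agent = LLMSingleActionAgent(

    llm_chain=llm_chain,

    output_parser=output_parser,

    stop=["\nObservation:"],

    allowed_tools=tool_names,

)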

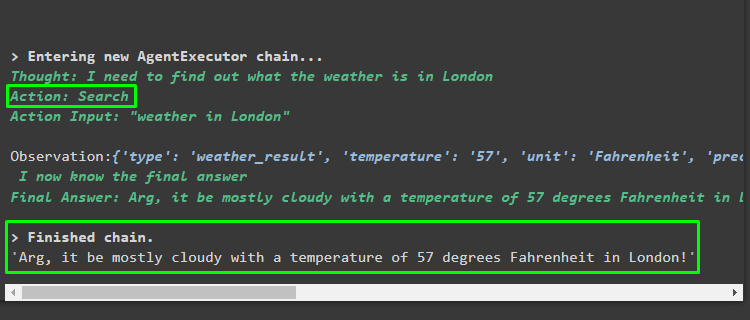

Step 12: Testing the Agent

Build the agent_executor, which runs the agent together with its tools; verbose=True prints each intermediate step:

agent_executor = AgentExecutor.from_agent_and_tools(

    agent=agent, tools=tools, verbose=True

)

Run the agent by passing a question about the weather in London to the agent_executor.run() method:

agent_executor.run("What's the weather in London?")

Output

The output shows that the agent identified the correct tool and used the Search tool to find the answer to the question.
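What the executor does on each run can be approximated with a short loop. The sketch below is not the LangChain implementation: run_toy_agent() and scripted_llm() are hypothetical, the "model" is a scripted function, the Search tool returns a placeholder string, and the max_steps limit is an assumption:

```python
import re
from typing import Callable, Dict

ACTION_REGEX = r"Action\s*\d*\s*:(.*?)\nAction\s*\d*\s*Input\s*\d*\s*:[\s]*(.*)"


def run_toy_agent(
    llm: Callable[[str], str],
    tools: Dict[str, Callable[[str], str]],
    question: str,
    max_steps: int = 5,
) -> str:
    """Alternate between the model and the tools until a final answer appears."""
    scratchpad = ""
    for _ in range(max_steps):
        output = llm(f"Question: {question}\n{scratchpad}")
        if "Final Answer:" in output:
            return output.split("Final Answer:")[-1].strip()
        match = re.search(ACTION_REGEX, output, re.DOTALL)
        if not match:
            raise ValueError(f"Could not parse LLM output: `{output}`")
        tool_name = match.group(1).strip()
        tool_input = match.group(2).strip(" ").strip('"')
        # The observation comes from the tool, never from the model
        observation = tools[tool_name](tool_input)
        scratchpad += f"{output}\nObservation: {observation}\nThought: "
    raise RuntimeError("Agent stopped: step limit reached")


def scripted_llm(prompt: str) -> str:
    # Stand-in for a real model: it answers once an observation is in the prompt
    if "Observation:" in prompt:
        return "I now know the final answer\nFinal Answer: Arg, it be a placeholder forecast"
    return "I should look this up\nAction: Search\nAction Input: weather in London"


search_stub = {"Search": lambda q: f"placeholder result for '{q}'"}
answer = run_toy_agent(scripted_llm, search_stub, "What's the weather in London?")
print(answer)
```

The scripted model takes exactly one tool step before answering, which mirrors the Thought, Action, Observation, and Final Answer sequence that verbose=True prints for the real agent.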

That’s all about how to create a custom agent with Tool Retrieval in LangChain.

Conclusion

To create a custom agent with Tool Retrieval in LangChain, install the required modules and import the libraries for building the agent. Set up the OpenAI and SerpAPI API keys, then define one real tool and a set of fake tools alongside it. Next, embed the tool descriptions in a FAISS vector store and build a retriever that returns the tools relevant to a query. Finally, configure the prompt template, output parser, and language model, then run the agent through an executor to answer the question. This guide has walked through each of these steps.