Large Language Models (LLMs) are models trained on natural language so that they can understand and generate text. LangChain can stream the responses of these models, printing the output token by token as it is generated instead of waiting for the complete answer. With OpenAI models, this requires an OpenAI API key set as an environment variable and a prompt written in a natural language such as English.

This post demonstrates how to create streaming responses of Large Language Models using LangChain.

How to Create Streaming Responses of LLMs Using LangChain?

To create streaming responses of Large Language Models using LangChain, follow the steps below:

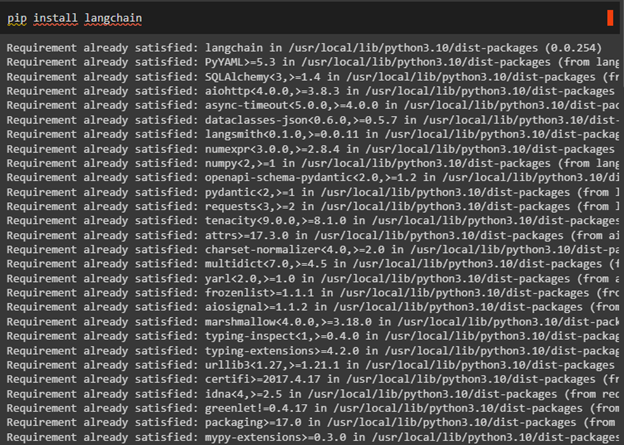

Install Modules

Before you start using the framework in Python, install LangChain, for example with the “pip install langchain” command:

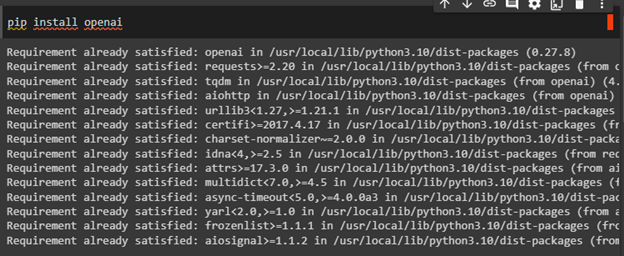

Next, install the OpenAI module, which lets LangChain use your OpenAI credentials to generate streaming responses:

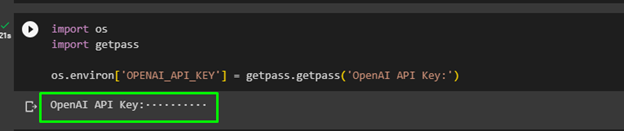
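To confirm that both packages are installed before moving on, a short check like the one below can help. It is a sketch that assumes the distribution names are “langchain” and “openai”, and it uses only the standard library's importlib.metadata module (Python 3.8+).

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Dict, Iterable, Optional


def installed_versions(packages: Iterable[str]) -> Dict[str, Optional[str]]:
    """Map each distribution name to its installed version, or None if it is missing."""
    found: Dict[str, Optional[str]] = {}
    for name in packages:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            # Not installed in the current environment
            found[name] = None
    return found


# Assumed distribution names for the two modules installed above
print(installed_versions(["langchain", "openai"]))
```

A None value in the printed dictionary means the corresponding install step still needs to be run.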

Set OpenAI API Key

After that, set the OPENAI_API_KEY environment variable. The os library lets Python interact with the operating system, including its environment variables, and the “getpass()” method from the getpass library prompts the user to enter a secret, the API key in this case, without echoing it to the screen:

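import os
import getpass

# Prompt for the key without echoing it and store it where the OpenAI client looks for it
os.environ['OPENAI_API_KEY'] = getpass.getpass('OpenAI API Key:')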
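The snippet above prompts for the key every time it runs. A small variation, sketched below on the assumption that you may already have exported OPENAI_API_KEY in your shell, only prompts when the variable is missing. The function name ensure_openai_key() is hypothetical.

```python
import getpass
import os


def ensure_openai_key(prompt: str = "OpenAI API Key:") -> str:
    """Return OPENAI_API_KEY, prompting with getpass only if it is not already set."""
    key = os.environ.get("OPENAI_API_KEY")
    if not key:
        # getpass() reads the key without echoing it to the terminal
        key = getpass.getpass(prompt)
        os.environ["OPENAI_API_KEY"] = key
    return key
```

Calling ensure_openai_key() once at the top of a notebook or script keeps reruns from asking for the key again.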

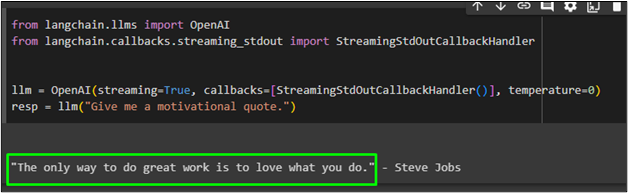

Use StreamingStdOutCallbackHandler to Create Streaming Responses

Import the OpenAI class from LangChain along with StreamingStdOutCallbackHandler, a callback handler that prints each new token to standard output as soon as the model generates it. Then create the “llm” variable with OpenAI(), passing streaming=True to enable streaming, the handler in the callbacks list, and temperature=0 to make the output more deterministic. Finally, call “llm()” with a prompt in natural language and store the result in the “resp” variable:

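from langchain.llms import OpenAI
from langchain.callbacks.streaming_stdout import StreamingStdOutCallbackHandler

# streaming=True sends each token to the handler, which prints it immediately
llm = OpenAI(streaming=True, callbacks=[StreamingStdOutCallbackHandler()], temperature=0)

resp = llm("Give me a motivational quote.")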
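To see what the callback handler does behind the scenes, the following dependency-free sketch mimics the pattern: a model emits its answer piece by piece and calls on_llm_new_token() on every registered handler for each piece. FakeStreamingLLM and TokenCollector are hypothetical stand-ins, not LangChain classes; only the hook name on_llm_new_token mirrors LangChain's callback interface, where StreamingStdOutCallbackHandler writes each token to standard output.

```python
import sys
from typing import Iterable, List, Optional, TextIO


class TokenCollector:
    """Hypothetical handler: echoes each token and keeps a copy (LangChain-style hook name)."""

    def __init__(self, stream: TextIO = sys.stdout) -> None:
        self.stream = stream
        self.tokens: List[str] = []

    def on_llm_new_token(self, token: str, **kwargs) -> None:
        # Called once per token, while the answer is still being produced
        self.tokens.append(token)
        self.stream.write(token)
        self.stream.flush()


class FakeStreamingLLM:
    """Hypothetical model that replays a canned answer one token at a time."""

    def __init__(self, reply_tokens: Iterable[str], callbacks: Optional[list] = None) -> None:
        self.reply_tokens = list(reply_tokens)
        self.callbacks = callbacks or []

    def __call__(self, prompt: str) -> str:
        # The prompt is ignored here; a real LLM would generate tokens from it
        for token in self.reply_tokens:
            for handler in self.callbacks:
                handler.on_llm_new_token(token)
        # The full answer is still returned once streaming has finished
        return "".join(self.reply_tokens)


collector = TokenCollector()
fake_llm = FakeStreamingLLM(["Keep ", "going", "."], callbacks=[collector])
fake_resp = fake_llm("Give me a motivational quote.")
print()  # end the streamed line
```

The design point is that streaming does not change the return value: the caller still receives the complete text, while the handlers see each piece as it arrives.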

The response generated by the LLM using the prompt provided by the user is displayed in the following screenshot:

Use LLM Generate() Method to Create Streaming Responses

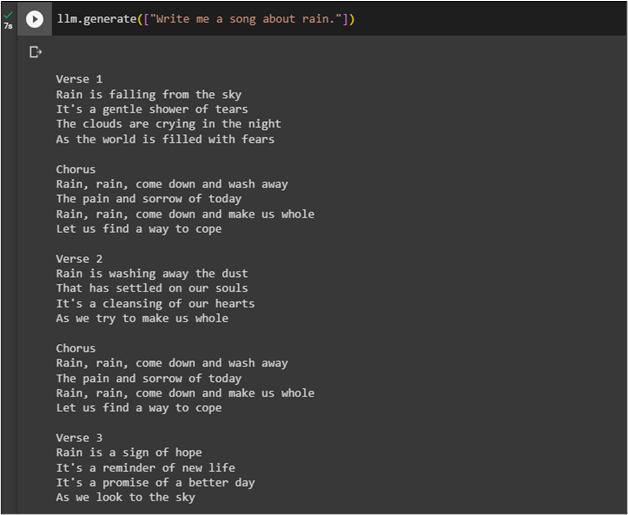

Users can also call the LLM through its generate() method, passing the prompt inside a list.
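A minimal sketch of such a call follows, assuming the same legacy imports (langchain.llms.OpenAI) as the earlier example and a hypothetical prompt about rain. generate() accepts a list of prompts and returns an LLMResult whose generations attribute holds one list of candidates per prompt. The helper name texts_from_generate() and the main() wrapper are introduced here for illustration only.

```python
from typing import List


def texts_from_generate(llm, prompts: List[str]) -> List[str]:
    """Run llm.generate() on a list of prompts and return the first text for each prompt."""
    # result.generations is a list (one entry per prompt) of candidate generations
    result = llm.generate(prompts)
    return [candidates[0].text for candidates in result.generations]


def main() -> None:
    # Imports live here so the helper above works without LangChain installed
    from langchain.callbacks.streaming_stdout import StreamingStdOutCallbackHandler
    from langchain.llms import OpenAI

    llm = OpenAI(streaming=True, callbacks=[StreamingStdOutCallbackHandler()], temperature=0)
    # Tokens print as they stream; the complete texts are also returned at the end
    songs = texts_from_generate(llm, ["Write me a song about rain."])  # hypothetical prompt
    print("\n\nCollected", len(songs), "completion(s)")
```

Call main() with OPENAI_API_KEY set to stream the song to the terminal. The helper only needs an object with a generate() method, which keeps it testable without network access.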

The LLM streams its response to the terminal token by token, producing a song about rain as the prompt asked.

That is all about creating streaming responses of LLMs using LangChain.

Conclusion

To create streaming responses of Large Language Models using LangChain, install the necessary modules and set the OpenAI API key, which is available from OpenAI's official website, as an environment variable. Then create the OpenAI LLM with streaming enabled and attach StreamingStdOutCallbackHandler so that each token is printed as soon as it is generated. This guide has explained the process of creating streaming responses of LLMs using the LangChain framework.