This tutorial shows you how to create a Docker image that bundles Elasticsearch, Kibana, and Logstash (the ELK stack). You can then use the image to run the ELK stack in new containers on any Docker host.

Getting Started

In this guide, we start by installing and setting up Docker on a system. Once Docker is set up, we deploy a container on the same system that runs Elasticsearch, Kibana, and Logstash. Inside that container, we can then tweak and customize the Elastic Stack to our needs.

Once the stack is configured the way we want, we save the container as an image that you can use to create other containers.

Step 1: Install Docker

First, we need to install Docker. For this tutorial, we are using Debian 10 as the base system.

Start by updating the apt package index.
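On Debian, you refresh the package index with apt-get. This is a minimal sketch that assumes your user has sudo rights:

```shell
# Refresh the local apt package index from the configured repositories
sudo apt-get update
```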

Next, install the packages that allow apt to use repositories over HTTPS.

Then add the GPG key for the Docker repository.

From there, add the Docker repository to apt's list of sources.

Now we can update the package index and install Docker:

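```shell
# Refresh the index so apt sees the new Docker repository
sudo apt-get update
# Install the Docker engine, the command-line client, and the containerd runtime
sudo apt-get install docker-ce docker-ce-cli containerd.io
```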

Step 2: Pulling ELK Docker Image

Now that Docker is up and running on the system, we need to pull a Docker image that contains the ELK stack.

For this illustration, we will use the elk-docker image available in the Docker registry.

Next, pull the image to the local system.

Once the image has been pulled from the Docker registry, we can create a container from it.

Once you create the container, all three services (Elasticsearch, Kibana, and Logstash) start automatically and are exposed on the ports published from the container.

With the default port mappings, you can access the services at the following addresses:

- http://localhost:9200 – Elasticsearch

- http://localhost:5601 – Kibana web interface

- localhost:5044 – Logstash Beats input (a Beats protocol endpoint, not a web page)

Step 3: Modifying the Container

Once ELK is up and running in the container, we can add data, modify the settings, and customize it to meet our needs.

For the sake of simplicity, we will add sample data from the Kibana web interface to test it.

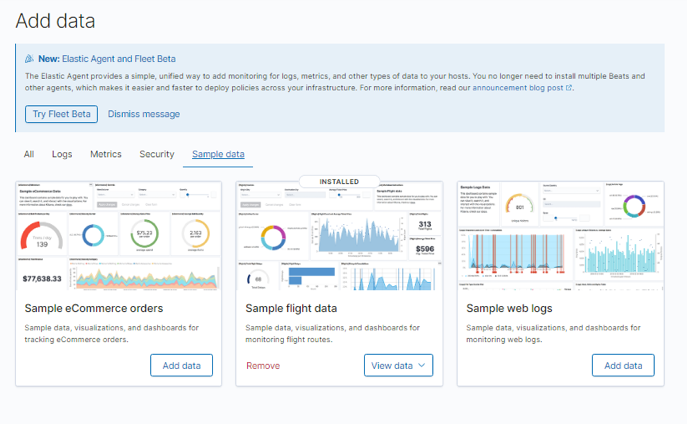

On the main Kibana home page, select Try sample data to import a sample data set.

Choose the data set to import and click Add data.

Now that we have added data to the container and modified it, we can save it as a custom ELK image that we can use to create new Docker containers.

Step 4: Create an ELK Docker Image from the Container

With all the changes in place in the Elastic Stack container, we can save the container as an image with a single command.

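In this example, the new image is named elkstack, tagged version2, and stored locally under the repository name myrepo. The image captures the changes made to the container's filesystem, so you can use it to create other containers. Note, however, that Docker does not include the contents of volumes mounted in the container when it saves the container as an image. Any data the image keeps in a volume (for example, Elasticsearch indices, if the data directory is a volume) will not be part of the new image.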

Conclusion

This quick and simple guide showed you how to create a custom ELK image for Docker that includes your changes. If you are experienced with Docker, you can use a Dockerfile to accomplish the same tasks. It takes more work up front, but the resulting build is easier to reproduce.