LangChain is a framework for building applications powered by language models that solve Natural Language Processing problems, such as understanding or generating text. With LangChain, Large Language Models (LLMs) can be used to build chatbots that answer questions or prompts in natural language. LangChain also provides wrappers for many popular language models, along with prompt templates that can be used to create customized prompts for the LLMs to retrieve information from data.

This guide explains how to create a custom LLM wrapper in LangChain.

How to Create a Custom LLM Wrapper in LangChain?

To create a custom LLM wrapper in LangChain, follow these steps:

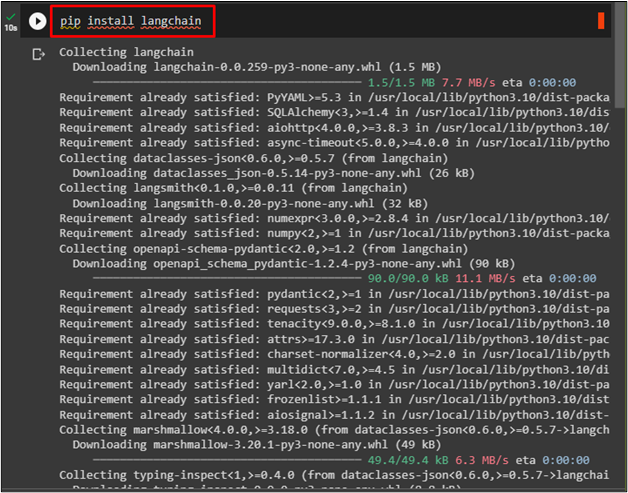

Install LangChain

Before creating a custom wrapper, install the LangChain framework so that all of its required modules are available:

pip install langchain

Import Libraries

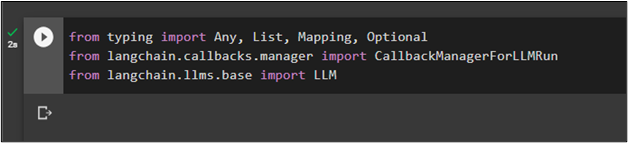

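After installing LangChain, import the LLM base class, which the custom model extends, and the CallbackManagerForLLMRun class, which is used in the type hint of the model's call method. The Any, List, Mapping, and Optional helpers from Python's typing module are also needed for the type hints in the class:

from typing import Any, List, Mapping, Optional

from langchain.callbacks.manager import CallbackManagerForLLMRun
from langchain.llms.base import LLM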

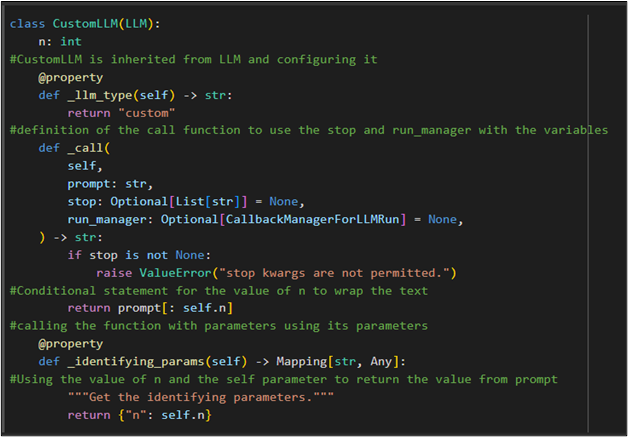

Create a Custom LLM Wrapper

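To configure a custom LLM wrapper, define a class that inherits from LLM. The class implements a _call method, which takes the prompt string (plus optional stop words) and returns a string, and an _llm_type property, which returns a string naming the model type. It can also implement an _identifying_params property that returns the parameters identifying the model. The following code implements all three: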

class CustomLLM(LLM):
    # CustomLLM inherits from LLM; n is the number of prompt characters to return
    n: int

    @property
    def _llm_type(self) -> str:
        # String that identifies this type of model
        return "custom"

    # _call receives the prompt plus the optional stop and run_manager arguments
    def _call(
        self,
        prompt: str,
        stop: Optional[List[str]] = None,
        run_manager: Optional[CallbackManagerForLLMRun] = None,
        **kwargs: Any,  # accept any extra keyword arguments LangChain forwards
    ) -> str:
        # This model does not support stop sequences
        if stop is not None:
            raise ValueError("stop kwargs are not permitted.")
        # Return only the first n characters of the prompt
        return prompt[: self.n]

    @property
    def _identifying_params(self) -> Mapping[str, Any]:
        """Get the identifying parameters."""
        # Parameters shown when the model is printed
        return {"n": self.n}

The above code creates a “CustomLLM” class that inherits from “LLM” and declares a field n of type int. The “_llm_type” property returns the string “custom”, which identifies the type of model. The “_call” method receives the prompt as a string together with the optional “stop” and “run_manager” parameters: if “stop” is not None, it raises a ValueError because this model does not support stop sequences; otherwise, it returns the first n characters of the prompt. The “_identifying_params” property returns a dictionary containing n:
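To make the behavior of _call concrete without installing LangChain, the following minimal sketch copies the same logic into a plain function. The name truncate_prompt is hypothetical and is not part of LangChain; it only mirrors what CustomLLM._call does with its prompt, n, and stop arguments.

```python
from typing import List, Optional


def truncate_prompt(prompt: str, n: int, stop: Optional[List[str]] = None) -> str:
    """Hypothetical helper mirroring CustomLLM._call: reject stop, keep first n chars."""
    if stop is not None:
        raise ValueError("stop kwargs are not permitted.")
    # Slicing never fails: if n exceeds len(prompt), the whole prompt is returned
    return prompt[:n]


print(truncate_prompt("This is a foobar thing", 9))  # This is a
print(truncate_prompt("short", 100))                 # short

try:
    truncate_prompt("anything", 3, stop=["\n"])
except ValueError as err:
    print(err)                                       # stop kwargs are not permitted.
```

Note that any non-None value for stop, even an empty list, triggers the error, because the check is "is not None" rather than a truthiness test.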

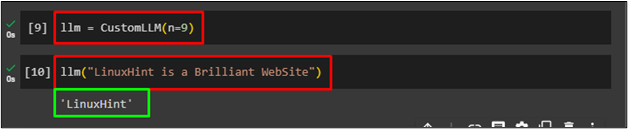

Test the LLM Wrapper

CustomLLM can now be used like any other LLM in LangChain. Initialize the “llm” variable by creating a CustomLLM instance with a value for “n”, and then use the variable to call the model:

Simply pass the prompt to the “llm()” function, as the following code shows:

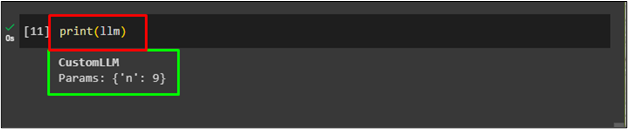

Printing the llm variable displays the CustomLLM identifying parameters, including the value of n:

That is all about creating a custom LLM wrapper in LangChain.

Conclusion

To create a custom LLM wrapper in LangChain, install LangChain and import the LLM and CallbackManagerForLLMRun classes along with the required typing helpers. Then define the CustomLLM class by inheriting from LLM and implementing its _llm_type, _call, and _identifying_params members. Finally, test the wrapper by creating an instance with n set to 9, which tells the model where to cut off the prompt text it returns. This post demonstrated the process of creating a custom LLM wrapper in LangChain.