In Python, PySpark is a Spark module that provides Spark's processing capabilities through a DataFrame API. A PySpark DataFrame can also be converted to other formats, such as a Pandas DataFrame, for local processing.

Pandas is a Python module used for data analysis. Its main data structures are Series (one-dimensional) and DataFrame (two-dimensional); the older Panel structure has been removed from recent versions of Pandas. Once we have a PySpark DataFrame, we can convert it to a Pandas DataFrame.
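As a quick illustration of these two structures, here is a minimal sketch in plain Pandas (no Spark needed) that builds a DataFrame from two of the student records used below. Selecting a single column returns a Series.

```python
import pandas as pd

# Two of the student records used throughout this article
students = [
    {'rollno': '001', 'name': 'sravan', 'age': 23, 'height': 5.79, 'weight': 67, 'address': 'guntur'},
    {'rollno': '002', 'name': 'ojaswi', 'age': 16, 'height': 3.79, 'weight': 34, 'address': 'hyd'},
]

# A DataFrame is a two-dimensional table with labeled columns
pdf = pd.DataFrame(students)

# Selecting one column returns a one-dimensional Series
ages = pdf['age']

print(type(pdf).__name__)   # DataFrame
print(type(ages).__name__)  # Series
print(ages.tolist())        # [23, 16]
```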

Let’s first create a PySpark DataFrame.

Example:

```python
import pyspark

# import SparkSession for creating a session
from pyspark.sql import SparkSession

# create an app named linuxhint
spark_app = SparkSession.builder.appName('linuxhint').getOrCreate()

# create student data with 5 rows and 6 attributes
students = [{'rollno': '001', 'name': 'sravan', 'age': 23, 'height': 5.79, 'weight': 67, 'address': 'guntur'},
            {'rollno': '002', 'name': 'ojaswi', 'age': 16, 'height': 3.79, 'weight': 34, 'address': 'hyd'},
            {'rollno': '003', 'name': 'gnanesh chowdary', 'age': 7, 'height': 2.79, 'weight': 17, 'address': 'patna'},
            {'rollno': '004', 'name': 'rohith', 'age': 9, 'height': 3.69, 'weight': 28, 'address': 'hyd'},
            {'rollno': '005', 'name': 'sridevi', 'age': 37, 'height': 5.59, 'weight': 54, 'address': 'hyd'}]

# create the dataframe
df = spark_app.createDataFrame(students)

# display the dataframe
df.show()
```

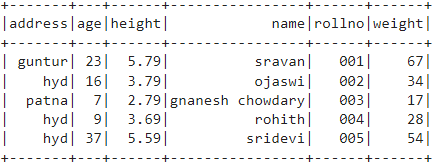

Output:

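toPandas() is a PySpark DataFrame method that converts a PySpark DataFrame into a Pandas DataFrame. It collects every row to the driver program, so it should only be used on data small enough to fit in the driver's memory.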

Syntax:

dataframe.toPandas()

where dataframe is the input PySpark DataFrame.

Example:

In this example, we convert the above PySpark DataFrame to a Pandas DataFrame.

```python
import pyspark

# import SparkSession for creating a session
from pyspark.sql import SparkSession

# create an app named linuxhint
spark_app = SparkSession.builder.appName('linuxhint').getOrCreate()

# create student data with 5 rows and 6 attributes
students = [{'rollno': '001', 'name': 'sravan', 'age': 23, 'height': 5.79, 'weight': 67, 'address': 'guntur'},
            {'rollno': '002', 'name': 'ojaswi', 'age': 16, 'height': 3.79, 'weight': 34, 'address': 'hyd'},
            {'rollno': '003', 'name': 'gnanesh chowdary', 'age': 7, 'height': 2.79, 'weight': 17, 'address': 'patna'},
            {'rollno': '004', 'name': 'rohith', 'age': 9, 'height': 3.69, 'weight': 28, 'address': 'hyd'},
            {'rollno': '005', 'name': 'sridevi', 'age': 37, 'height': 5.59, 'weight': 54, 'address': 'hyd'}]

# create the dataframe
df = spark_app.createDataFrame(students)

# convert to a pandas dataframe and print it
print(df.toPandas())
```

Output:

Once a PySpark DataFrame has been converted to Pandas, we can iterate over its rows with the Pandas iterrows() method.

iterrows()

iterrows() is a Pandas DataFrame method, so the PySpark DataFrame must first be converted with toPandas(). It iterates over the rows, yielding an (index, row) pair for each one, where row is a Pandas Series whose labels are the column names. Inside a for loop, we can then read individual column values from each row by their position and print them.
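The sketch below shows what iterrows() yields, using a small hand-built Pandas DataFrame as a stand-in for the result of toPandas(). It also illustrates a caveat noted in the Pandas documentation: because each row is returned as a single Series, values from an integer column and a float column are combined into one dtype, so the integer age comes back as a float.

```python
import pandas as pd

# Stand-in for df.toPandas(): age is an int column, height is a float column
pdf = pd.DataFrame({'age': [23, 16], 'height': [5.79, 3.79]})

for index, row in pdf.iterrows():
    # index is the row label; row is a Series indexed by column name
    print(index, type(row).__name__, row['age'], row['height'])

# iterrows() does not preserve per-column dtypes: the int column is upcast to float
first_index, first_row = next(pdf.iterrows())
print(first_row.dtype)   # float64
print(first_row['age'])  # 23.0
```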

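Syntax:

```python
for index, row_iterator in dataframe.toPandas().iterrows():
    print(row_iterator.iloc[index_value], ...)
```

Where:

- dataframe is the input PySpark DataFrame.

- index is the index label of the current row in the converted Pandas DataFrame.

- row_iterator is the loop variable that holds the current row as a Pandas Series whose labels are the column names.

- index_value is the position of the column to read from the row; .iloc reads the value by position.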
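The positional pattern from the syntax can be sketched in plain Pandas as follows. The helper select_positions is hypothetical (it is not part of Pandas or PySpark), and the stand-in DataFrame uses the same alphabetical column order as the PySpark DataFrame above. It reads values with .iloc, because indexing a Series that has string labels with a plain integer, such as row_iterator[0], relies on a positional fallback that Pandas 2.1 and later report as deprecated.

```python
import pandas as pd

# Stand-in for df.toPandas(); columns follow the alphabetical order Spark produced
pdf = pd.DataFrame({
    'address': ['guntur', 'hyd'],
    'age': [23, 16],
    'height': [5.79, 3.79],
    'name': ['sravan', 'ojaswi'],
    'rollno': ['001', '002'],
    'weight': [67, 34],
})

def select_positions(frame, *positions):
    """Hypothetical helper: collect the values at the given column positions for every row."""
    result = []
    for index, row_iterator in frame.iterrows():
        # .iloc reads by position instead of relying on the deprecated row_iterator[0] fallback
        result.append(tuple(row_iterator.iloc[p] for p in positions))
    return result

for values in select_positions(pdf, 0, 3):
    print(*values)
```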

Example 1:

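In this example, we print the address and age columns (positions 0 and 1) of every row of the above PySpark DataFrame. Because the DataFrame was created from Python dictionaries, Spark arranged its columns in alphabetical order (address, age, height, name, rollno, weight), so position 1 is age, not height.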

```python
import pyspark

# import SparkSession for creating a session
from pyspark.sql import SparkSession

# create an app named linuxhint
spark_app = SparkSession.builder.appName('linuxhint').getOrCreate()

# create student data with 5 rows and 6 attributes
students = [{'rollno': '001', 'name': 'sravan', 'age': 23, 'height': 5.79, 'weight': 67, 'address': 'guntur'},
            {'rollno': '002', 'name': 'ojaswi', 'age': 16, 'height': 3.79, 'weight': 34, 'address': 'hyd'},
            {'rollno': '003', 'name': 'gnanesh chowdary', 'age': 7, 'height': 2.79, 'weight': 17, 'address': 'patna'},
            {'rollno': '004', 'name': 'rohith', 'age': 9, 'height': 3.69, 'weight': 28, 'address': 'hyd'},
            {'rollno': '005', 'name': 'sridevi', 'age': 37, 'height': 5.59, 'weight': 54, 'address': 'hyd'}]

# create the dataframe
df = spark_app.createDataFrame(students)

# iterate over the rows and print the address (position 0) and age (position 1) columns
for index, row_iterator in df.toPandas().iterrows():
    print(row_iterator.iloc[0], row_iterator.iloc[1])
```

Output:

guntur 23

hyd 16

patna 7

hyd 9

hyd 37
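Reading values by position ties the loop to the column order. As an alternative sketch, the same address and age values can be read by column name with Pandas' itertuples(), which yields namedtuples and, according to the Pandas documentation, preserves dtypes and is generally faster than iterrows(). The pdf DataFrame here is a hand-built stand-in for df.toPandas().

```python
import pandas as pd

# Stand-in for df.toPandas(), holding the address and age columns of the five students
pdf = pd.DataFrame({
    'address': ['guntur', 'hyd', 'patna', 'hyd', 'hyd'],
    'age': [23, 16, 7, 9, 37],
})

pairs = []
for row in pdf.itertuples(index=False):
    # fields are read by column name, so the loop does not depend on column order
    pairs.append((row.address, row.age))
    print(row.address, row.age)
```

In the PySpark examples, pdf would simply be the result of df.toPandas().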

Example 2:

In this example, we print the address and name columns (positions 0 and 3) of every row of the above PySpark DataFrame.

```python
import pyspark

# import SparkSession for creating a session
from pyspark.sql import SparkSession

# create an app named linuxhint
spark_app = SparkSession.builder.appName('linuxhint').getOrCreate()

# create student data with 5 rows and 6 attributes
students = [{'rollno': '001', 'name': 'sravan', 'age': 23, 'height': 5.79, 'weight': 67, 'address': 'guntur'},
            {'rollno': '002', 'name': 'ojaswi', 'age': 16, 'height': 3.79, 'weight': 34, 'address': 'hyd'},
            {'rollno': '003', 'name': 'gnanesh chowdary', 'age': 7, 'height': 2.79, 'weight': 17, 'address': 'patna'},
            {'rollno': '004', 'name': 'rohith', 'age': 9, 'height': 3.69, 'weight': 28, 'address': 'hyd'},
            {'rollno': '005', 'name': 'sridevi', 'age': 37, 'height': 5.59, 'weight': 54, 'address': 'hyd'}]

# create the dataframe
df = spark_app.createDataFrame(students)

# iterate over the rows and print the address (position 0) and name (position 3) columns
for index, row_iterator in df.toPandas().iterrows():
    print(row_iterator.iloc[0], row_iterator.iloc[3])
```

Output:

guntur sravan

hyd ojaswi

patna gnanesh chowdary

hyd rohith

hyd sridevi

Conclusion

In this tutorial, we converted a PySpark DataFrame to a Pandas DataFrame with the toPandas() method and iterated over the rows of the resulting Pandas DataFrame with the iterrows() method.