LangChain is a framework for building applications powered by language models that understand and generate human-language text. The settings of a Large Language Model (LLM), such as the model name and its parameters, can be stored as plain text. JSON is a convenient text format for this purpose, and LangChain can serialize an LLM's configuration to a file so that it can be saved and loaded later.

This guide will explain the process of saving the configuration of LLMs using serialization in LangChain.

How to Save the Configuration of LLMs Using Serialization in LangChain?

To save the configuration of LLMs using serialization in LangChain, follow the steps below:

Install Prerequisites

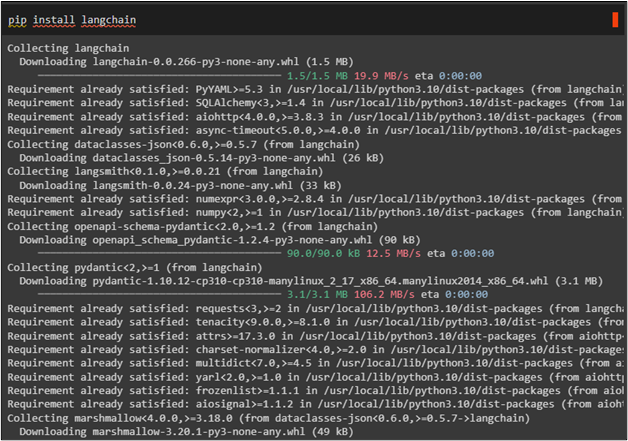

To create the LLMs, install the LangChain framework using the following code:

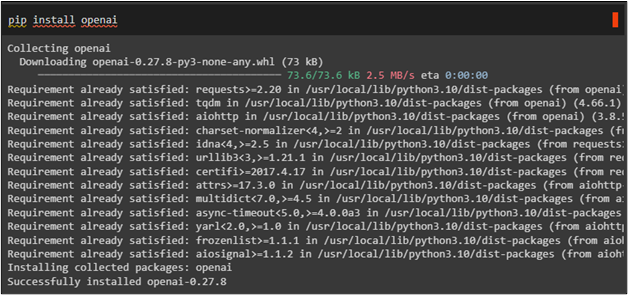

The OpenAI module is also required, and it can be installed using this code:

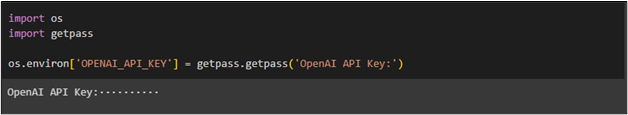

After that, store the OpenAI API key in an environment variable using the os and getpass libraries so that LangChain can access the OpenAI models:

import os
import getpass

os.environ['OPENAI_API_KEY'] = getpass.getpass('OpenAI API Key:')
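
As a minimal sketch of the same step, the prompt can be skipped when the key is already present in the environment. The helper name `ensure_api_key` and its parameters are hypothetical and are not part of LangChain or OpenAI:

```python
import getpass
import os


def ensure_api_key(var_name="OPENAI_API_KEY", prompt=getpass.getpass):
    """Return the API key from the environment, prompting only if it is missing.

    `ensure_api_key` is a hypothetical helper used for illustration.
    """
    key = os.environ.get(var_name)
    if not key:
        # getpass hides the typed value so the key does not appear on screen.
        key = prompt(f"{var_name}: ")
        os.environ[var_name] = key
    return key
```

Calling `ensure_api_key()` once at the top of a notebook avoids re-entering the key every time the cell is re-run in the same session.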

Import Libraries

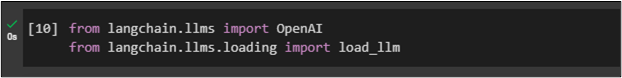

After installing the modules and setting the API key, import the OpenAI library to access its resources. The other import needed for this example is load_llm, which LangChain provides for loading a serialized LLM:

from langchain.llms import OpenAI
from langchain.llms.loading import load_llm

Loading LLM Configuration

Before saving, load the configured LLM using the following code:

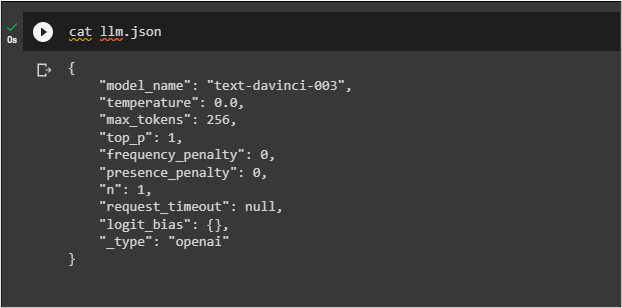

Executing the above code displays the details of the model configured earlier in this guide:
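
A minimal sketch of the loading step, assuming a legacy LangChain release in which `langchain.llms.loading.load_llm` is available. The file name `llm.json` and the field values are illustrative assumptions, modeled on the kind of JSON that LangChain writes for an OpenAI LLM. `write_example_config` and `load_with_langchain` are hypothetical helper names:

```python
import json
from pathlib import Path

# Illustrative configuration; the values are assumptions, not required settings.
EXAMPLE_CONFIG = {
    "model_name": "text-davinci-003",
    "temperature": 0.7,
    "max_tokens": 256,
    "_type": "openai",  # tells the loader which LLM class to rebuild
}


def write_example_config(path):
    """Write the illustrative configuration to `path` as JSON."""
    path = Path(path)
    path.write_text(json.dumps(EXAMPLE_CONFIG, indent=4))
    return path


def load_with_langchain(path):
    """Rebuild an LLM object from a saved configuration file."""
    # Imported lazily so the rest of the sketch runs without LangChain installed.
    from langchain.llms.loading import load_llm

    return load_llm(str(path))
```

With the API key set, `llm = load_with_langchain(write_example_config("llm.json"))` would rebuild the LLM, and `print(llm)` would show its parameters.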

Save the Configuration of LLMs

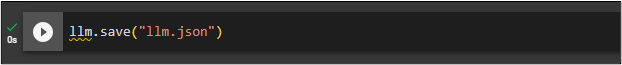

After loading the LLM, execute the following code to save its configuration using serialization in LangChain:
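
A minimal sketch of the save step, assuming a legacy LangChain release in which LLM objects expose a `save(file_path)` method that chooses JSON or YAML from the file extension. The helper name `save_llm_config` and the file name `llm.json` are hypothetical:

```python
from pathlib import Path


def save_llm_config(llm, file_path="llm.json"):
    """Serialize an LLM's configuration to disk and return the path written.

    `llm` is assumed to be a LangChain LLM (for example, an `OpenAI` instance)
    that provides a `save(file_path)` method.
    """
    path = Path(file_path)
    if path.suffix not in {".json", ".yaml"}:
        raise ValueError("file_path should end in .json or .yaml")
    llm.save(str(path))  # the extension selects the output format
    return path
```

For example, `save_llm_config(llm, "llm.json")` would write the settings of the loaded `llm` object to `llm.json` in the working directory.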

Verification for Saving LLM

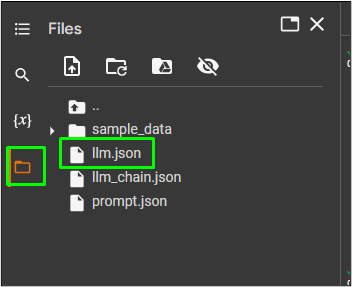

Click the folder icon in the left panel to verify that the LLM is saved successfully, then click the name of the saved file to open it:

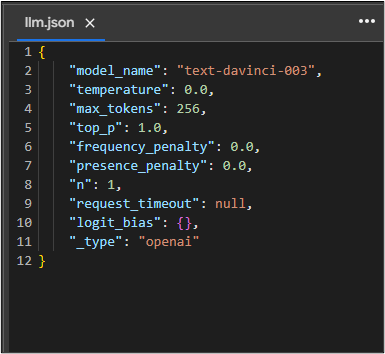

The following screenshot displays the contents of the configured LLM that we saved and loaded earlier:
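
The same check can also be done in code rather than through the file browser. This sketch uses only the Python standard library; the helper name `read_saved_config` and the default file name `llm.json` are assumptions for illustration:

```python
import json
from pathlib import Path


def read_saved_config(file_path="llm.json"):
    """Return the saved LLM configuration as a dictionary."""
    path = Path(file_path)
    if not path.is_file():
        raise FileNotFoundError(f"No saved configuration at {path}")
    with path.open() as f:
        return json.load(f)
```

Running `print(json.dumps(read_saved_config(), indent=4))` would print the saved settings so they can be compared with the values used when the LLM was configured.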

That is all about saving the configurations of LLMs using serialization in LangChain.

Conclusion

To save the configuration of LLMs using serialization in LangChain, install the LangChain and OpenAI modules, set the OpenAI API key, and import the OpenAI and load_llm libraries. Then load the LLM configuration and save it using the serialization support in LangChain. This guide has explained each of these steps.