This post illustrates the process of composing multiple prompts in LangChain.

How to Compose Multiple Prompts in LangChain?

Composing prompts means building one final prompt out of several smaller, reusable prompt templates. Parts that stay the same across requests, such as an introduction or a worked example, are written once and then combined with the part that changes, so the same text does not have to be repeated in every prompt.
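As a rough illustration of this idea, independent of LangChain, the sketch below reuses one introduction fragment in two prompts using plain Python string formatting. The names INTRODUCTION and build_prompt, as well as the sample wording, are hypothetical and are not part of the LangChain API.

```python
# Library-free sketch: reuse one shared fragment across several prompts.
# INTRODUCTION and build_prompt are hypothetical names used for illustration.
INTRODUCTION = "You are impersonating {person}."


def build_prompt(person: str, question: str) -> str:
    """Combine the shared introduction with the question-specific part."""
    intro = INTRODUCTION.format(person=person)
    return f"{intro}\n\nQ: {question}\nA:"


first = build_prompt("Elon Musk", "What's your favorite car?")
second = build_prompt("Elon Musk", "What's your favorite social media site?")
print(first)
print(second)
```

Both prompts share the same opening line, and only the question changes. LangChain's prompt templates apply the same principle with named, reusable template objects.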

To learn how to compose multiple prompts in LangChain, simply go through the listed steps:

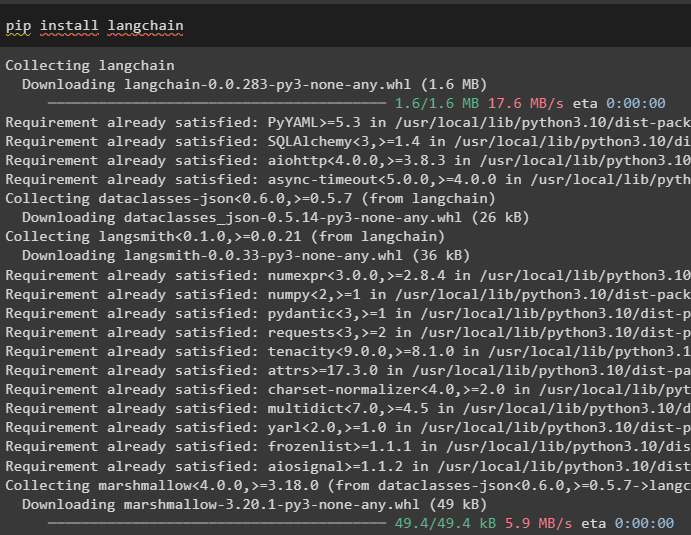

Step 1: Install LangChain

Start by installing the LangChain framework with pip:

pip install langchain

Step 2: Using Prompt Template

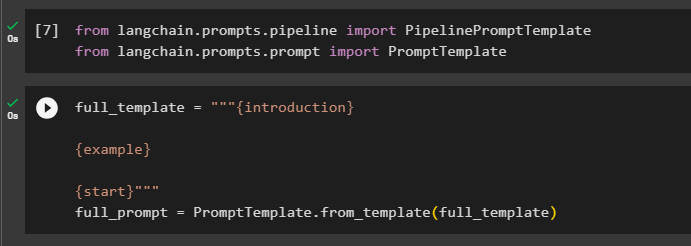

After that, import the PipelinePromptTemplate and PromptTemplate classes, which are needed for composing multiple prompts:

from langchain.prompts.pipeline import PipelinePromptTemplate
from langchain.prompts.prompt import PromptTemplate

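Configure the template for the final prompt and build it with the PromptTemplate.from_template() method. Each placeholder in this template is filled by one of the smaller prompts defined next:

# Final template: each placeholder is filled by a smaller prompt
full_template = """{introduction}

{example}

{start}"""
full_prompt = PromptTemplate.from_template(full_template)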

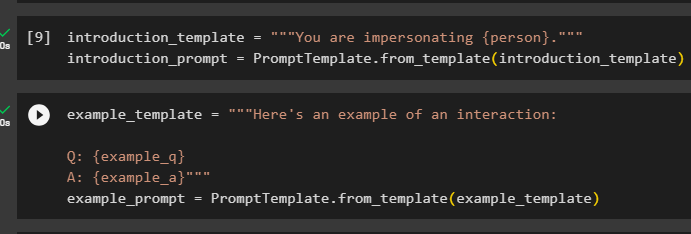

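After that, configure the introduction prompt using the “introduction_template” variable and pass it to PromptTemplate.from_template(). The template takes the {person} placeholder that is filled in Step 4:

# The exact introduction wording is illustrative; the {person} placeholder is what matters
introduction_template = """You are impersonating {person}."""
introduction_prompt = PromptTemplate.from_template(introduction_template)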

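Set the example_template variable so the model sees a sample question and answer in the expected format:

# The lead-in sentence is illustrative; {example_q} and {example_a} are filled in Step 4
example_template = """Here's an example of an interaction:

Q: {example_q}
A: {example_a}"""
example_prompt = PromptTemplate.from_template(example_template)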

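Now, set the template that poses the actual question in the same question-and-answer style and leaves the answer open for the model:

# The lead-in sentence is illustrative; {input} carries the real question
start_template = """Now, do this for real!

Q: {input}
A:"""
start_prompt = PromptTemplate.from_template(start_template)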

Step 3: Using Pipeline Prompt Template

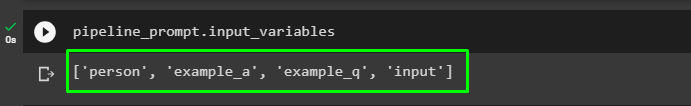

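After that, create the PipelinePromptTemplate with two arguments: the final prompt and a list of (name, prompt) pairs built from the templates configured in the previous step. Each name must match a placeholder in the final template:

# Each name matches a placeholder in full_template
input_prompts = [
    ("introduction", introduction_prompt),
    ("example", example_prompt),
    ("start", start_prompt)
]
pipeline_prompt = PipelinePromptTemplate(
    final_prompt=full_prompt,
    pipeline_prompts=input_prompts
)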
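To make the composition step more concrete, here is a minimal, library-free sketch of the general idea: each named sub-template is formatted in order, and its result becomes a value available to the final template. The functions field_names and compose are hypothetical helpers written for this illustration; this is not LangChain's actual implementation.

```python
from string import Formatter


def field_names(template: str) -> list[str]:
    """Return the placeholder names used in a str.format-style template."""
    return [name for _, name, _, _ in Formatter().parse(template) if name]


def compose(final_template: str, pipeline: list[tuple[str, str]], **values: str) -> str:
    """Format each named sub-template in order, then fill the final template."""
    for name, template in pipeline:
        # Pass only the values this sub-template actually asks for.
        needed = {key: values[key] for key in field_names(template)}
        # Store the result under its name so the final template can use it.
        values[name] = template.format(**needed)
    final_needed = {key: values[key] for key in field_names(final_template)}
    return final_template.format(**final_needed)


# Hypothetical two-part pipeline, shortened from the tutorial's three parts.
pipeline = [
    ("introduction", "You are impersonating {person}."),
    ("start", "Q: {input}\nA:"),
]
result = compose(
    "{introduction}\n\n{start}",
    pipeline,
    person="Elon Musk",
    input="What's your favorite social media site?",
)
print(result)
```

The caller only supplies person and input; the introduction and start values are produced along the way, which is why they never appear as arguments.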

Step 4: Using Multiple Prompts

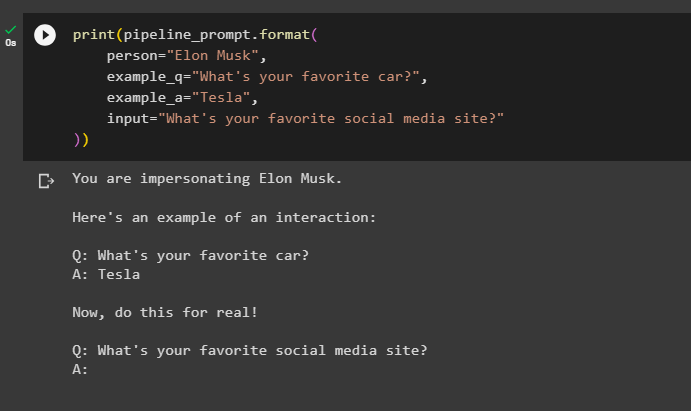

Print the input_variables attribute of the pipeline prompt to see which values must be supplied when the prompt is formatted:

print(pipeline_prompt.input_variables)
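The set of required inputs can be reasoned about without running LangChain: it is every placeholder used by the sub-templates, minus the names that the pipeline itself produces. The sketch below works this out with the standard library only. The helper names and the template wording are assumptions made for illustration, and the ordering LangChain reports may differ from the sorted order used here.

```python
from string import Formatter


def field_names(template: str) -> set[str]:
    """Return the set of placeholder names in a str.format-style template."""
    return {name for _, name, _, _ in Formatter().parse(template) if name}


def required_inputs(pipeline: list[tuple[str, str]]) -> list[str]:
    """Placeholders the caller must supply: all used names minus produced names."""
    produced = {name for name, _ in pipeline}
    used: set[str] = set()
    for _, template in pipeline:
        used |= field_names(template)
    return sorted(used - produced)


# Hypothetical sub-templates mirroring the three parts used in this post.
pipeline = [
    ("introduction", "You are impersonating {person}."),
    ("example", "Q: {example_q}\nA: {example_a}"),
    ("start", "Q: {input}\nA:"),
]
inputs = required_inputs(pipeline)
print(inputs)
```

The four names it finds (person, example_q, example_a, and input) are exactly the keyword arguments passed when the pipeline prompt is formatted.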

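Call the format() method of the pipeline prompt with a value for each input variable to display the composed prompt, which reads like a real conversation:

# Supply one value per input variable and print the composed prompt
print(pipeline_prompt.format(
    person="Elon Musk",
    example_q="What's your favorite car?",
    example_a="Tesla",
    input="What's your favorite social media site?"
))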

That is all about composing multiple prompts using the LangChain framework.

Conclusion

To compose multiple prompts in LangChain, install the LangChain framework and import the PromptTemplate and PipelinePromptTemplate classes. Configure each part of the prompt with PromptTemplate, then combine the parts with PipelinePromptTemplate by mapping each one to a placeholder in the final template. Finally, format the pipeline prompt with a value for each input variable to get the complete prompt.