Now, there are good reasons why data scientists prefer using the above-mentioned tools as the tools are well equipped to handle multitudes of tasks related to data. However, these aren’t the only easy-to-use tools available to them or us.

The people who are regular users of Linux know how powerful the Linux command terminal is. Users can perform virtually anything related to their systems using the command terminal. Although, Linux does provide its users with an attractive GUI, the command terminal is more fun and interactive.

However, only a few people actually know how to use the terminal to perform regular data science tasks. Furthermore, if you are interested in finding out how to use the terminal as a tool for data science, you are at the right place as we will be going over some of the commands that you can use for doing just that.

$wc

The first command we will be explaining is $wc and it is used to find out the word count, character count, line counts, and byte counts of a particular file. This command can be important as you can check out how big the file is that you are going to check out. There are different outputs with different operators used with $wc. The default output gives us the line count, word count, and character count left to right respectively. The syntax for this command is:

$wget

Another important command that can be regularly used by data scientists is the $wget command. This command downloads files from remote locations. In case of the dataset, you want to go through needs to be downloaded, you can use the $wget command to get it retrieved straight to your computer without hiccups. The syntax for $wget is:

$head anD $tail commands.

Consider the scenario where you have downloaded a dataset consisting of numerous files. Now, you are looking for a specific file with specific contents of your interest. You can use the $head and $tail commands To get to know the contents of the files.

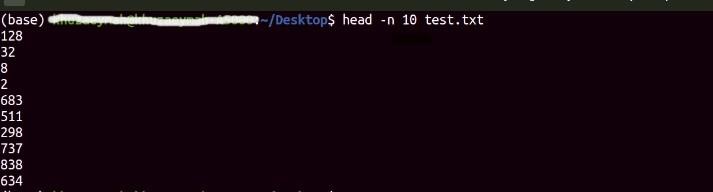

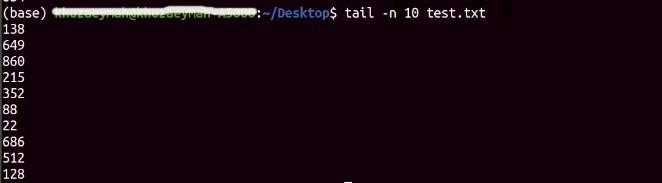

The $head command prints the first lines of the file as the output. The default output is 10 lines and you can choose to see as many lines as you want.

The $tail command gives you the lines at the end of the file as output. It, too, has a default output of 10 lines. The syntax for both the commands is as follows:

$ tail -n <the number of lines you want printed> <filename.extension>

$find

The next command we are going to take a look at is the $find command. Now you know that the dataset the scientists have to deal with is usually very large. It consists of thousands of files and in case they want to look for a specific file, it can become a headache. Though, the Linux terminal has provided its users with the $find command. If a person knows the name of the file he or she is looking for, just use the $find command to find it instantly.

$cat

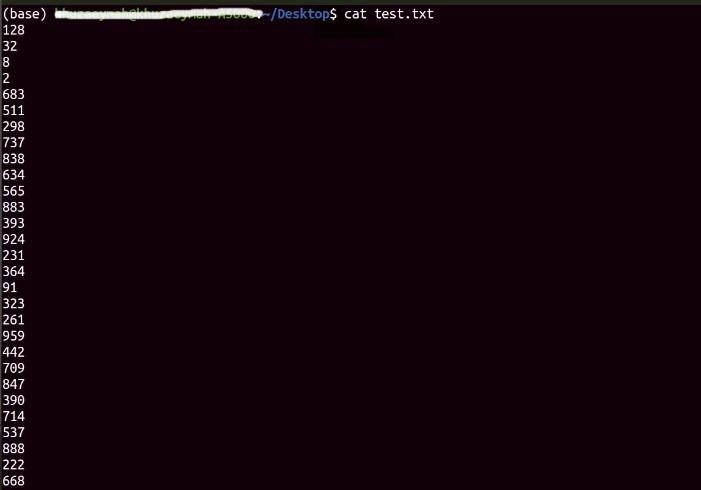

The $cat command has a number of uses in the data science world. The most basic use of the $cat command is that it outputs all the contents of a particular file.

$cat stands for “concatenate” and it can be used for combining two or multiple files together to form a single file.

The syntax for getting the content of a file is as follows:

Other uses of the $cat command include numbering the lines present in the file, appending text to files, creating new files, and etc.

$cut

The $cut command is used for removing sections of contents in a particular file. You can also copy those sections and paste them into another file. It should prove useful when you want to extract a few lines of useful information from a particular file.

awk

Before this, we looked at Linux commands that can prove useful to data scientists. Awk on the other hand is a full-fledged programming language that basically deals with processing text present in files or in general. This is a powerful tool that can be summoned in the terminal with short commands. There are a variety of tasks that can be performed using awk and it is recommended that you learn how to use awk in the Linux terminal.

Grep

Grep is another text processing tool that is somewhat similar to awk but it can also perform other tasks with minimum fuss and easy-to-implement syntax. It is another tool that you can learn quickly and use to your advantage for performing textual data-related tasks.

Conclusion

In this article, we looked at the different tools and commands available on the Linux terminal that can help in performing data science tasks. As you can see, there are a number of ways the Linux terminal can prove helpful, particularly in managing and handling data.