In this article, we will demonstrate how to check GPU availability in PyTorch.

How to Check GPU Availability in PyTorch?

Checking GPU availability in PyTorch is simple, and it is a good first step before training a model. PyTorch uses NVIDIA GPUs through CUDA, the parallel computing platform developed by NVIDIA. The “torch.cuda.is_available()” function returns True when PyTorch can access a CUDA-capable GPU and False otherwise.
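When “torch.cuda.is_available()” returns False, it helps to know whether the installed PyTorch build includes CUDA support at all. The minimal sketch below uses a hypothetical helper, “cuda_build_info()”, that also reads “torch.__version__” and “torch.version.cuda”. These two attributes go beyond what this article otherwise uses, and “torch.version.cuda” is None when PyTorch was built without CUDA:

```python
import torch


def cuda_build_info() -> dict:
    """Hypothetical helper: summarize whether this PyTorch install can reach a CUDA GPU."""
    return {
        "torch_version": torch.__version__,
        # CUDA version PyTorch was compiled against; None for builds without CUDA
        "built_with_cuda": torch.version.cuda,
        # True only if a CUDA build is installed AND a usable GPU and driver are present
        "cuda_available": torch.cuda.is_available(),
    }


info = cuda_build_info()
for key, value in info.items():
    print(f"{key}: {value}")
```

If “built_with_cuda” is None, installing a CUDA-enabled PyTorch build is the first thing to check; if it is set but “cuda_available” is False, the GPU or driver is the more likely cause.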

Follow the steps below to check whether a GPU is available to PyTorch in Google Colab:

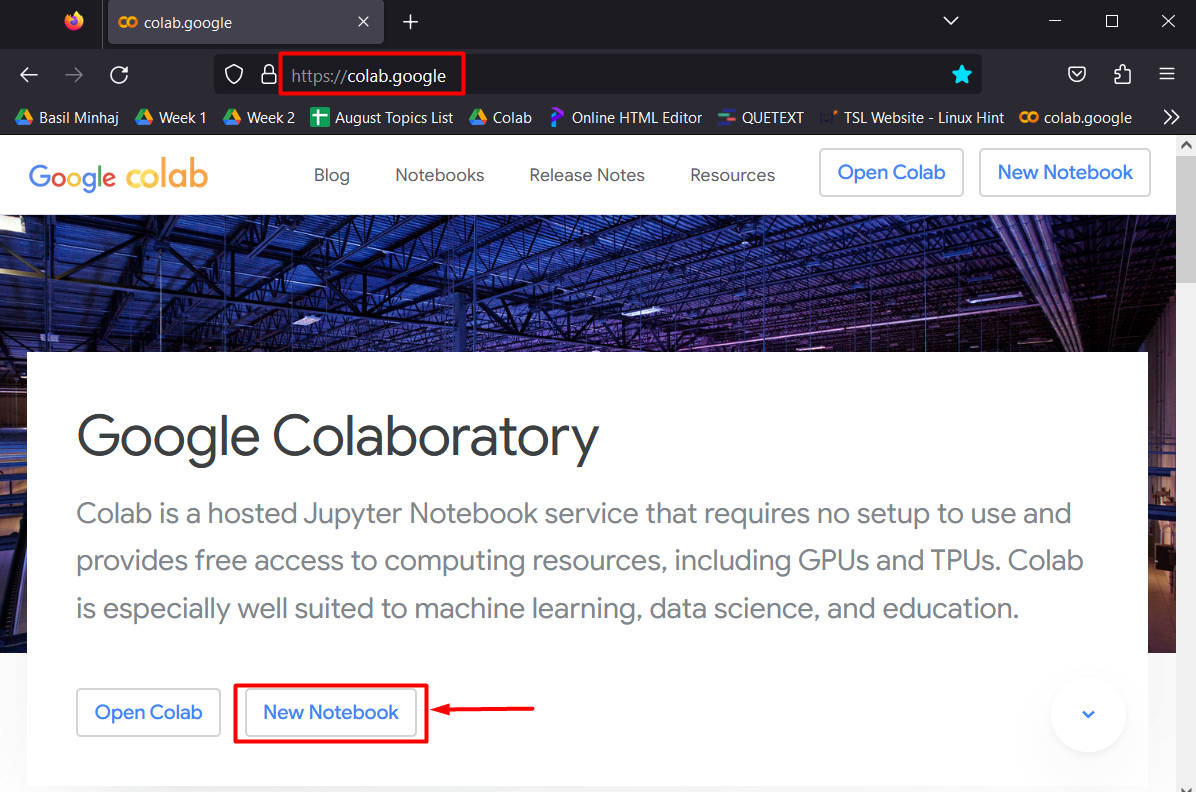

Step 1: Launch Google Colaboratory

To begin, open Google Colab and create a new notebook as shown below:

Note: Give the notebook a descriptive name so that it is easy to find and resume later.

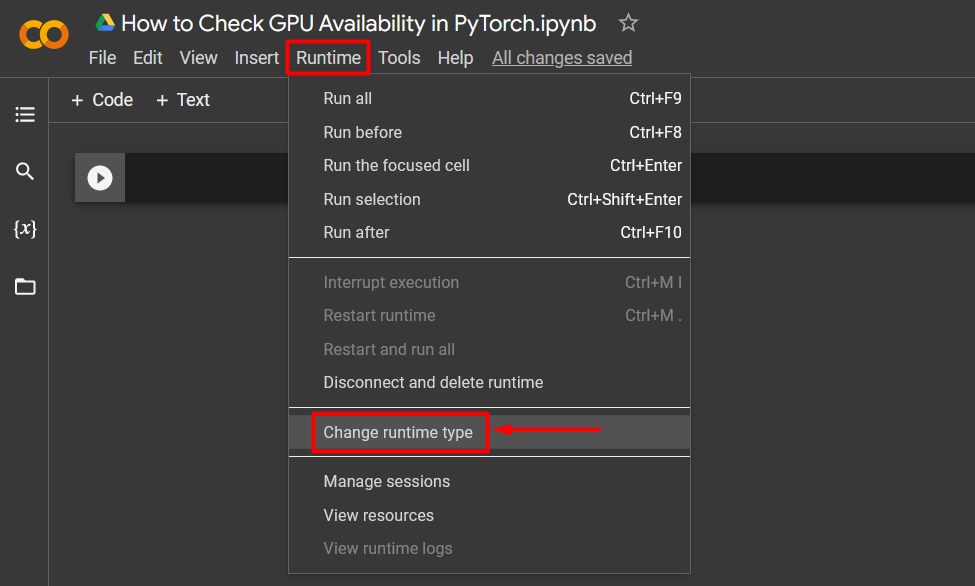

Step 2: Change Runtime Type and Hardware Accelerator

Click the “Runtime” option in the menu bar, scroll down, and select the “Change runtime type” option as shown:

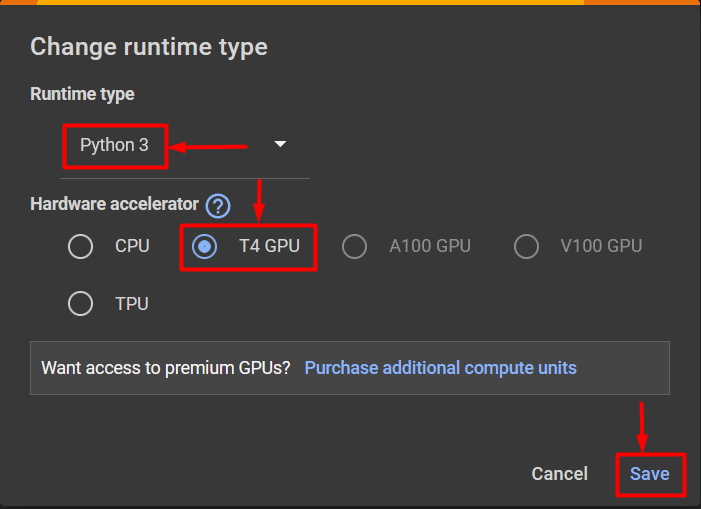

From the “Change runtime type” dialog box, select “Python 3” as the “Runtime type” and “T4 GPU” as the “Hardware Accelerator”. Then, click on “Save” to apply the selected changes as shown:
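After the runtime restarts with the new accelerator, one optional sanity check outside PyTorch is to ask the NVIDIA driver which GPUs it can see. The sketch below assumes the “nvidia-smi” utility, which ships with the NVIDIA driver, is on the PATH; the helper name “visible_nvidia_gpus()” is hypothetical:

```python
import shutil
import subprocess


def visible_nvidia_gpus():
    """Hypothetical helper: GPU names reported by nvidia-smi, or None if it is not installed."""
    exe = shutil.which("nvidia-smi")
    if exe is None:
        # No NVIDIA driver utility on the PATH, so there is nothing to query
        return None
    result = subprocess.run(
        [exe, "--query-gpu=name", "--format=csv,noheader"],
        capture_output=True,
        text=True,
        check=False,
    )
    if result.returncode != 0:
        # The tool exists but could not communicate with a GPU or driver
        return []
    # nvidia-smi prints one GPU name per line in this query mode
    return [line.strip() for line in result.stdout.splitlines() if line.strip()]


driver_gpus = visible_nvidia_gpus()
print(driver_gpus if driver_gpus is not None else "nvidia-smi not found")
```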

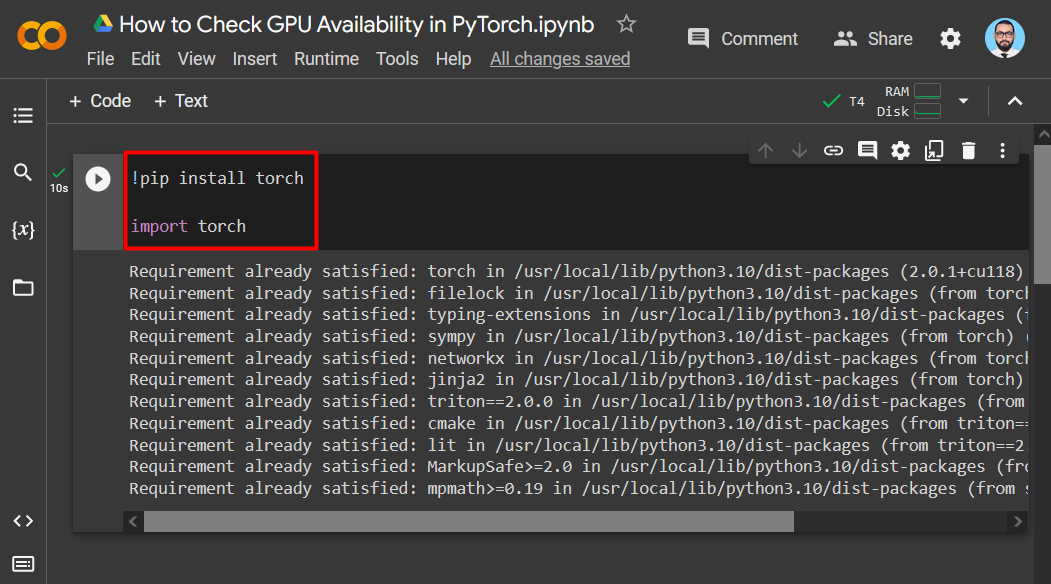

Step 3: Install and Import the Required Libraries

Install the “torch” library with the “pip” package installer and import it with the “import” statement as shown (Google Colab already includes PyTorch, so the install step mainly matters in other environments):

```python
!pip install torch
import torch
```

The output showing the installation and import of the Torch library:

Step 4: Check GPU Availability

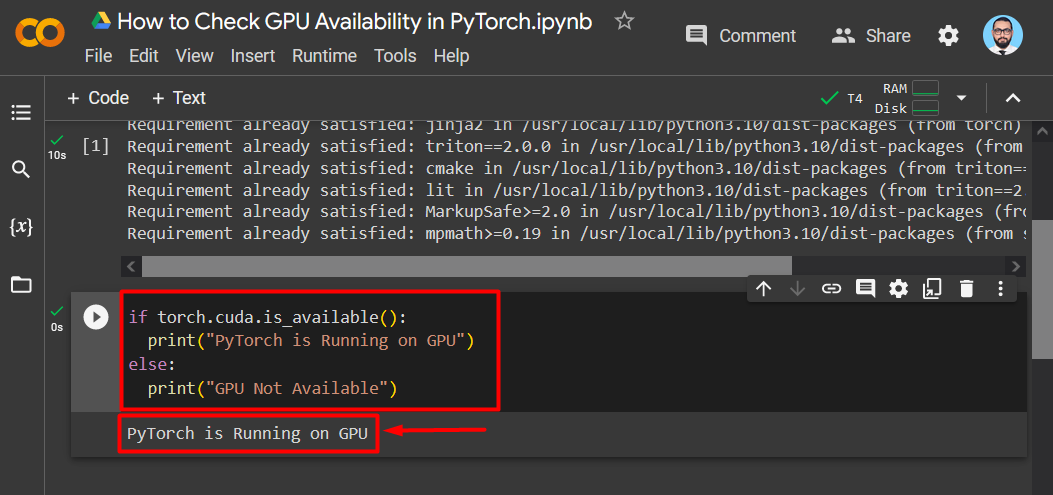

Use the “torch.cuda.is_available()” function in an “if/else” statement to check whether a GPU is available to PyTorch:

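```python
# torch.cuda.is_available() returns True if PyTorch can use a CUDA GPU
if torch.cuda.is_available():
    print("PyTorch is Running on GPU")
else:
    print("GPU Not Available")
```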
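In practice, the availability check is usually turned into a “torch.device” so that the same code runs on either the GPU or the CPU. A minimal sketch of this common pattern follows; the tensor and its values are only illustrative:

```python
import torch

# Fall back to the CPU when no CUDA GPU is visible
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Tensors are created on the CPU by default; .to(device) moves them
x = torch.ones(3, 3).to(device)

# The matrix product and sum run on whichever device x lives on
y = (x @ x).sum()
print(f"Computed on {y.device}: {y.item()}")
```

The same “.to(device)” call also moves a model (an “nn.Module”) onto the selected device, so the rest of a training script does not need to branch on GPU availability.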

The output confirms that a GPU is available to PyTorch:

Step 5: Check Name of Available GPU

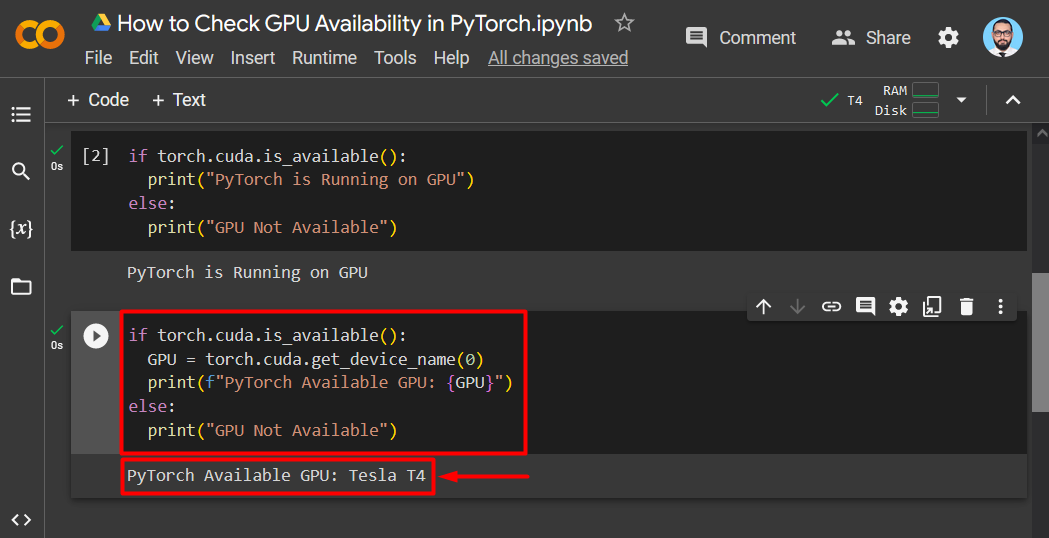

Take the availability check a step further by querying the name of the available GPU. Pass a device index to the “torch.cuda.get_device_name()” function inside an “if/else” statement as shown below:

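```python
if torch.cuda.is_available():
    # Index 0 refers to the first visible CUDA device
    gpu_name = torch.cuda.get_device_name(0)
    print(f"PyTorch Available GPU: {gpu_name}")
else:
    print("GPU Not Available")
```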
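A machine can expose more than one GPU. As a sketch, the hypothetical helper below loops over “torch.cuda.device_count()” and also reports each device's total memory from “torch.cuda.get_device_properties()”; both are standard “torch.cuda” functions that the steps above do not use:

```python
import torch


def list_cuda_devices():
    """Hypothetical helper: (index, name, total memory in GiB) for every visible CUDA device."""
    devices = []
    if not torch.cuda.is_available():
        return devices
    for i in range(torch.cuda.device_count()):
        props = torch.cuda.get_device_properties(i)
        # total_memory is reported in bytes
        devices.append((i, torch.cuda.get_device_name(i), props.total_memory / 1024**3))
    return devices


gpus = list_cuda_devices()
if gpus:
    for index, name, mem_gib in gpus:
        print(f"cuda:{index} -> {name} ({mem_gib:.1f} GiB)")
else:
    print("GPU Not Available")
```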

The Google Colab output shows that the “Tesla T4” GPU is available to PyTorch:

Note: You can access our Google Colab Notebook on “How to Check GPU Availability in PyTorch” at this link.

Pro-Tip

The “Google Colaboratory” development platform is well suited to training machine learning models that need GPU acceleration. Colab offers the NVIDIA “T4” GPU free of cost, subject to usage limits, and more powerful GPUs are available with a paid subscription.

We have shown how to check GPU availability in PyTorch before running machine learning models that require significant processing power.

Conclusion

To check GPU availability in PyTorch, call the “torch.cuda.is_available()” function and print a confirmation with the “print()” function. In Google Colab, change the Hardware Accelerator to “T4 GPU” beforehand. The name of the GPU can also be retrieved with the “torch.cuda.get_device_name()” function. In this blog, we have shown how to check GPU availability in PyTorch and how to print the name of the available GPU.