In PyTorch, individual data entries are stored in the form of “Tensors”, and the “gradients” of tensors are calculated using backward propagation within the training loop of a deep learning model. The term “unscaled” means that the gradient is taken exactly as it is computed, with no scaling, preprocessing, or optimization applied to it afterwards. The unscaled gradient of a Tensor therefore gives the true rate of change of the specified loss function with respect to that tensor.
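To make “within the training loop” concrete, here is a minimal sketch of where gradients appear in a typical PyTorch loop. The toy data, the “torch.nn.Linear” model, and the learning rate are assumptions chosen only for illustration. The point is that “backward()” fills each parameter's “.grad” attribute, and that raw gradient can be read before “optimizer.step()” uses it.

```python
import torch

# Hypothetical toy data and model, chosen only for illustration
torch.manual_seed(0)
x = torch.randn(8, 3)
y = torch.randn(8, 1)
model = torch.nn.Linear(3, 1)
loss_fn = torch.nn.MSELoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

for step in range(3):
    optimizer.zero_grad()            # clear gradients left over from the previous step
    loss = loss_fn(model(x), y)      # forward pass
    loss.backward()                  # backward propagation fills .grad on each parameter
    grad_norm = model.weight.grad.norm().item()  # raw gradient, read before the update
    print(f"step {step}: loss={loss.item():.4f}, weight grad norm={grad_norm:.4f}")
    optimizer.step()                 # update the parameters using those gradients
```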

In this blog, we will discuss how to calculate the unscaled gradient of a Tensor in PyTorch.

What is an Unscaled Gradient of a Tensor in PyTorch?

In PyTorch, tensors are multidimensional arrays that hold data and can run on GPUs. Tensors that contain raw data from the dataset, without any preprocessing, transformations, or optimizations, are referred to here as unscaled tensors. An “Unscaled Gradient” is a different thing, so take care not to confuse the two: the unscaled gradient of a tensor is the gradient of the selected loss function with respect to that tensor, exactly as backward propagation computes it, with no further scaling or adjustment applied.
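The word “unscaled” also comes up in PyTorch mixed-precision training, where a gradient scaler multiplies the loss by a large factor before “backward()” and later divides the gradients back down (for example with “GradScaler.unscale_()”). The sketch below imitates that idea by hand rather than using the scaler, so the tensor values, the loss, and the scale factor are all assumptions for illustration. It shows that scaling the loss scales every gradient, and that the plain “backward()” result is the unscaled one.

```python
import torch

A = torch.tensor([1.0, 2.0, 3.0], requires_grad=True)
scale = 1024.0  # hypothetical scale factor, similar in spirit to mixed-precision loss scaling

# Unscaled: backward on the loss itself, so dL/dA = 2 * A
loss = (A ** 2).sum()
loss.backward()
unscaled = A.grad.clone()

# Scaled: backward on scale * loss multiplies every gradient by scale
A.grad = None  # reset the stored gradient before the second pass
(scale * (A ** 2).sum()).backward()
scaled = A.grad.clone()

print(unscaled)        # tensor([2., 4., 6.])
print(scaled)          # tensor([2048., 4096., 6144.])
print(scaled / scale)  # dividing by the scale recovers the unscaled gradient
```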

How to Calculate the Unscaled Gradient of a Tensor in PyTorch?

The unscaled gradient of a Tensor is the actual rate of change of the selected loss function with respect to the tensor's values. This raw gradient is important for understanding the behavior of the model and how it progresses during the training loop.
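One way to see that the gradient really is “the actual rate of change” is to compare it with a numerical estimate. The sketch below is an illustration under assumed values: the cubic loss and the tensor entries are hypothetical, and central finite differences, (L(a + h) - L(a - h)) / 2h, stand in as the independent check.

```python
import torch

def loss_fn(t):
    # Hypothetical loss used only for this check: L = sum(t_i^3), so dL/dt_i = 3 * t_i^2
    return (t ** 3).sum()

A = torch.tensor([0.5, -1.0, 2.0], dtype=torch.float64, requires_grad=True)
loss_fn(A).backward()
autograd_grad = A.grad.clone()

# Central finite differences, one element at a time
h = 1e-6
numeric_grad = torch.zeros_like(autograd_grad)
with torch.no_grad():
    for i in range(A.numel()):
        e = torch.zeros_like(A)
        e[i] = h
        numeric_grad[i] = (loss_fn(A + e) - loss_fn(A - e)) / (2 * h)

print(autograd_grad)                                            # 3 * A**2
print(torch.allclose(autograd_grad, numeric_grad, atol=1e-6))   # True
```

Double precision is used here so the finite-difference estimate is accurate enough to compare closely with the autograd result.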

Follow the steps given below to learn how to calculate the unscaled gradient of a tensor in PyTorch:

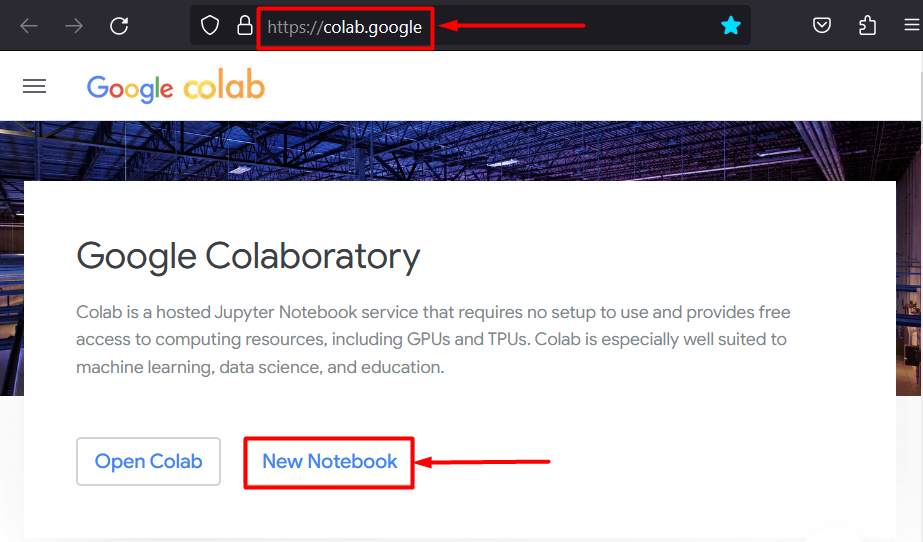

Step 1: Begin the Project by Setting Up the IDE

Google Colaboratory (Colab) is a convenient IDE for PyTorch projects because it provides free access to GPUs for faster processing. Go to the Colab website and click on the “New Notebook” option to start working:

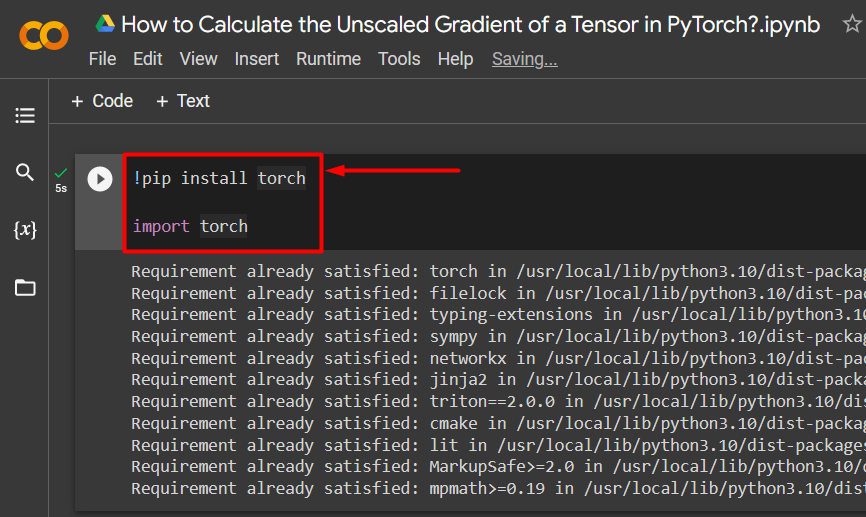
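Colab only attaches a GPU if one is selected under Runtime > Change runtime type. A short check like the following sketch confirms which device the notebook is actually using; the variable name “device” is just an illustrative choice.

```python
import torch

# Use the GPU if Colab assigned one to the notebook, otherwise fall back to the CPU
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
print("Running on:", device)
```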

Step 2: Import the Essential Torch Library

All the functionality of the PyTorch framework is contained within the “torch” library. Every PyTorch project starts by installing and importing this library (Colab ships with it preinstalled, so the install step is usually optional there):

!pip install torch

import torch

The above code works as follows:

- “!pip” runs pip, Python's package installer, as a shell command from the notebook to install libraries into the project.

- The “import” statement loads an installed library into the project.

- This project only needs the functionality of the “torch” library.

Step 3: Define a PyTorch Tensor with Gradient

Use the “torch.tensor()” method to define a tensor, and pass the “requires_grad=True” argument so that PyTorch tracks the operations on it and computes its gradient:

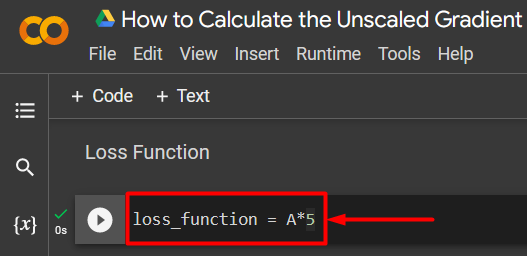
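In code, this step looks roughly like the sketch below. The values are hypothetical and may differ from the ones in the screenshot. Note that only floating-point (or complex) tensors can require gradients, which is why the entries are written as 2.0, 3.0, and 4.0 rather than integers.

```python
import torch

# Hypothetical values; the tensor in the screenshot may differ
A = torch.tensor([2.0, 3.0, 4.0], requires_grad=True)
print(A)                # tensor([2., 3., 4.], requires_grad=True)
print(A.requires_grad)  # True
print(A.grad)           # None until backward() has been called
```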

Step 4: Define a Simple Loss Function

A loss function is defined using a simple arithmetic equation as shown:

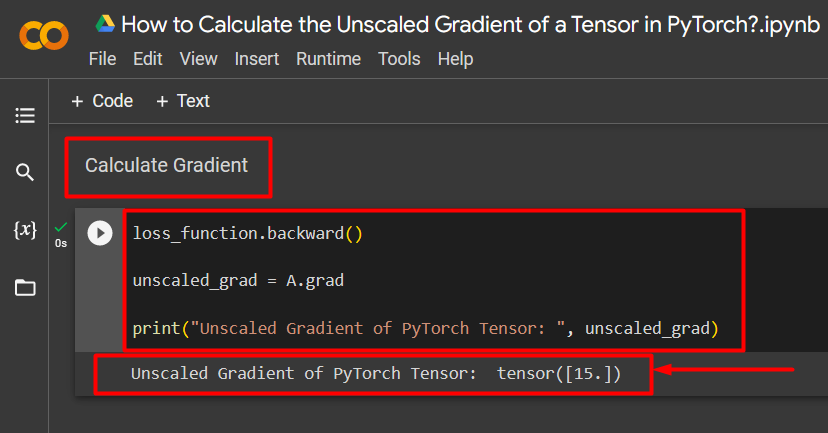
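As an illustration, here is a hypothetical loss built from the tensor with simple arithmetic; the formula is an assumption, not necessarily the one in the screenshot. It is summed to a single number because “backward()” can only be called without arguments on a scalar output. For this choice, L = sum(A_i^2) + 3 * sum(A_i), so the gradient works out to dL/dA_i = 2 * A_i + 3.

```python
import torch

A = torch.tensor([2.0, 3.0, 4.0], requires_grad=True)

# Hypothetical loss: L = sum(A_i^2) + 3 * sum(A_i), so dL/dA_i = 2 * A_i + 3
loss = (A ** 2).sum() + 3 * A.sum()
print(loss)  # a scalar tensor (56.0 here) that carries a grad_fn for backward propagation
```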

Step 5: Calculate the Gradient and Print to Output

Call the “backward()” method on the loss to calculate the unscaled gradient, then read it from the tensor's “grad” attribute as shown:

# Assumes the loss from Step 4 is stored in a variable named "loss"
loss.backward()

unscaled_grad = A.grad

print("Unscaled Gradient of PyTorch Tensor: ", unscaled_grad)

The above code works as follows:

- Use the “backward()” method on the loss to calculate the unscaled gradient via backward propagation; PyTorch stores the result in “A.grad”.

- Assign “A.grad” to the “unscaled_grad” variable.

- Lastly, use the “print()” function to display the unscaled gradient.
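Steps 3 to 5 can be combined into one runnable sketch. The tensor values and the loss formula are hypothetical, chosen so the gradient is easy to check by hand (2 * A + 3). The sketch also shows a detail worth knowing when reading “.grad”: PyTorch accumulates gradients across “backward()” calls, so the stored value should be reset before computing a fresh gradient.

```python
import torch

# Steps 3-5 in one place, with hypothetical values
A = torch.tensor([2.0, 3.0, 4.0], requires_grad=True)
loss = (A ** 2).sum() + 3 * A.sum()   # dL/dA = 2 * A + 3

loss.backward()
unscaled_grad = A.grad.clone()  # clone so later accumulation cannot change this copy
print("Unscaled Gradient of PyTorch Tensor: ", unscaled_grad)  # values 7, 9, 11

# .grad accumulates: a second backward pass adds to the stored value
loss = (A ** 2).sum() + 3 * A.sum()
loss.backward()
doubled_grad = A.grad.clone()
print("After a second backward():", doubled_grad)  # values 14, 18, 22

# Reset the stored gradient before computing a fresh one
A.grad = None
```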


Pro-Tip

The unscaled gradient of a tensor shows exactly how sensitive the loss function is to each element of the input tensor in a PyTorch neural network. Because the raw gradient has not been scaled or edited, its sign tells you whether increasing an element raises or lowers the loss, and its magnitude tells you how strongly, at least locally.
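A small sketch of reading the gradient this way: the weighted loss below is hypothetical, built so that each element influences the loss with a different strength. It uses “torch.autograd.grad()”, which returns the gradient directly instead of storing it in “.grad”, and then picks out the element with the largest gradient magnitude.

```python
import torch

# Hypothetical weighted loss: each element affects the loss with a different strength
A = torch.tensor([1.0, 1.0, 1.0], requires_grad=True)
weights = torch.tensor([0.1, 1.0, 10.0])
loss = (weights * A ** 2).sum()   # dL/dA_i = 2 * weights_i * A_i

(grad,) = torch.autograd.grad(loss, A)  # returns the gradient without touching A.grad
print(grad)                              # larger magnitude: loss more sensitive to that element
print(grad.abs().argmax().item())        # index of the most influential element (2 here)
```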

Success! We have just shown how to calculate the unscaled gradient of a tensor in PyTorch.

Conclusion

To calculate the unscaled gradient of a tensor in PyTorch, first define the tensor with “requires_grad=True”, then compute a loss from it, and finally call the backward() method on the loss and read the gradient from the tensor's “grad” attribute. This shows how the deep learning model relates the input data to the defined loss function. In this blog, we have given a step-by-step tutorial on how to calculate the unscaled gradient of a tensor in PyTorch.