LangChain is a framework for building Natural Language Processing (NLP) applications on top of models that understand and produce human-like language. Users can ask these models questions or hold a conversation with them much as they would with another person. LangChain is used to build chains that can store the earlier sentences of a conversation and use them as context for the next interaction.
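Reusing earlier turns as context can be illustrated without LangChain at all. The `ConversationBuffer` class below is hypothetical and is not LangChain's API. It is a minimal sketch that assumes the model receives the whole history as one prompt string.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class ConversationBuffer:
    """Hypothetical helper: keeps every turn and renders it as prompt context."""

    turns: List[Tuple[str, str]] = field(default_factory=list)

    def add(self, speaker: str, text: str) -> None:
        # Store each sentence of the conversation in order
        self.turns.append((speaker, text))

    def as_context(self) -> str:
        # Join the stored turns so the next model call can see the history
        return "\n".join(f"{speaker}: {text}" for speaker, text in self.turns)


buffer = ConversationBuffer()
buffer.add("Human", "Hi, I run a sock shop.")
buffer.add("AI", "Nice! How can I help?")
buffer.add("Human", "Suggest a name for it.")
print(buffer.as_context())
```

Each new question is answered with the full transcript in front of the model, which is how earlier sentences become context for later ones.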

This post illustrates how to build an LLM and an LLMChain in LangChain.

How to Build LLM and LLMChain in LangChain?

To build an LLM and an LLMChain in LangChain, go through the following steps:

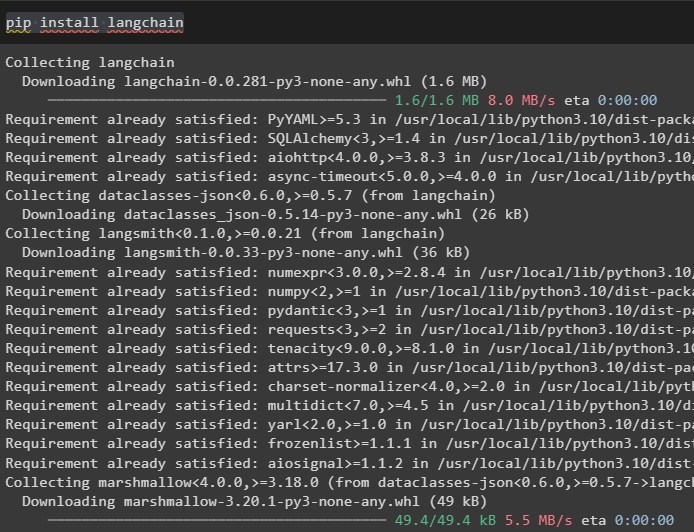

Step 1: Install Modules

First, install the LangChain module with `pip install langchain` to use its libraries for building LLMs and LLMChains:

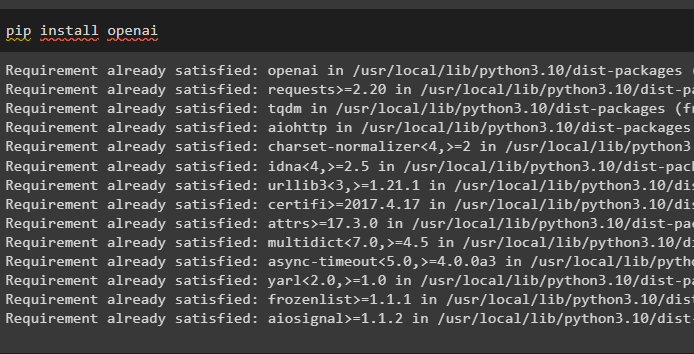

The OpenAI module is also required to build the LLMs. Install it with the `pip install openai` command:

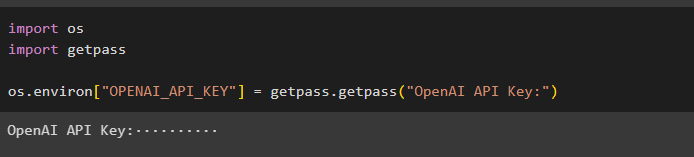
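Before moving on, it can help to confirm that both packages are importable from the current Python environment. This is a small sketch using only the standard library's `importlib.util.find_spec`. The helper name `missing_modules` is hypothetical.

```python
import importlib.util
from typing import Iterable, List


def missing_modules(names: Iterable[str]) -> List[str]:
    """Return the module names that cannot be found in this environment."""
    # find_spec returns None when a top-level module is not installed
    return [name for name in names if importlib.util.find_spec(name) is None]


missing = missing_modules(["langchain", "openai"])
if missing:
    print("Install these first:", ", ".join(missing))
else:
    print("LangChain and OpenAI are installed")
```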

Step 2: Set up an Environment

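Set up the environment by entering the OpenAI API key, which is read with getpass and stored as an environment variable:

```python
import getpass
import os

# Prompt for the key without echoing it, and store it where the OpenAI integration looks for it
os.environ["OPENAI_API_KEY"] = getpass.getpass("OpenAI API Key:")
```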
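If the key is already exported in the shell, prompting for it every time is unnecessary. The following is a minimal sketch that reuses an existing `OPENAI_API_KEY` and only falls back to the getpass prompt when the key is missing. `ensure_api_key` is a hypothetical helper name.

```python
import getpass
import os


def ensure_api_key(var: str = "OPENAI_API_KEY") -> str:
    """Return the API key from the environment, prompting only if it is unset."""
    key = os.environ.get(var)
    if not key:
        # Ask without echoing the key to the terminal, then cache it for this process
        key = getpass.getpass(f"{var}:")
        os.environ[var] = key
    return key
```

Call `ensure_api_key()` once before creating any models. When the variable is already set, the call returns the key without showing a prompt.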

Example 1: Build LLMs Using LangChain

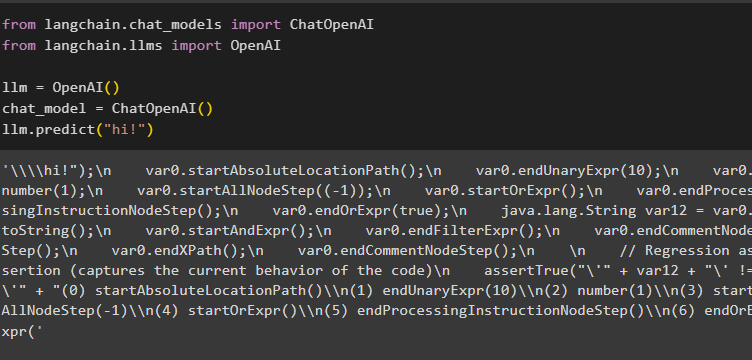

The first example builds Large Language Models with LangChain by importing the OpenAI and ChatOpenAI classes and calling their predict() function:

Step 1: Using the LLM Chat Model

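Import the OpenAI and ChatOpenAI classes to build a simple LLM and a chat model that both use the OpenAI environment from LangChain:

```python
from langchain.llms import OpenAI
from langchain.chat_models import ChatOpenAI

# Text-in, text-out LLM
llm = OpenAI()
# Chat model that works with chat messages
chat_model = ChatOpenAI()

llm.predict("hi!")
chat_model.predict("hi!")
```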

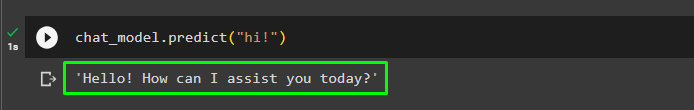

The model has replied to the “hi!” greeting, as displayed in the screenshot.

The predict() function of chat_model is called in the same way to get a reply from the chat model.

The output shows that the model is ready to answer the user's queries.
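The two objects differ in what they accept. `llm` is a text-in, text-out model, while `chat_model` works with lists of chat messages. The stub classes below are hypothetical stand-ins, not LangChain classes. They only echo their input, so the two call shapes can be compared without an API key.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Message:
    role: str  # e.g. "human" or "ai"
    content: str


class EchoLLM:
    """Hypothetical text-in, text-out model: returns a string."""

    def predict(self, text: str) -> str:
        return f"You said: {text}"


class EchoChatModel:
    """Hypothetical chat model: takes messages, returns an AI message."""

    def predict_messages(self, messages: List[Message]) -> Message:
        last = messages[-1].content
        return Message(role="ai", content=f"You said: {last}")

    def predict(self, text: str) -> str:
        # Convenience path: wrap plain text as a human message and return only the reply text
        return self.predict_messages([Message("human", text)]).content


print(EchoLLM().predict("hi!"))
print(EchoChatModel().predict("hi!"))
```

The chat stub's `predict()` simply wraps the text in a message and unwraps the reply. This loosely mirrors why both objects can be called with a plain string above.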

Step 2: Using Text Query

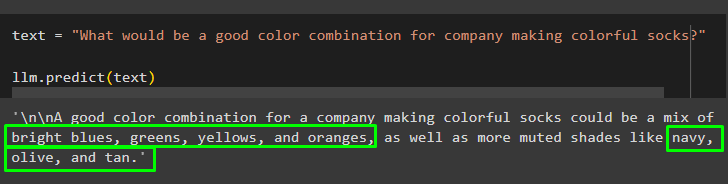

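The user can also get answers from the model by storing a complete sentence in the text variable and passing it to predict():

```python
# Any complete question works here; this one is illustrative
text = "What are some good color combinations for colorful socks?"
llm.predict(text)
```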

The model has displayed multiple color combinations for colorful socks:

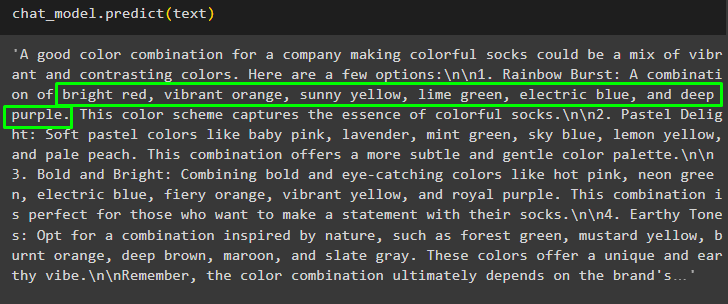

The predict() function returns a detailed reply with the color combinations for the socks:

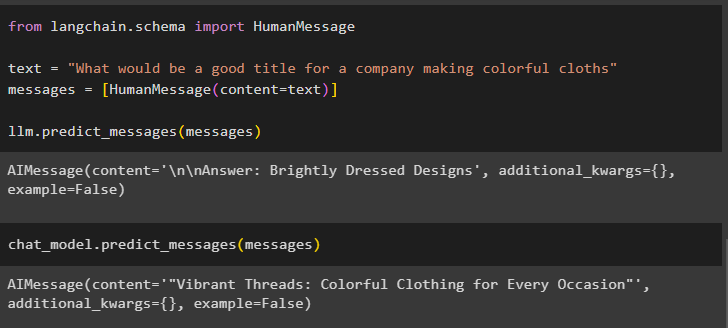
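When the same kind of question is asked repeatedly, the text can be generated from a template instead of being typed each time. This sketch uses plain `str.format`. The template wording and the `build_question` helper are hypothetical. LangChain's own prompt templates, used in Example 2, fill `{placeholders}` in a similar way.

```python
QUESTION_TEMPLATE = "What are some good color combinations for {product}?"


def build_question(product: str) -> str:
    """Fill the hypothetical template with the product to ask about."""
    return QUESTION_TEMPLATE.format(product=product)


text = build_question("colorful socks")
print(text)
```

The resulting string can then be passed to `llm.predict(text)`.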

Step 3: Using Text With Content

The user can also wrap the text in a message object to get an answer along with a short explanation:

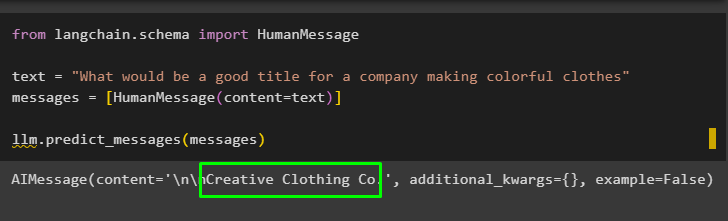

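```python
from langchain.schema import HumanMessage

text = "What would be a good title for a company making colorful clothes"
# Wrap the question in a HumanMessage so it is sent as the user's turn
messages = [HumanMessage(content=text)]

llm.predict_messages(messages)
```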

The predict_messages() call returns the suggested title for the company, “Creative Clothing Co”, along with an explanation:

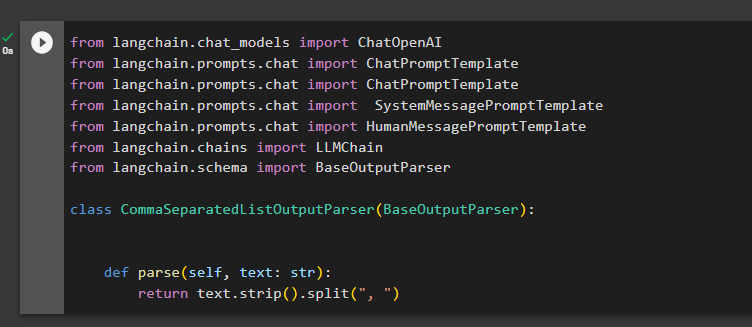
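A chat model ultimately receives the conversation as a list of role/content pairs. The following is a hypothetical, dependency-free illustration of that idea. It assumes messages are mapped to the `system`/`user`/`assistant` roles used by OpenAI's chat format. It is not LangChain's conversion code.

```python
from dataclasses import dataclass
from typing import Dict, List

# Map message kinds to OpenAI-style chat roles
ROLE_MAP = {"system": "system", "human": "user", "ai": "assistant"}


@dataclass
class ChatMessage:
    kind: str  # hypothetical field: "system", "human", or "ai"
    content: str


def to_role_dicts(messages: List[ChatMessage]) -> List[Dict[str, str]]:
    """Convert messages into the list of role/content dicts a chat API expects."""
    return [{"role": ROLE_MAP[m.kind], "content": m.content} for m in messages]


text = "What would be a good title for a company making colorful clothes"
payload = to_role_dicts([ChatMessage("human", text)])
print(payload)
```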

Example 2: Build LLMChain Using LangChain

The second example builds an LLMChain that combines the steps from the previous example into a single human-style interaction:

```python
from langchain.chat_models import ChatOpenAI
from langchain.prompts.chat import (
    ChatPromptTemplate,
    HumanMessagePromptTemplate,
    SystemMessagePromptTemplate,
)
from langchain.chains import LLMChain
from langchain.schema import BaseOutputParser


class CommaSeparatedListOutputParser(BaseOutputParser):
    """Parse the LLM output into a list of comma-separated items."""

    def parse(self, text: str):
        return text.strip().split(", ")
```

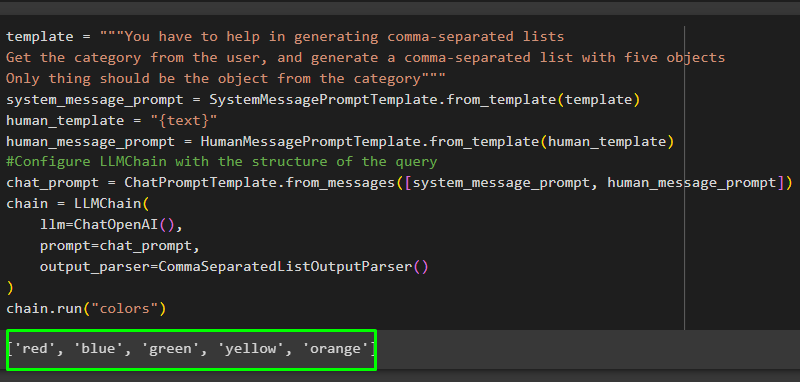
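The parser splits on the exact separator `", "`. A reply such as `red,blue`, with no space after the comma, would therefore come back as a single item. The following is a slightly more tolerant version of the same parse logic. It is written as a plain function so it can be tried without LangChain. It splits on commas and trims each item. The name `parse_comma_list` is hypothetical.

```python
from typing import List


def parse_comma_list(text: str) -> List[str]:
    """Split a comma-separated reply into clean, non-empty items."""
    # Split on bare commas, strip surrounding whitespace, and drop empty pieces
    return [item.strip() for item in text.split(",") if item.strip()]


print(parse_comma_list("red, blue,green ,  yellow, purple\n"))
```

The same body could be used inside `CommaSeparatedListOutputParser.parse` if the model's spacing is inconsistent.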

Next, write a template that explains the chat model's task. Then build the LLMChain with the LLM, the chat prompt, and the output parser:

```python
template = """Get the category from the user, and generate a comma-separated list with five objects.
Return only the objects from the category."""
system_message_prompt = SystemMessagePromptTemplate.from_template(template)

# The human message is just the user's input
human_template = "{text}"
human_message_prompt = HumanMessagePromptTemplate.from_template(human_template)

# Configure the LLMChain with the structure of the query
chat_prompt = ChatPromptTemplate.from_messages([system_message_prompt, human_message_prompt])
chain = LLMChain(
    llm=ChatOpenAI(),
    prompt=chat_prompt,
    output_parser=CommaSeparatedListOutputParser(),
)
chain.run("colors")
```

The chain returns a list of five colors, because the prompt instructs the model to return only five objects from the category.
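Conceptually, `chain.run("colors")` formats the prompt, calls the model, and parses the reply. The following reproduces those three steps in plain Python with a hypothetical `fake_model` that returns a fixed answer. This lets you follow the flow without an API key. It illustrates the idea and is not LangChain's implementation.

```python
from typing import Callable, Dict, List

SYSTEM_TEMPLATE = (
    "Get the category from the user, and generate a comma-separated list "
    "with five objects. Return only the objects from the category."
)


def format_prompt(category: str) -> List[Dict[str, str]]:
    # Step 1: fill the templates into system and human messages
    return [
        {"role": "system", "content": SYSTEM_TEMPLATE},
        {"role": "user", "content": "{text}".format(text=category)},
    ]


def fake_model(messages: List[Dict[str, str]]) -> str:
    # Hypothetical stand-in for ChatOpenAI: always returns the same reply
    return "red, blue, green, yellow, purple"


def parse(text: str) -> List[str]:
    # Step 3: same logic as CommaSeparatedListOutputParser.parse
    return text.strip().split(", ")


def run_chain(
    category: str, model: Callable[[List[Dict[str, str]]], str] = fake_model
) -> List[str]:
    # Step 2: send the formatted prompt to the model, then parse its reply
    return parse(model(format_prompt(category)))


print(run_chain("colors"))
```

Passing a different `model` callable swaps the backend while the prompt and parsing steps stay the same. This separation is what LLMChain provides.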

That’s all about building the LLM and LLMChain in LangChain.

Conclusion

To build an LLM and an LLMChain using LangChain, install the LangChain and OpenAI modules and set up the environment with the OpenAI API key. After that, build the LLM and chat model and query them with a single text prompt or a list of chat messages. An LLMChain then combines the chat prompt template, the model, and the output parser so that the whole interaction runs with a single call. This post has illustrated the process of building an LLM and an LLMChain using the LangChain framework.