This post illustrates how to build LangChain applications using a prompt template and an output parser.

How to Build LangChain Applications Using Prompt Template and Output Parser?

To build a LangChain application using a prompt template and an output parser, follow the steps in this guide:

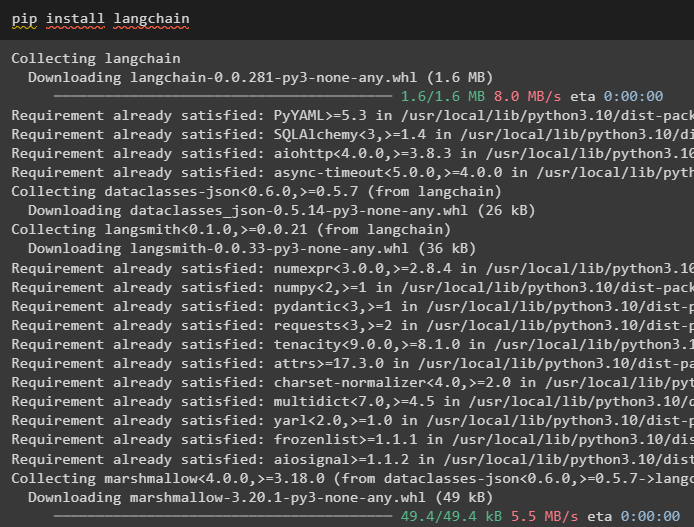

Step 1: Install LangChain

First, install the LangChain framework with pip by running `pip install langchain`. This also installs the “langchain-core” package, which provides the prompt template and output parser classes used in this guide.

Step 2: Use a Prompt Template

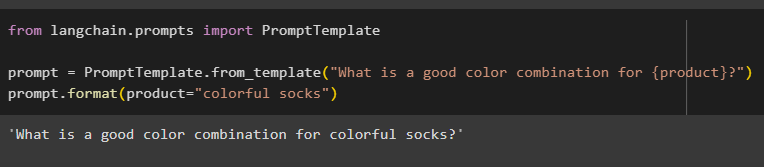

After installing LangChain, import the “PromptTemplate” class and build a prompt template: a reusable query with placeholders that are filled in before the text is sent to the model:

```python
from langchain_core.prompts import PromptTemplate

prompt = PromptTemplate.from_template("What is a good color combination for {product}?")
prompt.format(product="colorful socks")  # fills the {product} placeholder
```

The output is the template sentence with the “{product}” placeholder replaced by “colorful socks”:

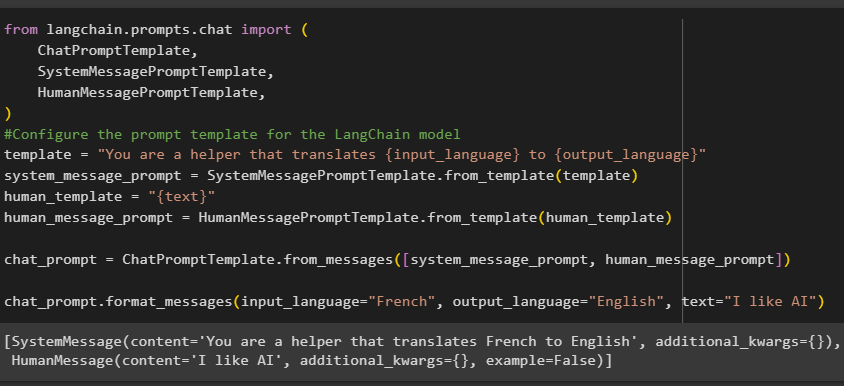
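By default, “PromptTemplate.from_template” treats the template as an f-string-style string with “{placeholder}” fields. As a rough plain-Python sketch of that behavior (not LangChain's own implementation), the standard library's “string.Formatter” can list the placeholder names and “str.format” can fill them in:

```python
from string import Formatter

template = "What is a good color combination for {product}?"

# List the placeholder names, comparable to PromptTemplate's input_variables
input_variables = [
    field for _, field, _, _ in Formatter().parse(template) if field
]

# Fill the placeholder, comparable to prompt.format(product="colorful socks")
prompt_text = template.format(product="colorful socks")

print(input_variables)  # ['product']
print(prompt_text)      # What is a good color combination for colorful socks?
```

Because the syntax follows Python's formatting rules, a literal brace inside such a template is written as “{{” or “}}”.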

Next, build a chat prompt template by importing the ChatPromptTemplate, SystemMessagePromptTemplate, and HumanMessagePromptTemplate classes from LangChain:

```python
from langchain_core.prompts import (
    ChatPromptTemplate,
    SystemMessagePromptTemplate,
    HumanMessagePromptTemplate,
)

# Configure the prompt template for the LangChain model
template = "You are a helper that translates {input_language} to {output_language}"
system_message_prompt = SystemMessagePromptTemplate.from_template(template)

human_template = "{text}"
human_message_prompt = HumanMessagePromptTemplate.from_template(human_template)

# Combine the system and human messages into one chat prompt, then fill all placeholders
chat_prompt = ChatPromptTemplate.from_messages([system_message_prompt, human_message_prompt])
chat_prompt.format_messages(input_language="French", output_language="English", text="I like AI")
```

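The “template” variable defines the system message, which tells the model its role, while “human_template” holds the user's text. Calling “format_messages()” fills every placeholder and returns a list containing a system message followed by a human message.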
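To make the structure of that result concrete, here is a plain-Python approximation. It uses hypothetical (role, content) tuples in place of LangChain's message classes and a hypothetical “format_messages” helper, and shows how each message template is filled from the same set of variables:

```python
# Hypothetical stand-in for ChatPromptTemplate: a list of (role, template) pairs
chat_templates = [
    ("system", "You are a helper that translates {input_language} to {output_language}"),
    ("human", "{text}"),
]


def format_messages(templates, **variables):
    """Fill every message template with the same keyword arguments."""
    # str.format ignores unused keyword arguments, so each template
    # only uses the placeholders it actually contains
    return [(role, tmpl.format(**variables)) for role, tmpl in templates]


messages = format_messages(
    chat_templates,
    input_language="French",
    output_language="English",
    text="I like AI",
)

for role, content in messages:
    print(f"{role}: {content}")
# system: You are a helper that translates French to English
# human: I like AI
```

In recent LangChain versions, “ChatPromptTemplate.from_messages” also accepts (role, template) tuples such as ("system", ...) and ("human", ...) directly, which avoids creating the message prompt objects one by one.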

Prompt templates only format the query; they do not answer it. The answer comes from a language model, and an output parser converts the model's raw text response into a structured result, such as a Python list. The next step builds such a parser.

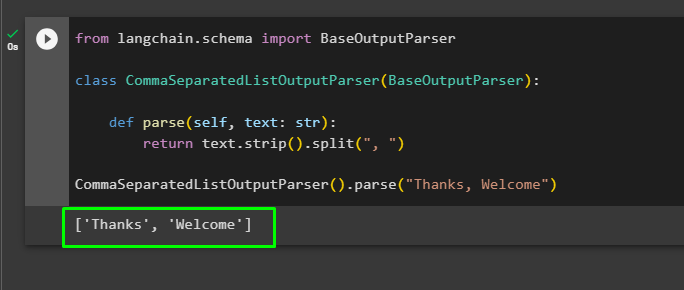
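The division of labor between the two pieces can be sketched end to end: the template formats the input, a model produces text, and the parser structures that text. In this plain-Python sketch, “fake_model” is a hypothetical stand-in that always returns a fixed reply instead of calling a real LLM, and “parse_list” mirrors the parser built in the next step. LangChain itself typically composes these stages as “prompt | model | parser”.

```python
def fake_model(prompt_text: str) -> str:
    """Hypothetical stand-in for an LLM call; always returns the same reply."""
    return "navy, white, mustard"


def parse_list(text: str) -> list[str]:
    """Turn the model's comma-separated reply into a Python list."""
    return text.strip().split(", ")


template = "What is a good color combination for {product}? Answer with a comma-separated list."

prompt_text = template.format(product="colorful socks")  # 1. format the query
raw_reply = fake_model(prompt_text)                      # 2. get raw text from the "model"
colors = parse_list(raw_reply)                           # 3. structure the reply

print(colors)  # ['navy', 'white', 'mustard']
```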

Step 3: Use an Output Parser

Now, import the BaseOutputParser class from LangChain and subclass it to build a parser that splits comma-separated text into a Python list:

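```python
from typing import List

from langchain_core.output_parsers import BaseOutputParser


class CommaSeparatedListOutputParser(BaseOutputParser[List[str]]):
    """Parse comma-separated text into a list of strings."""

    def parse(self, text: str) -> List[str]:
        return text.strip().split(", ")


CommaSeparatedListOutputParser().parse("Thanks, Welcome")  # ['Thanks', 'Welcome']
```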
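Because “parse()” splits on the exact string ", ", an input such as "red,blue" would come back as a single item. A slightly more tolerant variant is sketched below as a plain function (the name “parse_comma_separated” is hypothetical); its body could be used inside “parse()” instead:

```python
def parse_comma_separated(text: str) -> list[str]:
    """Split on commas, trim whitespace around each item, and drop empty items."""
    return [item.strip() for item in text.split(",") if item.strip()]


print(parse_comma_separated("Thanks, Welcome"))     # ['Thanks', 'Welcome']
print(parse_comma_separated("red,blue ,  green,"))  # ['red', 'blue', 'green']
```

Splitting on the bare comma and stripping afterwards handles missing or extra spaces, and the filter discards the empty string left by a trailing comma.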

That is all about building the LangChain application using the prompt template and output parser.

Conclusion

To build a LangChain application using a prompt template and an output parser, install LangChain and import the required classes from it. The PromptTemplate class (or ChatPromptTemplate for chat models) defines the structure of the query so that the model receives consistently formatted input. An output parser, such as a subclass of BaseOutputParser, then converts the model's raw text response into a structured result, such as a Python list. This guide has explained the process of building LangChain applications using a prompt template and an output parser.