Quick Outline

This post will demonstrate the following:

How to Build a Customized Conversational Memory in LangChain

- Install Modules

- Import Libraries

- Configure ConversationChain

- Set Prompt Template & AI Assistant

- Configure Human Prefix

How to Build a Customized Conversational Memory in LangChain?

LangChain enables the user to build a customized conversational memory that stores previous messages as observations. The model or chatbot uses these observations to recognize the context of the conversation. With conversational memory, the model can also resolve ambiguous references in the user's prompts, such as “it” or “there” pointing back to something mentioned earlier, which humans use all the time in conversation.
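To make the idea concrete, here is a minimal pure-Python sketch of a buffer memory. It is not LangChain's implementation: the class `SimpleBufferMemory` is hypothetical, and although its method names echo LangChain's memory interface (`save_context()`, `load_memory_variables()`), the signatures are simplified. It only illustrates how stored turns become context text.

```python
from dataclasses import dataclass, field


@dataclass
class SimpleBufferMemory:
    """Hypothetical stand-in for a conversation buffer memory (not LangChain code)."""

    human_prefix: str = "Human"
    ai_prefix: str = "AI"
    turns: list = field(default_factory=list)  # list of (speaker, text) tuples

    def save_context(self, user_text: str, ai_text: str) -> None:
        # Store one full exchange: the user's message and the model's reply
        self.turns.append((self.human_prefix, user_text))
        self.turns.append((self.ai_prefix, ai_text))

    def load_memory_variables(self) -> dict:
        # Render the stored turns as "Speaker: text" lines under the "history" key
        history = "\n".join(f"{speaker}: {text}" for speaker, text in self.turns)
        return {"history": history}


memory = SimpleBufferMemory()
memory.save_context("Hi, my name is Sam.", "Hello Sam! How can I help?")
memory.save_context("What is my name?", "Your name is Sam.")
print(memory.load_memory_variables()["history"])
```

Because the whole buffer is replayed, a later question such as “What is my name?” can be answered from an earlier turn.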

To learn the process of building the customized conversational memory in LangChain, simply go through the following steps:

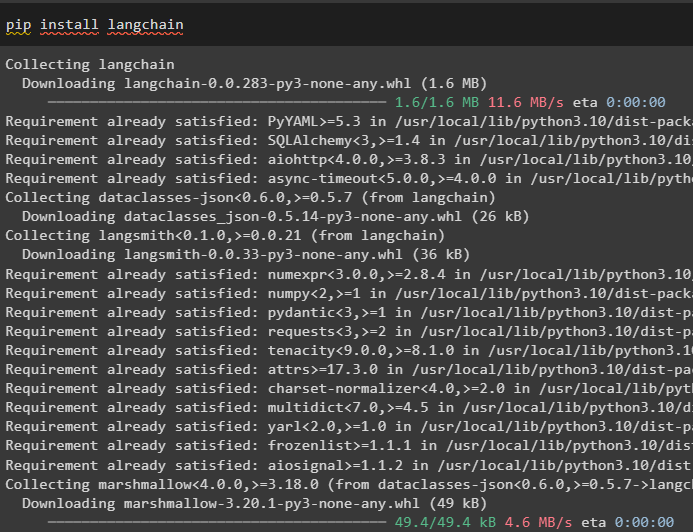

Step 1: Install Modules

The first step is to install LangChain with `pip install langchain`, which brings in the dependencies and libraries for building chatbots and LLM applications.

Next, install the OpenAI package with `pip install openai`; LangChain's OpenAI wrapper relies on it to call the models.

The “os” and “getpass” libraries are used to set the OpenAI API key as an environment variable. When prompted, paste the API key from your OpenAI account, which you can create after signing in:

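```python
import os
import getpass

# Prompt for the key without echoing it, then expose it to LangChain via the environment
os.environ["OPENAI_API_KEY"] = getpass.getpass("OpenAI API Key:")
```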
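If the key is already exported in the shell, prompting again is unnecessary. A small variation, assuming you want the prompt only as a fallback, checks the environment first; the helper name `ensure_openai_key` is hypothetical.

```python
import getpass
import os


def ensure_openai_key(var_name: str = "OPENAI_API_KEY") -> str:
    """Hypothetical helper: return the key, prompting with getpass only if it is unset."""
    key = os.environ.get(var_name)
    if not key:
        # getpass hides the typed characters, so the key is not echoed to the screen
        key = getpass.getpass("OpenAI API Key:")
        os.environ[var_name] = key
    return key
```

Calling `ensure_openai_key()` instead of the assignment above keeps a notebook rerunnable without retyping the key.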

Step 2: Import Libraries

After setting up the environment, import the required classes from LangChain. The “ConversationBufferMemory” class from “langchain.memory” is used to build the conversational memory. After that, configure the LLM using the OpenAI() constructor with a temperature of 0. Temperature controls the randomness of the output, and 0 makes the responses as repeatable as possible:

```python
# Import the buffer memory class and the OpenAI LLM wrapper from LangChain
from langchain.memory import ConversationBufferMemory
from langchain.llms import OpenAI

# temperature=0 keeps the output (nearly) deterministic
llm = OpenAI(temperature=0)
```

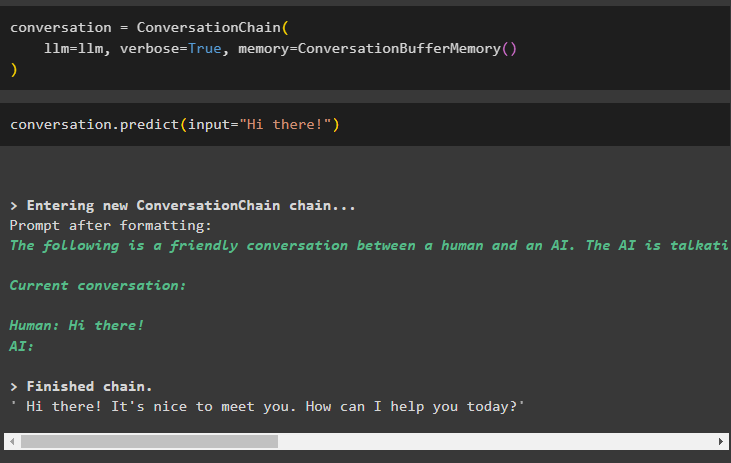
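To see why `temperature=0` makes the output nearly deterministic, here is a small illustration of temperature-scaled softmax over made-up token scores. It assumes the common formulation in which logits are divided by the temperature, and it treats temperature 0 as greedy (argmax) selection; OpenAI's exact sampling internals are not described in this guide.

```python
import math


def token_probabilities(logits, temperature):
    """Softmax over logits scaled by temperature; temperature 0 is treated as greedy."""
    if temperature == 0:
        # Greedy decoding: all probability mass goes to the highest-scoring token
        best = max(range(len(logits)), key=lambda i: logits[i])
        return [1.0 if i == best else 0.0 for i in range(len(logits))]
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5]  # made-up scores for three candidate tokens
for t in (0, 0.5, 1.0, 2.0):
    probs = token_probabilities(logits, t)
    print(t, [round(p, 3) for p in probs])
```

Lower temperatures concentrate probability on the top-scoring token, while higher ones spread it out, which is why a value of 0 suits repeatable answers.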

Step 3: Configure ConversationChain

Now, create the conversation variable with ConversationChain(), passing a ConversationBufferMemory() instance as its memory argument:

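```python
from langchain.chains import ConversationChain

# Chain that stores every exchange in a buffer memory; verbose=True prints the full prompt
conversation = ConversationChain(
    llm=llm, verbose=True, memory=ConversationBufferMemory()
)
```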
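Conceptually, a conversation chain with memory fills a prompt template with two variables: `history` from the memory and `input` from the user. The sketch below imitates that assembly in plain Python; the template text is an assumed paraphrase in the style of ConversationChain's default prompt, not copied from the library.

```python
# Hypothetical template in the style of a conversation chain's default prompt
TEMPLATE = (
    "The following is a friendly conversation between a human and an AI.\n\n"
    "Current conversation:\n"
    "{history}\n"
    "Human: {input}\n"
    "AI:"
)


def build_prompt(history: str, user_input: str) -> str:
    # str.format fills the same two placeholders the chain expects: history and input
    return TEMPLATE.format(history=history, input=user_input)


prior = "Human: Hi there!\nAI: Hello! How can I help you today?"
print(build_prompt(prior, "What is the weather like today?"))
```

The prompt ends with “AI:”, so the model's continuation is read as the AI's reply. This is also why the speaker labels stored in memory and the labels in the template must agree, which matters in Steps 4 and 5.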

Call the predict() method on the conversation variable, passing the command/prompt through its input argument:

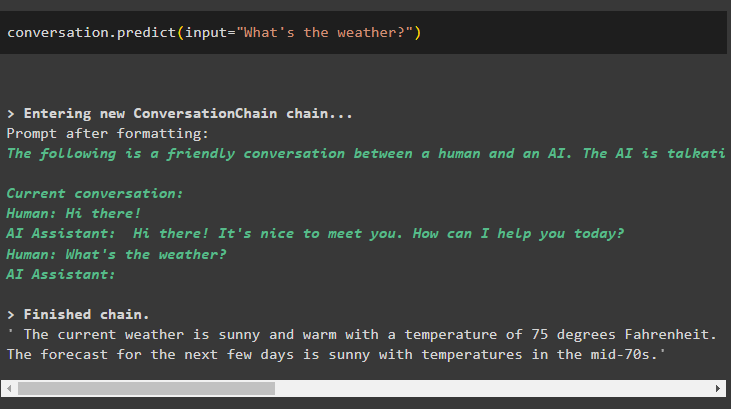

Running the above command starts the conversation between the human and the model, and the model replies once the chain finishes. The exchange is stored in the memory and used as context for the following messages:

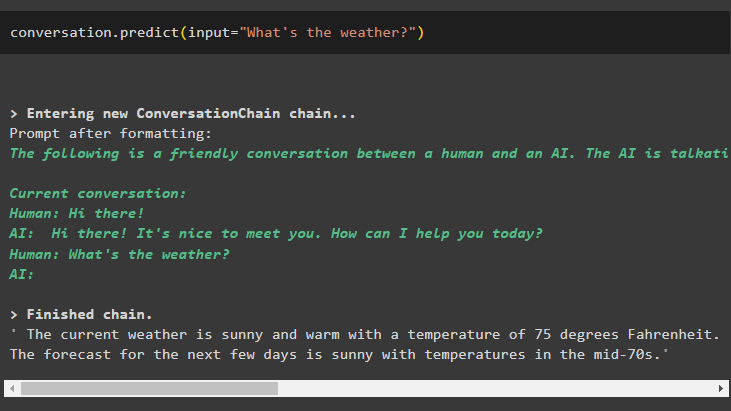

Now, ask a question about the current weather to get the output from the chatbot:

The chatbot answers the weather question, and the verbose output shows that the previous exchange, stored in memory as an observation, was included in the prompt as context:

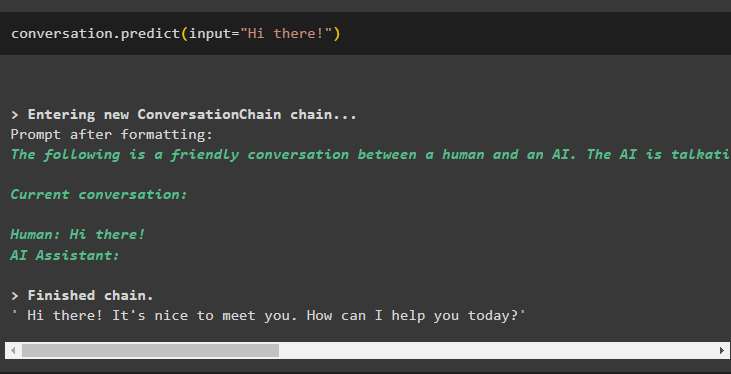
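The turn-by-turn behavior described above can be simulated without an API key. In this sketch, `fake_llm` is a hypothetical stand-in for the OpenAI model and `SimpleConversation.predict` loosely mimics what `conversation.predict(input=...)` does: build the prompt from memory, get a reply, then save the exchange. It is an illustration, not LangChain code.

```python
class SimpleConversation:
    """Hypothetical mimic of a chain with buffer memory (not LangChain code)."""

    def __init__(self, llm):
        self.llm = llm
        self.history = []  # rendered "Speaker: text" lines
        self.prompts = []  # every prompt sent, kept for inspection (like verbose=True)

    def predict(self, input: str) -> str:  # named `input` to mirror predict(input=...)
        prompt = (
            "Current conversation:\n"
            + "\n".join(self.history)
            + f"\nHuman: {input}\nAI:"
        )
        self.prompts.append(prompt)
        reply = self.llm(prompt)
        # Save the exchange so the next call sees it as context
        self.history += [f"Human: {input}", f"AI: {reply}"]
        return reply


def fake_llm(prompt: str) -> str:
    # Stand-in model: reports how many human messages appear in its prompt
    return f"I can see {prompt.count('Human:')} human message(s)."


conversation = SimpleConversation(fake_llm)
print(conversation.predict(input="Hi there!"))
print(conversation.predict(input="What is the weather like today?"))
print(conversation.prompts[1])
```

The second prompt contains the first exchange, which is how the real chain lets the weather answer draw on the earlier conversation.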

Step 4: Set Prompt Template & AI Assistant

Next, pass the ai_prefix argument with the value “AI Assistant” to ConversationBufferMemory() so that the model's turns are stored under that label instead of the default “AI”. The prompt template must use the same label, so configure it first to give the model the structure of the input:

```python
from langchain.chains import ConversationChain
from langchain.prompts import PromptTemplate

template = """The following is an interaction between a machine and a human. The machine (AI) provides a lot of specific details from its context. If the machine does not know the answer, it says it does not know.

Current conversation:
{history}
Human: {input}
AI Assistant:"""

# Configure the prompt template that structures the conversation for the model
PROMPT = PromptTemplate(input_variables=["history", "input"], template=template)

# Define the conversation chain with a buffer memory that labels AI turns "AI Assistant"
conversation = ConversationChain(
    prompt=PROMPT,
    llm=llm,
    verbose=True,
    memory=ConversationBufferMemory(ai_prefix="AI Assistant"),
)
```

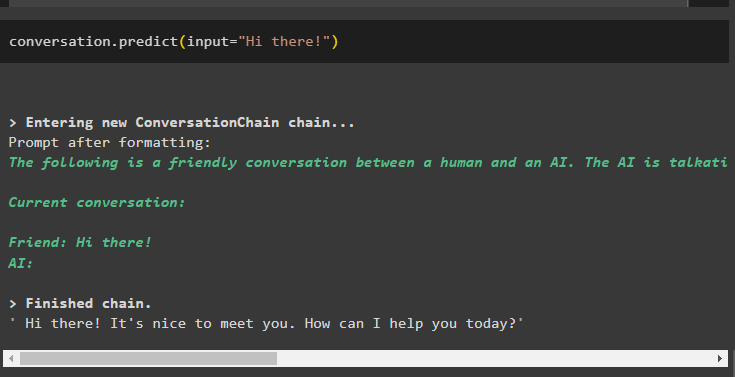
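Why must the template end with “AI Assistant:”? The memory writes earlier model turns under ai_prefix, and the template's last line cues the next one, so the two should match. The helper `check_template` below is a hypothetical plain-Python sanity check, not something LangChain provides.

```python
def check_template(template: str, human_prefix: str = "Human", ai_prefix: str = "AI") -> bool:
    """Hypothetical check: do the template's speaker labels match the memory's prefixes?"""
    lines = template.rstrip().splitlines()
    # The user's message should be introduced by the human prefix ...
    has_input_line = f"{human_prefix}: {{input}}" in lines
    # ... and the final line should cue the AI using the AI prefix
    ends_with_ai_label = lines[-1] == f"{ai_prefix}:"
    return has_input_line and ends_with_ai_label


step4_template = "Current conversation:\n{history}\nHuman: {input}\nAI Assistant:"
print(check_template(step4_template, ai_prefix="AI Assistant"))  # True
print(check_template(step4_template))  # False: default "AI" label does not match
```

With the default ai_prefix, the history would call past replies “AI” while the prompt asks for “AI Assistant”, giving the model two names for the same speaker.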

Now, call the predict() method on the conversation variable with a query in its input argument:

Then provide another prompt through the input argument; this time the chat stored in the memory is used as the observation:

The output displays the “Current conversation” section, which shows the history of the interaction between the human and the AI Assistant, followed by the answer to the latest prompt once the chain finishes.

Step 5: Configure Human Prefix

The last step in this guide is to assign the value “Friend” to the human_prefix argument of ConversationBufferMemory(). The prompt template is configured to match, with “Friend” asking the questions and “AI” responding:

```python
from langchain.chains import ConversationChain
from langchain.prompts import PromptTemplate

template = """This is an interaction between a machine and a human. The machine (AI) provides a lot of specific details using its context. If the machine does not know the answer, it says "I don't know".

Current conversation:
{history}
Friend: {input}
AI:"""

# Configure the prompt template that structures the conversation for the model
PROMPT = PromptTemplate(input_variables=["history", "input"], template=template)

# Define the conversation chain with a buffer memory that labels human turns "Friend"
conversation = ConversationChain(
    prompt=PROMPT,
    llm=llm,
    verbose=True,
    memory=ConversationBufferMemory(human_prefix="Friend"),
)
```

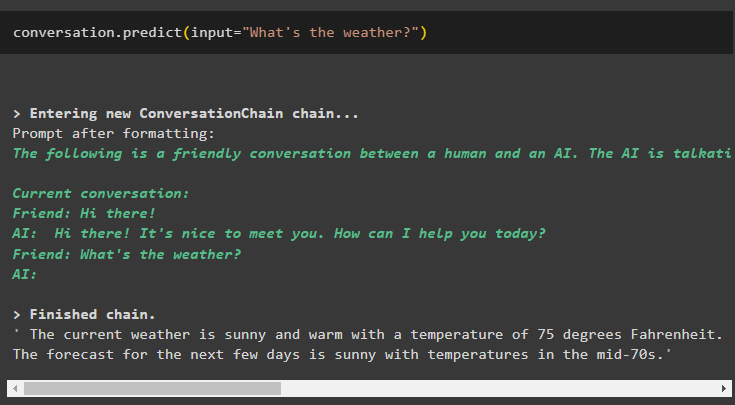

The Friend now sends a prompt through the input argument, and the AI answers the query:

Another input continues the conversation, and the exchange is stored in the conversational memory:
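The same mechanism applies to human_prefix. This sketch renders stored turns with “Friend” as the user label and fills a condensed, assumed version of the Step 5 template, so you can see roughly what the model receives on the second turn. The function name and the sample messages are made up for illustration.

```python
def render_history(turns, human_prefix="Human", ai_prefix="AI"):
    # Hypothetical helper: label each stored (user, ai) exchange with the configured names
    return "\n".join(
        f"{human_prefix}: {user}\n{ai_prefix}: {ai}" for user, ai in turns
    )


# Condensed, assumed version of the Step 5 template
TEMPLATE = "Current conversation:\n{history}\nFriend: {input}\nAI:"

turns = [("Hi, can you help me plan a trip?", "Of course! Where would you like to go?")]
history = render_history(turns, human_prefix="Friend")
prompt = TEMPLATE.format(history=history, input="Somewhere warm in winter.")
print(prompt)
```

Every human turn, past and present, carries the “Friend” label, so the stored history and the template stay consistent.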

That’s all about building a customized conversational memory in LangChain.

Conclusion

To build a customized conversational memory in LangChain, install the modules required to build chatbots or LLM applications. After that, set up the environment with your OpenAI API key and import the required classes, such as ConversationBufferMemory and ConversationChain. The ConversationBufferMemory passed to ConversationChain() can be customized with arguments like ai_prefix or human_prefix, and the prompt template should use the same speaker labels. This guide has elaborated on the process of building a customized conversational memory in LangChain.