Quick Outline

This post will demonstrate:

How to Add Multiple Memory Classes in LangChain

- Installing Frameworks

- Importing Multiple Memory Libraries

- Adding Multiple Memory Classes

- Testing the Chain

How to Add Multiple Memory Classes in LangChain?

LangChain offers multiple memory classes that can be attached to chatbots or models when building chains. Chains are central to a chat model because they link messages into a conversation and store them as observations. Several memory classes can be combined in a single chain, so the model receives the conversation history in more than one form, for example the raw recent messages alongside a running summary.
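To make the idea concrete, here is a minimal pure-Python sketch of two memories feeding one set of prompt variables. The classes ToyBufferMemory, ToyCountMemory, and ToyCombinedMemory are hypothetical stand-ins invented for this illustration; they are not LangChain classes and only imitate the general idea of a buffer memory, a summary-style memory, and a wrapper that merges them.

```python
from dataclasses import dataclass, field


@dataclass
class ToyBufferMemory:
    """Hypothetical stand-in: keeps every turn verbatim."""
    memory_key: str = "chat_history_lines"
    turns: list = field(default_factory=list)

    def load(self) -> dict:
        return {self.memory_key: "\n".join(self.turns)}

    def save(self, human: str, ai: str) -> None:
        self.turns.append(f"Human: {human}")
        self.turns.append(f"AI: {ai}")


@dataclass
class ToyCountMemory:
    """Hypothetical stand-in: keeps only a condensed view (a running tally)."""
    memory_key: str = "history"
    exchanges: int = 0

    def load(self) -> dict:
        return {self.memory_key: f"{self.exchanges} exchange(s) so far."}

    def save(self, human: str, ai: str) -> None:
        self.exchanges += 1


class ToyCombinedMemory:
    """Hypothetical wrapper: merges the variables of several memories."""

    def __init__(self, memories):
        keys = [m.memory_key for m in memories]
        # Both memories fill the same prompt, so their keys must not collide.
        if len(keys) != len(set(keys)):
            raise ValueError("each memory needs its own key")
        self.memories = memories

    def load(self) -> dict:
        merged = {}
        for m in self.memories:
            merged.update(m.load())
        return merged

    def save(self, human: str, ai: str) -> None:
        for m in self.memories:
            m.save(human, ai)


combined = ToyCombinedMemory([ToyBufferMemory(), ToyCountMemory()])
combined.save("Hi!", "Hello, how can I help?")
variables = combined.load()
print(variables)
```

Each memory contributes one key, and the merged dictionary is what a prompt template would be filled with. The unique-key check shows why the buffer memory in the steps below is given its own memory_key.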

To learn how to add multiple memory classes to the same chain using the LangChain framework, go through the listed steps:

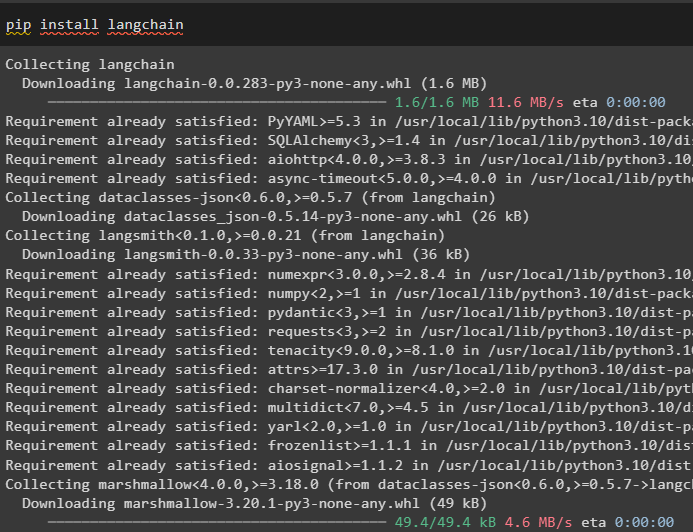

Step 1: Installing Frameworks

First, install the LangChain framework, which is used to configure chat models:

pip install langchain

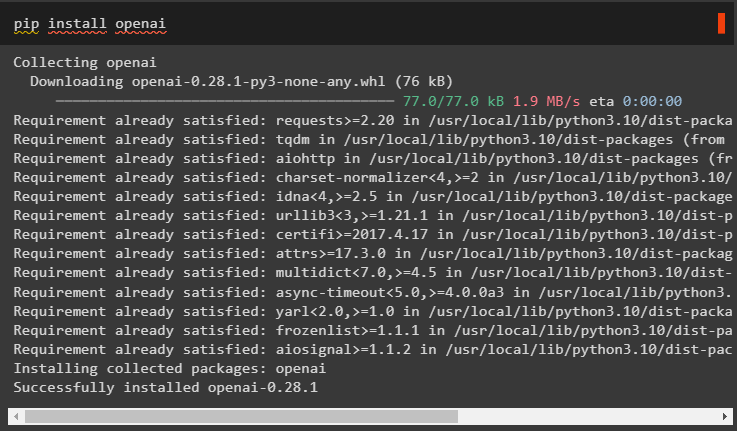

Install the OpenAI module using the “pip” command to get the libraries for working with Large Language Models (LLMs):

pip install openai

Import the “os” and “getpass” libraries to enter the API key from the OpenAI account. The os library gives access to the operating system's environment variables, and the getpass library prompts for the key without echoing it to the screen:

import os

import getpass

os.environ["OPENAI_API_KEY"] = getpass.getpass("OpenAI API Key:")
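As an optional variation, the key can be requested only when it is not already present in the environment, which avoids retyping it on every run. The helper name ensure_api_key and its parameters are assumptions made for this sketch, not part of LangChain or OpenAI.

```python
import getpass
import os


def ensure_api_key(var_name="OPENAI_API_KEY", prompt=getpass.getpass):
    """Return the key stored in the environment, prompting only if it is missing.

    `ensure_api_key` is a hypothetical helper for this post; `prompt` can be
    replaced by any function that takes a message and returns a string.
    """
    key = os.environ.get(var_name)
    if not key:
        key = prompt(f"{var_name}: ")
        os.environ[var_name] = key
    return key
```

Calling ensure_api_key() once before creating the LLM has the same effect as the assignment above when the variable is unset, and does nothing otherwise.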

Step 2: Importing Multiple Memory Libraries

The next step is to import the OpenAI, PromptTemplate, and ConversationChain classes from the LangChain framework. From the memory module, import the memory classes that will be given to the same chain together:

from langchain.llms import OpenAI

from langchain.prompts import PromptTemplate

from langchain.chains import ConversationChain

from langchain.memory import (

ConversationBufferMemory,

CombinedMemory,

ConversationSummaryMemory,

)

Step 3: Adding Multiple Memory Classes

Next, create the individual memory objects and wrap them in CombinedMemory so the chain can draw on both. Configure a prompt template whose variables match the memory keys, so the chat history reaches the LLM. Then pass the llm, prompt template, and memory to ConversationChain() to configure the structure of the conversation:

conv_memory = ConversationBufferMemory(

memory_key="chat_history_lines", input_key="input"

)

summary_memory = ConversationSummaryMemory(llm=OpenAI(), input_key="input")

memory = CombinedMemory(memories=[conv_memory, summary_memory])

_DEFAULT_TEMPLATE = """The following is an interaction between a machine and a human. The machine (AI) provides a lot of specific details from its context. If the machine does not know the answer, it says it does not know.

Summary of conversation:

{history}

Current conversation:

{chat_history_lines}

Human: {input}

AI:"""

PROMPT = PromptTemplate(

input_variables=["history", "input", "chat_history_lines"],

template=_DEFAULT_TEMPLATE,

)

llm = OpenAI(temperature=0)

conversation = ConversationChain(llm=llm, verbose=True, memory=memory, prompt=PROMPT)
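To see how the three placeholders in the template get filled, the following sketch renders a shortened copy of the template with Python's built-in str.format, which behaves roughly like the default formatting of a prompt template. The sample values in memory_variables and the question text are made up for illustration; in the real chain they come from the two memory objects and the user input.

```python
from string import Formatter

# Shortened copy of the template from this step (instruction text omitted).
TEMPLATE = """Summary of conversation:
{history}
Current conversation:
{chat_history_lines}
Human: {input}
AI:"""

# Placeholders found in the template; these should match input_variables.
placeholders = {name for _, name, _, _ in Formatter().parse(TEMPLATE) if name}

# Hypothetical values standing in for what the memories would supply.
memory_variables = {
    "history": "The human greeted the AI.",
    "chat_history_lines": "Human: Hi!\nAI: Hello!",
}

rendered = TEMPLATE.format(input="What did I just say?", **memory_variables)
print(rendered)
```

If a placeholder in the template has no matching key, str.format raises a KeyError, which is a quick way to catch a mismatch between the template and the memory keys.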

Step 4: Testing the Chain

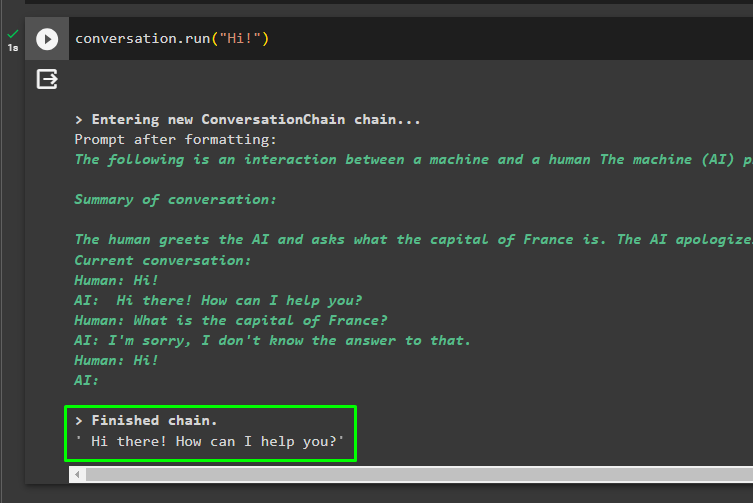

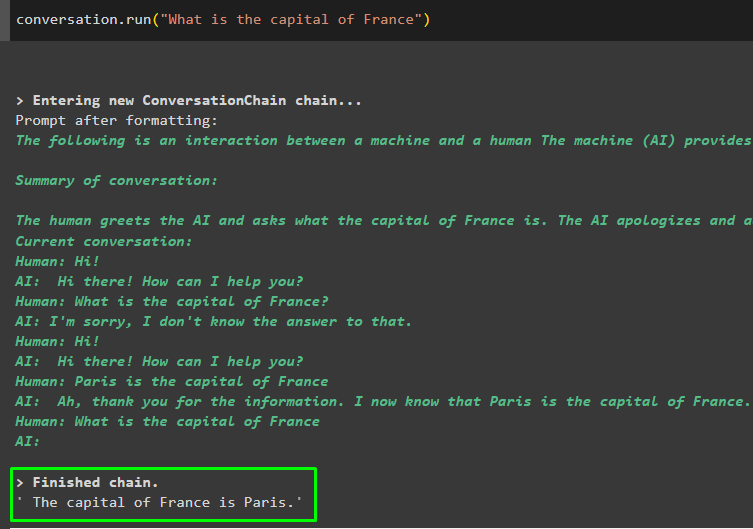

Once the template is set and the conversation structure is configured, test the conversation by calling conversation.run() with the input text as its argument:

Running the chain starts the conversation with the language model and returns its reply to the prompt:

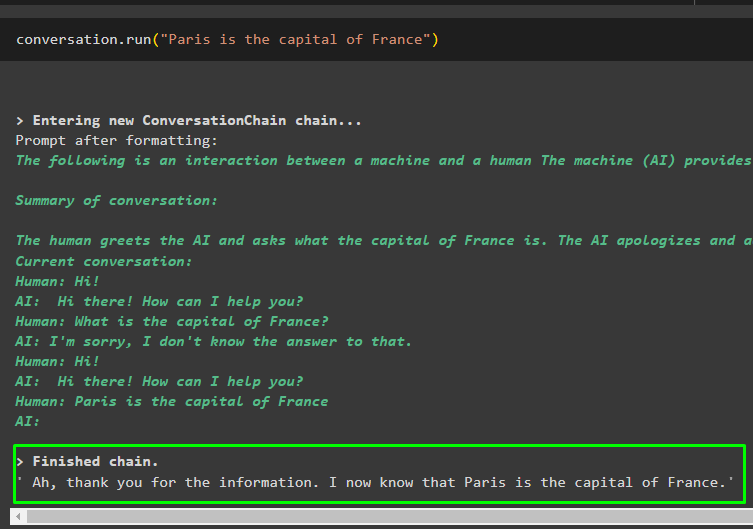

Continue the conversation by giving the model some information about the real world that it doesn’t already know:

The model itself is not retrained. Instead, the chain stores the information in its memory, so it can be supplied to the model later when a related question is asked:

Now, ask a question related to the information stored in the memory in the previous step:

The chain retrieves the information from its memory, and the model returns the correct answer to the question:

That’s all about adding multiple memory classes in the same chain using the LangChain framework.

Conclusion

To add multiple memory classes to the same chain in LangChain, install the required modules and set up the environment with the API key. Next, import the memory classes, wrap them in CombinedMemory, and configure the conversation chain with the LLM, prompt template, and combined memory. Once the configuration is complete, run the chain and test the memory by asking questions about earlier information. This guide has walked through the process of adding multiple memory classes to the same chain in LangChain.