This guide will illustrate the process of adding a memory state to chains using LangChain.

How to Add Memory State in Chains Using LangChain?

A memory state lets a chain remember recent exchanges, such as earlier questions and the model's answers, and use them as context when it produces its next output. To add a memory state to chains using the LangChain framework, simply go through this guide:

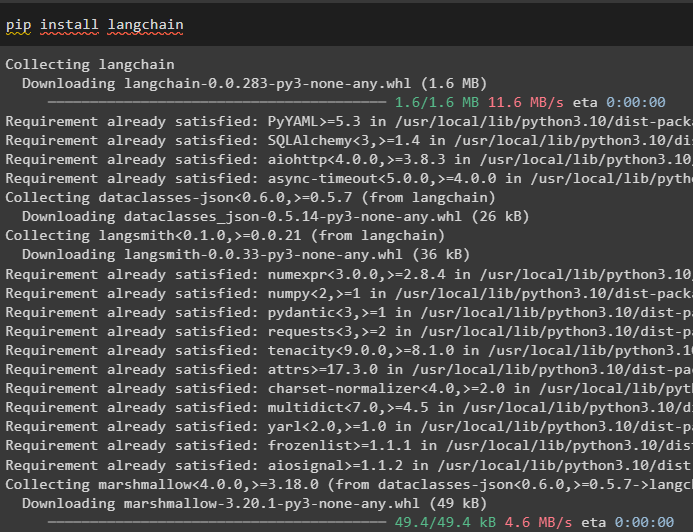
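To make the idea concrete, here is a minimal pure-Python sketch of what a buffer memory does: it records each exchange verbatim and replays the transcript as context. The `SimpleBufferMemory` class and its method names are hypothetical and only loosely modeled on LangChain's ConversationBufferMemory, and the reply text is a placeholder, not real model output.

```python
from dataclasses import dataclass, field


@dataclass
class SimpleBufferMemory:
    """Hypothetical stand-in for a conversation buffer: it keeps every turn verbatim."""

    human_prefix: str = "Human"
    ai_prefix: str = "AI"
    turns: list[tuple[str, str]] = field(default_factory=list)

    def save_turn(self, user_input: str, model_output: str) -> None:
        # Append one exchange; nothing is summarized or dropped.
        self.turns.append((user_input, model_output))

    def history(self) -> str:
        # Render the stored exchanges as a plain-text transcript.
        lines = []
        for user_input, model_output in self.turns:
            lines.append(f"{self.human_prefix}: {user_input}")
            lines.append(f"{self.ai_prefix}: {model_output}")
        return "\n".join(lines)


memory = SimpleBufferMemory()
# Placeholder reply standing in for the model's answer
memory.save_turn("briefly give the 3 colors in rainbow", "Red, orange, yellow.")
print(memory.history())
```

Because the whole transcript is kept, the context grows with every turn; that simplicity is the trade-off a plain buffer makes.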

Step 1: Install Modules

First, install the LangChain framework and its dependencies using the pip command:

pip install langchain

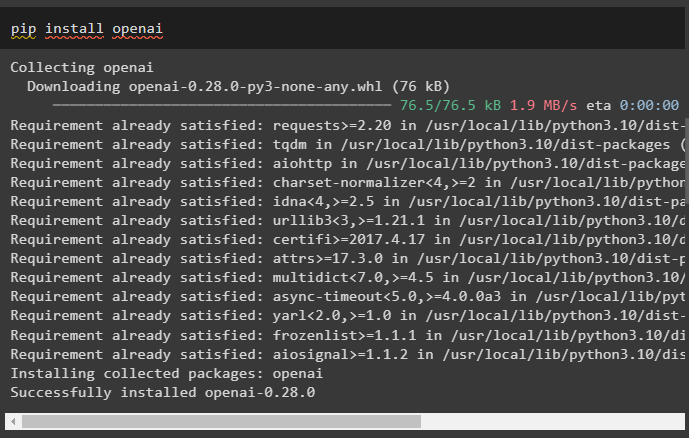

Install the OpenAI module as well, since the chat model used in the chains relies on its libraries:

pip install openai

Next, get an API key from your OpenAI account and store it in the environment so the chains can access it:

import os
import getpass

os.environ["OPENAI_API_KEY"] = getpass.getpass("OpenAI API Key:")

Complete this step before building the chains, because the OpenAI integration reads the key from the OPENAI_API_KEY environment variable.
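When a notebook is re-run, prompting for the key every time gets tedious. The sketch below assumes the key should only be requested when the variable is not already set, so it checks the environment first. `ensure_api_key` is a hypothetical helper, not part of LangChain; the OpenAI integrations simply read `OPENAI_API_KEY` from the environment when no key is passed explicitly.

```python
import getpass
import os
from typing import Callable


def ensure_api_key(
    var_name: str = "OPENAI_API_KEY",
    prompt: Callable[[str], str] = getpass.getpass,
) -> str:
    """Return the key from the environment, prompting only if it is missing."""
    key = os.environ.get(var_name)
    if not key:
        # Ask once and store the answer so later clients can read it from the environment.
        key = prompt(f"{var_name}: ")
        os.environ[var_name] = key
    return key
```

Passing a different `prompt` callable, such as a lambda, also makes the helper easy to test without typing a key.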

Step 2: Import Libraries

After setting up the environment, import the libraries needed for the chains and the memory state, such as LLMChain, ConversationChain, ConversationBufferMemory, and PromptTemplate:

from langchain.memory import ConversationBufferMemory

from langchain.chat_models import ChatOpenAI

from langchain.chains.llm import LLMChain

from langchain.chains import ConversationChain

from langchain.prompts import PromptTemplate

Step 3: Building Chains

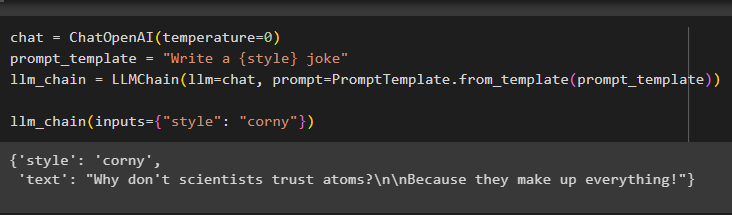

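Now, create the chat model with the ChatOpenAI() method, build an LLMChain from it and a prompt template, and call the chain with a value for the template's placeholder:

# Chat model used by both chains in this guide
chat = ChatOpenAI()

prompt_template = "Write a {style} joke"

llm_chain = LLMChain(llm=chat, prompt=PromptTemplate.from_template(prompt_template))

# The "style" key fills the {style} placeholder in the template
llm_chain(inputs={"style": "corny"})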

The chain returns a joke in the requested style, as shown in the screenshot below:

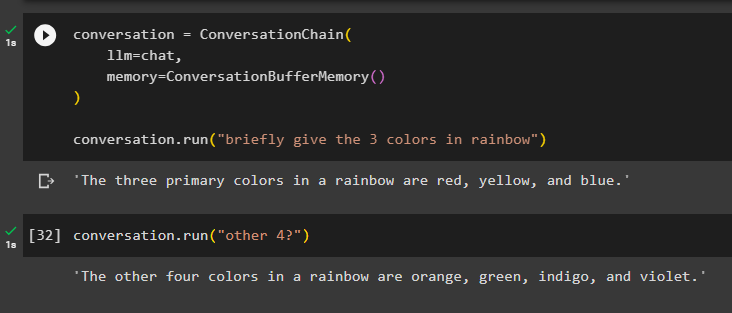
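`PromptTemplate.from_template` infers the input variables from the `{...}` placeholders in the string (here, `style`), and the chain fills them from the `inputs` dictionary. The stdlib-only sketch below imitates that substitution step; `template_variables` and `fill_template` are hypothetical helpers for illustration, not LangChain functions.

```python
from string import Formatter


def template_variables(template: str) -> list[str]:
    # Collect the names inside {braces}, skipping literal text segments.
    return [name for _, name, _, _ in Formatter().parse(template) if name]


def fill_template(template: str, **values: str) -> str:
    # Fail early if a placeholder has no value, instead of sending a half-filled prompt.
    missing = [name for name in template_variables(template) if name not in values]
    if missing:
        raise KeyError(f"missing template variables: {missing}")
    return template.format(**values)


prompt_template = "Write a {style} joke"
print(template_variables(prompt_template))  # ['style']
print(fill_template(prompt_template, style="corny"))  # Write a corny joke
```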

Step 4: Adding Memory State

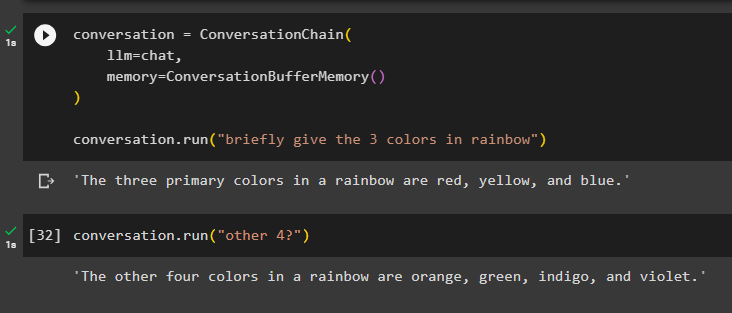

Here we add the memory state to a ConversationChain by passing it a ConversationBufferMemory() object, then run the chain to get 3 colors of the rainbow:

conversation = ConversationChain(

llm=chat,

# Stores every exchange so later calls can refer back to it
memory=ConversationBufferMemory()

)

conversation.run("briefly give the 3 colors in rainbow")

The model has returned only three colors of the rainbow, and this exchange is now stored in the chain's memory.

Next, run the chain with an ambiguous command, “other 4?”, so the model has to get the context from memory to return the remaining rainbow colors:

conversation.run("other 4?")

The model did exactly that: it understood the context and returned the remaining four colors of the rainbow.
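The follow-up works because the memory's transcript is inserted into the prompt before the new question. The sketch below imitates that flow with a stub function in place of ChatOpenAI, so it runs offline. `SketchConversation` and `fake_llm` are hypothetical, the canned replies are placeholders rather than real model output, and LangChain's actual ConversationChain prompt also includes an instruction preamble that is omitted here.

```python
from typing import Callable


class SketchConversation:
    """Hypothetical chain that prepends the stored transcript to every new input."""

    def __init__(self, llm: Callable[[str], str]) -> None:
        self.llm = llm
        self.turns: list[tuple[str, str]] = []

    def build_prompt(self, user_input: str) -> str:
        # Earlier exchanges come first, so the model can resolve follow-ups like "other 4?".
        history = "\n".join(f"Human: {h}\nAI: {a}" for h, a in self.turns)
        prefix = f"{history}\n" if history else ""
        return f"{prefix}Human: {user_input}\nAI:"

    def run(self, user_input: str) -> str:
        prompt = self.build_prompt(user_input)
        reply = self.llm(prompt)
        # Save the exchange so the next call sees it.
        self.turns.append((user_input, reply))
        return reply


sent_prompts: list[str] = []


def fake_llm(prompt: str) -> str:
    # Stand-in for a real model: records the prompt and returns a canned reply.
    sent_prompts.append(prompt)
    return "Red, orange, yellow." if len(sent_prompts) == 1 else "Green, blue, indigo, violet."


conversation = SketchConversation(llm=fake_llm)
conversation.run("briefly give the 3 colors in rainbow")
conversation.run("other 4?")
print(sent_prompts[1])
```

Printing `sent_prompts[1]` shows that the second request already contains the first question and answer, which is what lets the model interpret the ambiguous follow-up.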

That is all about adding a memory state in chains using LangChain.

Conclusion

To add memory to chains using the LangChain framework, first install the required modules and set up the environment with an OpenAI API key. Next, import the libraries needed to build the chains, then create a chain with a memory state such as ConversationBufferMemory. Finally, give the chain a command, followed by a second command that depends on the first; the chain uses its stored context to give the correct reply. This post has elaborated on each of these steps for adding a memory state in chains using the LangChain framework.