Quick Outline

This post will demonstrate the following:

- How to Add Memory to Both an Agent and its Tools in LangChain

- Step 1: Installing Frameworks

- Step 2: Setting up Environments

- Step 3: Importing Libraries

- Step 4: Adding ReadOnlyMemory

- Step 5: Setting up Tools

- Step 6: Building the Agent

- Method 1: Using ReadOnlyMemory

- Method 2: Using the Same Memory for Both Agent and Tools

- Conclusion

How to Add Memory to Both an Agent and its Tools in LangChain?

Adding memory to an agent and its tools lets them use the chat history of the conversation. With memory, the agent can resolve follow-up questions and decide which tool to use and when. The recommended approach is to give the agent the full conversation memory and give its tools a read-only view of it (“ReadOnlySharedMemory”), so the tools can read the history but cannot modify it. To add memory to both an agent and its tools in LangChain, go through the following steps:

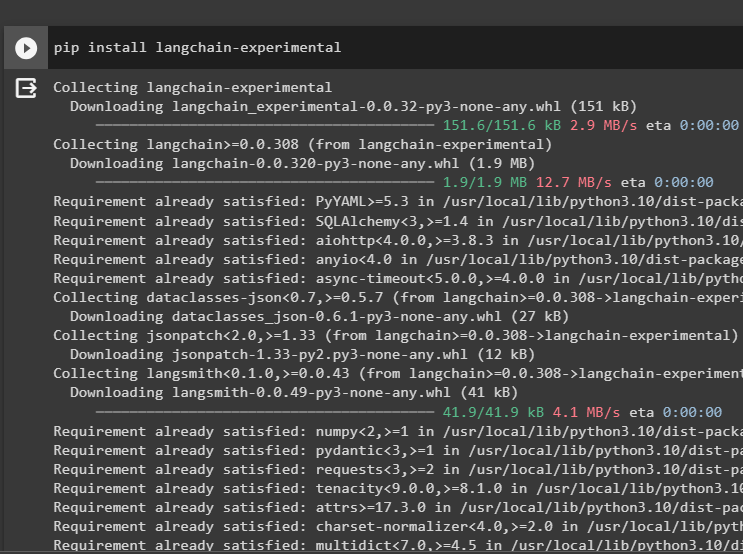

Step 1: Installing Frameworks

First of all, install the langchain-experimental module with “pip install langchain-experimental”. It provides LangChain's experimental components, which are mostly used for experiments and tests:

Next, install the google-search-results module (the SerpAPI client) together with the openai package with “pip install google-search-results openai”, so the agent can fetch the most relevant answers from the internet:

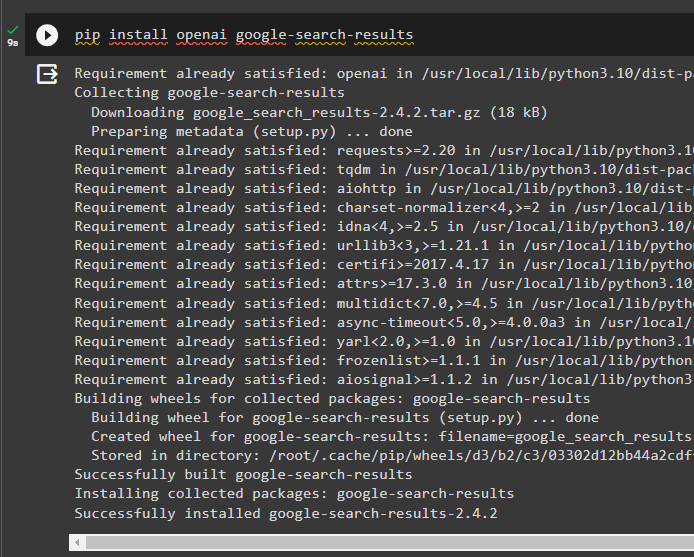

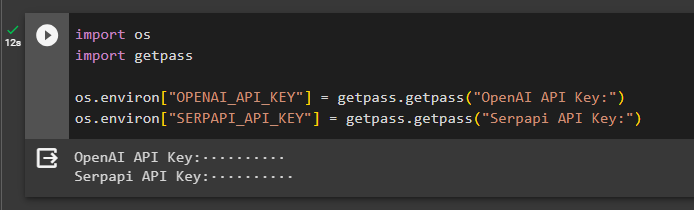

Step 2: Setting up Environments

To build a model that fetches answers from the internet, set up the environment with the OpenAI and SerpAPI keys:

import os
import getpass

#read the keys without echoing them to the screen
os.environ["OPENAI_API_KEY"] = getpass.getpass("OpenAI API Key:")
os.environ["SERPAPI_API_KEY"] = getpass.getpass("Serpapi API Key:")
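When the notebook is re-run, the two lines above ask for the keys again. A minimal sketch that prompts only when a variable is still missing could look like this; “ensure_env” is a hypothetical helper name, not part of LangChain:

```python
import getpass
import os


def ensure_env(name, prompt=None, ask=getpass.getpass):
    """Set os.environ[name] from a hidden prompt only if it is not already set.

    ensure_env is a hypothetical helper for this post, not a LangChain API.
    """
    if not os.environ.get(name):
        # ask() defaults to getpass.getpass, which hides the typed key
        os.environ[name] = ask(prompt or f"{name}:")
    return os.environ[name]
```

In the notebook, call ensure_env("OPENAI_API_KEY", "OpenAI API Key:") and ensure_env("SERPAPI_API_KEY", "Serpapi API Key:") in place of the two getpass lines; the “ask” parameter only exists so the prompt can be swapped out, for example in tests.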

Step 3: Importing Libraries

After setting up the environment, import the libraries needed to build the agent, its tools, and the memory shared between them. The following code imports from the agents, memory, llms, chains, prompts, and utilities modules:

#get the agent, tool, and executor classes
from langchain.agents import ZeroShotAgent, Tool, AgentExecutor
from langchain.memory import ConversationBufferMemory, ReadOnlySharedMemory
from langchain.llms import OpenAI
#get the library for building the chain using LangChain
from langchain.chains import LLMChain
from langchain.prompts import PromptTemplate
#get the library for getting the information from the internet
from langchain.utilities import SerpAPIWrapper

Step 4: Adding ReadOnlyMemory

Write the prompt template for the summary chain, which the Summary tool will run. Then create a “ConversationBufferMemory()” to store the chat history and wrap it in a “ReadOnlySharedMemory”, so the summary chain can read the history without writing to it:

#set the structure for extracting the precise and easy summary
template = """{chat_history}

Summarize the chat for {input}:
"""
prompt = PromptTemplate(input_variables=["input", "chat_history"], template=template)
memory = ConversationBufferMemory(memory_key="chat_history")
#read-only view of the same memory for the tool
readonlymemory = ReadOnlySharedMemory(memory=memory)
#summary chain to integrate all the components for getting the summary of the conversation
summary_chain = LLMChain(
    llm=OpenAI(),
    prompt=prompt,
    verbose=True,
    memory=readonlymemory,
)
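To see why the summary chain gets a read-only view, here is a toy, standard-library model of the two classes. “BufferMemory” and “ReadOnlyView” are hypothetical stand-ins, not LangChain's implementation; they only mirror the behavior described above: the buffer stores each turn as “Human:”/“AI:” lines under the “chat_history” key, and the read-only view returns the same history but ignores writes.

```python
class BufferMemory:
    """Toy stand-in for ConversationBufferMemory (hypothetical)."""

    def __init__(self, memory_key="chat_history"):
        self.memory_key = memory_key
        self.lines = []

    @property
    def buffer(self):
        return "\n".join(self.lines)

    def load_memory_variables(self, inputs):
        # What a chain sees when it fills {chat_history} in its prompt
        return {self.memory_key: self.buffer}

    def save_context(self, inputs, outputs):
        # Called after a chain run to record the exchange
        self.lines.append(f"Human: {inputs['input']}")
        self.lines.append(f"AI: {outputs['output']}")


class ReadOnlyView:
    """Toy stand-in for ReadOnlySharedMemory: reads through, never writes."""

    def __init__(self, memory):
        self.memory = memory

    def load_memory_variables(self, inputs):
        return self.memory.load_memory_variables(inputs)

    def save_context(self, inputs, outputs):
        pass  # writes through the read-only view are ignored


memory = BufferMemory()
readonly = ReadOnlyView(memory)
# The agent executor writes the user's turn to the real memory
memory.save_context({"input": "What is LangChain?"}, {"output": "(answer from Search)"})
# The summary tool tries to write, but the view drops it
readonly.save_context({"input": "a 5-year-old"}, {"output": "(summary)"})
print(readonly.load_memory_variables({})["chat_history"])
```

The tool still sees everything the agent stored, while its own calls leave the history untouched.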

Step 5: Setting up Tools

Now, set up the Search and Summary tools: Search fetches answers from the internet through SerpAPI, and Summary runs the summary chain over the chat history:

#SerpAPI wrapper used by the Search tool
search = SerpAPIWrapper()
tools = [
    Tool(
        name="Search",
        func=search.run,
        description="proper responses to the targeted queries about the recent events",
    ),
    Tool(
        name="Summary",
        func=summary_chain.run,
        description="helpful to summarize the chat and the input to this tool should be a string, representing who will read this summary",
    ),
]
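Each Tool bundles a name, a callable, and a description; the agent picks a tool by name and passes it a single string. A toy sketch of that dispatch, with hypothetical “SimpleTool” and “call_tool” names and stub lambdas in place of search.run and summary_chain.run:

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class SimpleTool:
    """Hypothetical stand-in for a LangChain Tool: name, function, description."""
    name: str
    func: Callable[[str], str]
    description: str


def call_tool(tools: List[SimpleTool], name: str, tool_input: str) -> str:
    """Look up the tool the agent chose by name and run it on one string."""
    by_name = {tool.name: tool for tool in tools}
    if name not in by_name:
        raise ValueError(f"Unknown tool {name!r}; choose from {sorted(by_name)}")
    return by_name[name].func(tool_input)


tools = [
    SimpleTool("Search", lambda q: f"search results for {q}",
               "answers questions about recent events"),
    SimpleTool("Summary", lambda reader: f"summary written for {reader}",
               "summarizes the chat; the input names the reader"),
]
print(call_tool(tools, "Summary", "a 5-year-old"))
```

This is why the description matters: it is the only information the agent has when choosing between tools.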

Step 6: Building the Agent

Once the tools are ready, configure the agent that decides which tool to call. The “prefix” is the text placed before the list of tool descriptions in the agent's prompt, and the “suffix” comes after it; here the suffix carries the chat history, the user's question, and the agent_scratchpad, where the agent records its intermediate steps:

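#explain the steps for the agent to use the tools to extract information for the chat
prefix = """Have a conversation with a human, answering the queries in the best possible way by accessing the following tools:"""
#structure for the agent to start using the tools while using the memory
suffix = """Begin!

{chat_history}
Question: {input}
{agent_scratchpad}"""
prompt = ZeroShotAgent.create_prompt(
    #configure prompt templates to understand the context of the question
    tools,
    prefix=prefix,
    suffix=suffix,
    input_variables=["input", "chat_history", "agent_scratchpad"],
)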
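Roughly, create_prompt() joins the prefix, one “name: description” line per tool, the format instructions listing the tool names, and the suffix into a single template. The sketch below approximates that assembly; “build_agent_prompt” is a hypothetical name, and the one-line format instruction is a shortened stand-in for LangChain's own, longer instructions:

```python
def build_agent_prompt(tools, prefix, suffix, format_instructions):
    """Approximate the agent prompt layout: prefix, tools, instructions, suffix."""
    tool_strings = "\n".join(f"{name}: {description}" for name, description in tools)
    tool_names = ", ".join(name for name, _ in tools)
    # format_instructions must contain only the {tool_names} placeholder
    instructions = format_instructions.format(tool_names=tool_names)
    return "\n\n".join([prefix, tool_strings, instructions, suffix])


tools = [("Search", "answers questions about recent events"),
         ("Summary", "summarizes the chat")]
prefix = ("Have a conversation with a human, answering the queries in the best "
          "possible way by accessing the following tools:")
suffix = "Begin!\n\n{chat_history}\nQuestion: {input}\n{agent_scratchpad}"
template = build_agent_prompt(
    tools, prefix, suffix, "Action: the action to take, one of [{tool_names}]")

# At run time the memory fills {chat_history}; the executor fills the rest
filled = template.format(chat_history="Human: hi\nAI: hello",
                         input="Who won?", agent_scratchpad="")
print(filled)
```

The placeholders in the suffix are why “input_variables” lists input, chat_history, and agent_scratchpad.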

Method 1: Using ReadOnlyMemory

With the agent prompt ready, build and run the chain with the read-only memory attached to the Summary tool. This is the recommended way, and the process is as follows:

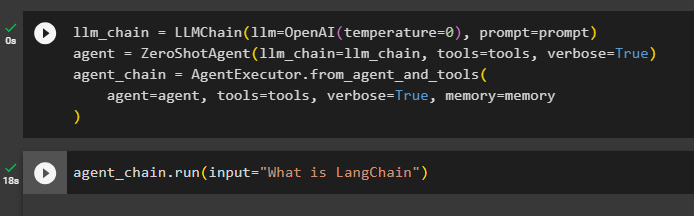

Step 1: Building the Chain

The first step in this method is to build the chain and the executor for the “ZeroShotAgent()”. The “LLMChain()” combines the language model (llm) with the agent prompt (prompt). The agent takes the llm_chain, tools, and verbose arguments, and “AgentExecutor.from_agent_and_tools()” wraps it into agent_chain, which runs the agent and its tools and holds the full conversation memory:

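#connect the language model with the agent prompt
llm_chain = LLMChain(llm=OpenAI(temperature=0), prompt=prompt)
agent = ZeroShotAgent(llm_chain=llm_chain, tools=tools, verbose=True)
#the executor gets the writable memory, so it records every question and answer
agent_chain = AgentExecutor.from_agent_and_tools(
    agent=agent, tools=tools, verbose=True, memory=memory
)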

Step 2: Testing the Chain

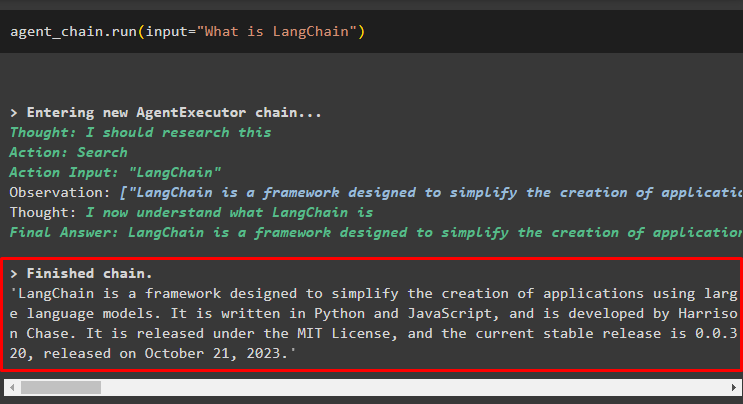

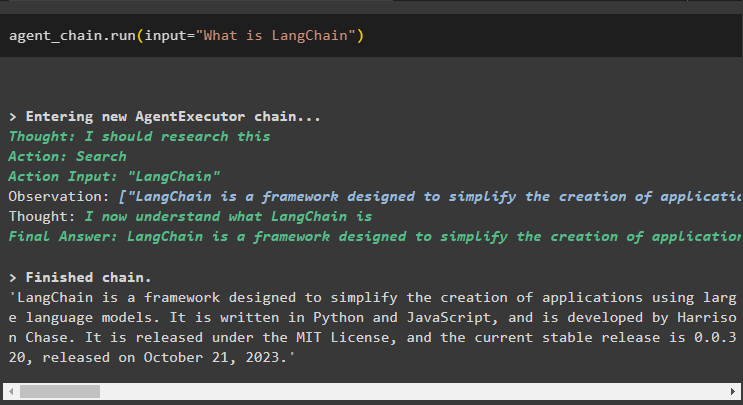

Call agent_chain with the run() method to ask a question that requires searching the internet:

The agent has extracted the answer from the internet using the Search tool:

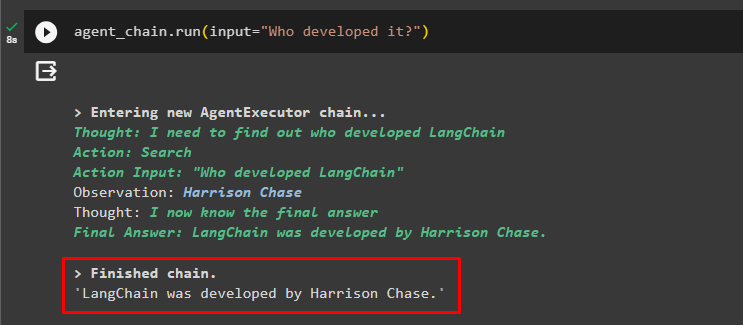

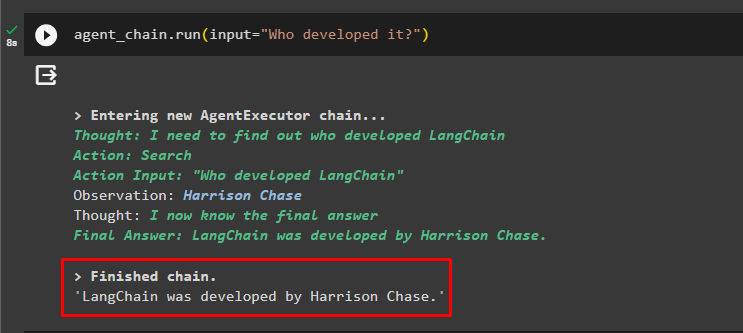

Ask an ambiguous follow-up question to test the memory attached to the agent:

The agent has used the previous chat to understand the context of the question and fetched the answer, as displayed in the following screenshot:

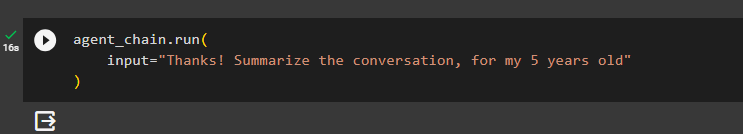

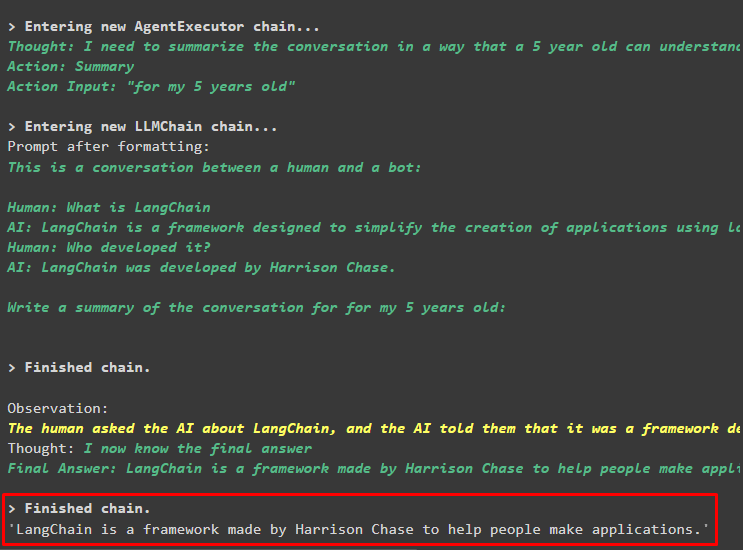

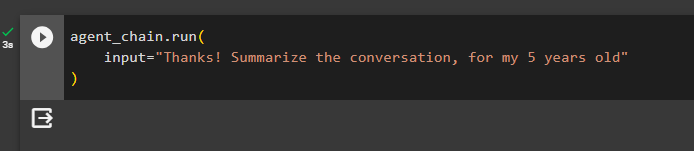

Next, ask the agent for a summary. It calls the Summary tool (summary_chain), which reads the previous answers from the chat history through the read-only memory:

agent_chain.run(
    input="Thanks! Summarize the conversation, for my 5 years old"
)

Output

The summary of the earlier questions, written for a 5-year-old, is displayed in the following screenshot:

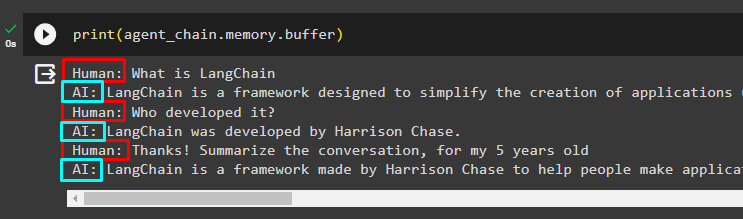

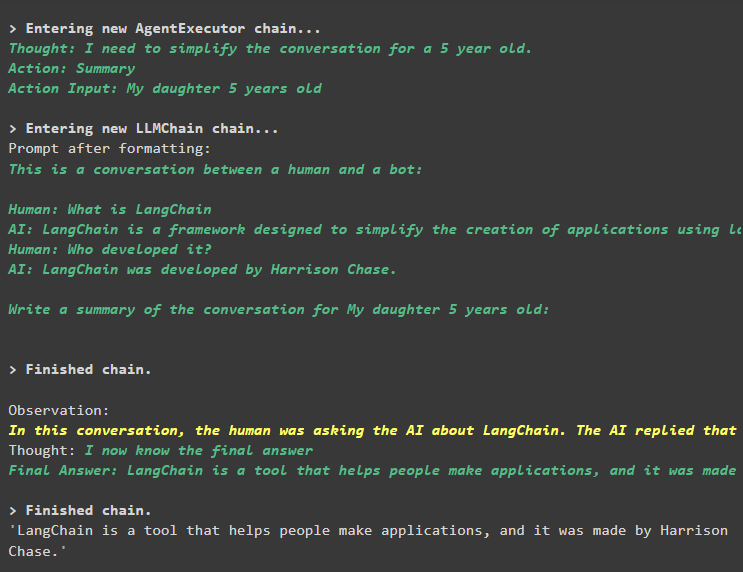

Step 3: Testing the Memory

Print the memory buffer to see the chats stored in it by using the following code:

print(agent_chain.memory.buffer)

The chats are displayed in their original order, without any modification, in the following snippet:

Method 2: Using the Same Memory for Both Agent and Tools

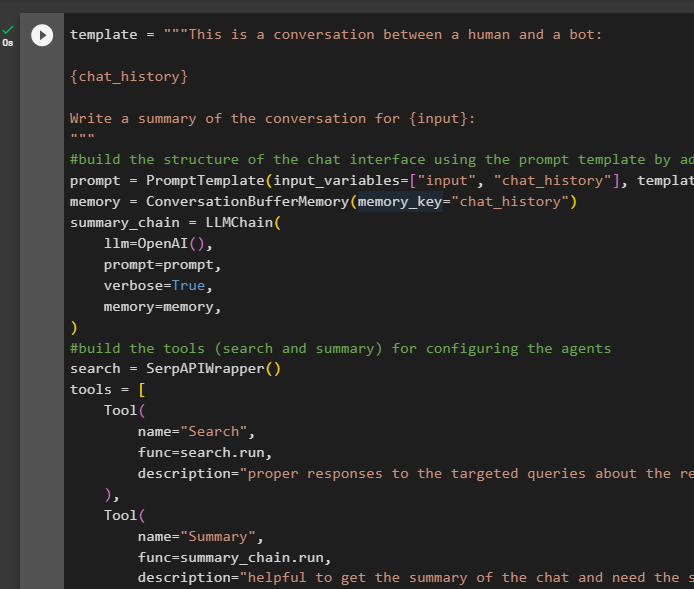

The second method, which is not recommended, passes the same buffer memory to both the agent and its tools. The tools can then write to the chat history, which can mislead the agent in longer conversations:

Step 1: Building the Chain

Reuse the complete code from Method 1 to build the tools and chains for the agent, with one change: the summary chain receives the ConversationBufferMemory itself instead of a ReadOnlySharedMemory wrapper:

template = """{chat_history}

Write a summary of the conversation for {input}:
"""
#build the structure of the chat interface using the prompt template by adding the memory with the chain
prompt = PromptTemplate(input_variables=["input", "chat_history"], template=template)
memory = ConversationBufferMemory(memory_key="chat_history")
#the summary chain now gets the writable memory directly (no ReadOnlySharedMemory)
summary_chain = LLMChain(
    llm=OpenAI(),
    prompt=prompt,
    verbose=True,
    memory=memory,
)

#build the tools (search and summary) for configuring the agents
search = SerpAPIWrapper()
tools = [
    Tool(
        name="Search",
        func=search.run,
        description="proper responses to the targeted queries about the recent events",
    ),
    Tool(
        name="Summary",
        func=summary_chain.run,
        description="helpful to get the summary of the chat and need the string input to this tool representing who will read this summary",
    ),
]

#explain the steps for the agent to use the tools to extract information for the chat
prefix = """Have a conversation with a human, answering the queries in the best possible way by accessing the following tools:"""
#structure for the agent to start using the tools while using the memory
suffix = """Begin!

{chat_history}
Question: {input}
{agent_scratchpad}"""
prompt = ZeroShotAgent.create_prompt(
    #configure prompt templates to understand the context of the question
    tools,
    prefix=prefix,
    suffix=suffix,
    input_variables=["input", "chat_history", "agent_scratchpad"],
)

#integrate all the components while building the agent executor
llm_chain = LLMChain(llm=OpenAI(temperature=0), prompt=prompt)
agent = ZeroShotAgent(llm_chain=llm_chain, tools=tools, verbose=True)
agent_chain = AgentExecutor.from_agent_and_tools(
    agent=agent, tools=tools, verbose=True, memory=memory
)

Step 2: Testing the Chain

Run the following code:

The answer is successfully displayed and stored in the memory:

Ask a follow-up question without giving much context:

The agent uses the memory to rephrase the question with the missing context and then prints the answer:

Get the summary of the chat using the memory attached to the agent:

agent_chain.run(
    input="Thanks! Summarize the conversation, for my 5 years old"
)

Output

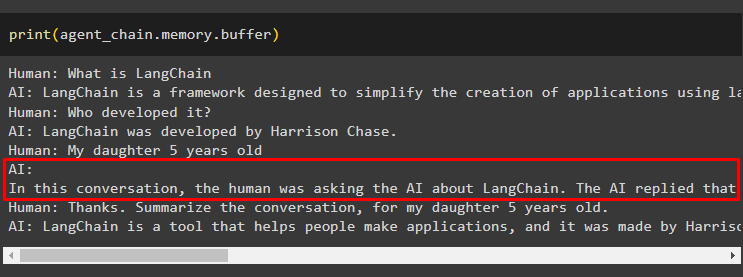

The summary has been generated successfully. So far the results look the same as in Method 1; the difference shows up in the next step:

Step 3: Testing the Memory

Extract the chat messages from the memory using the following code:

print(agent_chain.memory.buffer)

The history now contains an extra exchange that the user never typed. Because summary_chain holds the same writable memory as the agent, it saves its own input (the string the agent passed to the Summary tool) and its output as a new Human/AI turn. Later questions then use that extra turn as part of the conversation's context:
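The effect can be reproduced without any API calls. In this toy sketch, the hypothetical “BufferMemory” and “ReadOnlyView” classes stand in for LangChain's memory classes: during one user turn, the Summary tool's chain first saves its own exchange to whatever memory it holds, and the executor then saves the user's actual turn.

```python
class BufferMemory:
    """Toy stand-in for ConversationBufferMemory (hypothetical)."""

    def __init__(self):
        self.lines = []

    def save_context(self, user_input, output):
        self.lines += [f"Human: {user_input}", f"AI: {output}"]


class ReadOnlyView:
    """Toy stand-in for ReadOnlySharedMemory: ignores writes."""

    def __init__(self, memory):
        self.memory = memory

    def save_context(self, user_input, output):
        pass


def run_conversation(tool_memory, agent_memory):
    """Simulate one user turn in which the agent calls the Summary tool."""
    # The tool chain records the input the agent wrote for it...
    tool_memory.save_context("a 5-year-old", "(summary)")
    # ...then the executor records the user's actual question and answer
    agent_memory.save_context("Thanks! Summarize the conversation", "(summary)")
    return agent_memory.lines


shared = BufferMemory()
polluted = run_conversation(shared, shared)  # Method 2: same writable memory
separate = BufferMemory()
clean = run_conversation(ReadOnlyView(separate), separate)  # Method 1
print("\n".join(polluted))
print("---")
print("\n".join(clean))
```

With shared memory the history gains a “Human: a 5-year-old” turn the user never asked; with the read-only view only the real exchange is stored.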

That’s all for now.

Conclusion

To add memory to both an agent and its tools in LangChain, install the required modules and import the libraries. Then build the conversation memory, the language model, the tools, and the agent. The recommended method is to give the agent executor the ConversationBufferMemory and give its tools a ReadOnlySharedMemory view of it, so the tools can read the chat history but not change it. The same writable memory can also be shared by both the agent and its tools, but then the tools write their own inputs and outputs into the history, which can confuse the agent in later turns.