Quick Outline

This post will demonstrate the following:

- How to Access the Intermediate Steps of an Agent in LangChain

- Installing Frameworks

- Setting OpenAI Environment

- Importing Libraries

- Building LLM and Agent

- Using the Agent

- Method 1: Default Return Type to Access the Intermediate Steps

- Method 2: Using “dumps” to Access the Intermediate Steps

- Conclusion

How to Access the Intermediate Steps of an Agent in LangChain?

To build an agent in LangChain, the user configures its tools and the prompt template that structures how the agent reasons through a task. The agent then works through intermediate steps such as thoughts, actions, and observations on its way to the final answer. To learn how to access the intermediate steps of an agent in LangChain, follow the listed steps:

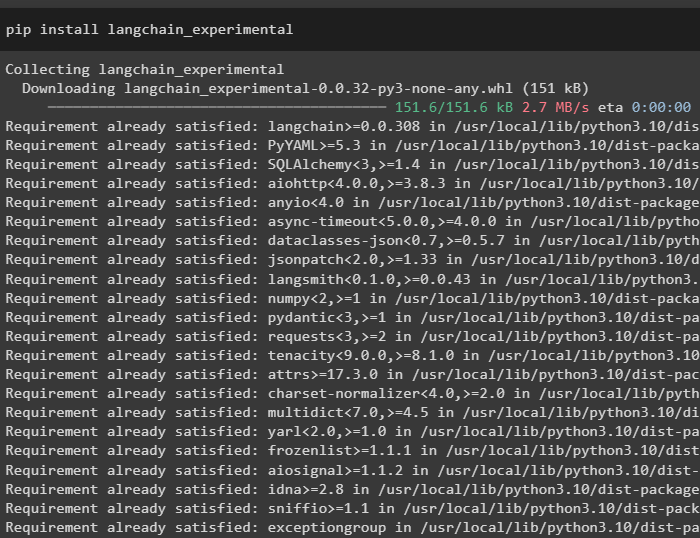

Step 1: Installing Frameworks

First, install LangChain and its dependencies by executing the following command in a Python notebook:

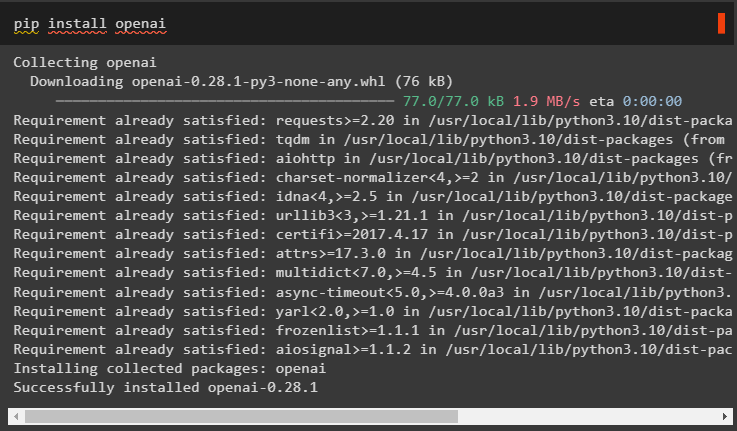

Next, install the OpenAI module with the pip command. It is needed to build the language model:

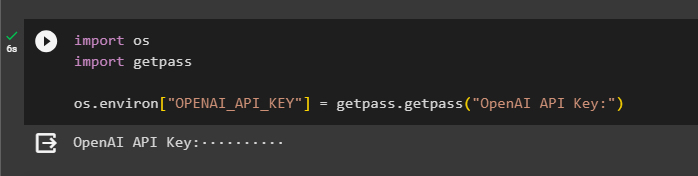

Step 2: Setting OpenAI Environment

Once the modules are installed, set up the OpenAI environment using the API key generated from your OpenAI account:

import os

import getpass

os.environ["OPENAI_API_KEY"] = getpass.getpass("OpenAI API Key:")
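When a notebook cell is re-run, the prompt above asks for the key again. The following is a minimal sketch of one way to avoid that, assuming a hypothetical helper named ensure_api_key that is not part of LangChain or OpenAI. It only prompts when the environment variable is missing:

```python
import os
from typing import Callable


def ensure_api_key(env_var: str, prompt: Callable[[str], str]) -> str:
    """Return the key stored in env_var, prompting for it only if it is missing.

    Hypothetical helper for illustration; not part of any library.
    """
    if not os.environ.get(env_var):
        # Store the value returned by the prompt function in the environment
        os.environ[env_var] = prompt(f"{env_var}: ")
    return os.environ[env_var]


# In a notebook, pass getpass.getpass so the key is not echoed:
#   import getpass
#   ensure_api_key("OPENAI_API_KEY", getpass.getpass)
```

Passing the prompt function as an argument keeps the helper easy to try out with a stand-in function instead of an interactive prompt.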

Step 3: Importing Libraries

Now that the dependencies are installed, import the required libraries from LangChain:

from langchain.agents import initialize_agent

from langchain.agents import load_tools

from langchain.agents import AgentType

from langchain.llms import OpenAI

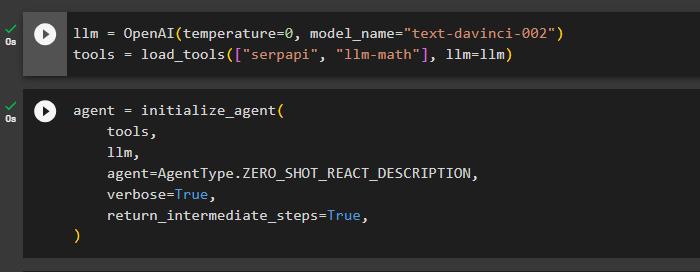

Step 4: Building LLM and Agent

Once the libraries are imported, use them to build the language model and the tools for the agent. Define the llm variable by calling OpenAI() with the temperature and model_name arguments. The tools variable holds the result of load_tools(), which takes the SerpAPI and llm-math tool names along with the language model:

# The temperature and model_name values below are illustrative assumptions

llm = OpenAI(temperature=0, model_name="gpt-3.5-turbo-instruct")

tools = load_tools(["serpapi", "llm-math"], llm=llm)

Once the language model and tools are configured, initialize the agent with them. Setting return_intermediate_steps=True makes the agent return its intermediate steps along with the final answer:

agent = initialize_agent(

tools,

llm,

agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION,

verbose=True,

return_intermediate_steps=True,

)
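To make the idea of returning intermediate steps concrete, here is a toy sketch that does not use LangChain at all. The run_toy_agent function, the Step type, and the two string tools are hypothetical names invented for illustration, and the loop is far simpler than a real agent. It only shows how a flag can control whether the trace of tool calls is included in the returned dictionary:

```python
from typing import Callable, Dict, List, NamedTuple, Tuple


class Step(NamedTuple):
    """One recorded tool call: which tool ran, its input, and what it returned."""
    tool: str
    tool_input: str
    observation: str


def run_toy_agent(
    plan: List[Tuple[str, str]],
    tools: Dict[str, Callable[[str], str]],
    return_intermediate_steps: bool = False,
) -> dict:
    """Run each (tool name, input) pair in plan and optionally keep the trace."""
    steps = []
    observation = ""
    for tool_name, tool_input in plan:
        observation = tools[tool_name](tool_input)
        steps.append(Step(tool_name, tool_input, observation))
    # The last observation stands in for the final answer
    result = {"output": observation}
    if return_intermediate_steps:
        result["intermediate_steps"] = steps
    return result


# Two trivial stand-in tools and a fixed plan (a real agent picks tools itself)
tools = {"upper": str.upper, "reverse": lambda text: text[::-1]}
plan = [("upper", "abc"), ("reverse", "ABC")]

print(run_toy_agent(plan, tools))
print(run_toy_agent(plan, tools, return_intermediate_steps=True))
```

Without the flag, only the final output is returned. With it, the same call also carries the list of recorded steps, which is the kind of data the later sections read from the response.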

Step 5: Using the Agent

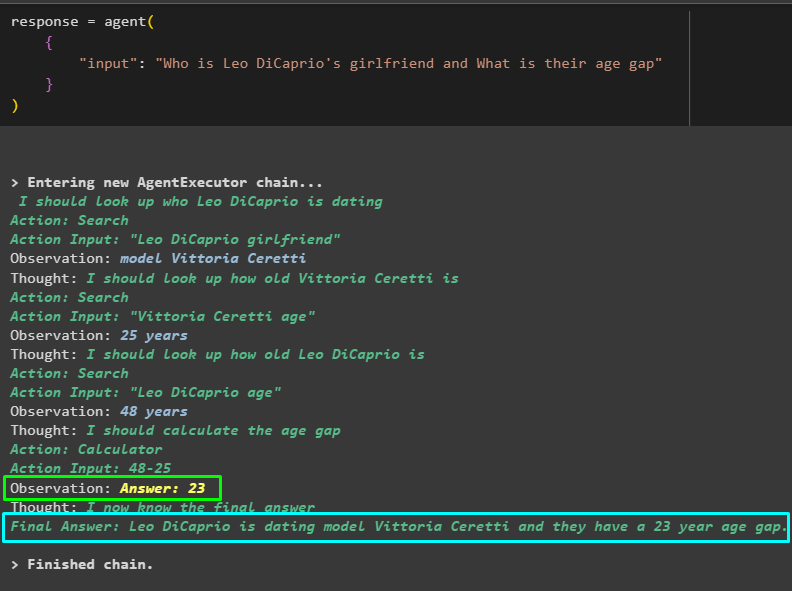

Now, put the agent to the test by passing a question as the input to agent() and storing the result in the response variable:

response = agent(

{

"input": "Who is Leo DiCaprio's girlfriend and What is their age gap"

}

)

The agent found the name of Leo DiCaprio’s girlfriend, her age, Leo DiCaprio’s age, and the difference between them. The following screenshot displays the questions and answers the agent searched through to reach the final answer:

The above screenshot doesn’t show how the agent worked through each step to find those answers. Let’s move to the next section to retrieve the steps:

Method 1: Default Return Type to Access the Intermediate Steps

The first method to access the intermediate steps is to use the default return type offered by LangChain. Print the intermediate_steps key of the response using the following code:

print(response["intermediate_steps"])

The following GIF displays the intermediate steps on a single line, which is hard to read:
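Each entry in the intermediate steps pairs an agent action with the observation it produced, so the list can be looped over rather than printed in one piece. The sketch below assumes that shape and uses a stand-in AgentAction type with tool, tool_input, and log fields, plus made-up sample data, so it runs without LangChain or an API key. The format_steps helper is a hypothetical name:

```python
from typing import List, NamedTuple, Tuple


class AgentAction(NamedTuple):
    """Stand-in for the agent action type; the real class comes from LangChain."""
    tool: str
    tool_input: str
    log: str


# Hypothetical data shaped like response["intermediate_steps"]
sample_steps = [
    (AgentAction("Search", "example query", "I should search first."), "example observation"),
    (AgentAction("Calculator", "2 + 2", "Now I need to compute."), "Answer: 4"),
]


def format_steps(steps: List[Tuple[AgentAction, str]]) -> List[str]:
    """Turn (action, observation) pairs into one readable line per step."""
    lines = []
    for number, (action, observation) in enumerate(steps, start=1):
        lines.append(
            f"Step {number}: {action.tool}({action.tool_input!r}) -> {observation}"
        )
    return lines


for line in format_steps(sample_steps):
    print(line)
```

With a real response, the same loop would be given response["intermediate_steps"] instead of sample_steps.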

Method 2: Using “dumps” to Access the Intermediate Steps

The next method gets the intermediate steps using the dumps() function from the load module of the LangChain framework. Import dumps() and call it with the pretty argument to make the output more structured and easier to read:

from langchain.load.dump import dumps

print(dumps(response["intermediate_steps"], pretty=True))

Now the output is in a more structured form that is easier to read. It is split into multiple sections, and each section contains one of the steps the agent took to answer the question:
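The difference between compact and pretty output can be seen with Python's standard json module, used here only as an analogy. The data below is made up, and this sketch makes no claim about how LangChain's dumps() serializes its objects:

```python
import json

# Hypothetical data loosely shaped like a serialized list of intermediate steps
steps = [
    [{"tool": "Search", "tool_input": "example query"}, "example observation"],
    [{"tool": "Calculator", "tool_input": "2 + 2"}, "Answer: 4"],
]

# Compact form: everything on a single line
compact = json.dumps(steps)

# Indented form: one element per line, nested by depth
pretty = json.dumps(steps, indent=2)

print(compact)
print(pretty)
```

Both strings hold the same data. Only the layout changes, which is why the indented form is easier to scan when an agent takes many steps.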

That’s all about accessing the intermediate steps of an agent in LangChain.

Conclusion

To access the intermediate steps of an agent in LangChain, install the required modules and import the libraries for building the language model. After that, load the tools and initialize the agent with the tools, the llm, the agent type, and return_intermediate_steps=True. Once the agent is configured, test it with a question and then use either the default return type or the dumps() function to access the intermediate steps. This guide has explained the process of accessing the intermediate steps of an agent in LangChain.