Quick Outline

This post will demonstrate the following:

- How to Add Memory to OpenAI Functions Agent in LangChain

- Step 1: Installing Frameworks

- Step 2: Setting up Environments

- Step 3: Importing Libraries

- Step 4: Building Database

- Step 5: Uploading Database

- Step 6: Configuring Language Model

- Step 7: Adding Memory

- Step 8: Initializing the Agent

- Step 9: Testing the Agent

- Conclusion

How to Add Memory to OpenAI Functions Agent in LangChain?

OpenAI is an artificial intelligence (AI) organization that was founded in 2015, initially as a non-profit. Microsoft has invested heavily in it since 2019, as natural language processing (NLP) has boomed with chatbots and language models.

OpenAI functions agents let developers get concise, readable answers by calling tools such as an internet search. Adding memory lets the agent store earlier turns of the conversation and use them as context for later questions. To add memory to the OpenAI functions agent in LangChain, go through the following steps:

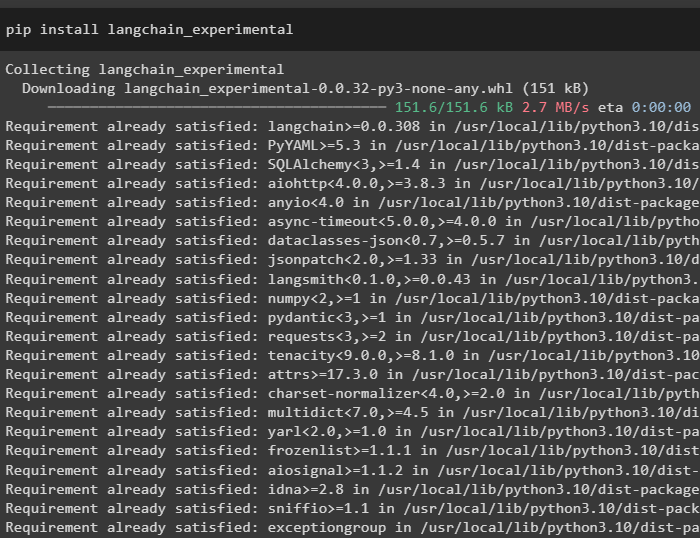

Step 1: Installing Frameworks

First, install the “langchain-experimental” package to get the LangChain dependencies:

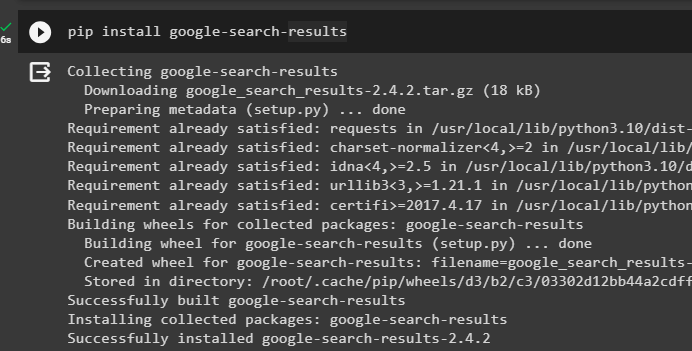

Install the “google-search-results” module to fetch Google search results through SerpAPI:

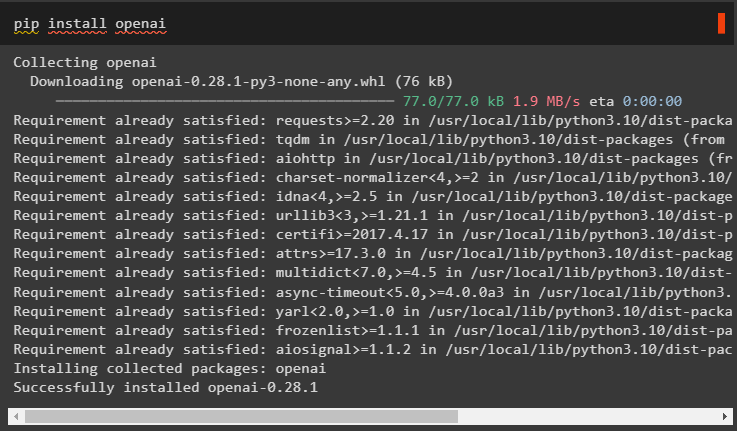

Also, install the OpenAI module that can be used to build the language models in LangChain:

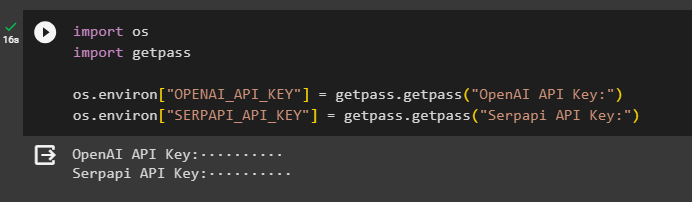
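After the installs finish, it can help to confirm that the packages are importable before moving on. The following is a minimal sketch, not part of the original steps: the helper name missing_modules is hypothetical, and the import names langchain_experimental, serpapi (assumed to be the module that google-search-results provides), and openai are assumptions about the installed versions.

```python
from importlib.util import find_spec


def missing_modules(names):
    """Return the top-level module names the import system cannot find."""
    return [name for name in names if find_spec(name) is None]


# Assumed import names for the three packages installed in this step.
required = ["langchain_experimental", "serpapi", "openai"]
print("Missing modules:", missing_modules(required) or "none")
```

If the list is not empty, rerunning the matching install command for each missing name is usually enough.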

Step 2: Setting up Environments

After installing the modules, set up the environment variables using the API keys from the OpenAI and SerpAPI accounts:

import os

import getpass

os.environ["OPENAI_API_KEY"] = getpass.getpass("OpenAI API Key:")

os.environ["SERPAPI_API_KEY"] = getpass.getpass("Serpapi API Key:")

Execute the above code, enter each API key when prompted, and press Enter to confirm.
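When the notebook is rerun often, it may be convenient to prompt only for keys that are not already set. The sketch below assumes that behavior is wanted; the helper name ensure_api_key and its prompt parameter are hypothetical and not part of LangChain or OpenAI.

```python
import getpass
import os


def ensure_api_key(name, prompt=getpass.getpass):
    """Set os.environ[name] from a hidden prompt unless it is already set."""
    if not os.environ.get(name):
        os.environ[name] = prompt(f"{name}: ")
    return os.environ[name]
```

Calling ensure_api_key("OPENAI_API_KEY") and ensure_api_key("SERPAPI_API_KEY") would then ask for each key at most once per session.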

Step 3: Importing Libraries

Now that the setup is complete, import the libraries required for building the chains, tools, and agent from the installed LangChain modules:

from langchain.llms import OpenAI

#get library to search Google through SerpAPI

from langchain.utilities import SerpAPIWrapper

from langchain.utilities import SQLDatabase

from langchain_experimental.sql import SQLDatabaseChain

#get library to build the math chain used by the calculator tool

from langchain.chains import LLMMathChain

#get library to build tools for initializing the agent

from langchain.agents import AgentType, Tool, initialize_agent

from langchain.chat_models import ChatOpenAI

Step 4: Building Database

To follow this guide, build a database and connect it to the agent so the agent can extract answers from it. Building the database requires SQLite; download it using this guide and confirm the installation using the following command:

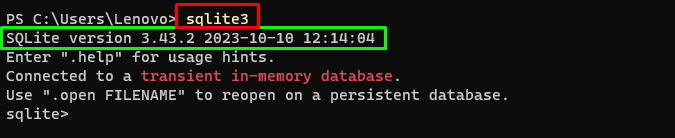

Running the above command in the Windows Terminal displays the installed version of SQLite (3.43.2):

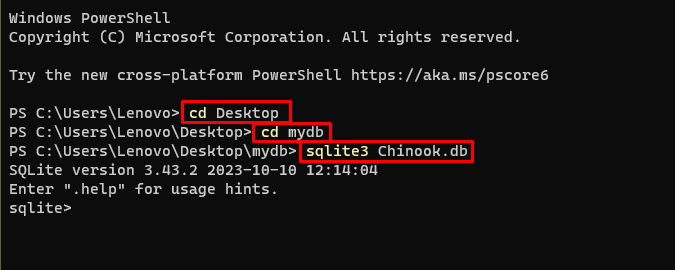

After that, simply head to the directory on your computer where the database will be built and stored:

cd mydb

sqlite3 Chinook.db

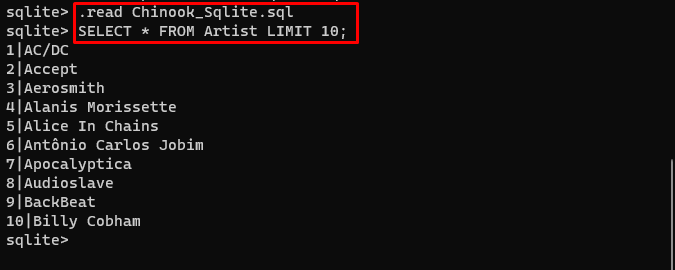

Download the contents of the database from this link into the directory. If the download is a SQL script, load it at the sqlite3 prompt with the “.read” command followed by the file name. Then execute the following query to confirm that the database has been built:

SELECT * FROM Artist LIMIT 10;

If the query returns rows, the database has been built successfully and the user can search it using different queries.
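The same build-and-query flow can also be tried from Python with the built-in sqlite3 module. This is only a sketch under stated assumptions: it uses an in-memory database and a hypothetical two-column miniature of the Artist table with three sample rows, not the real Chinook contents.

```python
import sqlite3

# In-memory database so the sketch leaves no file behind; pass a path such as
# "Chinook.db" to sqlite3.connect() to work with a database file instead.
conn = sqlite3.connect(":memory:")

# Hypothetical miniature of the Artist table (two columns, three sample rows).
conn.executescript(
    """
    CREATE TABLE Artist (ArtistId INTEGER PRIMARY KEY, Name TEXT);
    INSERT INTO Artist (Name) VALUES ('AC/DC'), ('Accept'), ('Aerosmith');
    """
)

# The same confirmation query used at the sqlite3 prompt.
rows = conn.execute("SELECT * FROM Artist LIMIT 10;").fetchall()

print("SQLite version:", sqlite3.sqlite_version)
for row in rows:
    print(row)

conn.close()
```

Getting rows back from the query is the same signal as in the terminal: the table exists and holds data.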

Step 5: Uploading Database

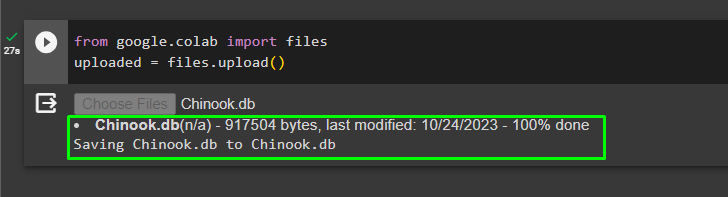

Once the database is built successfully, upload the “.db” file to Google Colaboratory (Colab) using the following code:

from google.colab import files

uploaded = files.upload()

Choose the file from the local system by clicking on the “Choose Files” button after executing the above code.

Once the file is uploaded, simply copy the path of the file, which will be used in the next step.
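The copied path eventually has to become a SQLite connection URI. As an alternative to a relative path, an absolute path can be turned into a URI directly. The sketch below assumes a POSIX-style absolute path such as /content/Chinook.db (an assumed Colab location) and the SQLAlchemy-style URI form that SQLDatabase.from_uri accepts; the helper name sqlite_uri is hypothetical.

```python
from pathlib import PurePosixPath


def sqlite_uri(db_path):
    """Build a SQLAlchemy-style SQLite URI from an absolute POSIX path."""
    path = PurePosixPath(db_path)
    if not path.is_absolute():
        raise ValueError(f"expected an absolute path, got {db_path!r}")
    # "sqlite:///" followed by a path that itself starts with "/" gives the
    # four-slash form used for absolute paths.
    return f"sqlite:///{path}"


print(sqlite_uri("/content/Chinook.db"))  # sqlite:////content/Chinook.db
```

The four slashes are intentional: three belong to the URI scheme and the fourth is the leading slash of the absolute path.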

Step 6: Configuring Language Model

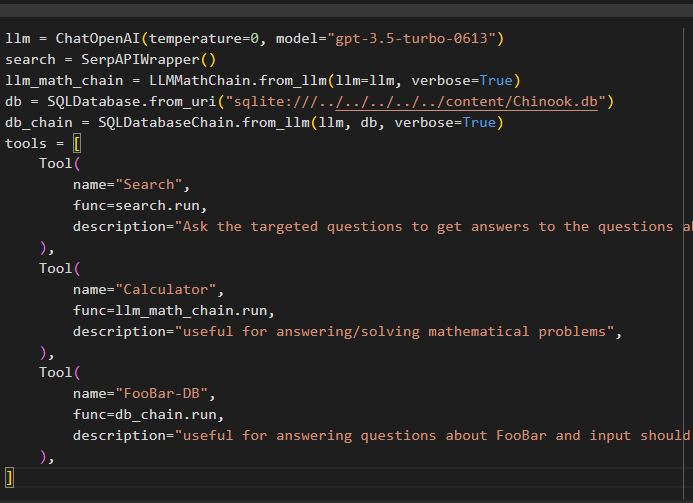

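Build the language model, chains, database connection, and tools using the following code:

#chat model that supports function calling (the model name is an assumed example)

llm = ChatOpenAI(temperature=0, model="gpt-3.5-turbo")

search = SerpAPIWrapper()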

llm_math_chain = LLMMathChain.from_llm(llm=llm, verbose=True)

db = SQLDatabase.from_uri("sqlite:///../../../../../content/Chinook.db")

db_chain = SQLDatabaseChain.from_llm(llm, db, verbose=True)

tools = [

Tool(

name="Search",

func=search.run,

description="Ask the targeted questions to get answers to the questions about recent affairs",

),

Tool(

name="Calculator",

func=llm_math_chain.run,

description="useful for answering/solving mathematical problems",

),

Tool(

name="FooBar-DB",

func=db_chain.run,

description="useful for answering questions about FooBar and input should be in the form of a question containing full context",

),

]

- The llm variable holds the language model, configured using the ChatOpenAI() method with the name of the model.

- The search variable holds the SerpAPIWrapper() instance that backs the search tool for the agent.

- The llm_math_chain variable, built using the LLMMathChain.from_llm() method, answers questions from the mathematics domain.

- The db variable holds the path of the file that has the contents of the database. The user needs to change only the last part of the path, “content/Chinook.db”, keeping “sqlite:///../../../../../” the same.

- The db_chain variable holds another chain, which answers queries from the database.

- The tools list configures Search, Calculator, and FooBar-DB for searching the internet, solving math problems, and querying the database, respectively.
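Each entry in the tools list bundles a name, a callable, and a description; the model sees the names and descriptions and replies with the name of the tool to call plus its input. The sketch below illustrates that lookup in plain Python. It is a conceptual stand-in rather than LangChain's implementation, and SimpleTool, call_tool, and the two demo tools are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class SimpleTool:
    """Hypothetical stand-in for a tool: a name, a callable, and a description."""
    name: str
    func: Callable[[str], str]
    description: str


def call_tool(tools, name, tool_input):
    """Look up a tool by name and run it on a string input."""
    registry = {tool.name: tool for tool in tools}
    if name not in registry:
        raise KeyError(f"unknown tool: {name}")
    return registry[name].func(tool_input)


demo_tools = [
    SimpleTool("Echo", lambda text: text, "returns the input unchanged"),
    SimpleTool("Upper", str.upper, "returns the input in upper case"),
]

print(call_tool(demo_tools, "Upper", "hello"))  # HELLO
```

This is why the description strings matter: they are the only hint the model gets about when each tool applies.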

Step 7: Adding Memory

After configuring the OpenAI functions, simply build and add the memory to the agent:

from langchain.memory import ConversationBufferMemory

from langchain.prompts import MessagesPlaceholder

agent_kwargs = {

"extra_prompt_messages": [MessagesPlaceholder(variable_name="memory")],

}

memory = ConversationBufferMemory(memory_key="memory", return_messages=True)
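The memory_key passed to ConversationBufferMemory has to match the variable_name of the MessagesPlaceholder, because the placeholder marks where the stored messages are inserted into the prompt. The following plain-Python sketch shows that idea only; BufferMemorySketch and build_prompt are hypothetical stand-ins, not LangChain classes, and the sample messages are invented.

```python
class BufferMemorySketch:
    """Hypothetical stand-in that keeps every message of the chat in order."""

    def __init__(self, memory_key="memory"):
        self.memory_key = memory_key
        self.messages = []

    def save_turn(self, user_text, ai_text):
        """Append one human message and the matching AI reply."""
        self.messages.append(("human", user_text))
        self.messages.append(("ai", ai_text))

    def load(self):
        """Return the history keyed by memory_key so a prompt slot can find it."""
        return {self.memory_key: list(self.messages)}


def build_prompt(placeholder_name, memory, new_input):
    """Insert the stored history where the placeholder sits, then the new input."""
    history = memory.load()[placeholder_name]  # KeyError if the names differ
    return history + [("human", new_input)]


memory_sketch = BufferMemorySketch(memory_key="memory")
memory_sketch.save_turn("hi", "Hello! How can I help?")
prompt = build_prompt("memory", memory_sketch, "what did I say first?")
print(prompt)
```

If the two names differ, the lookup fails, which mirrors why both are set to "memory" in the code above.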

Step 8: Initializing the Agent

The last component to build is the agent. Initialize it with the tools, the llm, the OPENAI_FUNCTIONS agent type, and the memory built in the previous step:

agent = initialize_agent(

tools,

llm,

agent=AgentType.OPENAI_FUNCTIONS,

verbose=True,

agent_kwargs=agent_kwargs,

memory=memory,

)

Step 9: Testing the Agent

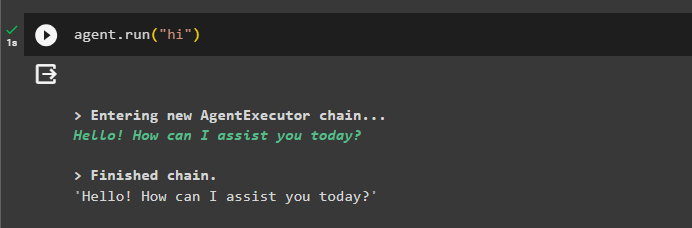

Finally, test the agent by initiating the chat using the “hi” message:

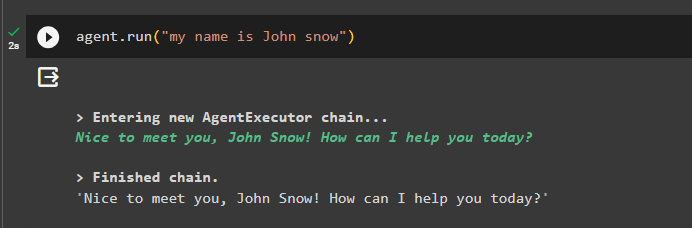

Add some information to the memory by running the agent with it:

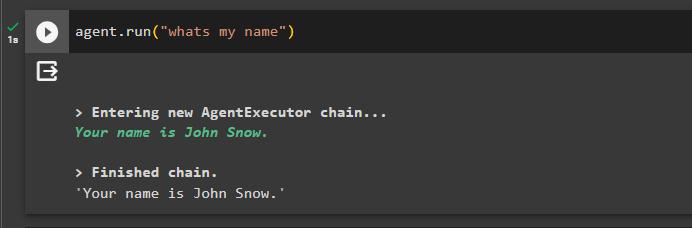

Now, test the memory by asking a question about the previous chat.

The agent responds with the name fetched from the memory, which confirms that the memory is working with the agent.
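The three test messages can be sent in one go with a small helper. This is a sketch, assuming the agent object exposes a run() method that takes a string, as the agent returned by initialize_agent does in the LangChain version used here. The helper name run_memory_check is hypothetical, and only the “hi” message comes from the steps above; the other two messages are illustrative.

```python
def run_memory_check(agent, messages):
    """Send each message to agent.run() in order and collect the replies."""
    replies = []
    for message in messages:
        replies.append(agent.run(message))
    return replies


# "hi" comes from the steps above; the other two messages are hypothetical.
conversation = ["hi", "my name is Bob", "what is my name?"]
```

Calling run_memory_check(agent, conversation) with the agent from Step 8 returns one reply per message; if the memory is wired correctly, the last reply should mention the name given in the second message.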

That’s all for now.

Conclusion

To add memory to the OpenAI functions agent in LangChain, install the modules to get the dependencies for importing the libraries. After that, build the database and upload it to the Python notebook so it can be used with the model. Configure the model, chains, database, and tools, then build the memory using ConversationBufferMemory() and pass it to the agent when initializing it. Finally, test the agent by asking it about information given earlier in the chat. This guide has elaborated on how to add memory to the OpenAI functions agent in LangChain.