How to Use a Conversation Buffer Window in LangChain?

The conversation buffer window memory keeps only the most recent exchanges of a conversation, so the model always works with the latest context. The value of k sets how many of the most recent interactions (one human message plus the AI reply) LangChain passes along as history. Older interactions fall outside the window.

To learn how to use the conversation buffer window in LangChain, follow the steps in this guide:

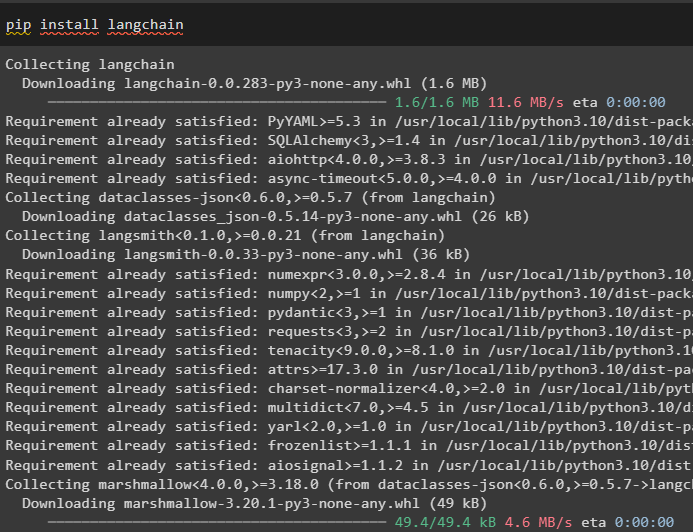

Step 1: Install Modules

Start by installing the LangChain module and the dependencies it needs for building conversation models (typically with pip install langchain):

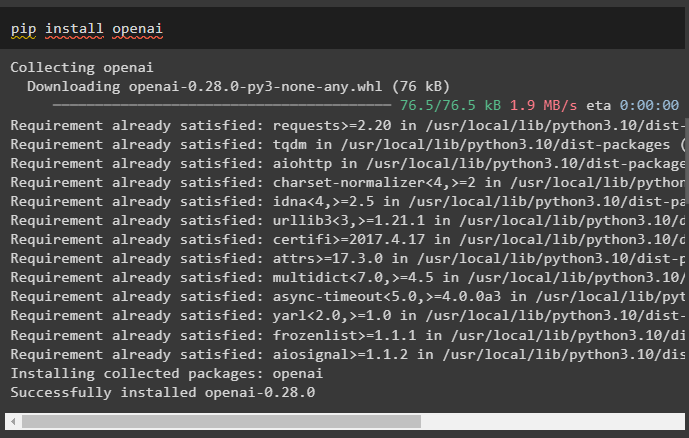

After that, install the OpenAI module (typically with pip install openai), which LangChain uses to call OpenAI's large language models:

Now set the OPENAI_API_KEY environment variable using the API key from your OpenAI account:

import os

import getpass

os.environ["OPENAI_API_KEY"] = getpass.getpass("OpenAI API Key:")
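The snippet above prompts for the key every time it runs. As a minimal sketch of an alternative, the helper below prompts only when the variable is not already set. The function name ensure_openai_key and its prompt parameter are hypothetical and not part of LangChain or the OpenAI package.

```python
import getpass
import os


def ensure_openai_key(prompt=getpass.getpass):
    """Return the OpenAI API key, prompting only if it is not already set.

    `ensure_openai_key` and `prompt` are hypothetical names for this sketch.
    """
    key = os.environ.get("OPENAI_API_KEY")
    if not key:
        # Ask interactively; getpass hides the typed characters.
        key = prompt("OpenAI API Key:")
        os.environ["OPENAI_API_KEY"] = key
    return key
```

Calling ensure_openai_key() once at the top of a notebook would leave an existing key untouched, which can be convenient when the key is already exported in the shell.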

Step 2: Using Conversation Buffer Window Memory

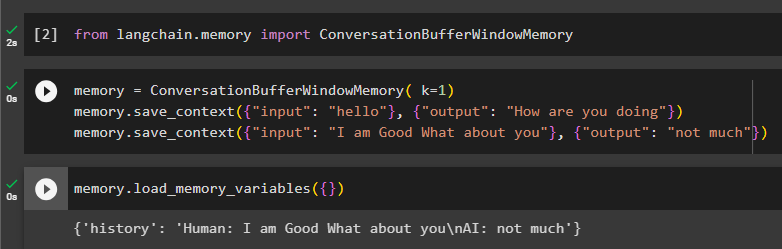

To use the conversation buffer window memory in LangChain, import the ConversationBufferWindowMemory class:

from langchain.memory import ConversationBufferWindowMemory

Create the memory by calling ConversationBufferWindowMemory() with k as its argument. The value of k sets how many of the most recent exchanges are kept. Then store some sample exchanges with save_context(), passing the human input and the AI output:

# k=1 is an assumed value for this example; it keeps only the last exchange
memory = ConversationBufferWindowMemory(k=1)

memory.save_context({"input": "hello"}, {"output": "How are you doing"})

memory.save_context({"input": "I am Good What about you"}, {"output": "not much"})

Check what the memory currently holds by calling the load_memory_variables() method:

memory.load_memory_variables({})

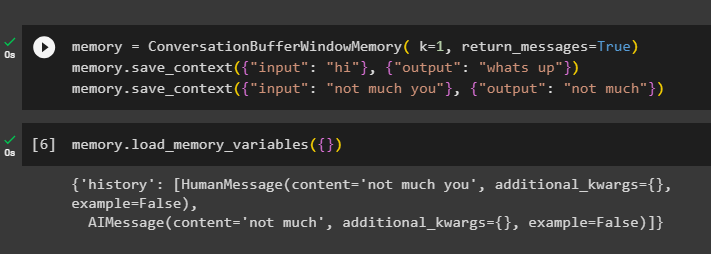
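To see why only the latest exchanges come back, the windowing can be imitated in plain Python. This is a simplified sketch and not LangChain's implementation: the class name WindowMemorySketch is hypothetical, and k=1 is an assumed window size. It mirrors the save_context() and load_memory_variables() calls used above.

```python
class WindowMemorySketch:
    """Toy stand-in for a k-window conversation memory (not LangChain code)."""

    def __init__(self, k=1):
        self.k = k
        self.exchanges = []  # list of (human_text, ai_text) pairs

    def save_context(self, inputs, outputs):
        # Store one full exchange: the human input and the AI output.
        self.exchanges.append((inputs["input"], outputs["output"]))

    def load_memory_variables(self, _inputs=None):
        # Only the last k exchanges are exposed; older ones stay hidden.
        recent = self.exchanges[-self.k:] if self.k > 0 else []
        lines = []
        for human, ai in recent:
            lines.append(f"Human: {human}")
            lines.append(f"AI: {ai}")
        return {"history": "\n".join(lines)}


memory = WindowMemorySketch(k=1)
memory.save_context({"input": "hello"}, {"output": "How are you doing"})
memory.save_context({"input": "I am Good What about you"}, {"output": "not much"})
print(memory.load_memory_variables({}))
# {'history': 'Human: I am Good What about you\nAI: not much'}
```

With k=1 the first exchange ("hello") is no longer part of the returned history, even though it was saved.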

To get the history as a list of message objects instead of a single string, create the memory with return_messages=True:

# k=1 is an assumed value for this example
memory = ConversationBufferWindowMemory(k=1, return_messages=True)

memory.save_context({"input": "hi"}, {"output": "whats up"})

memory.save_context({"input": "not much you"}, {"output": "not much"})

Now call the load_memory_variables() method to get the windowed history as message objects:

memory.load_memory_variables({})
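The difference between the two return styles can be illustrated without LangChain. In the rough sketch below, the Message dataclass and the window_history function are hypothetical names, and k=1 is an assumed window size. The same windowed slice is returned either as one formatted string or as a list of message objects.

```python
from dataclasses import dataclass


@dataclass
class Message:
    role: str      # "human" or "ai"
    content: str


def window_history(messages, k, return_messages=False):
    """Return the last k exchanges (2 * k messages) as objects or as one string."""
    recent = messages[-2 * k:] if k > 0 else []
    if return_messages:
        return list(recent)
    labels = {"human": "Human", "ai": "AI"}
    return "\n".join(f"{labels[m.role]}: {m.content}" for m in recent)


log = [
    Message("human", "hi"),
    Message("ai", "whats up"),
    Message("human", "not much you"),
    Message("ai", "not much"),
]

as_text = window_history(log, k=1)
as_objects = window_history(log, k=1, return_messages=True)
```

A list of message objects is usually the more convenient form when the history feeds a chat model, while the single string suits a plain text prompt.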

Step 3: Using Buffer Window in a Chain

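Build the chain using the OpenAI and ConversationChain classes, and pass it a ConversationBufferWindowMemory so that only the most recent exchanges are included in each prompt:

from langchain.llms import OpenAI

from langchain.chains import ConversationChain

from langchain.memory import ConversationBufferWindowMemory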

# build a conversation chain backed by a windowed memory
conversation_with_summary = ConversationChain(
    llm=OpenAI(temperature=0),
    # k=2 keeps only the last two exchanges in the prompt
    memory=ConversationBufferWindowMemory(k=2),
    # verbose=True prints the formatted prompt for each call
    verbose=True,
)

conversation_with_summary.predict(input="Hi, what's up")

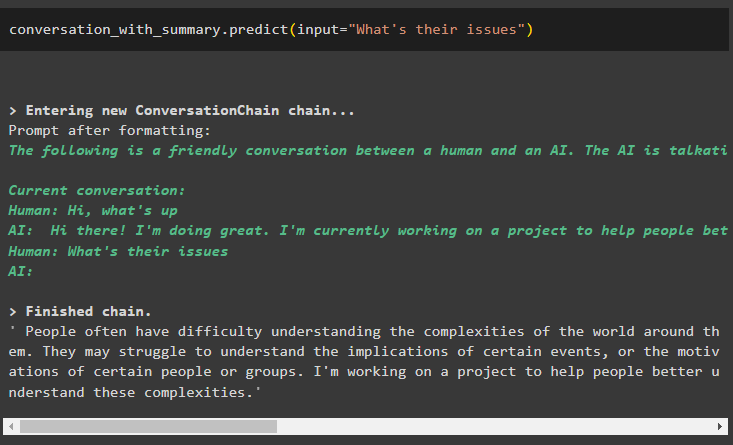

Keep the conversation going by asking a question related to the model's previous output:

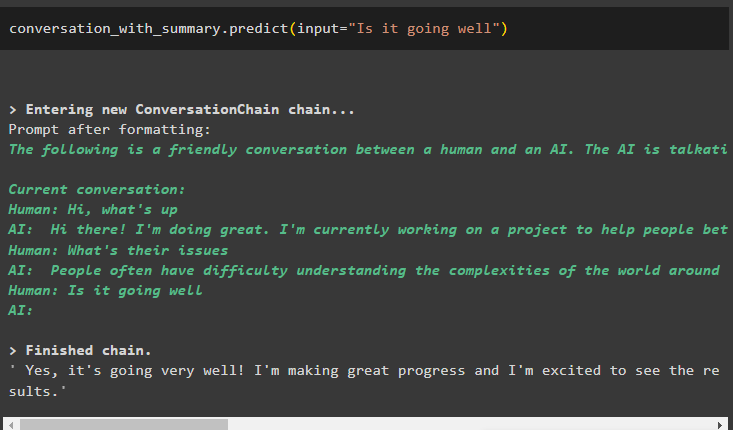

Because the memory was created with k=2, only the two most recent exchanges are passed to the model as context:

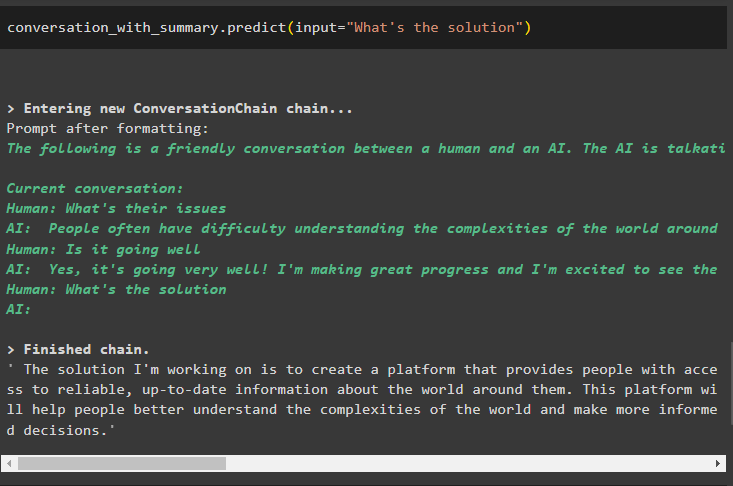

As you ask further questions, the buffer window keeps sliding forward: each new exchange pushes the oldest one out of the prompt.
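The sliding behavior can be traced with a small standalone sketch. It is an illustration under assumptions, not the chain's actual code. The prompt template is a simplified, hypothetical stand-in for the one ConversationChain uses, and the questions and replies are placeholders for real model output. It also uses a deque that discards old entries, which is a simplification.

```python
from collections import deque

K = 2  # same window size as the chain above
window = deque(maxlen=K)  # a full deque silently drops its oldest entry


def build_prompt(user_input):
    """Assemble a prompt from the exchanges currently inside the window."""
    history = "\n".join(f"Human: {h}\nAI: {a}" for h, a in window)
    return f"Current conversation:\n{history}\nHuman: {user_input}\nAI:"


# Hypothetical questions and replies stand in for a real conversation.
turns = [
    ("Hi, what's up", "reply 1"),
    ("Question 2", "reply 2"),
    ("Question 3", "reply 3"),
    ("Question 4", "reply 4"),
]

prompts = []
for user_input, reply in turns:
    prompts.append(build_prompt(user_input))  # prompt sees the window so far
    window.append((user_input, reply))        # then the new exchange is stored

print(prompts[3])
```

By the fourth turn the printed prompt contains only the second and third exchanges. The first one has slid out of the window.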

That covers the process of using the conversation buffer window in LangChain.

Conclusion

To use the conversation buffer window memory in LangChain, install the modules and set up the environment using your OpenAI API key. After that, create the buffer memory with a value of k so that only the most recent exchanges are kept as context. The same memory can be attached to a chain, such as ConversationChain, to carry on a conversation with the LLM. This guide has walked through the process of using the conversation buffer window in LangChain.