In this article, we will show you how to check whether TensorFlow can use a GPU to accelerate artificial intelligence (AI) and machine learning (ML) programs.

Table of Contents:

- Checking If TensorFlow Is Using GPU from the Python Interactive Shell

- Checking If TensorFlow Is Using GPU by Running a Python Script

- Conclusion

Checking If TensorFlow Is Using GPU from the Python Interactive Shell

From the Python Interactive Shell, you can check whether TensorFlow was built with GPU support and whether it can use a GPU to accelerate AI/ML computations.

To open a Python Interactive Shell, run the following command from a Terminal app:

Import TensorFlow with the following Python statement:

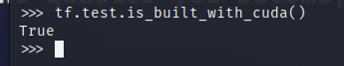

To test whether TensorFlow was compiled with GPU (CUDA) support, run tf.test.is_built_with_cuda() in the Python Interactive Shell. It returns True if TensorFlow was built with CUDA support and False otherwise. Note that this only tells you how TensorFlow was built; it does not confirm that a usable GPU is present.

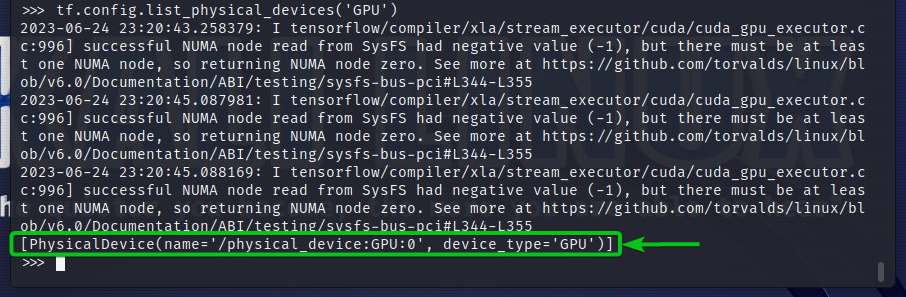
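Because tf.test.is_built_with_cuda() only reports how TensorFlow was compiled, a few more build details can help when troubleshooting. The following is a minimal sketch, assuming TensorFlow 2.3 or newer (where tf.sysconfig.get_build_info() is available). The helper name describe_build() is hypothetical and not part of TensorFlow. Unlike is_built_with_cuda(), tf.test.is_built_with_gpu_support() also returns True for ROCm builds.

```python
def describe_build():
    """Summarize how the installed TensorFlow was built.

    Assumes TensorFlow 2.3+ (for tf.sysconfig.get_build_info()).
    Returns None if TensorFlow is not installed.
    """
    try:
        import tensorflow as tf
    except ImportError:
        return None  # TensorFlow is not installed in this environment

    info = tf.sysconfig.get_build_info()  # dict-like mapping of build-time settings
    return {
        "built_with_cuda": tf.test.is_built_with_cuda(),
        # True for either CUDA or ROCm GPU builds
        "built_with_gpu_support": tf.test.is_built_with_gpu_support(),
        # These keys are normally only present in GPU builds, hence .get()
        "cuda_version": info.get("cuda_version"),
        "cudnn_version": info.get("cudnn_version"),
    }


if __name__ == "__main__":
    print(describe_build())
```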

To list the GPU devices that TensorFlow can access, run tf.config.list_physical_devices('GPU') in the Python Interactive Shell. The output lists every GPU that TensorFlow can use. Here, TensorFlow can use only one GPU, GPU:0, for AI/ML acceleration.

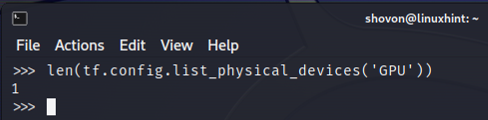
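If you want more than the device names, you can loop over the returned list and query each device. This is a sketch assuming TensorFlow 2.3 or newer, where tf.config.experimental.get_device_details() exists; the helper list_gpus() is a hypothetical name chosen for illustration. The details dictionary depends on the device; for GPUs it may include keys such as 'device_name' and 'compute_capability'.

```python
def list_gpus():
    """Return (name, details) pairs for every GPU TensorFlow can see.

    Assumes TensorFlow 2.3+ (for get_device_details()).
    Returns an empty list if TensorFlow is not installed.
    """
    try:
        import tensorflow as tf
    except ImportError:
        return []  # no TensorFlow, so no GPUs visible to it

    gpus = tf.config.list_physical_devices("GPU")
    # Each entry is a PhysicalDevice namedtuple with .name and .device_type
    return [(gpu.name, tf.config.experimental.get_device_details(gpu)) for gpu in gpus]


if __name__ == "__main__":
    for name, details in list_gpus():
        print(name, details)
```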

You can also check how many GPUs TensorFlow can use by running len(tf.config.list_physical_devices('GPU')) in the Python Interactive Shell. In our case, it returns 1, so TensorFlow has one GPU available for AI/ML acceleration.
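Listing GPUs shows that TensorFlow can see a GPU, not that it actually runs work on one. One way to confirm this, sketched below under the assumption of TensorFlow 2.x with eager execution (the default), is to run a small operation and read the device attribute of the resulting tensor. The function name matmul_device() is hypothetical.

```python
def matmul_device():
    """Run a tiny matrix multiplication and return the device that produced the result.

    Assumes TensorFlow 2.x in eager mode. Returns None if TensorFlow is not installed.
    """
    try:
        import tensorflow as tf
    except ImportError:
        return None

    a = tf.constant([[1.0, 2.0], [3.0, 4.0]])
    b = tf.constant([[1.0, 0.0], [0.0, 1.0]])
    # With a visible GPU, TensorFlow places this op on the first GPU by default
    c = tf.matmul(a, b)
    return c.device  # full device string of the tensor holding the result


if __name__ == "__main__":
    print(matmul_device())
```

If a GPU is visible, the returned string should end in GPU:0; on a CPU-only setup it ends in CPU:0. For a broader view, calling tf.debugging.set_log_device_placement(True) before running operations makes TensorFlow log the device chosen for each one.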

Checking If TensorFlow Is Using GPU by Running a Python Script

You can also check whether TensorFlow is using a GPU by writing and running a simple Python script.

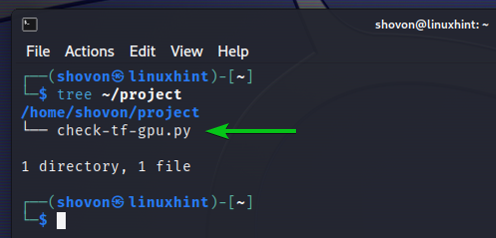

Here, we created a Python source file named “check-tf-gpu.py” in the project directory (~/project in our case) to test whether TensorFlow can use a GPU.

The contents of the “check-tf-gpu.py” Python source file are as follows:

import tensorflow as tf

hasGPUSupport = tf.test.is_built_with_cuda()  # True if this TensorFlow build has CUDA support

gpuList = tf.config.list_physical_devices('GPU')  # GPUs that TensorFlow can see

print("TensorFlow compiled with CUDA/GPU support:", hasGPUSupport)

print("TensorFlow can access", len(gpuList), "GPU(s)")

print("Accessible GPUs are:")

print(gpuList)

Here’s how our ~/project directory looks after creating the “check-tf-gpu.py” Python script:

You can run the “check-tf-gpu.py” Python script from the ~/project directory as follows:

The output of the “check-tf-gpu.py” Python script shows whether TensorFlow was compiled with CUDA/GPU support, how many GPUs are available to TensorFlow, and which GPUs they are.
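If you run this check in an automated setup (for example, before starting a long training job), an exit status is often easier to act on than printed text. The sketch below assumes TensorFlow 2.x and reuses the same two calls as “check-tf-gpu.py”; the function name gpu_check_exit_code() is hypothetical.

```python
def gpu_check_exit_code():
    """Return 0 if TensorFlow was built with CUDA and sees at least one GPU, else 1."""
    try:
        import tensorflow as tf
    except ImportError:
        return 1  # without TensorFlow there is no GPU acceleration either

    built_with_cuda = tf.test.is_built_with_cuda()
    gpus = tf.config.list_physical_devices("GPU")
    # Both conditions must hold for TensorFlow to use a CUDA GPU
    return 0 if built_with_cuda and gpus else 1
```

Ending a script with sys.exit(gpu_check_exit_code()) (after import sys) would let the calling shell see 0 when a GPU is usable and 1 otherwise.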

Conclusion

We showed you how to check whether TensorFlow can use a GPU to accelerate AI/ML programs, both from the Python Interactive Shell and with a simple Python script.