Syntax:

A prompt template can contain the following parts, each of which can serve as input to a language model:

- A prompt template can be a question to the large language model.

- A prompt template can also be a set of instructions for the language model.

- A prompt template may also include a few examples that help the model generate a good response.
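To make these three parts concrete, here is a minimal plain-Python sketch (not LangChain code) that assembles one prompt from an instruction, a couple of examples, and a question. The instruction text, the example pairs, and the helper name `build_prompt` are hypothetical and chosen only for illustration.

```python
from typing import List, Tuple


def build_prompt(instruction: str, examples: List[Tuple[str, str]], question: str) -> str:
    """Join an instruction, example question/answer pairs, and a final question."""
    parts = [instruction]
    # Each example shows the model the expected question/answer pattern
    for q, a in examples:
        parts.append(f"Q: {q}\nA: {a}")
    # The final question is left open for the model to answer
    parts.append(f"Q: {question}\nA:")
    return "\n\n".join(parts)


prompt_text = build_prompt(
    "Suggest one short, catchy name for each business.",
    [("A shop that sells shoes", "Sole Mates"), ("A shop that sells coffee", "Bean There")],
    "A shop that sells books",
)
print(prompt_text)
```

The instruction comes first, the examples set the pattern, and the open-ended question at the end is what the model is expected to complete.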

The syntax for this function is simple and takes the form PromptTemplate(input_variables=[list of variable names], template="question text with {placeholders}"). It takes two input parameters: “input_variables”, which lists the names of the variables (here, the product that we ask the language model about), and “template”, which holds the question, with each variable written as a placeholder in curly braces such as {product}.
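In the examples in this article, each name listed in “input_variables” corresponds to a `{placeholder}` in the template text. As a rough illustration of that relationship (a plain-Python sketch using the standard library's `string.Formatter`, not LangChain's own code), the placeholder names can be extracted from a template string as follows; the helper name `placeholder_names` is hypothetical.

```python
from string import Formatter
from typing import List


def placeholder_names(template: str) -> List[str]:
    """Return the {field} names in a format string, in order, without duplicates."""
    names: List[str] = []
    # Formatter().parse yields (literal_text, field_name, format_spec, conversion)
    for _, field_name, _, _ in Formatter().parse(template):
        if field_name and field_name not in names:
            names.append(field_name)
    return names


print(placeholder_names("How would you suggest a good name for the {product}?"))
print(placeholder_names("suggest a good name for the {content} with the genre {adjective}"))
```

LangChain's PromptTemplate also provides a `from_template()` class method that infers the input variables from the template text in a similar spirit, so listing them by hand is not the only option.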

Example 1: Method to Create a Prompt Template for the Language Model

Let’s jump into the implementation of the prompt template, following the syntax from the previous section of this article. To work with language models, we first need a recent version of Python installed, or we can simply use an online notebook such as Google Colab or Jupyter. Here, we use the Python IDE “PyCharm” because it makes managing and importing the required libraries convenient.

After installing Python, the next step is to install the LangChain library for the project. This library provides the functions needed to work with language models, and the prompt template is one of them. To install LangChain, run the “pip install langchain” command in the terminal (in a Colab or Jupyter notebook cell, prefix it with an exclamation mark: “!pip install langchain”).
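To confirm that the installation worked, a small check like the following can be run in the same environment. It uses only the standard library's `importlib.metadata` (available in Python 3.8+); the helper name `installed_version` is hypothetical.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional


def installed_version(package: str) -> Optional[str]:
    """Return the installed version string of `package`, or None if it is not installed."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


# Quick check after running the pip command
print("langchain:", installed_version("langchain") or "not installed")
```

If this prints "not installed", the pip command most likely ran in a different Python environment than the one running the script.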

Once the library and its dependencies are installed, we import “PromptTemplate” from LangChain and use the “PromptTemplate()” function to create a prompt, which then serves as the input to our language model. To create the prompt, we call “PromptTemplate()” and pass “product” as its input variable. Let’s say we want a name for a shop that sells books. The second parameter, “template”, holds the question that we want to ask about the specified product. For this example, we set the question to “How would you suggest a good name for the {product}?”.

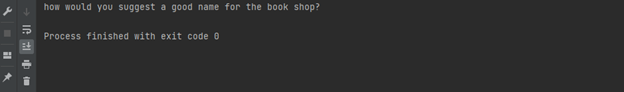

Next, we call the “prompt.format()” method and pass it the value for the product, which in this scenario is “book shop”. Finally, we print the result with the print() function and run the code to check its output.

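from langchain_core.prompts import PromptTemplate  # older releases: from langchain.prompts import PromptTemplate

# Define a template with one placeholder, {product}
prompt = PromptTemplate(
    input_variables=["product"],
    template="How would you suggest a good name for the {product}?",
)

print(prompt.format(product="book shop"))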

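Running the code prints the formatted prompt: “How would you suggest a good name for the book shop?”. This text is not yet a model response; it is the prompt that we would send to the language model to get a name suggestion for a bookshop. The code and its output for this example are shown in the image above.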
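Because LangChain's default template format follows Python's f-string style, “prompt.format()” essentially substitutes the supplied values into the `{...}` placeholders. The hypothetical `SimplePromptTemplate` class below is a simplified stand-in written for illustration, not LangChain's implementation; it mimics the two parameters used above and, as an extra check of its own, refuses to format when a declared input variable is missing.

```python
from typing import List


class SimplePromptTemplate:
    """Hypothetical, simplified stand-in for a prompt template (not LangChain's code)."""

    def __init__(self, input_variables: List[str], template: str) -> None:
        self.input_variables = input_variables
        self.template = template

    def format(self, **kwargs: str) -> str:
        # Refuse to build a prompt if any declared variable is missing
        missing = [name for name in self.input_variables if name not in kwargs]
        if missing:
            raise ValueError(f"missing input variables: {missing}")
        # Substitute the values into the {placeholders} with str.format
        return self.template.format(**kwargs)


prompt = SimplePromptTemplate(
    input_variables=["product"],
    template="How would you suggest a good name for the {product}?",
)
print(prompt.format(product="book shop"))
```

Keeping the template separate from the values is what lets the same prompt be reused for a different product by changing only the argument to format().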

Example 2: Creating a Prompt Template with Multiple Input Variables

So far, we have created a prompt template with a single input variable, which holds the product that we want to ask the model about.

In this example, we work with a prompt template that has multiple input variables. Instead of defining only the product, as in the previous example, we describe it with two variables: “adjective”, which describes the type of content we want (here, a genre), and “content”, which holds the product name. Let’s walk through an example to make this clearer.

As before, we first install LangChain and import “PromptTemplate” from it. We then create a “multi_prompt” by calling the “PromptTemplate()” function and passing two variables, “adjective” and “content”, to its “input_variables” argument. As the second argument, “template”, we pass a question that uses both variables.

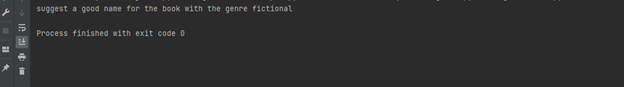

For this example, let’s say we want the model to suggest a name for a book in the fictional genre. We call the “format()” method on “multi_prompt”, passing “fictional” as the adjective (the genre) and “book” as the content (the product name). The result is then displayed with print(). The code for this example is as follows:

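from langchain_core.prompts import PromptTemplate  # older releases: from langchain.prompts import PromptTemplate

# Define a template with two placeholders, {adjective} and {content}
multi_prompt = PromptTemplate(
    input_variables=["adjective", "content"],
    template="suggest a good name for the {content} with the genre {adjective}",
)

# Call format() on multi_prompt, not on the single-variable prompt from Example 1
print(multi_prompt.format(adjective="fictional", content="book"))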

Running this code should print the formatted prompt “suggest a good name for the book with the genre fictional”, which asks the model to suggest a name for a fictional book. As both examples show, PromptTemplate helps create well-structured prompts that serve as input to large language models, which can lead to better responses.
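One practical benefit of a multi-variable template is that the same template can be reused for many combinations of values. The plain-Python sketch below uses `str.format`, which follows the same `{placeholder}` syntax as the template above, to fill the example's template for a few genres; the helper name `fill_all` and the genre list are illustrative only.

```python
from typing import List

# Same template text as in the multi-variable example
TEMPLATE = "suggest a good name for the {content} with the genre {adjective}"


def fill_all(content: str, adjectives: List[str]) -> List[str]:
    """Return one formatted prompt per adjective, reusing the same template."""
    return [TEMPLATE.format(adjective=adj, content=content) for adj in adjectives]


for line in fill_all("book", ["fictional", "mystery", "science fiction"]):
    print(line)
```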

Conclusion

This article covered two examples of using PromptTemplate. With the help of the LangChain library for large language models, we imported PromptTemplate and created prompts with both a single input variable and multiple input variables.