What Are %ul and %lu Format Specifiers?

To start, it is important to note that %lu and %ul are not two spellings of the same thing. In a C format string, %lu is the correct specifier for the unsigned long data type: “l” is the length modifier meaning “long,” and “u” is the conversion specifier meaning “unsigned decimal integer.” The length modifier must come before the conversion specifier, so the order of the letters matters. %ul, on the other hand, is not a single specifier at all. printf() reads “%u,” which expects an unsigned int argument, and then treats the “l” as an ordinary character that is printed literally.

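As a result, if you use %ul, the output always ends with a stray letter l. If the argument is an unsigned long rather than an unsigned int, the argument type also no longer matches %u, which the C standard treats as undefined behavior; in practice, the value may be printed incorrectly on platforms where unsigned long is wider than unsigned int. When printing a variable, it’s critical to use the format specifier that matches its data type.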

Here is some sample code showing how %ul and %lu format specifiers differ:

%ul Format Specifier in C

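#include <stdio.h>

int main(void) {

unsigned long int i = 1234567890;

/* %ul is parsed as %u followed by a literal 'l' (wrong for unsigned long) */

printf("Using %%ul format specifier: %ul\n", i);

return 0;

}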

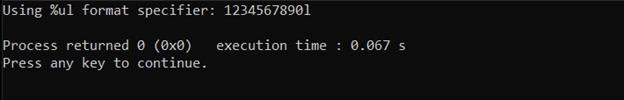

In the above code, we define the variable i as an unsigned long integer and initialize it to 1234567890. The printf command then uses the %ul format specifier to print the value of i. Because just %u is the major component of the specifier and l is outside of the format, it will print out the number with the suffix l at the end.

Output

%lu Format Specifier in C

#include <stdio.h>

int main(void) {

unsigned long int i = 1234567890;

/* %lu matches the unsigned long argument */

printf("Using %%lu format specifier: %lu\n", i);

return 0;

}

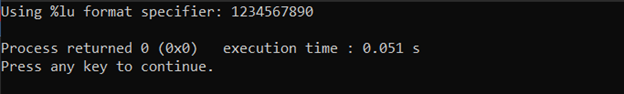

In the above code, the unsigned long integer variable i is declared and initialized to 1234567890 and then printed using the %lu format specifier.

Output

Differences Between %ul and %lu Format Specifiers in C

1: Range of Values

The main difference between %ul and %lu has to do with the type of argument printf() expects, and therefore the range of values that can be printed correctly. The %u in %ul expects an unsigned int, whose range is 0 to UINT_MAX (2^32-1 on most current platforms). The %lu specifier expects an unsigned long, whose range is 0 to ULONG_MAX. The C standard only guarantees that unsigned long is at least 32 bits wide: it is 64 bits (0 to 2^64-1) on 64-bit Linux and macOS, but 32 bits (0 to 2^32-1) on Windows and on most 32-bit systems. The specifier itself does not set the range; it has to match the type of the argument.

2: Precision

Integer types have no precision in the floating-point sense, but using the wrong specifier can still produce wrong results. Where unsigned long is 64 bits, %lu prints every value up to 2^64-1 exactly. Passing the same value to %ul is undefined behavior; in practice, printf() usually reads only 32 bits of the argument, so any value above 2^32-1 is printed incorrectly, and the stray l is appended as well. Where unsigned long and unsigned int have the same width, the number itself is typically printed correctly, but the trailing l still appears and the code remains non-portable.

3: Memory

Finally, a format specifier does not use memory by itself. The memory a value occupies is fixed by the type of the variable: sizeof(unsigned int) bytes for an unsigned int (commonly 4) and sizeof(unsigned long) bytes for an unsigned long (4 or 8, depending on the platform). What the specifier controls is the type that printf() retrieves from its argument list: %u retrieves an unsigned int, while %lu retrieves an unsigned long. Choosing %lu therefore does not make a program use more memory, and it is the right choice for unsigned long values in programs of any size.

4: Format

It is also important to understand the exact format of the output. Neither %ul nor %lu produces hexadecimal output, and neither pads the number to a fixed number of digits. %lu prints the value in decimal using as many digits as it needs. %ul prints an unsigned int in decimal followed by the letter l. To print an unsigned long in hexadecimal, use %lx (or %lX for uppercase digits), and add a width such as %08lx if you want at least eight zero-padded digits.

Final Thoughts

It is important to understand the difference between %ul and %lu when working with the C language. Although they look similar, only %lu is a valid format specifier for unsigned long: it expects an unsigned long argument and prints it in decimal. %ul is really %u, which expects an unsigned int, followed by a literal l, so it appends a stray l to the output and can print the wrong value when given an unsigned long. Finally, always match the specifier to the argument’s type: %u for unsigned int, %lu for unsigned long, and %llu for unsigned long long.