Before that, we have to create a PySpark DataFrame for demonstration.

Example:

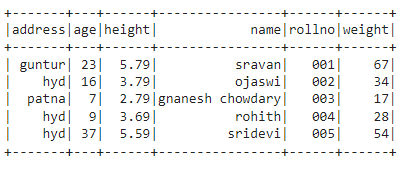

We will create a dataframe with 5 rows and 6 columns and display it using the show() method.

```python
import pyspark

# import SparkSession for creating a session
from pyspark.sql import SparkSession

# create an app named linuxhint
spark_app = SparkSession.builder.appName('linuxhint').getOrCreate()

# create student data with 5 rows and 6 attributes
students = [
    {'rollno': '001', 'name': 'sravan', 'age': 23,
     'height': 5.79, 'weight': 67, 'address': 'guntur'},
    {'rollno': '002', 'name': 'ojaswi', 'age': 16,
     'height': 3.79, 'weight': 34, 'address': 'hyd'},
    {'rollno': '003', 'name': 'gnanesh chowdary', 'age': 7,
     'height': 2.79, 'weight': 17, 'address': 'patna'},
    {'rollno': '004', 'name': 'rohith', 'age': 9,
     'height': 3.69, 'weight': 28, 'address': 'hyd'},
    {'rollno': '005', 'name': 'sridevi', 'age': 37,
     'height': 5.59, 'weight': 54, 'address': 'hyd'},
]

# create the dataframe
df = spark_app.createDataFrame(students)

# display the dataframe
df.show()
```

Output:

Method 1: Using select() method

We can count the values in a column by passing the count() function to the select() method. count() returns the number of non-null values in the column, which equals the total number of rows when the column contains no nulls. To use it, we have to import it from the pyspark.sql.functions module. Finally, we can call the collect() method to return the result as a list of Row objects.

Syntax:

df.select(count('column_name'))

Where,

- df is the input PySpark DataFrame

- column_name is the column whose values are counted

If we want to return the count from multiple columns, we have to call the count() function once per column inside the select() method, separating the calls with commas.

Syntax:

df.select(count('column_name'), count('column_name'), ...)

Where,

- df is the input PySpark DataFrame

- column_name is a column whose values are counted

Example 1: Single Column

This example will get the count from the height column in the PySpark dataframe.

```python
import pyspark

# import SparkSession for creating a session
from pyspark.sql import SparkSession

# import the count function
from pyspark.sql.functions import count

# create an app named linuxhint
spark_app = SparkSession.builder.appName('linuxhint').getOrCreate()

# create student data with 5 rows and 6 attributes
students = [
    {'rollno': '001', 'name': 'sravan', 'age': 23,
     'height': 5.79, 'weight': 67, 'address': 'guntur'},
    {'rollno': '002', 'name': 'ojaswi', 'age': 16,
     'height': 3.79, 'weight': 34, 'address': 'hyd'},
    {'rollno': '003', 'name': 'gnanesh chowdary', 'age': 7,
     'height': 2.79, 'weight': 17, 'address': 'patna'},
    {'rollno': '004', 'name': 'rohith', 'age': 9,
     'height': 3.69, 'weight': 28, 'address': 'hyd'},
    {'rollno': '005', 'name': 'sridevi', 'age': 37,
     'height': 5.59, 'weight': 54, 'address': 'hyd'},
]

# create the dataframe
df = spark_app.createDataFrame(students)

# return the number of values in the height column using count
print(df.select(count('height')).collect())
```

Output:

[Row(count(height)=5)]

In the above example, the count from the height column is returned. It is 5 because none of the five rows has a null height.

Example 2: Multiple Columns

This example will get the count from the height, age, and weight columns in the PySpark dataframe.

```python
import pyspark

# import SparkSession for creating a session
from pyspark.sql import SparkSession

# import the count function
from pyspark.sql.functions import count

# create an app named linuxhint
spark_app = SparkSession.builder.appName('linuxhint').getOrCreate()

# create student data with 5 rows and 6 attributes
students = [
    {'rollno': '001', 'name': 'sravan', 'age': 23,
     'height': 5.79, 'weight': 67, 'address': 'guntur'},
    {'rollno': '002', 'name': 'ojaswi', 'age': 16,
     'height': 3.79, 'weight': 34, 'address': 'hyd'},
    {'rollno': '003', 'name': 'gnanesh chowdary', 'age': 7,
     'height': 2.79, 'weight': 17, 'address': 'patna'},
    {'rollno': '004', 'name': 'rohith', 'age': 9,
     'height': 3.69, 'weight': 28, 'address': 'hyd'},
    {'rollno': '005', 'name': 'sridevi', 'age': 37,
     'height': 5.59, 'weight': 54, 'address': 'hyd'},
]

# create the dataframe
df = spark_app.createDataFrame(students)

# return the counts from the height, age and weight columns
print(df.select(count('height'), count('age'), count('weight')).collect())
```

Output:

[Row(count(height)=5, count(age)=5, count(weight)=5)]

In the above example, the counts from the height, age, and weight columns are returned in a single Row.

Method 2: Using agg() method

We can also get the count from a column using the agg() method, which performs aggregation over the entire DataFrame without grouping. It accepts a dictionary as a parameter, in which each key is a column name and each value is the name of an aggregate function, here 'count'. The count aggregate returns the number of non-null values in the column, and we can call the collect() method to return the result.

Syntax:

df.agg({'column_name': 'count'})

Where,

- df is the input PySpark DataFrame

- column_name is the column whose values are counted

- count is the aggregate function that returns the number of values in the column

If we want to return the count from multiple columns, we must add one 'column_name': 'count' pair per column to the dictionary, separating the pairs with commas.

Syntax:

df.agg({'column_name': 'count', 'column_name': 'count', ...})

Where,

- df is the input PySpark DataFrame

- column_name is a column whose values are counted

- count is the aggregate function that returns the number of values in each column

Example 1: Single Column

This example will get the count from the height column in the PySpark dataframe.

```python
import pyspark

# import SparkSession for creating a session
from pyspark.sql import SparkSession

# create an app named linuxhint
spark_app = SparkSession.builder.appName('linuxhint').getOrCreate()

# create student data with 5 rows and 6 attributes
students = [
    {'rollno': '001', 'name': 'sravan', 'age': 23,
     'height': 5.79, 'weight': 67, 'address': 'guntur'},
    {'rollno': '002', 'name': 'ojaswi', 'age': 16,
     'height': 3.79, 'weight': 34, 'address': 'hyd'},
    {'rollno': '003', 'name': 'gnanesh chowdary', 'age': 7,
     'height': 2.79, 'weight': 17, 'address': 'patna'},
    {'rollno': '004', 'name': 'rohith', 'age': 9,
     'height': 3.69, 'weight': 28, 'address': 'hyd'},
    {'rollno': '005', 'name': 'sridevi', 'age': 37,
     'height': 5.59, 'weight': 54, 'address': 'hyd'},
]

# create the dataframe
df = spark_app.createDataFrame(students)

# return the number of values in the height column
print(df.agg({'height': 'count'}).collect())
```

Output:

[Row(count(height)=5)]

In the above example, the count from the height column is returned.

Example 2: Multiple Columns

This example will get the count from the height, age, and weight columns in the PySpark dataframe.

```python
import pyspark

# import SparkSession for creating a session
from pyspark.sql import SparkSession

# create an app named linuxhint
spark_app = SparkSession.builder.appName('linuxhint').getOrCreate()

# create student data with 5 rows and 6 attributes
students = [
    {'rollno': '001', 'name': 'sravan', 'age': 23,
     'height': 5.79, 'weight': 67, 'address': 'guntur'},
    {'rollno': '002', 'name': 'ojaswi', 'age': 16,
     'height': 3.79, 'weight': 34, 'address': 'hyd'},
    {'rollno': '003', 'name': 'gnanesh chowdary', 'age': 7,
     'height': 2.79, 'weight': 17, 'address': 'patna'},
    {'rollno': '004', 'name': 'rohith', 'age': 9,
     'height': 3.69, 'weight': 28, 'address': 'hyd'},
    {'rollno': '005', 'name': 'sridevi', 'age': 37,
     'height': 5.59, 'weight': 54, 'address': 'hyd'},
]

# create the dataframe
df = spark_app.createDataFrame(students)

# return the number of values in the height, age and weight columns
print(df.agg({'height': 'count', 'age': 'count', 'weight': 'count'}).collect())
```

Output:

In the above example, the counts from the height, age, and weight columns are returned in a single Row. Each count is 5 because none of the three columns contains a null value.

Method 3: Using groupBy() method

We can get the count for each distinct value in a column using the groupBy() method. It groups the rows that share the same value in the given column, and calling count() on the grouped data returns the number of rows in each group.

Syntax:

df.groupBy('group_column').count()

Where,

- df is the input PySpark DataFrame

- group_column is the column whose values define the groups

- count is the aggregate function that returns the number of rows in each group

Example:

In this example, we are going to group the rows by the address column and get the count for each group.

```python
import pyspark

# import SparkSession for creating a session
from pyspark.sql import SparkSession

# create an app named linuxhint
spark_app = SparkSession.builder.appName('linuxhint').getOrCreate()

# create student data with 5 rows and 6 attributes
students = [
    {'rollno': '001', 'name': 'sravan', 'age': 23,
     'height': 5.79, 'weight': 67, 'address': 'guntur'},
    {'rollno': '002', 'name': 'ojaswi', 'age': 16,
     'height': 3.79, 'weight': 34, 'address': 'hyd'},
    {'rollno': '003', 'name': 'gnanesh chowdary', 'age': 7,
     'height': 2.79, 'weight': 17, 'address': 'patna'},
    {'rollno': '004', 'name': 'rohith', 'age': 9,
     'height': 3.69, 'weight': 28, 'address': 'hyd'},
    {'rollno': '005', 'name': 'sridevi', 'age': 37,
     'height': 5.59, 'weight': 54, 'address': 'hyd'},
]

# create the dataframe
df = spark_app.createDataFrame(students)

# return the count of rows for each value in the address column
print(df.groupBy('address').count().collect())
```

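Output:

[Row(address='hyd', count=3),

Row(address='guntur', count=1),

Row(address='patna', count=1)]

There are three unique values in the address column: hyd, guntur, and patna. The rows are grouped by these values, and the count of each group is returned: 3 for hyd and 1 each for guntur and patna. The order of the returned rows may vary.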

Conclusion:

We discussed how to get the count from a column of a PySpark DataFrame using the select() and agg() methods. To get the number of rows for each distinct value in a column, we used the groupBy() method along with the count() function.